Machine Learning and Deep Learning Fundamentals Notes (4): Implementing the Backpropagation Algorithm

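- This series collects the notes I took while working through a machine learning / deep learning course. They are rough and blunt, for reference only.

- The algorithm code below comes from https://github.com/mnielsen/neural-networks-and-deep-learning

- To stress it again: I did not write this code; it was downloaded from the link above!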

Formulas for updating the weights and biases:
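Written to match update_mini_batch below (with $m$ = len(mini_batch), $\eta$ = eta, and the sums running over the training examples $x$ in the mini-batch), the gradient-descent update rule is:

$$
w^l \rightarrow w^l - \frac{\eta}{m} \sum_x \frac{\partial C_x}{\partial w^l}, \qquad
b^l \rightarrow b^l - \frac{\eta}{m} \sum_x \frac{\partial C_x}{\partial b^l}
$$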

A look back at the code that updates the weights and biases:

```python
# Method of the Network class in network.py (the module does `import numpy as np`)
def update_mini_batch(self, mini_batch, eta):
    """Update the network's weights and biases by applying
    gradient descent using backpropagation to a single mini batch.
    The ``mini_batch`` is a list of tuples ``(x, y)``, and ``eta``
    is the learning rate."""
    nabla_b = [np.zeros(b.shape) for b in self.biases]
    nabla_w = [np.zeros(w.shape) for w in self.weights]
    for x, y in mini_batch:
        delta_nabla_b, delta_nabla_w = self.backprop(x, y)
        nabla_b = [nb+dnb for nb, dnb in zip(nabla_b, delta_nabla_b)]
        nabla_w = [nw+dnw for nw, dnw in zip(nabla_w, delta_nabla_w)]
    self.weights = [w-(eta/len(mini_batch))*nw
                    for w, nw in zip(self.weights, nabla_w)]
    self.biases = [b-(eta/len(mini_batch))*nb
                   for b, nb in zip(self.biases, nabla_b)]
```

where backprop() is:

```python
def backprop(self, x, y):
    """Return a tuple ``(nabla_b, nabla_w)`` representing the
    gradient for the cost function C_x.  ``nabla_b`` and
    ``nabla_w`` are layer-by-layer lists of numpy arrays, similar
    to ``self.biases`` and ``self.weights``."""
    nabla_b = [np.zeros(b.shape) for b in self.biases]  # initialize bias gradients
    nabla_w = [np.zeros(w.shape) for w in self.weights]  # initialize weight gradients
    # feedforward
    activation = x
    activations = [x] # list to store all the activations, layer by layer
    zs = [] # list to store all the z vectors, layer by layer
    for b, w in zip(self.biases, self.weights):
        z = np.dot(w, activation)+b
        zs.append(z)
        activation = sigmoid(z)
        activations.append(activation)
    # backward pass
    delta = self.cost_derivative(activations[-1], y) * \
        sigmoid_prime(zs[-1])
    nabla_b[-1] = delta
    nabla_w[-1] = np.dot(delta, activations[-2].transpose())
    # Note that the variable l in the loop below is used a little
    # differently to the notation in Chapter 2 of the book.  Here,
    # l = 1 means the last layer of neurons, l = 2 is the
    # second-last layer, and so on.  It's a renumbering of the
    # scheme in the book, used here to take advantage of the fact
    # that Python can use negative indices in lists.
    for l in range(2, self.num_layers):  # xrange in the original Python 2 code
        z = zs[-l]
        sp = sigmoid_prime(z)
        delta = np.dot(self.weights[-l+1].transpose(), delta) * sp
        nabla_b[-l] = delta
        nabla_w[-l] = np.dot(delta, activations[-l-1].transpose())
    return (nabla_b, nabla_w)
```

Explanation:

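The return value is a pair of layer-by-layer lists of numpy arrays.

nabla_b: initialize the bias gradients as zero arrays shaped like self.biases

nabla_w: initialize the weight gradients as zero arrays shaped like self.weights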

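1. Input x: set the activation a of the input layer

activation = x: assign the incoming 784-dimensional input vector to activation

activations = [x]: a list that stores the activations of every layer, one column vector per layer; it is not empty but starts out holding the input x

zs = []: an empty list that will store the z vectors of all the layers, one layer at a time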
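A minimal sketch of the initialization and the step-1 bookkeeping, assuming a hypothetical [784, 30, 10] network. The repository's Network class initializes with np.random.randn; this standalone version uses NumPy's default_rng with a fixed seed instead, so the names sizes, biases and weights here are illustrative only.

```python
import numpy as np

# Hypothetical layer sizes: 784 inputs, 30 hidden neurons, 10 outputs
sizes = [784, 30, 10]
rng = np.random.default_rng(0)
# One bias column vector per non-input layer, one weight matrix per pair of layers
biases = [rng.standard_normal((y, 1)) for y in sizes[1:]]
weights = [rng.standard_normal((y, x)) for x, y in zip(sizes[:-1], sizes[1:])]

# Gradient accumulators start as zeros with the same shapes as the parameters
nabla_b = [np.zeros(b.shape) for b in biases]
nabla_w = [np.zeros(w.shape) for w in weights]

x = rng.random((784, 1))   # one input, as a 784x1 column vector
activation = x
activations = [x]          # already holds the input
zs = []                    # z vectors get appended during the forward pass

print([b.shape for b in nabla_b])  # [(30, 1), (10, 1)]
print([w.shape for w in nabla_w])  # [(30, 784), (10, 30)]
```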

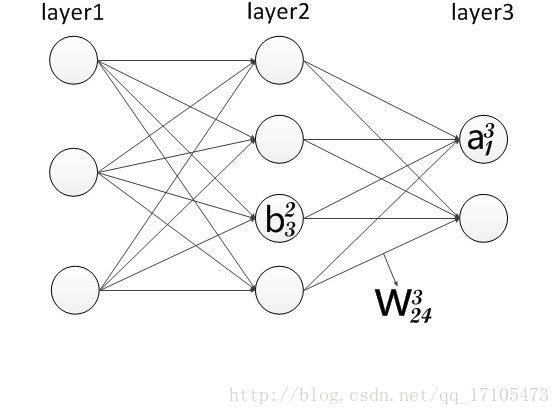

2. Feedforward: for each l = 2, 3, …, L compute

$$z^l = w^l a^{l-1} + b^l, \qquad a^l = \sigma(z^l)$$

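z = np.dot(w, activation)+b: the weighted input $z^l$ (an intermediate value)

zs.append(z): append z to zs

activation = sigmoid(z): compute the activation

activations.append(activation): append it to the list

This completes the forward pass.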
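The forward pass can be sketched on its own, assuming a hypothetical tiny [4, 3, 2] network with random parameters. This is not the repository's code, just the same loop lifted out of the class:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

sizes = [4, 3, 2]  # hypothetical tiny network
rng = np.random.default_rng(1)
biases = [rng.standard_normal((y, 1)) for y in sizes[1:]]
weights = [rng.standard_normal((y, x)) for x, y in zip(sizes[:-1], sizes[1:])]

x = rng.random((4, 1))
activation = x
activations = [x]
zs = []
for b, w in zip(biases, weights):
    z = np.dot(w, activation) + b   # z^l = w^l a^{l-1} + b^l
    zs.append(z)
    activation = sigmoid(z)         # a^l = sigma(z^l)
    activations.append(activation)

print(len(activations), len(zs))        # 3 2
print([a.shape for a in activations])   # [(4, 1), (3, 1), (2, 1)]
```

activations ends up one entry longer than zs because it also holds the input layer, which is why the backward pass can index activations[-l-1] alongside zs[-l].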

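3. Compute the output-layer error

$$\delta^L = \nabla_a C \odot \sigma'(z^L)$$

activations[-1]: the last activation, i.e. the output-layer activation $a^L$

cost_derivative(): the derivative of the cost with respect to the output activations

self.cost_derivative(activations[-1], y): $\nabla_a C$

sigmoid_prime(zs[-1]): $\sigma'(z^L)$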
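As a small worked example of step 3, assume the quadratic cost $C = \frac{1}{2}\|a^L - y\|^2$, for which $\nabla_a C = a^L - y$ (this is what cost_derivative returns in the repository's network.py). The $z^L$ and $y$ values below are made up:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_prime(z):
    return sigmoid(z) * (1 - sigmoid(z))

def cost_derivative(output_activations, y):
    # Gradient of the quadratic cost 0.5*||a - y||^2 w.r.t. the output activations
    return output_activations - y

zL = np.array([[0.0], [2.0]])   # hypothetical output-layer z^L
aL = sigmoid(zL)                # output activations a^L
y = np.array([[1.0], [0.0]])    # desired output

# delta^L = grad_a C (elementwise *) sigma'(z^L)
delta = cost_derivative(aL, y) * sigmoid_prime(zL)
print(delta[0, 0])  # -0.125
```

For the first neuron, $a^L = 0.5$, so $\nabla_a C = -0.5$ and $\sigma'(0) = 0.25$, giving $-0.125$.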

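Note the difference between these two functions:

```python
def sigmoid(z):  # the sigmoid function
    return 1.0/(1.0+np.exp(-z))

def sigmoid_prime(z):  # derivative of the sigmoid function
    return sigmoid(z)*(1-sigmoid(z))
```

delta = self.cost_derivative(activations[-1], y) * sigmoid_prime(zs[-1]): the error of the output layer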
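One way to convince yourself that sigmoid_prime really is the derivative of sigmoid is to compare it with a central-difference estimate. A minimal sketch; the grid of z values and the step h are arbitrary choices:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_prime(z):
    # Analytic derivative: sigma'(z) = sigma(z) * (1 - sigma(z))
    return sigmoid(z) * (1 - sigmoid(z))

z = np.linspace(-5, 5, 11)
h = 1e-6
# Central difference as an independent numerical check
numeric = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)
print(np.max(np.abs(numeric - sigmoid_prime(z))) < 1e-8)  # True
print(sigmoid_prime(0.0))  # 0.25
```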

4. Backpropagate the error

$$\frac{\partial C}{\partial b^l_j} = \delta^l_j$$

$$\frac{\partial C}{\partial w^l_{jk}} = a^{l-1}_k \delta^l_j$$
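In matrix form, $\partial C/\partial w^l_{jk} = a^{l-1}_k \delta^l_j$ says the weight gradient is the outer product of $\delta^l$ with $a^{l-1}$, which is what np.dot(delta, activations[-2].transpose()) computes for column vectors. A sketch with made-up numbers:

```python
import numpy as np

delta = np.array([[0.1], [-0.2]])          # hypothetical delta^l, shape (2, 1)
a_prev = np.array([[1.0], [2.0], [3.0]])   # hypothetical a^{l-1}, shape (3, 1)

nabla_w = np.dot(delta, a_prev.transpose())  # entry (j, k) = delta_j * a_prev_k
nabla_b = delta                              # dC/db^l_j = delta^l_j

print(nabla_w.shape)  # (2, 3)
```

The (2, 1) times (1, 3) product gives a (2, 3) matrix, the same shape as $w^l$, so it can be subtracted from the weights directly.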

For the last layer, the gradients of b and w are:

```python
nabla_b[-1] = delta
nabla_w[-1] = np.dot(delta, activations[-2].transpose())
```

.transpose(): matrix transpose

self.num_layers: the number of layers

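$$\delta^l = \left((w^{l+1})^T \delta^{l+1}\right) \odot \sigma'(z^l)$$

np.dot(): matrix multiplication, which computes $(w^{l+1})^T \delta^{l+1}$; the elementwise (Hadamard) product $\odot$ is done by the * operator

self.weights[-l+1].transpose(): $(w^{l+1})^T$

delta: $\delta^{l+1}$

sp = sigmoid_prime(z): $\sigma'(z^l)$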
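The difference between np.dot and * in the delta update shows up on a tiny example. The matrix and vectors are hypothetical; the shapes follow the code's convention that $w^{l+1}$ has one row per neuron in layer l+1:

```python
import numpy as np

W_next = np.array([[1.0, 2.0],
                   [3.0, 4.0],
                   [5.0, 6.0]])               # hypothetical w^{l+1}: 3 neurons in layer l+1, 2 in layer l
delta_next = np.array([[1.0], [0.0], [-1.0]])  # delta^{l+1}, shape (3, 1)
sp = np.array([[0.5], [0.25]])                 # sigma'(z^l), shape (2, 1)

back = np.dot(W_next.transpose(), delta_next)  # matrix product, shape (2, 1)
delta = back * sp                              # Hadamard product, elementwise
print(back.ravel())   # [-4. -4.]
print(delta.ravel())  # [-2. -1.]
```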

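```python
for l in range(2, self.num_layers):  # loop starts at l = 2 (xrange in the original Python 2 code)
    z = zs[-l]  # z starts from the second-to-last layer
    sp = sigmoid_prime(z)  # derivative of the sigmoid
    delta = np.dot(self.weights[-l+1].transpose(), delta) * sp
    nabla_b[-l] = delta
    nabla_w[-l] = np.dot(delta, activations[-l-1].transpose())
return (nabla_b, nabla_w)
```

5. Output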

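$$\frac{\partial C}{\partial b^l_j} = \delta^l_j$$

$$\frac{\partial C}{\partial w^l_{jk}} = a^{l-1}_k \delta^l_j$$

```python
nabla_b[-l] = delta
nabla_w[-l] = np.dot(delta, activations[-l-1].transpose())
```

The loop fills in these gradients for every layer, from the back of the network to the front, and update_mini_batch then uses them to update the weights and biases.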
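Putting steps 1 to 5 together, here is a standalone sketch of the same algorithm outside the class. Assumptions: biases and weights are passed as arguments instead of read from self, the cost is quadratic so cost_derivative is inlined as a - y, and range replaces Python 2's xrange:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_prime(z):
    return sigmoid(z) * (1 - sigmoid(z))

def backprop(biases, weights, x, y):
    """Gradient of the quadratic cost for one example (x, y)."""
    nabla_b = [np.zeros(b.shape) for b in biases]
    nabla_w = [np.zeros(w.shape) for w in weights]
    # Steps 1-2: forward pass, storing every z and activation
    activation = x
    activations = [x]
    zs = []
    for b, w in zip(biases, weights):
        z = np.dot(w, activation) + b
        zs.append(z)
        activation = sigmoid(z)
        activations.append(activation)
    # Step 3: output error (quadratic cost, so grad_a C = a - y)
    delta = (activations[-1] - y) * sigmoid_prime(zs[-1])
    nabla_b[-1] = delta
    nabla_w[-1] = np.dot(delta, activations[-2].transpose())
    # Steps 4-5: propagate backwards; l = 2 is the second-to-last layer
    num_layers = len(biases) + 1
    for l in range(2, num_layers):
        sp = sigmoid_prime(zs[-l])
        delta = np.dot(weights[-l + 1].transpose(), delta) * sp
        nabla_b[-l] = delta
        nabla_w[-l] = np.dot(delta, activations[-l - 1].transpose())
    return nabla_b, nabla_w

# Hypothetical [3, 4, 2] network with random parameters
sizes = [3, 4, 2]
rng = np.random.default_rng(2)
biases = [rng.standard_normal((n, 1)) for n in sizes[1:]]
weights = [rng.standard_normal((n, m)) for m, n in zip(sizes[:-1], sizes[1:])]
x = rng.random((3, 1))
y = np.array([[1.0], [0.0]])
nb, nw = backprop(biases, weights, x, y)
print([w.shape for w in nw])  # [(4, 3), (2, 4)]
```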

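Why is the backpropagation algorithm fast?

Suppose we want to compute $\partial C/\partial w$ and $\partial C/\partial b$.

1. Working them out directly with the product rule from calculus is too complicated.

2. Treat the cost as a function of the weights alone, $C = C(w)$, and define the approximation

$$\frac{\partial C}{\partial w_j} \approx \frac{C(w + \epsilon e_j) - C(w)}{\epsilon}$$

where $\epsilon > 0$ is a small positive number and $e_j$ is the unit vector in the $j$-th direction.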
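The finite-difference definition above translates directly into code. A minimal sketch, assuming a hypothetical cost $C(w) = \sum_j w_j^2$ whose exact gradient $2w$ is known, with a counter showing that every weight costs one more evaluation of C:

```python
import numpy as np

def numerical_gradient(C, w, eps=1e-6):
    """Forward-difference estimate of every dC/dw_j:
    (C(w + eps*e_j) - C(w)) / eps, one extra cost evaluation per weight."""
    grad = np.zeros_like(w)
    base = C(w)
    for j in range(w.size):
        e_j = np.zeros_like(w)
        e_j.flat[j] = 1.0                  # unit vector e_j
        grad.flat[j] = (C(w + eps * e_j) - base) / eps
    return grad

calls = 0
def C(w):
    """Hypothetical cost sum(w^2) (exact gradient 2w); counts its evaluations."""
    global calls
    calls += 1
    return np.sum(w ** 2)

w = np.array([1.0, -2.0, 0.5])
grad = numerical_gradient(C, w)
print(np.allclose(grad, 2 * w, atol=1e-4))  # True
print(calls)  # 4: one for C(w), plus one per weight
```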

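This looks workable, but consider the actual computation: suppose our network has 1,000,000 weights. For every single weight we need a separate forward pass through the network to compute $C(w + \epsilon e_j)$.

With 1,000,000 weights, that means 1,000,000 passes through the network (plus one more for $C(w)$), and that is for just one training example (x, y)!

The advantage of backpropagation is that one forward pass followed by one backward pass computes all the partial derivatives $\partial C/\partial w_j$ (for every w) at once.

In other words, two passes are enough to obtain the gradient for every weight in the whole network, which is much faster.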
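The cost comparison can be made concrete with a gradient check. This sketch assumes a hypothetical single-layer sigmoid network with quadratic cost, so backpropagation reduces to step 3 plus the gradient formula: one forward pass yields every $\partial C/\partial w_{jk}$, while finite differences need one extra forward pass per weight. The two gradients should agree:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(3)
W = rng.standard_normal((2, 3))   # hypothetical single layer: 3 inputs -> 2 outputs
b = rng.standard_normal((2, 1))
x = rng.random((3, 1))
y = np.array([[1.0], [0.0]])

forward_passes = 0
def cost(W):
    """Quadratic cost 0.5*||sigmoid(Wx + b) - y||^2; counts forward passes."""
    global forward_passes
    forward_passes += 1
    a = sigmoid(np.dot(W, x) + b)
    return 0.5 * np.sum((a - y) ** 2)

# Backprop: one forward pass, then the output error gives every dC/dw_jk
z = np.dot(W, x) + b
a = sigmoid(z)
delta = (a - y) * a * (1 - a)       # sigma'(z) = a * (1 - a)
grad_bp = np.dot(delta, x.transpose())

# Finite differences: one extra forward pass per weight
eps = 1e-6
base = cost(W)
grad_fd = np.zeros_like(W)
for j in range(W.size):
    E = np.zeros_like(W)
    E.flat[j] = eps
    grad_fd.flat[j] = (cost(W + E) - base) / eps

print(np.allclose(grad_bp, grad_fd, atol=1e-5))  # True
print(forward_passes)  # 7 = 1 + 6 weights
```

With only 6 weights the difference is small, but the finite-difference count grows linearly with the number of weights while backpropagation stays at one forward and one backward pass.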

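- One last note: these are my own lecture notes, rough and blunt, for reference only. If you spot any mistakes, corrections are welcome. Thank you.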