An Analysis of the Android Storage System

Contents

Storage Framework Overview

$Part I: framework

I. Preliminary Setup

1. onStart()

2. onBootPhase(int phase)

II. Receiving Messages

III. Sending Messages

IV. StorageManager

$Part II: VOLD

I. NetlinkManager

II. VolumeManager

III. CommandListener

$Part III: USB


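Storage Framework Overview

The Android storage system covers a lot of ground. Besides the familiar USB removable devices (e.g. USB flash drives and portable hard disks), it is also responsible for mounting Android's internal partitions, so its work spans almost the entire boot process. Strictly speaking, the subsystem consists of two modules, framework and vold; a USB part is added here to tie it to the underlying hardware detection. Readers who want sequence diagrams can find them in the following blog post: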

http://blog.csdn.net/gulinxieying/article/details/78751200

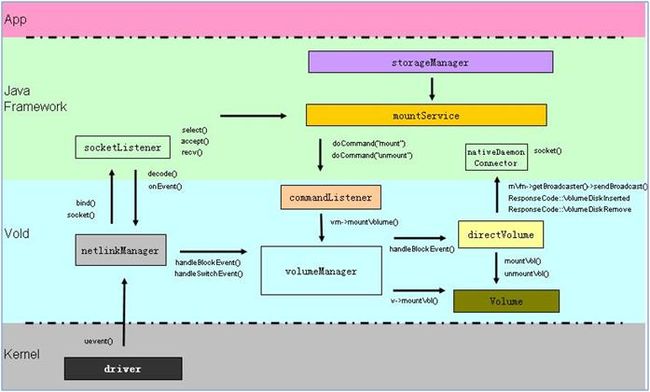

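The block diagram shown here is borrowed from the web and is fairly detailed:

- Linux Kernel: sends uevents to Vold's NetlinkManager;

- NetlinkManager: receives uevents from the kernel and forwards them to VolumeManager;

- VolumeManager: receives events from NetlinkManager and forwards them to CommandListener for handling;

- CommandListener: receives events from VolumeManager and sends them to MountService over a socket;

- MountService: receives events from CommandListener;

- StorageManager: the client of MountService; it reaches MountService operations through binder.

The least familiar piece here is the uevent, so a brief introduction follows.

uevent: it is tied to Linux's udev device file system and the device model. A uevent is really just a string: an event notification that the kernel sends to user space (for example when a USB device is plugged in or removed), carrying fields that describe the action and the device involved.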
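To make "a uevent is just a string" concrete, here is a minimal sketch of splitting one raw uevent into its fields. It assumes the usual kernel layout of an `action@devpath` header followed by NUL-separated `KEY=VALUE` pairs. The class `UeventSketch` and the device path are made up for illustration; vold does this work in C++ (NetlinkEvent), not with this code.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper (not part of vold): splits one raw uevent into fields. */
final class UeventSketch {
    /** Parses "action@devpath\0KEY=VALUE\0..." into an ordered key/value map. */
    static Map<String, String> parse(String raw) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String part : raw.split("\0")) {
            int eq = part.indexOf('=');
            if (eq > 0) { // the leading "action@devpath" header has no '=' and is skipped
                fields.put(part.substring(0, eq), part.substring(eq + 1));
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        // Illustrative message; the device path is made up.
        String raw = "add@/devices/example/block/sda\0"
                + "ACTION=add\0" + "SUBSYSTEM=block\0" + "DEVTYPE=disk\0";
        System.out.println(parse(raw));
    }
}
```

The ACTION and SUBSYSTEM fields are the ones that matter later in this article: vold only reacts to events whose subsystem is block, and it branches on the action.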

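$Part I: framework

The framework wraps many interfaces for callers. The storage-related ones are mainly the MountService service and StorageManager. A client obtains a StorageManager, which talks to MountService through binder, and MountService passes the operation down to vold.

I. Preliminary Setup

MountService is an internal service of the Android framework and is started by SystemServer. The code is as follows: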

private void startOtherServices() {

......

if (mFactoryTestMode != FactoryTest.FACTORY_TEST_LOW_LEVEL) {

if (!disableStorage &&

!"0".equals(SystemProperties.get("system_init.startmountservice"))) {

try {

/*

* NotificationManagerService is dependant on MountService,

* (for media / usb notifications) so we must start MountService first.

*/

mSystemServiceManager.startService(MOUNT_SERVICE_CLASS);

mountService = IMountService.Stub.asInterface(

ServiceManager.getService("mount"));

} catch (Throwable e) {

reportWtf("starting Mount Service", e);

}

}

}

......

}

As the code shows, MountService is started through the MOUNT_SERVICE_CLASS constant, which is defined as:

private static final String MOUNT_SERVICE_CLASS =

"com.android.server.MountService$Lifecycle";

The part to focus on is the Lifecycle inner class of MountService:

public static class Lifecycle extends SystemService {

private MountService mMountService;

public Lifecycle(Context context) {

super(context);

}

@Override

public void onStart() {

mMountService = new MountService(getContext());

publishBinderService("mount", mMountService);

}

@Override

public void onBootPhase(int phase) {

if (phase == SystemService.PHASE_ACTIVITY_MANAGER_READY) {

mMountService.systemReady();

}

}

......

}

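- onStart(): creates MountService and publishes it as the "mount" binder service.

- onBootPhase(int phase): once SystemServer reaches the PHASE_ACTIVITY_MANAGER_READY phase, calls systemReady(), which sends the H_SYSTEM_READY message.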

1. onStart()

MountService is created and initialized in this function, so it deserves a close look. The main parts of the MountService constructor are excerpted below:

public MountService(Context context) {

......

mCallbacks = new Callbacks(FgThread.get().getLooper());

// XXX: This will go away soon in favor of IMountServiceObserver

mPms = (PackageManagerService) ServiceManager.getService("package");

HandlerThread hthread = new HandlerThread(TAG);

hthread.start();

mHandler = new MountServiceHandler(hthread.getLooper());

// Add OBB Action Handler to MountService thread.

mObbActionHandler = new ObbActionHandler(IoThread.get().getLooper());

// Initialize the last-fstrim tracking if necessary

File dataDir = Environment.getDataDirectory();

File systemDir = new File(dataDir, "system");

mLastMaintenanceFile = new File(systemDir, LAST_FSTRIM_FILE);

......

mSettingsFile = new AtomicFile(

new File(Environment.getSystemSecureDirectory(), "storage.xml"));

.......

LocalServices.addService(MountServiceInternal.class, mMountServiceInternal);

/*

* Create the connection to vold with a maximum queue of twice the

* amount of containers we'd ever expect to have. This keeps an

* "asec list" from blocking a thread repeatedly.

*/

mConnector = new NativeDaemonConnector(this, "vold", MAX_CONTAINERS * 2, VOLD_TAG, 25,

null);

mConnector.setDebug(true);

Thread thread = new Thread(mConnector, VOLD_TAG);

thread.start();

// Reuse parameters from first connector since they are tested and safe

mCryptConnector = new NativeDaemonConnector(this, "cryptd",

MAX_CONTAINERS * 2, CRYPTD_TAG, 25, null);

mCryptConnector.setDebug(true);

Thread crypt_thread = new Thread(mCryptConnector, CRYPTD_TAG);

crypt_thread.start();

final IntentFilter userFilter = new IntentFilter();

userFilter.addAction(Intent.ACTION_USER_ADDED);

userFilter.addAction(Intent.ACTION_USER_REMOVED);

mContext.registerReceiver(mUserReceiver, userFilter, null, mHandler);

addInternalVolume();

// Add ourself to the Watchdog monitors if enabled.

if (WATCHDOG_ENABLE) {

Watchdog.getInstance().addMonitor(this);

}

}

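Its main steps, in order, are:

- Create the Callbacks object. FgThread's thread is named "android.fg", and the Looper used here is that thread's Looper;

- Create and start a HandlerThread named "MountService";

- Create the handler for OBB operations. IoThread's thread is named "android.io", and the Looper used here is that thread's Looper;

- Create the NativeDaemonConnector objects;

- Create and start a thread named "VoldConnector";

- Create and start a thread named "CryptdConnector";

- Register a receiver for the user-added and user-removed broadcasts.

As shown above, this process creates three threads:

- The MountService handler thread: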

HandlerThread hthread = new HandlerThread(TAG);

hthread.start();

mHandler = new MountServiceHandler(hthread.getLooper());

// Add OBB Action Handler to MountService thread.

mObbActionHandler = new ObbActionHandler(IoThread.get().getLooper());

- The VoldConnector thread, used to communicate with Vold:

mConnector = new NativeDaemonConnector(this, "vold", MAX_CONTAINERS * 2, VOLD_TAG, 25,

null);

mConnector.setDebug(true);

Thread thread = new Thread(mConnector, VOLD_TAG);

thread.start();

- The CryptdConnector thread, used for encryption:

mCryptConnector = new NativeDaemonConnector(this, "cryptd",

MAX_CONTAINERS * 2, CRYPTD_TAG, 25, null);

mCryptConnector.setDebug(true);

Thread crypt_thread = new Thread(mCryptConnector, CRYPTD_TAG);

crypt_thread.start();

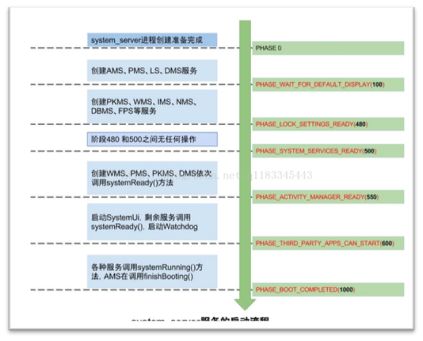

2. onBootPhase(int phase)

In this function, when SystemServer reaches the PHASE_ACTIVITY_MANAGER_READY phase, systemReady() sends the H_SYSTEM_READY message to mHandler, the MountServiceHandler instance:

private void systemReady() {

mSystemReady = true;

mHandler.obtainMessage(H_SYSTEM_READY).sendToTarget();

}

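For the full SystemServer startup flow see http://blog.csdn.net/q1183345443/article/details/53518579. The boot phases are summarized below:

- PHASE_WAIT_FOR_DEFAULT_DISPLAY=100: wait for a default display to become available;

- PHASE_LOCK_SETTINGS_READY=480: from this phase on, services can read lock settings data;

- PHASE_SYSTEM_SERVICES_READY=500: from this phase on, services can safely call core system services such as PMS;

- PHASE_ACTIVITY_MANAGER_READY=550: from this phase on, services can broadcast Intents;

- PHASE_THIRD_PARTY_APPS_CAN_START=600: from this phase on, services can start or bind to third-party apps, and apps can call services through Binder;

- PHASE_BOOT_COMPLETED=1000: reached when boot has completed and the home app has started. System services may prefer to listen for this phase rather than register for the ACTION_BOOT_COMPLETED broadcast, which reduces latency.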
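The phase mechanism can be sketched in a few lines. The following is a simplified stand-in, not the framework code: `BootPhaseSketch` and `StorageLifecycle` are hypothetical names, the phase values are the ones listed above, and the "system ready" action is reduced to a log entry.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of boot-phase callbacks; not the real SystemServiceManager. */
final class BootPhaseSketch {
    static final int PHASE_ACTIVITY_MANAGER_READY = 550;
    /** Phase values from the list above, in ascending order. */
    static final int[] PHASES = {100, 480, 500, 550, 600, 1000};

    /** Stand-in for a SystemService subclass such as MountService.Lifecycle. */
    static class StorageLifecycle {
        final List<String> log = new ArrayList<>();

        void onBootPhase(int phase) {
            // Only one phase is of interest; every other callback is ignored.
            if (phase == PHASE_ACTIVITY_MANAGER_READY) {
                log.add("systemReady@" + phase);
            }
        }
    }

    /** Reports each phase to the service in ascending order and returns its log. */
    static List<String> boot(StorageLifecycle service) {
        for (int phase : PHASES) {
            service.onBootPhase(phase);
        }
        return service.log;
    }
}
```

Every service receives every phase, so the check inside onBootPhase is what decides when a given service becomes active.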

The handling in the MountServiceHandler class is analyzed next:

class MountServiceHandler extends Handler {

......

@Override

public void handleMessage(Message msg) {

switch (msg.what) {

case H_SYSTEM_READY: {

handleSystemReady();

break;

}

.......

}

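With the preparation done, this is where MountService starts sending messages; see Section III for details.

At this point the preliminary setup of MountService is essentially complete. The service's main job is to interact with vold, which splits into sending and receiving messages. We go through each in turn.

II. Receiving Messages

Once the VoldConnector thread (a NativeDaemonConnector instance) has been started, it loops on the all-important socket: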

@Override

public void run() {

mCallbackHandler = new Handler(mLooper, this);

while (true) {

try {

listenToSocket();

} catch (Exception e) {

loge("Error in NativeDaemonConnector: " + e);

SystemClock.sleep(5000);

}

}

}

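listenToSocket() contains an infinite loop that keeps reading from inputStream. Whenever data arrives it is read into buffer, and each rawEvent is parsed into an event, which is then handled according to its class: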

private void listenToSocket() throws IOException {

LocalSocket socket = null;

try {

socket = new LocalSocket();

LocalSocketAddress address = determineSocketAddress();

socket.connect(address);

InputStream inputStream = socket.getInputStream();

synchronized (mDaemonLock) {

mOutputStream = socket.getOutputStream();

}

mCallbacks.onDaemonConnected();

byte[] buffer = new byte[BUFFER_SIZE];

int start = 0;

while (true) {

......

for (int i = 0; i < count; i++) {

......

try {

final NativeDaemonEvent event = NativeDaemonEvent.parseRawEvent(

rawEvent);

log("RCV <- {" + event + "}");

if (event.isClassUnsolicited()) {

// TODO: migrate to sending NativeDaemonEvent instances

if (mCallbacks.onCheckHoldWakeLock(event.getCode())

&& mWakeLock != null) {

mWakeLock.acquire();

releaseWl = true;

}

if (mCallbackHandler.sendMessage(mCallbackHandler.obtainMessage(

event.getCode(), event.getRawEvent()))) {

releaseWl = false;

}

} else {

mResponseQueue.add(event.getCmdNumber(), event);

}

} catch (IllegalArgumentException e) {

......

}

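Tracing the code above: to listen, it first creates and connects the socket, then starts parsing events after onDaemonConnected. If the parsed code falls in [600, 700), a message built from the parsed result is sent to mCallbackHandler; every other event is placed in mResponseQueue as the response to a pending command. For the first case, handleMessage calls back into onEvent to handle the event: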

@Override

public boolean handleMessage(Message msg) {

String event = (String) msg.obj;

try {

if (!mCallbacks.onEvent(msg.what, event, NativeDaemonEvent.unescapeArgs(event))) {

log(String.format("Unhandled event '%s'", event));

}

} catch (Exception e) {

loge("Error handling '" + event + "': " + e);

} finally {

if (mCallbacks.onCheckHoldWakeLock(msg.what) && mWakeLock != null) {

mWakeLock.release();

}

}

return true;

}
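The split-and-route step of listenToSocket() can be sketched as follows. This is a simplified model under stated assumptions: events are NUL-terminated strings whose first token is the numeric code, and the sample event strings in the usage note are invented. `EventSplitSketch` is a hypothetical class; the real connector posts to a Handler and to a response queue instead of two lists.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of splitting a socket buffer into events and routing them. */
final class EventSplitSketch {
    final List<String> unsolicited = new ArrayList<>();
    final List<String> responses = new ArrayList<>();

    /**
     * Consumes every complete NUL-terminated event in buffer[0, count).
     * Returns the number of trailing bytes that belong to an incomplete event.
     */
    int consume(byte[] buffer, int count) {
        int start = 0;
        for (int i = 0; i < count; i++) {
            if (buffer[i] != 0) {
                continue;
            }
            String raw = new String(buffer, start, i - start, StandardCharsets.UTF_8);
            int code = Integer.parseInt(raw.split(" ", 2)[0]);
            if (code >= 600 && code < 700) {
                unsolicited.add(raw); // would be posted to the callback handler
            } else {
                responses.add(raw);   // would be queued for the waiting command
            }
            start = i + 1;
        }
        return count - start;
    }
}
```

Feeding it the bytes of `"651 emulated 2\0"`, `"200 3 Command succeeded\0"` and the fragment `"640 di"` routes the first event to the unsolicited list, the second to the responses, and reports 6 leftover bytes to be completed by the next read.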

Here mCallbacks is an instance of the INativeDaemonConnectorCallbacks interface. Since MountService implements that interface, we return to MountService to look at the onEvent callback:

/**

* Callback from NativeDaemonConnector

*/

@Override

public boolean onEvent(int code, String raw, String[] cooked) {

synchronized (mLock) {

return onEventLocked(code, raw, cooked);

}

}

It simply calls onEventLocked, which is where messages are dispatched:

private boolean onEventLocked(int code, String raw, String[] cooked) {

switch (code) {

case VoldResponseCode.DISK_CREATED: {

if (cooked.length != 3) break;

final String id = cooked[1];

int flags = Integer.parseInt(cooked[2]);

if (SystemProperties.getBoolean(StorageManager.PROP_FORCE_ADOPTABLE, false)

|| mForceAdoptable) {

flags |= DiskInfo.FLAG_ADOPTABLE;

}

mDisks.put(id, new DiskInfo(id, flags));

break;

}

.....

return true;

}

Inside this function there are many cases for VoldResponseCode values. The constants are:

/*

* Internal vold response code constants

*/

class VoldResponseCode {

/*

* 100 series - Requestion action was initiated; expect another reply

* before proceeding with a new command.

*/

public static final int VolumeListResult = 110;

public static final int AsecListResult = 111;

public static final int StorageUsersListResult = 112;

public static final int CryptfsGetfieldResult = 113;

/*

* 200 series - Requestion action has been successfully completed.

*/

public static final int ShareStatusResult = 210;

public static final int AsecPathResult = 211;

public static final int ShareEnabledResult = 212;

/*

* 400 series - Command was accepted, but the requested action

* did not take place.

*/

public static final int OpFailedNoMedia = 401;

public static final int OpFailedMediaBlank = 402;

public static final int OpFailedMediaCorrupt = 403;

public static final int OpFailedVolNotMounted = 404;

public static final int OpFailedStorageBusy = 405;

public static final int OpFailedStorageNotFound = 406;

/*

* 600 series - Unsolicited broadcasts.

*/

public static final int DISK_CREATED = 640;

public static final int DISK_SIZE_CHANGED = 641;

public static final int DISK_LABEL_CHANGED = 642;

public static final int DISK_SCANNED = 643;

public static final int DISK_SYS_PATH_CHANGED = 644;

public static final int DISK_DESTROYED = 649;

public static final int VOLUME_CREATED = 650;

public static final int VOLUME_STATE_CHANGED = 651;

public static final int VOLUME_FS_TYPE_CHANGED = 652;

public static final int VOLUME_FS_UUID_CHANGED = 653;

public static final int VOLUME_FS_LABEL_CHANGED = 654;

public static final int VOLUME_PATH_CHANGED = 655;

public static final int VOLUME_INTERNAL_PATH_CHANGED = 656;

public static final int VOLUME_DESTROYED = 659;

public static final int MOVE_STATUS = 660;

public static final int BENCHMARK_RESULT = 661;

public static final int TRIM_RESULT = 662;

}

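As the comments above indicate, these messages fall into several classes:

| Response code | Event class | Method |
| --- | --- | --- |
| [100, 200) | Partial response; more events follow | isClassContinue |
| [200, 300) | Successful response | isClassOk |
| [400, 500) | Error on the remote (server) side | isClassServerError |
| [500, 600) | Error on the local (client) side | isClassClientError |
| [600, 700) | Events raised by the remote Vold process on its own | isClassUnsolicited |

Here we focus on the "unsolicited" events. They are triggered from below, mainly for disk and volume operations: device creation, state and path changes, and changes to file system type, UUID, and label.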
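The ranges in the table map directly onto a small classifier. The sketch below mirrors the table only; `ResponseClassSketch` and its enum are hypothetical names, and codes outside the listed ranges are reported as UNKNOWN as an assumption of this example.

```java
/** Hypothetical classifier for vold response codes, following the table above. */
final class ResponseClassSketch {
    enum ResponseClass { CONTINUE, OK, SERVER_ERROR, CLIENT_ERROR, UNSOLICITED, UNKNOWN }

    static ResponseClass classify(int code) {
        if (code >= 100 && code < 200) return ResponseClass.CONTINUE;
        if (code >= 200 && code < 300) return ResponseClass.OK;
        if (code >= 400 && code < 500) return ResponseClass.SERVER_ERROR;
        if (code >= 500 && code < 600) return ResponseClass.CLIENT_ERROR;
        if (code >= 600 && code < 700) return ResponseClass.UNSOLICITED;
        return ResponseClass.UNKNOWN; // not covered by the table
    }

    public static void main(String[] args) {
        // 640 is DISK_CREATED and 405 is OpFailedStorageBusy in the listing above.
        System.out.println(classify(640) + " " + classify(405));
    }
}
```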

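III. Sending Messages

As described earlier, when SystemServer reaches the PHASE_ACTIVITY_MANAGER_READY phase, systemReady() sends the H_SYSTEM_READY message. Following the code, we go straight to where H_SYSTEM_READY is handled: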

@Override

public void handleMessage(Message msg) {

switch (msg.what) {

case H_SYSTEM_READY: {

handleSystemReady();

break;

}

......

}

Following handleSystemReady, we eventually arrive at resetIfReadyAndConnectedLocked:

private void resetIfReadyAndConnectedLocked() {

Slog.d(TAG, "Thinking about reset, mSystemReady=" + mSystemReady

+ ", mDaemonConnected=" + mDaemonConnected);

if (mSystemReady && mDaemonConnected) {

killMediaProvider();

mDisks.clear();

mVolumes.clear();

addInternalVolume();

try {

mConnector.execute("volume", "reset");

// Tell vold about all existing and started users

final UserManager um = mContext.getSystemService(UserManager.class);

final List<UserInfo> users = um.getUsers();

for (UserInfo user : users) {

mConnector.execute("volume", "user_added", user.id, user.serialNumber);

}

for (int userId : mStartedUsers) {

mConnector.execute("volume", "user_started", userId);

}

} catch (NativeDaemonConnectorException e) {

Slog.w(TAG, "Failed to reset vold", e);

}

}

}

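When both mSystemReady and mDaemonConnected hold (the latter is set in response to the onDaemonConnected callback issued from listenToSocket in NativeDaemonConnector), it first kills the MediaProvider-related process and clears the disk and volume records. Then it adds the internal storage volume: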

private void addInternalVolume() {

// Create a stub volume that represents internal storage

final VolumeInfo internal = new VolumeInfo(VolumeInfo.ID_PRIVATE_INTERNAL,

VolumeInfo.TYPE_PRIVATE, null, null);

internal.state = VolumeInfo.STATE_MOUNTED;

internal.path = Environment.getDataDirectory().getAbsolutePath();

mVolumes.put(internal.id, internal);

}

This adds the internal volume's information to mVolumes. Next the volume reset command is executed:

mConnector.execute("volume", "reset");

Following this further, we eventually reach executeForList:

public NativeDaemonEvent[] executeForList(long timeoutMs, String cmd, Object... args)

throws NativeDaemonConnectorException {

......

log("SND -> {" + logCmd + "}");

synchronized (mDaemonLock) {

if (mOutputStream == null) {

throw new NativeDaemonConnectorException("missing output stream");

} else {

try {

mOutputStream.write(rawCmd.getBytes(StandardCharsets.UTF_8));

} catch (IOException e) {

throw new NativeDaemonConnectorException("problem sending command", e);

}

}

}

NativeDaemonEvent event = null;

do {

event = mResponseQueue.remove(sequenceNumber, timeoutMs, logCmd);

if (event == null) {

loge("timed-out waiting for response to " + logCmd);

throw new NativeDaemonTimeoutException(logCmd, event);

}

if (VDBG) log("RMV <- {" + event + "}");

events.add(event);

} while (event.isClassContinue());

final long endTime = SystemClock.elapsedRealtime();

if (endTime - startTime > WARN_EXECUTE_DELAY_MS) {

loge("NDC Command {" + logCmd + "} took too long (" + (endTime - startTime) + "ms)");

}

if (event.isClassClientError()) {

throw new NativeDaemonArgumentException(logCmd, event);

}

if (event.isClassServerError()) {

throw new NativeDaemonFailureException(logCmd, event);

}

return events.toArray(new NativeDaemonEvent[events.size()]);

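- First, mSequenceNumber is incremented to obtain the sequence number of the command to execute;

- The command (for example "3 volume reset") is then written to the socket's output stream;

- A loop around a blocking poll waits for the lower layer's response to the operation;

- The wait ends in one of two ways:

  - if no response from vold arrives within 1 minute, the wait is abandoned and a NativeDaemonTimeoutException is thrown;

  - if a response arrives whose code is outside [100, 200), the loop exits.

- If execution takes longer than 500 ms, an extra log line starting with "NDC Command" is written, hinting at a possible optimization target.

This function covers both sending the command and the first stage of handling the response. That completes command sending, and with it the analysis of MountService.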
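The send-then-wait pattern described above can be sketched with standard Java concurrency classes. This is a deliberately simplified model: `CommandRoundTripSketch` is a hypothetical class, it uses a single queue rather than matching responses by sequence number as the real ResponseQueue does, argument escaping is omitted, and the timeout is signalled with an IllegalStateException instead of the framework's own exception types.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical model of the command round trip; not NativeDaemonConnector itself. */
final class CommandRoundTripSketch {
    private final AtomicInteger sequence = new AtomicInteger(0);
    private final BlockingQueue<String> responses = new LinkedBlockingQueue<>();

    /** Builds the wire form "<seq> <cmd> <args...>" terminated by a NUL byte. */
    String makeCommand(String cmd, Object... args) {
        StringBuilder sb = new StringBuilder();
        sb.append(sequence.incrementAndGet()).append(' ').append(cmd);
        for (Object arg : args) {
            sb.append(' ').append(arg);
        }
        return sb.append('\0').toString();
    }

    /** Called by the (hypothetical) listener thread when a response arrives. */
    void deliver(String rawEvent) {
        responses.add(rawEvent);
    }

    /** Collects responses until one has a code outside [100, 200); fails on timeout. */
    List<String> await(long timeoutMs) {
        List<String> events = new ArrayList<>();
        int code;
        do {
            String event;
            try {
                event = responses.poll(timeoutMs, TimeUnit.MILLISECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
            if (event == null) {
                throw new IllegalStateException("timed out waiting for response");
            }
            events.add(event);
            code = Integer.parseInt(event.split(" ", 2)[0]);
        } while (code >= 100 && code < 200);
        return events;
    }
}
```

The first call to makeCommand("volume", "reset") yields "1 volume reset" plus the terminating NUL. A caller would write that string to the socket and then call await; any 1xx responses are accumulated and the first response outside that range ends the wait.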

IV. StorageManager

(omitted)

$Part II: VOLD

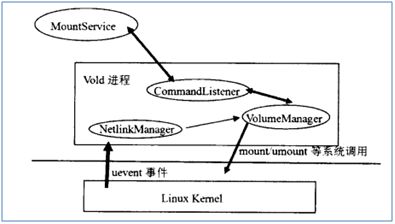

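vold, short for Volume Daemon, is a resident process started by init when it parses init.rc. As the diagram in the overview shows, Vold sits in the middle layer of the storage module and is its core. It has three main parts: NetlinkManager (NM), VolumeManager (VM), and CommandListener (CL). The overall architecture is shown in the figure:

The figure gives a rough picture of how Android manages external storage.

- When external storage is inserted, the Linux kernel sends a uevent to Vold;

- The Vold daemon receives the uevent from the Linux kernel over a socket and parses it into one of several states;

- MountService then obtains the state parsed by Vold and decides which broadcast to send and how to respond to Vold;

- Vold does some further processing based on MountService's response and hands the operation to the kernel.

One misconception needs clearing up. When an SD card is inserted, the kernel emits a uevent, Vold processes it, MountService obtains the information from Vold and sends a broadcast, and apps react to that broadcast. At that point, however, the SD card has not actually been mounted; only the uevent has been raised. What really happens, as the figure shows, is that the uevent is first parsed by Vold, MountService then obtains the information and sends mount, unmount, and similar commands to Vold, and only then does the kernel perform the mount, unmount, or format operation on the storage device.

Before the detailed analysis, let us look at the module's entry point, the main function.

The function is in main.cpp: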

int main(int argc, char** argv) {

setenv("ANDROID_LOG_TAGS", "*:v", 1);

......

VolumeManager *vm;

CommandListener *cl;

CryptCommandListener *ccl;

NetlinkManager *nm;

parse_args(argc, argv);

......

// Quickly throw a CLOEXEC on the socket we just inherited from init

fcntl(android_get_control_socket("vold"), F_SETFD, FD_CLOEXEC);

fcntl(android_get_control_socket("cryptd"), F_SETFD, FD_CLOEXEC);

mkdir("/dev/block/vold", 0755);

......

/* Create our singleton managers */

if (!(vm = VolumeManager::Instance())) {

LOG(ERROR) << "Unable to create VolumeManager";

exit(1);

}

if (!(nm = NetlinkManager::Instance())) {

LOG(ERROR) << "Unable to create NetlinkManager";

exit(1);

}

#ifdef SUPPORT_DIG

DigManager::StartDig();

#endif

if (property_get_bool("vold.debug", false)) {

vm->setDebug(true);

}

cl = new CommandListener();

ccl = new CryptCommandListener();

vm->setBroadcaster((SocketListener *) cl);

nm->setBroadcaster((SocketListener *) cl);

if (vm->start()) {

PLOG(ERROR) << "Unable to start VolumeManager";

exit(1);

}

if (process_config(vm)) {

PLOG(ERROR) << "Error reading configuration... continuing anyways";

}

if (nm->start()) {

PLOG(ERROR) << "Unable to start NetlinkManager";

exit(1);

}

set_media_poll_time();

coldboot("/sys/block");

// coldboot("/sys/class/switch");

/*

* Now that we're up, we can respond to commands

*/

if (cl->startListener()) {

PLOG(ERROR) << "Unable to start CommandListener";

exit(1);

}

if (ccl->startListener()) {

PLOG(ERROR) << "Unable to start CryptCommandListener";

exit(1);

}

// Eventually we'll become the monitoring thread

while(1) {

sleep(1000);

}

LOG(ERROR) << "Vold exiting";

exit(0);

}

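The function is fairly simple: it creates the VM and NM instances, sets the broadcaster on each, and starts the listeners. Each part is introduced below.

I. NetlinkManager

NM is responsible for receiving uevent messages from the kernel, converting each into a NetlinkEvent object, and finally calling VM's handler to process it.

We start from nm->start():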

int NetlinkManager::start() {

struct sockaddr_nl nladdr;

int sz = 64 * 1024;

int on = 1;

memset(&nladdr, 0, sizeof(nladdr));

nladdr.nl_family = AF_NETLINK;

nladdr.nl_pid = getpid();

nladdr.nl_groups = 0xffffffff;

if ((mSock = socket(PF_NETLINK, SOCK_DGRAM | SOCK_CLOEXEC,

NETLINK_KOBJECT_UEVENT)) < 0) {

SLOGE("Unable to create uevent socket: %s", strerror(errno));

return -1;

}

if (setsockopt(mSock, SOL_SOCKET, SO_RCVBUFFORCE, &sz, sizeof(sz)) < 0) {

SLOGE("Unable to set uevent socket SO_RCVBUFFORCE option: %s", strerror(errno));

goto out;

}

if (setsockopt(mSock, SOL_SOCKET, SO_PASSCRED, &on, sizeof(on)) < 0) {

SLOGE("Unable to set uevent socket SO_PASSCRED option: %s", strerror(errno));

goto out;

}

if (bind(mSock, (struct sockaddr *) &nladdr, sizeof(nladdr)) < 0) {

SLOGE("Unable to bind uevent socket: %s", strerror(errno));

goto out;

}

mHandler = new NetlinkHandler(mSock);

if (mHandler->start()) {

SLOGE("Unable to start NetlinkHandler: %s", strerror(errno));

goto out;

}

return 0;

out:

close(mSock);

return -1;

}

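Judging from the code, NM's start function has two steps:

- Create a socket in the PF_NETLINK family and configure it, so that it can communicate with the kernel.

- Create a NetlinkHandler object and call its start; the rest of the work is handed to that handler.

So NetlinkHandler (NLH) is the main actor from here on. NetlinkHandler derives from NetlinkListener, so following NLH's construction brings us here: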

NetlinkListener::NetlinkListener(int socket) :

SocketListener(socket, false) {

mFormat = NETLINK_FORMAT_ASCII;

}

This calls the constructor of the base class SocketListener that takes a socket descriptor:

SocketListener::SocketListener(int socketFd, bool listen) {

init(NULL, socketFd, listen, false);

}

This in turn calls the initialization function init:

void SocketListener::init(const char *socketName, int socketFd, bool listen, bool useCmdNum) {

mListen = listen;

mSocketName = socketName;

mSock = socketFd;

mUseCmdNum = useCmdNum;

pthread_mutex_init(&mClientsLock, NULL);

mClients = new SocketClientCollection();

}

NLH is now created. Following its start function, we end up in startListener in SocketListener.cpp:

int SocketListener::startListener(int backlog) {

if (!mSocketName && mSock == -1) {

SLOGE("Failed to start unbound listener");

errno = EINVAL;

return -1;

} else if (mSocketName) {

if ((mSock = android_get_control_socket(mSocketName)) < 0) {

SLOGE("Obtaining file descriptor socket '%s' failed: %s",

mSocketName, strerror(errno));

return -1;

}

SLOGV("got mSock = %d for %s", mSock, mSocketName);

fcntl(mSock, F_SETFD, FD_CLOEXEC);

}

if (mListen && listen(mSock, backlog) < 0) {

SLOGE("Unable to listen on socket (%s)", strerror(errno));

return -1;

} else if (!mListen)

mClients->push_back(new SocketClient(mSock, false, mUseCmdNum));

if (pipe(mCtrlPipe)) {

SLOGE("pipe failed (%s)", strerror(errno));

return -1;

}

if (pthread_create(&mThread, NULL, SocketListener::threadStart, this)) {

SLOGE("pthread_create (%s)", strerror(errno));

return -1;

}

return 0;

}

Most of the code above sets up the socket; at the end it creates a thread. Its threadStart function is:

void *SocketListener::threadStart(void *obj) {

SocketListener *me = reinterpret_cast<SocketListener *>(obj);

me->runListener();

pthread_exit(NULL);

return NULL;

}

Continuing on, we reach the key part, me->runListener():

void SocketListener::runListener(){

SocketClientCollection pendingList;

while(1) {

SocketClientCollection::iterator it;

fd_set read_fds;

int rc = 0;

int max = -1;

FD_ZERO(&read_fds);

if (mListen) {

max = mSock;

FD_SET(mSock, &read_fds);

}

FD_SET(mCtrlPipe[0], &read_fds);

if (mCtrlPipe[0] > max)

max = mCtrlPipe[0];

pthread_mutex_lock(&mClientsLock);

for (it = mClients->begin(); it != mClients->end(); ++it) {

// NB: calling out to an other object with mClientsLock held (safe)

int fd = (*it)->getSocket();

FD_SET(fd, &read_fds);

if (fd > max) {

max = fd;

}

}

pthread_mutex_unlock(&mClientsLock);

SLOGV("mListen=%d, max=%d, mSocketName=%s", mListen, max, mSocketName);

if ((rc = select(max + 1, &read_fds, NULL, NULL, NULL)) < 0) {

if (errno == EINTR)

continue;

SLOGE("select failed (%s) mListen=%d, max=%d", strerror(errno), mListen, max);

sleep(1);

continue;

} else if (!rc)

continue;

if (FD_ISSET(mCtrlPipe[0], &read_fds)) {

char c = CtrlPipe_Shutdown;

TEMP_FAILURE_RETRY(read(mCtrlPipe[0], &c, 1));

if (c == CtrlPipe_Shutdown) {

break;

}

continue;

}

if (mListen && FD_ISSET(mSock, &read_fds)) {

struct sockaddr addr;

socklen_t alen;

int c;

do {

alen = sizeof(addr);

c = accept(mSock, &addr, &alen);

SLOGV("%s got %d from accept", mSocketName, c);

} while (c < 0 && errno == EINTR);

if (c < 0) {

SLOGE("accept failed (%s)", strerror(errno));

sleep(1);

continue;

}

fcntl(c, F_SETFD, FD_CLOEXEC);

pthread_mutex_lock(&mClientsLock);

mClients->push_back(new SocketClient(c, true, mUseCmdNum));

pthread_mutex_unlock(&mClientsLock);

}

/* Add all active clients to the pending list first */

pendingList.clear();

pthread_mutex_lock(&mClientsLock);

for (it = mClients->begin(); it != mClients->end(); ++it) {

SocketClient* c = *it;

// NB: calling out to an other object with mClientsLock held (safe)

int fd = c->getSocket();

if (FD_ISSET(fd, &read_fds)) {

pendingList.push_back(c);

c->incRef();

}

}

pthread_mutex_unlock(&mClientsLock);

/* Process the pending list, since it is owned by the thread,

* there is no need to lock it */

while (!pendingList.empty()) {

/* Pop the first item from the list */

it = pendingList.begin();

SocketClient* c = *it;

pendingList.erase(it);

/* Process it, if false is returned, remove from list */

if (!onDataAvailable(c)) {

release(c, false);

}

c->decRef();

}

}

}

Here is another big while(1) loop. Within the loop, onDataAvailable(c) is what matters, because received data is handed to it first:

bool NetlinkListener::onDataAvailable(SocketClient *cli)

{

int socket = cli->getSocket();

ssize_t count;

uid_t uid = -1;

bool require_group = true;

if (mFormat == NETLINK_FORMAT_BINARY_UNICAST) {

require_group = false;

}

count = TEMP_FAILURE_RETRY(uevent_kernel_recv(socket,

mBuffer, sizeof(mBuffer), require_group, &uid));

if (count < 0) {

if (uid > 0)

LOG_EVENT_INT(65537, uid);

SLOGE("recvmsg failed (%s)", strerror(errno));

return false;

}

NetlinkEvent *evt = new NetlinkEvent();

if (evt->decode(mBuffer, count, mFormat)) {

onEvent(evt);

} else if (mFormat != NETLINK_FORMAT_BINARY) {

// Don't complain if parseBinaryNetlinkMessage returns false. That can

// just mean that the buffer contained no messages we're interested in.

SLOGE("Error decoding NetlinkEvent");

}

delete evt;

return true;

}

The decode function fills a NetlinkEvent object with the received uevent data, including the action and other fields. Now for onEvent:

void NetlinkHandler::onEvent(NetlinkEvent *evt) {

VolumeManager *vm = VolumeManager::Instance();

const char *subsys = evt->getSubsystem();

if (!subsys) {

SLOGW("No subsystem found in netlink event");

return;

}

if (!strcmp(subsys, "block")) {

vm->handleBlockEvent(evt);

}

}

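Since storage devices all belong to the block subsystem, this is where the information is handed to VM.

II. VolumeManager

As with NM, we first look at how it is set up. This also happens in main, and again we start from the start function.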

int VolumeManager::start() {

// Always start from a clean slate by unmounting everything in

// directories that we own, in case we crashed.

unmountAll();

// Assume that we always have an emulated volume on internal

// storage; the framework will decide if it should be mounted.

CHECK(mInternalEmulated == nullptr);

mInternalEmulated = std::shared_ptr<android::vold::VolumeBase>(

new android::vold::EmulatedVolume("/data/media"));

mInternalEmulated->create();

return 0;

}

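First it unmounts everything, then it creates the emulated volume backed by /data/media. That completes VM initialization.

Back in main, process_config then reads the fstab and registers the entries that vold manages: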

static int process_config(VolumeManager *vm) {

std::string path(android::vold::DefaultFstabPath());

fstab = fs_mgr_read_fstab(path.c_str());

if (!fstab) {

PLOG(ERROR) << "Failed to open default fstab " << path;

return -1;

}

/* Loop through entries looking for ones that vold manages */

bool has_adoptable = false;

for (int i = 0; i < fstab->num_entries; i++) {

if (fs_mgr_is_voldmanaged(&fstab->recs[i])) {

if (fs_mgr_is_nonremovable(&fstab->recs[i])) {

LOG(WARNING) << "nonremovable no longer supported; ignoring volume";

continue;

}

std::string sysPattern(fstab->recs[i].blk_device);

std::string nickname(fstab->recs[i].label);

int flags = 0;

if (fs_mgr_is_encryptable(&fstab->recs[i])) {

flags |= android::vold::Disk::Flags::kAdoptable;

has_adoptable = true;

}

if (fs_mgr_is_noemulatedsd(&fstab->recs[i])

|| property_get_bool("vold.debug.default_primary", false)) {

flags |= android::vold::Disk::Flags::kDefaultPrimary;

}

vm->addDiskSource(std::shared_ptr<VolumeManager::DiskSource>(

new VolumeManager::DiskSource(sysPattern, nickname, flags)));

}

}

property_set("vold.has_adoptable", has_adoptable ? "1" : "0");

return 0;

}

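As the code shows, the main job of this step is to parse the fstab partition table (the default fstab returned by DefaultFstabPath()). The table describes each partition's device, mount point, and other information, for example: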

# Android fstab file.

#

# The filesystem that contains the filesystem checker binary (typically /system) cannot

# specify MF_CHECK, and must come before any filesystems that do specify MF_CHECK

/dev/block/system /system ext4 ro wait

/dev/block/data /data ext4 noatime,nosuid,nodev,nodelalloc,nomblk_io_submit,errors=panic wait,check,encryptable=/dev/block/misc

/dev/block/cache /cache ext4 noatime,nosuid,nodev,nodelalloc,nomblk_io_submit,errors=panic wait,check

/devices/*.sd/mmc_host/sd* auto vfat defaults voldmanaged=sdcard1:auto,noemulatedsd

/devices/*dwc3/xhci-hcd.0.auto/usb?/*/host*/target*/block/sd* auto vfat defaults voldmanaged=udisk0:auto

/devices/*dwc3/xhci-hcd.0.auto/usb?/*/host*/target*/block/sd* auto vfat defaults voldmanaged=udisk1:auto

/devices/*dwc3/xhci-hcd.0.auto/usb?/*/host*/target*/block/sr* auto vfat defaults voldmanaged=sr0:auto

/dev/block/loop auto loop defaults voldmanaged=loop:auto

# Add for zram. zramsize can be in numeric (byte) , in percent or auto (detect by the system)

/dev/block/zram0 /swap_zram0 swap defaults wait,zramsize=auto

/dev/block/tee /tee ext4 noatime,nosuid,nodev,nodelalloc,nomblk_io_submit,errors=panic wait,check

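Partition tables differ between devices, so the details are not analyzed further. Next we continue with the interaction between VM and NM.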
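As a rough illustration of what process_config extracts from each fstab entry, the sketch below parses one line into the three properties the C++ code tests: voldmanaged, encryptable, and noemulatedsd. It assumes the five-column layout of the sample above (source, mount point, type, mount options, fs_mgr flags). `FstabLineSketch` is a hypothetical class and is not how fs_mgr is implemented.

```java
/** Hypothetical parser for one fstab line; mirrors the flags used by process_config. */
final class FstabLineSketch {
    final String source;
    final String label;          // null when the entry is not managed by vold
    final boolean encryptable;
    final boolean noEmulatedSd;

    private FstabLineSketch(String source, String label, boolean enc, boolean noEmu) {
        this.source = source;
        this.label = label;
        this.encryptable = enc;
        this.noEmulatedSd = noEmu;
    }

    boolean isVoldManaged() {
        return label != null;
    }

    static FstabLineSketch parse(String line) {
        String[] cols = line.trim().split("\\s+");
        if (cols.length < 5) {
            throw new IllegalArgumentException("expected 5 columns: " + line);
        }
        String label = null;
        boolean enc = false;
        boolean noEmu = false;
        String prefix = "voldmanaged=";
        for (String flag : cols[4].split(",")) {
            if (flag.startsWith(prefix)) {
                // "voldmanaged=<label>:<partition>", keep only the label
                label = flag.substring(prefix.length()).split(":")[0];
            } else if (flag.equals("encryptable") || flag.startsWith("encryptable=")) {
                enc = true;
            } else if (flag.equals("noemulatedsd")) {
                noEmu = true;
            }
        }
        return new FstabLineSketch(cols[0], label, enc, noEmu);
    }
}
```

Applied to the sdcard1 line of the sample, this yields the label sdcard1 with noEmulatedSd set; the /data line is not vold-managed, so process_config would skip it.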

As noted earlier, NM passes the NetlinkEvent to VM through NetlinkHandler. That work starts in VM's handleBlockEvent function:

void VolumeManager::handleBlockEvent(NetlinkEvent *evt) {

std::lock_guard<std::mutex> lock(mLock);

.....

switch (evt->getAction()) {

case NetlinkEvent::Action::kAdd: {

for (auto source : mDiskSources) {

if (source->matches(eventPath)) {

// For now, assume that MMC devices are SD, and that

// everything else is USB

int flags = source->getFlags();

if (major == kMajorBlockMmc) {

flags |= android::vold::Disk::Flags::kSd;

} else {

flags |= android::vold::Disk::Flags::kUsb;

}

auto disk = new android::vold::Disk(eventPath, device,

source->getNickname(), flags);

disk->create();

mDisks.push_back(std::shared_ptr<android::vold::Disk>(disk));

break;

}

}

break;

}

......

}

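As mentioned, NM passes each uevent to VM as a NetlinkEvent that carries the action and other information. VM handles the event according to that action; the code above is the add branch for block devices. This completes the analysis of VM.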
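The two decisions in the add branch, matching the event path against a disk source pattern and choosing the SD or USB flag from the major number, can be sketched as follows. Several things here are assumptions of the example rather than facts from the excerpt: the pattern is treated as a shell-style wildcard in which * and ? may also match '/', the flag bit values are invented, and 179 is used as the MMC block major. `DiskSourceSketch` is a hypothetical class.

```java
import java.util.regex.Pattern;

/** Hypothetical model of DiskSource matching and flag selection. */
final class DiskSourceSketch {
    static final int FLAG_SD = 1;            // illustrative bit values only
    static final int FLAG_USB = 2;
    static final int MAJOR_BLOCK_MMC = 179;  // assumed value of kMajorBlockMmc

    /** Converts a wildcard pattern ('*' = any run, '?' = any one char) to a regex. */
    static Pattern toRegex(String glob) {
        StringBuilder sb = new StringBuilder();
        for (char c : glob.toCharArray()) {
            if (c == '*') {
                sb.append(".*");
            } else if (c == '?') {
                sb.append('.');
            } else {
                sb.append(Pattern.quote(String.valueOf(c)));
            }
        }
        return Pattern.compile(sb.toString());
    }

    static boolean matches(String sysPattern, String eventPath) {
        return toRegex(sysPattern).matcher(eventPath).matches();
    }

    /** MMC devices are treated as SD cards, everything else as USB. */
    static int flagsFor(int sourceFlags, int major) {
        return sourceFlags | (major == MAJOR_BLOCK_MMC ? FLAG_SD : FLAG_USB);
    }
}
```

With the sample pattern /devices/*.sd/mmc_host/sd*, a made-up event path such as /devices/platform/d0074000.sd/mmc_host/sd/mmc0/block/mmcblk0 matches, and a major of 179 selects the SD flag.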

III. CommandListener

CommandListener is fairly simple. Its base class is FrameworkListener. Its construction looks like this:

CommandListener::CommandListener() :

FrameworkListener("vold", true) {

registerCmd(new DumpCmd());

registerCmd(new VolumeCmd());

registerCmd(new AsecCmd());

registerCmd(new ObbCmd());

registerCmd(new StorageCmd());

#ifdef HAS_VIRTUAL_CDROM

registerCmd(new LoopCmd());

#endif

registerCmd(new CryptfsCmd());

registerCmd(new FstrimCmd());

}

Starting from the FrameworkListener constructor FrameworkListener("vold", true), we end up in SocketListener's init function:

void SocketListener::init(const char *socketName, int socketFd, bool listen, bool useCmdNum) {

mListen = listen;

mSocketName = socketName;

mSock = socketFd;

mUseCmdNum = useCmdNum;

pthread_mutex_init(&mClientsLock, NULL);

mClients = new SocketClientCollection();

}

CL creates a listening socket.

A client sends a command to CL, which looks up the matching entry in mCommands and hands the command to that entry's runCommand function.
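The register-then-dispatch pattern can be sketched as a small command table. This is a simplified model, not FrameworkListener itself: `CommandDispatchSketch` and its `Command` interface are hypothetical, the leading sequence number of the real protocol is left out, and the reply strings are illustrative.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical model of command registration and lookup. */
final class CommandDispatchSketch {
    /** Stand-in for a command object with a runCommand-style entry point. */
    interface Command {
        String run(String[] argv);
    }

    private final Map<String, Command> commands = new HashMap<>();

    void registerCmd(String name, Command cmd) {
        commands.put(name, cmd);
    }

    /** Looks up argv[0] among the registered commands and runs the match. */
    String dispatch(String line) {
        String[] argv = line.trim().split("\\s+");
        Command cmd = commands.get(argv[0]);
        if (cmd == null) {
            return "500 Command not recognized"; // illustrative error reply
        }
        return cmd.run(argv);
    }
}
```

A registration such as `registerCmd("volume", argv -> "200 " + argv[1])` makes `dispatch("volume reset")` return "200 reset", while an unregistered name falls through to the error reply.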

$Part III: USB

(This part is long and is covered in a separate article.)