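Notes on a Flink Cluster Runtime Problem

I recently ran into a classloader problem in a Flink project, so here are my notes on it.

Problem

Here is the exception log from the failed run. One line stands out: You may be missing the 'flink-hadoop-compatibility' dependency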

org.apache.flink.client.program.ProgramInvocationException: The main method caused an error: Could not load the TypeInformation for the class 'org.apache.hadoop.io.Writable'. You may be missing the 'flink-hadoop-compatibility' dependency.
    at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:546)
    at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
    at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
    at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
    at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
    at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
    at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
    at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1924)
    at org.apache.flink.runtime.security.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)
    at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
Caused by: java.lang.RuntimeException: Could not load the TypeInformation for the class 'org.apache.hadoop.io.Writable'. You may be missing the 'flink-hadoop-compatibility' dependency.
    at org.apache.flink.api.java.typeutils.TypeExtractor.createHadoopWritableTypeInfo(TypeExtractor.java:2082)
    at org.apache.flink.api.java.typeutils.TypeExtractor.privateGetForClass(TypeExtractor.java:1701)
    at org.apache.flink.api.java.typeutils.TypeExtractor.privateGetForClass(TypeExtractor.java:1643)
    at org.apache.flink.api.java.typeutils.TypeExtractor.createTypeInfoWithTypeHierarchy(TypeExtractor.java:921)
    at org.apache.flink.api.java.typeutils.TypeExtractor.createSubTypesInfo(TypeExtractor.java:1142)
    at org.apache.flink.api.java.typeutils.TypeExtractor.createTypeInfoWithTypeHierarchy(TypeExtractor.java:853)
    at org.apache.flink.api.java.typeutils.TypeExtractor.privateCreateTypeInfo(TypeExtractor.java:803)
    at org.apache.flink.api.java.typeutils.TypeExtractor.getUnaryOperatorReturnType(TypeExtractor.java:587)
    at org.apache.flink.api.java.typeutils.TypeExtractor.getFlatMapReturnTypes(TypeExtractor.java:196)
    at org.apache.flink.streaming.api.datastream.DataStream.flatMap(DataStream.java:611)

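The message says the dependency is missing, so I immediately checked the project's dependencies. Had the pom dependency failed to make it into the package?

After checking several times, I confirmed that the project does declare this dependency and that it is bundled into the jar at build time. So why does the job still report a missing dependency when submitted to the cluster?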

<dependency>
  <groupId>org.apache.flink</groupId>
  <artifactId>flink-hadoop-compatibility_2.12</artifactId>
  <version>1.8.0</version>
</dependency>

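Source code analysis

Without further ado, let's read the source on GitHub. The exception is thrown in TypeExtractor, at line 2097 in the version on GitHub (the stack trace above, from the running version, points to line 2082). The relevant code is excerpted below: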

// visible for testing
public static <T> TypeInformation<T> createHadoopWritableTypeInfo(Class<T> clazz) {
    checkNotNull(clazz);

    Class<?> typeInfoClass;
    try {
        typeInfoClass = Class.forName(HADOOP_WRITABLE_TYPEINFO_CLASS, false, TypeExtractor.class.getClassLoader());
    }
    catch (ClassNotFoundException e) {
        throw new RuntimeException("Could not load the TypeInformation for the class '"
                + HADOOP_WRITABLE_CLASS + "'. You may be missing the 'flink-hadoop-compatibility' dependency.");
    }

    ...
}

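This pinpoints the failure: the Class.forName call could not load the class. The next step is to find out which classloaders actually exist in the running environment.

This is where Arthas, Alibaba's Java diagnostic tool, proves its worth.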

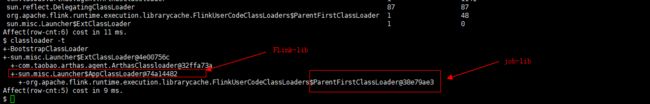

Inspecting the classloaders of the process directly gives the following:

$ classloader -t

+-BootstrapClassLoader

+-sun.misc.Launcher$ExtClassLoader@10724b7b

+-com.taobao.arthas.agent.ArthasClassloader@6c16887f

+-sun.misc.Launcher$AppClassLoader@74a14482

+-org.apache.flink.runtime.execution.librarycache.FlinkUserCodeClassLoaders$ParentFirstClassLoader@38e79ae3

+-com.alibaba.fastjson.util.ASMClassLoader@4d7aaca2

Note the custom classloader from FlinkUserCodeClassLoaders. Reading the Flink source shows that this classloader is set up in JobManagerSharedServices:

// ------------------------------------------------------------------------
//  Creating the components from a configuration
// ------------------------------------------------------------------------

public static JobManagerSharedServices fromConfiguration(
        Configuration config,
        BlobServer blobServer) throws Exception {

    checkNotNull(config);
    checkNotNull(blobServer);

    final String classLoaderResolveOrder =
        config.getString(CoreOptions.CLASSLOADER_RESOLVE_ORDER);

    final String[] alwaysParentFirstLoaderPatterns = CoreOptions.getParentFirstLoaderPatterns(config);

    final BlobLibraryCacheManager libraryCacheManager =
        new BlobLibraryCacheManager(
            blobServer,
            FlinkUserCodeClassLoaders.ResolveOrder.fromString(classLoaderResolveOrder),
            alwaysParentFirstLoaderPatterns);

    final FiniteDuration timeout;
    try {
        timeout = AkkaUtils.getTimeout(config);
    } catch (NumberFormatException e) {
        throw new IllegalConfigurationException(AkkaUtils.formatDurationParsingErrorMessage());
    }

    final ScheduledExecutorService futureExecutor = Executors.newScheduledThreadPool(
        Hardware.getNumberCPUCores(),
        new ExecutorThreadFactory("jobmanager-future"));

    final StackTraceSampleCoordinator stackTraceSampleCoordinator =
        new StackTraceSampleCoordinator(futureExecutor, timeout.toMillis());
    final int cleanUpInterval = config.getInteger(WebOptions.BACKPRESSURE_CLEANUP_INTERVAL);
    final BackPressureStatsTrackerImpl backPressureStatsTracker = new BackPressureStatsTrackerImpl(
        stackTraceSampleCoordinator,
        cleanUpInterval,
        config.getInteger(WebOptions.BACKPRESSURE_NUM_SAMPLES),
        config.getInteger(WebOptions.BACKPRESSURE_REFRESH_INTERVAL),
        Time.milliseconds(config.getInteger(WebOptions.BACKPRESSURE_DELAY)));

    futureExecutor.scheduleWithFixedDelay(
        backPressureStatsTracker::cleanUpOperatorStatsCache,
        cleanUpInterval,
        cleanUpInterval,
        TimeUnit.MILLISECONDS);

    return new JobManagerSharedServices(
        futureExecutor,
        libraryCacheManager,
        RestartStrategyFactory.createRestartStrategyFactory(config),
        stackTraceSampleCoordinator,
        backPressureStatsTracker,
        blobServer);
}

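Next, check whether the class to be loaded, HADOOP_WRITABLE_TYPEINFO_CLASS, can be found through AppClassLoader and through ParentFirstClassLoader.

- Without flink-hadoop-compatibility_2.12-1.8.0.jar in {flink_home}/lib (the AppClassLoader lookup returns nothing):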

# AppClassLoader

$ classloader -c 74a14482 -r org/apache/flink/api/java/typeutils/WritableTypeInfo.class

# ParentFirstClassLoader

$ classloader -c 38e79ae3 -r org/apache/flink/api/java/typeutils/WritableTypeInfo.class

jar:file:/data/myApp/flink-demo.jar!/org/apache/flink/api/java/typeutils/WritableTypeInfo.class

- With flink-hadoop-compatibility_2.12-1.8.0.jar added to {flink_home}/lib:

# AppClassLoader

$ classloader -c 74a14482 -r org/apache/flink/api/java/typeutils/WritableTypeInfo.class

jar:file:/opt/bigdata/inst/flink-1.8.0/lib/flink-hadoop-compatibility_2.12-1.8.0.jar!/org/apache/flink/api/java/typeutils/WritableTypeInfo.class

# ParentFirstClassLoader

$ classloader -c 38e79ae3 -r org/apache/flink/api/java/typeutils/WritableTypeInfo.class

jar:file:/opt/bigdata/inst/flink-1.8.0/lib/flink-hadoop-compatibility_2.12-1.8.0.jar!/org/apache/flink/api/java/typeutils/WritableTypeInfo.class

jar:file:/data/myApp/flink-demo.jar!/org/apache/flink/api/java/typeutils/WritableTypeInfo.class

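From this we can see:

- AppClassLoader only loads class resources found under {flink_home}/lib

- ParentFirstClassLoader also loads class resources from the jar packaged for the Flink job, in addition to whatever its parent can see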
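
The Arthas lookups above can be approximated in plain Java with ClassLoader.getResources, which lists every location where a loader (including its parents) can see a resource. The following is a minimal sketch, not part of Flink or Arthas: the class name ResourceLookup and the method find are made up for illustration, and the main method simply walks up from the thread context classloader.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URL;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper, for illustration only.
public class ResourceLookup {

    /** Lists every URL at which the loader, or one of its parents, can see the resource. */
    public static List<String> find(ClassLoader loader, String resourcePath) {
        try {
            List<String> locations = new ArrayList<>();
            for (URL url : Collections.list(loader.getResources(resourcePath))) {
                locations.add(url.toString());
            }
            return locations;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String path = "org/apache/flink/api/java/typeutils/WritableTypeInfo.class";
        // Walk from the context classloader up through its parents.
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        while (loader != null) {
            System.out.println(loader + " -> " + find(loader, path));
            loader = loader.getParent();
        }
    }
}
```

An empty list for a given loader means that loader cannot see the class at all, which is the situation AppClassLoader was in before the jar was copied into {flink_home}/lib.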

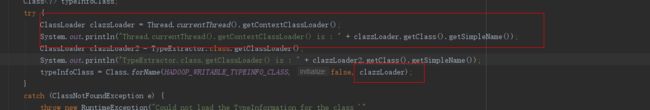

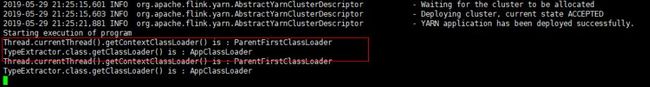

Working backwards, the question is which classloader TypeExtractor.class.getClassLoader() returns in the Flink source. To find out, we modified the source to print it directly.

Result

TypeExtractor.class.getClassLoader() is AppClassLoader
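
For readers who want to repeat this kind of check without patching Flink, here is a small sketch that prints the classloader of a class together with its parent chain. It is only an illustration under my own naming (LoaderChain and of are hypothetical), and it is not the code that was added to TypeExtractor.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, for illustration only.
public class LoaderChain {

    /** Returns the class names of the loader of the given type and of all its parents. */
    public static List<String> of(Class<?> type) {
        List<String> chain = new ArrayList<>();
        ClassLoader loader = type.getClassLoader();
        while (loader != null) {
            chain.add(loader.getClass().getName());
            loader = loader.getParent();
        }
        // A null loader stands for the bootstrap classloader.
        chain.add("bootstrap");
        return chain;
    }

    public static void main(String[] args) {
        System.out.println(LoaderChain.of(LoaderChain.class));
        System.out.println(LoaderChain.of(String.class));
    }
}
```

Calling LoaderChain.of(TypeExtractor.class) inside a job would show whether TypeExtractor sits above or below the user-code classloader in the hierarchy.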

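Conclusion

The parent-delegation model explains why startup still fails even though the jar is included in our project: AppClassLoader cannot find the class, and a parent loader never searches downward into the class resources of its child, ParentFirstClassLoader.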
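
The one-way nature of delegation can be reproduced without Flink. The sketch below (class and method names are hypothetical) asks two loaders for the same class: the loader that defined the demo class, and that loader's parent. It mirrors the shape of the failing call, Class.forName(name, false, loader), on a toy example.

```java
// Hypothetical demo, for illustration only.
public class DelegationDemo {

    /** Returns true if the given loader (null means bootstrap) can resolve the class name. */
    public static boolean canLoad(ClassLoader loader, String className) {
        try {
            // Same call shape as in TypeExtractor: resolve without initializing the class.
            Class.forName(className, false, loader);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        ClassLoader own = DelegationDemo.class.getClassLoader();
        ClassLoader parent = own.getParent();
        String name = DelegationDemo.class.getName();

        // The defining loader can see the class; its parent cannot look down into the child.
        System.out.println("own loader sees it:    " + canLoad(own, name));
        System.out.println("parent loader sees it: " + canLoad(parent, name));
    }
}
```

Run from the classpath, the first check should report true and the second false, which is the relationship between ParentFirstClassLoader and AppClassLoader in this incident.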

There are two ways to fix this:

- Put the dependency jar directly into {flink_home}/lib

- Modify the Flink source so that TypeExtractor uses Thread.currentThread().getContextClassLoader() as its classloader
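
The second option could look roughly like the sketch below. This is not an actual Flink patch: ContextAwareLoading and tryLoad are hypothetical names, and the approach assumes that the thread running the user's main method has the user-code classloader set as its context classloader. It tries the context classloader first and falls back to the loader of the calling class.

```java
import java.util.Optional;

// Hypothetical helper, for illustration only; not part of Flink.
public class ContextAwareLoading {

    /** Tries the thread context classloader first, then this class's own loader. */
    public static Optional<Class<?>> tryLoad(String className) {
        ClassLoader context = Thread.currentThread().getContextClassLoader();
        if (context != null) {
            try {
                return Optional.of(Class.forName(className, false, context));
            } catch (ClassNotFoundException ignored) {
                // Fall through to the defining loader below.
            }
        }
        try {
            return Optional.of(
                Class.forName(className, false, ContextAwareLoading.class.getClassLoader()));
        } catch (ClassNotFoundException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        System.out.println(tryLoad("java.util.ArrayList").isPresent());
        System.out.println(tryLoad("org.apache.flink.api.java.typeutils.WritableTypeInfo").isPresent());
    }
}
```

Keeping the fallback means that code paths without a context classloader behave as before, while jobs that bundle the dependency in their own jar get a chance to resolve it.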

links

Arthas user documentation