深度森林(gcforest)原理讲解以及代码实现

GcForest原理

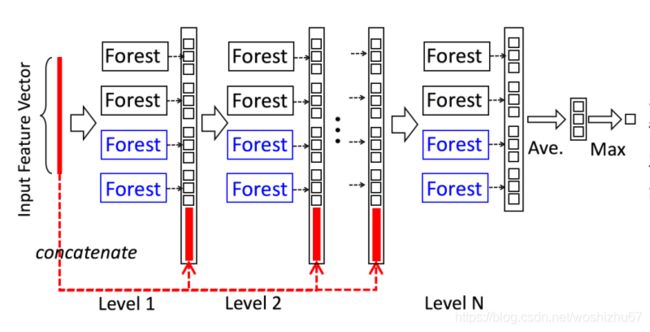

gcforest采用Cascade结构,也就是多层级结构,每层由四个随机森林组成,两个随机森林和两个极端森林,每个极端森林包含1000(超参数)个完全随机树,每个森林都会对的数据进行训练,每个森林都输出结果,我们把这个结果叫做森林生成的类向量,为了避免过拟合,我们喂给每个森林训练的数据都是通过k折交叉验证的,每一层最后生成四个类向量,下一层以上一层的四个类向量以及原有的数据为新的train data进行训练,如此叠加,最后一层将类向量进行平均,作为预测结果

个人认为这种结构非常类似于神经网络,神经网络的每个单位是神经元,而深度森林的单位元却是随机森林,单个随机森林在性能上强于单个神经元的,这就是使得深度森林很多时候尽管层级和基础森林树不多,也能取得好的结果的主要原因

GcForest代码实现原理图

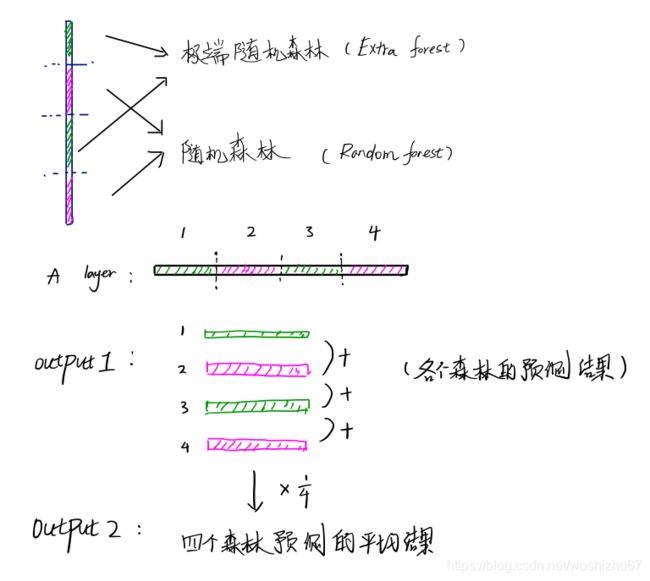

我们需要做出一个layer的结构,每个layer由二种四个森林组成

每个layer都输出两个结果:每个森林的预测结果、四个森林的预测的平均结果

为了防止过拟合我们的layer都由k折交叉验证产生

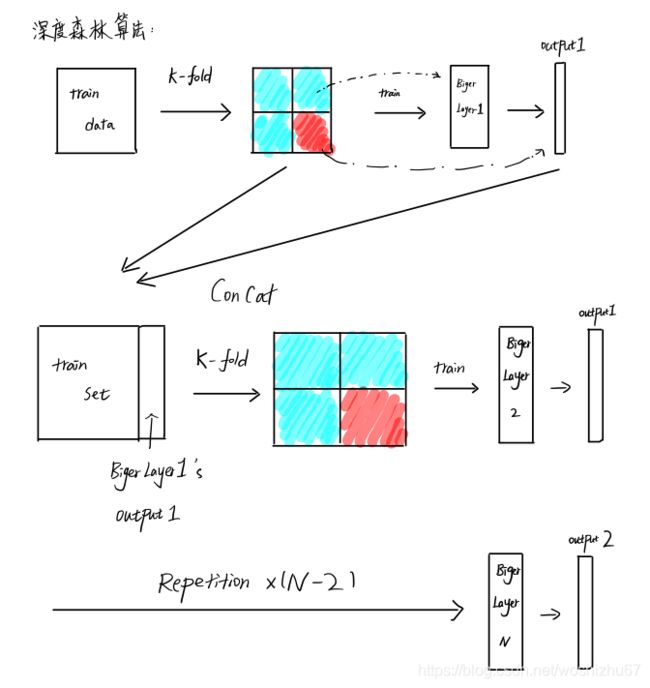

同时为了保留数据全部的特征我们将得到的小layer叠在一起定义一个Biger Layer

之后我们就可以构建深度森林了

GcForest代码

layer.py

extraTree(极端树)使用的所有的样本,只是特征是随机选取的,因为分裂是随机的,所以在某种程度上比随机森林得到的结果更加好

from sklearn.ensemble import ExtraTreesRegressor#引入极端森林回归

from sklearn.ensemble import RandomForestRegressor#引入随机森林回归

import numpy as np

class Layer:#定义层类

def __init__(self, n_estimators, num_forests, max_depth=30, min_samples_leaf=1):

self.num_forests = num_forests # 定义森林数

self.n_estimators = n_estimators # 每个森林的树个数

self.max_depth = max_depth#每一颗树的最大深度

self.min_samples_leaf = min_samples_leaf#树会生长到所有叶子都分到一个类,或者某节点所代表的样本数已小于min_samples_leaf

self.model = []#最后产生的类向量

def train(self, train_data, train_label, weight, val_data):#训练函数

val_prob = np.zeros([self.num_forests, val_data.shape[0]])#定义出该层的类向量,有self.num_forersts行,val_data.shape[0]列,这里我们认为val_data应该就是我们的weight

for forest_index in range(self.num_forests):#对具体的layer内的森林进行构建

if forest_index % 2 == 0:#如果是第偶数个设为随机森林

clf = RandomForestRegressor(n_estimators=self.n_estimators#子树的个数,

n_jobs=-1, #cpu并行树,-1表示和cpu的核数相同

max_depth=self.max_depth,#最大深度

min_samples_leaf=self.min_samples_leaf)

clf.fit(train_data, train_label, weight)#weight是取样比重Sample weights

val_prob[forest_index, :] = clf.predict(val_data)#记录类向量

else:#如果是第奇数个就设为极端森林

clf = ExtraTreesRegressor(n_estimators=self.n_estimators,#森林所含树的个数

n_jobs=-1, #并行数

max_depth=self.max_depth,#最大深度

min_samples_leaf=self.min_samples_leaf)#最小叶子限制

clf.fit(train_data, train_label, weight)

val_prob[forest_index, :] = clf.predict(val_data)#记录类向量

self.model.append(clf)#组建layer层

val_avg = np.sum(val_prob, axis=0)#按列进行求和

val_avg /= self.num_forests#求平均

val_concatenate = val_prob.transpose((1, 0))#对记录的类向量矩阵进行转置

return [val_avg, val_concatenate]#返回平均结果和转置后的类向量矩阵

def predict(self, test_data):#定义预测函数,也是最后一层的功能

predict_prob = np.zeros([self.num_forests, test_data.shape[0]])

for forest_index, clf in enumerate(self.model):

predict_prob[forest_index, :] = clf.predict(test_data)

predict_avg = np.sum(predict_prob, axis=0)

predict_avg /= self.num_forests

predict_concatenate = predict_prob.transpose((1, 0))

return [predict_avg, predict_concatenate]

class KfoldWarpper:#定义每个树进行训练的所用的数据

def __init__(self, num_forests, n_estimators, n_fold, kf, layer_index, max_depth=31, min_samples_leaf=1):#包括森林树,森林使用树的个数,k折的个数,k-折交叉验证,第几层,最大深度,最小叶子节点限制

self.num_forests = num_forests

self.n_estimators = n_estimators

self.n_fold = n_fold

self.kf = kf

self.layer_index = layer_index

self.max_depth = max_depth

self.min_samples_leaf = min_samples_leaf

self.model = []

def train(self, train_data, train_label, weight):

num_samples, num_features = train_data.shape

val_prob = np.empty([num_samples])

val_prob_concatenate = np.empty([num_samples, self.num_forests])#创建新的空矩阵,num_samples行,num_forest列,用于放置预测结果

for train_index, test_index in self.kf:#进行k折交叉验证,在train_data里创建交叉验证的补充

X_train = train_data[train_index, :]#选出训练集

X_val = train_data[test_index, :]#验证集

y_train = train_label[train_index]#训练标签

weight_train = weight[train_index]#训练集对应的权重

layer = Layer(self.n_estimators, self.num_forests, self.max_depth, self.min_samples_leaf)#加入层

val_prob[test_index], val_prob_concatenate[test_index, :] = \

layer.train(X_train, y_train, weight_train, X_val)#记录输出的结果

self.model.append(layer)#在模型中填充层级,这也是导致程序吃资源的部分,每次进行

return [val_prob, val_prob_concatenate]

def predict(self, test_data):#定义预测函数,用做下一层的训练数据

test_prob = np.zeros([test_data.shape[0]])

test_prob_concatenate = np.zeros([test_data.shape[0], self.num_forests])

for layer in self.model:

temp_prob, temp_prob_concatenate = \

layer.predict(test_data)

test_prob += temp_prob

test_prob_concatenate += temp_prob_concatenate

test_prob /= self.n_fold

test_prob_concatenate /= self.n_fold

return [test_prob, test_prob_concatenate]

gcforest.py

from sklearn.model_selection import KFold

from layer import *

import numpy as np

def compute_loss(target, predict):#对数误差函数

temp = np.log(abs(target + 1)) - np.log(abs(predict + 1))

res = np.dot(temp, temp) / len(temp)#向量点成后平均

return res

class gcForest:#定义gcforest模型

def __init__(self, num_estimator, num_forests, max_layer=2, max_depth=31, n_fold=5):

self.num_estimator = num_estimator

self.num_forests = num_forests

self.n_fold = n_fold

self.max_depth = max_depth

self.max_layer = max_layer

self.model = []

def train(self,train_data, train_label, weight):

num_samples, num_features = train_data.shape

# basis process

train_data_new = train_data.copy()

# return value

val_p = []

best_train_loss = 0.0

layer_index = 0

best_layer_index = 0

bad = 0

kf = KFold(2,True,self.n_fold).split(train_data_new.shape[0])

#这里加入k折交叉验证

while layer_index < self.max_layer:

print("layer " + str(layer_index))

layer = KfoldWarpper(self.num_forests, self.num_estimator, self.n_fold, kf, layer_index, self.max_depth, 1)#其实这一个layer是个夹心layer,是2层layer的平均结果

val_prob, val_stack= layer.train(train_data_new, train_label, weight)

#使用该层进行训练

train_data_new = np.concatenate([train_data, val_stack], axis=1)

#将该层的训练结果也加入到train_data中

temp_val_loss = compute_loss(train_label, val_prob)

print("val loss:" + str(temp_val_loss))

if best_train_loss < temp_val_loss:#用于控制加入的层数,如果加入的层数较多,且误差没有下降也停止运行

bad += 1

else:

bad = 0

best_train_loss = temp_val_loss

best_layer_index = layer_index

if bad >= 3:

break

layer_index = layer_index + 1

self.model.append(layer)

for index in range(len(self.model), best_layer_index + 1, -1):#删除多余的layer

self.model.pop()

def predict(self, test_data):

test_data_new = test_data.copy()

test_prob = []

for layer in self.model:

predict, test_stack = layer.predict(test_data_new)

test_data_new = np.concatenate([test_data, test_stack], axis=1)

return predict

test.py

import numpy as np

from gcForest import *

from time import time

def load_data():

train_data = np.load()

train_label = np.load()

train_weight = np.load()

test_data = np.load()

test_label = np.load()

test_file = np.load()

return [train_data, train_label, train_weight, test_data, test_label, test_file]

if __name__ == '__main__':

train_data, train_label, train_weight, test_data, test_label, test_file = load_data()

clf = gcForest(num_estimator = 100, num_forests = 4, max_layer=2, max_depth=100, n_fold=5)

start = time()

clf.train(train_data, train_label, train_weight)

end = time()

print("fitting time: " + str(end - start) + " sec")

start = time()

prediction = clf.predict(test_data)

end = time()

print("prediction time: " + str(end - start) + " sec")

result = {

}

for index, item in enumerate(test_file):

if item not in result:

result[item] = prediction[index]

else:

result[item] = (result[item] + prediction[index]) / 2

print(result)

注:

代码源自:科大讯飞开放平台

原理主要参考:周志华老师论文