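Lab 5: JPEG Decoding

Overview of JPEG compression

JPEG has two basic compression methods:

- Lossy compression: based on the DCT, with a relatively high compression ratio.

- Lossless compression: also called predictive coding, based on two-dimensional DPCM.

This report covers the DCT-based method. Its basic flow is shown in the block diagram below (the diagram is not fully comprehensive):

Let the input image be f(x,y). The encoding and decoding steps are outlined below.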

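1. Preprocessing

The input is usually converted to the YUV (YCbCr) color space first.

Each component (luminance, and chrominance after any subsampling) is divided into 8×8 blocks.

Level shift: 2^7 = 128 is subtracted from every 8-bit sample so that the values are centered on zero (-128 to 127). This reduces the magnitude of the DC coefficient and improves coding efficiency.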
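The block split and level shift above can be sketched in C as follows. This is a minimal illustration, not tinyjpeg code (tinyjpeg is a decoder); the function name `extract_block` and the edge-replication padding used for partial blocks at the right and bottom borders are assumptions.

```c
#include <assert.h>

/* Copies the 8x8 block whose top-left corner is (bx*8, by*8) out of an
   8-bit plane and applies the level shift (subtract 2^7 = 128).
   Pixels past the right/bottom edge repeat the last column/row; this
   padding choice is an assumption (the encoder is free to choose). */
void extract_block(const unsigned char *plane, int width, int height,
                   int bx, int by, int block[64])
{
    assert(width > 0 && height > 0);
    for (int y = 0; y < 8; y++) {
        int sy = by * 8 + y;
        if (sy >= height) sy = height - 1;      /* replicate last row */
        for (int x = 0; x < 8; x++) {
            int sx = bx * 8 + x;
            if (sx >= width) sx = width - 1;    /* replicate last column */
            block[y * 8 + x] = (int)plane[sy * width + sx] - 128;
        }
    }
}
```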

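2. DCT

The discrete cosine transform removes spatial redundancy by concentrating the block's energy into a few coefficients. The DCT itself is reversible apart from finite-precision rounding; the loss in JPEG comes mainly from the quantization step that follows. See the relevant references for details.

The transform yields an 8×8 coefficient block F(u,v). The DC coefficient in the top-left corner is usually large, and the coefficients generally become smaller toward the high frequencies in the bottom-right.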
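For reference, a direct (slow) floating-point version of the 8×8 DCT and its inverse is sketched below, using the usual JPEG normalization C(0) = 1/√2, C(k) = 1 otherwise. The names `fdct8x8` and `idct8x8` are hypothetical; practical decoders, including tinyjpeg judging by the `aanscalefactor` used in `build_quantization_table()`, use fast factorized algorithms instead. The cosines are tabulated so the sketch needs no math library.

```c
#include <assert.h>

/* cos(k*pi/16) for k = 0..8. */
static const double COS16[9] = {
    1.0, 0.9807852804032304, 0.9238795325112867, 0.8314696123025452,
    0.7071067811865476, 0.5555702330196022, 0.3826834323650898,
    0.1950903220161283, 0.0
};

/* cos(k*pi/16) for any k >= 0, using the symmetries of cosine. */
static double cos16(int k)
{
    k %= 32;                            /* period 2*pi */
    if (k > 16) k = 32 - k;             /* cos(2pi - a) = cos(a) */
    if (k > 8) return -COS16[16 - k];   /* cos(pi - a) = -cos(a) */
    return COS16[k];
}

static double C(int u) { return u == 0 ? 0.7071067811865476 : 1.0; } /* 1/sqrt(2) */

/* F(u,v) = 1/4 C(u) C(v) sum_x sum_y f(x,y) cos((2x+1)u pi/16) cos((2y+1)v pi/16).
   Row-major layout: f[y*8+x], F[v*8+u]. Input and output must not alias. */
void fdct8x8(const double f[64], double F[64])
{
    assert(f != F);
    for (int v = 0; v < 8; v++)
        for (int u = 0; u < 8; u++) {
            double s = 0.0;
            for (int y = 0; y < 8; y++)
                for (int x = 0; x < 8; x++)
                    s += f[y * 8 + x] * cos16((2 * x + 1) * u) * cos16((2 * y + 1) * v);
            F[v * 8 + u] = 0.25 * C(u) * C(v) * s;
        }
}

/* f(x,y) = 1/4 sum_u sum_v C(u) C(v) F(u,v) cos((2x+1)u pi/16) cos((2y+1)v pi/16). */
void idct8x8(const double F[64], double f[64])
{
    assert(f != F);
    for (int y = 0; y < 8; y++)
        for (int x = 0; x < 8; x++) {
            double s = 0.0;
            for (int v = 0; v < 8; v++)
                for (int u = 0; u < 8; u++)
                    s += C(u) * C(v) * F[v * 8 + u]
                         * cos16((2 * x + 1) * u) * cos16((2 * y + 1) * v);
            f[y * 8 + x] = 0.25 * s;
        }
}
```

A constant block produces only a DC coefficient (8 times the sample value), and a forward transform followed by the inverse reproduces the block up to floating-point error.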

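3. Quantization

Each coefficient F(u,v) is divided by a quantization step Q(u,v). Luminance and chrominance use different quantization tables, which were determined through experiments and statistics as visual thresholds.

The tables exploit the eye's lower sensitivity to chrominance and to high-frequency detail: the steps are larger for high frequencies than for low ones, and larger for chrominance than for luminance.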

$$[F(u,v)]_Q = \mathrm{round}\left(\frac{F(u,v)}{Q(u,v)}\right)$$

(The standard rounds to the nearest integer rather than truncating.) At the decoder,

$$F'(u,v) = [F(u,v)]_Q \cdot Q(u,v)$$

gives the reconstructed coefficients F′(u,v).
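A minimal sketch of quantization and dequantization, for one coefficient and for a whole block, assuming round-to-nearest as in the formula above. The function names are hypothetical.

```c
#include <assert.h>

/* Encoder: [F]_Q = round(F / Q), halves rounded away from zero. */
int quantize(double F, int Q)
{
    assert(Q > 0);
    double r = F / Q;
    return (int)(r >= 0 ? r + 0.5 : r - 0.5);
}

/* Decoder: F' = [F]_Q * Q. */
int dequantize(int Fq, int Q)
{
    return Fq * Q;
}

/* Quantizes an 8x8 block with a 64-entry table (same layout for both). */
void quantize_block(const double F[64], const int Q[64], int Fq[64])
{
    for (int i = 0; i < 64; i++)
        Fq[i] = quantize(F[i], Q[i]);
}
```

For example, with F = 100 and Q = 16 the encoder sends 6 and the decoder reconstructs 96; this error of up to Q/2 per coefficient is where the loss comes from.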

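4. Zigzag scan

After quantization, most of the high-frequency coefficients in the bottom-right are 0. To make run-length coding more efficient, the quantized coefficients are read out in zigzag order, as shown in the figure below.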
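The zigzag order does not have to be typed in by hand. The sketch below (hypothetical function `build_zigzag`) generates it by walking the anti-diagonals: `order[]` maps a scan position to a row-major index, and `inverse[]` is the opposite mapping, which has the same layout as the `zigzag[64]` table used by tinyjpeg.

```c
#include <assert.h>

/* order[k]   = row-major index (row*8+col) of the k-th coefficient in the scan;
   inverse[i] = scan position of the coefficient at row-major index i. */
void build_zigzag(unsigned char order[64], unsigned char inverse[64])
{
    int row = 0, col = 0;
    for (int k = 0; k < 64; k++) {
        assert(row >= 0 && row < 8 && col >= 0 && col < 8);
        order[k] = (unsigned char)(row * 8 + col);
        inverse[row * 8 + col] = (unsigned char)k;
        if ((row + col) % 2 == 0) {          /* moving up and to the right */
            if (col == 7) row++;
            else if (row == 0) col++;
            else { row--; col++; }
        } else {                              /* moving down and to the left */
            if (row == 7) col++;
            else if (col == 0) row++;
            else { row++; col--; }
        }
    }
}
```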

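5. DPCM coding of the DC coefficient

The DC coefficient is the top-left coefficient of the block. It represents the block's average (DC) level and is usually large.

Because the DC coefficients of neighboring blocks are usually strongly correlated, they are coded with DPCM.

The encoder transmits the difference between the DC coefficient of the current block and that of the previous block of the same component.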
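The DC DPCM step and its inverse, sketched for a sequence of DC values from one component. The function names are hypothetical; the predictor starts at 0, which is also the value a decoder resets it to at each restart marker.

```c
#include <assert.h>

/* Encoder: DIFF_i = DC_i - DC_{i-1}, with DC_{-1} = 0. */
void dc_dpcm_encode(const int *dc, int n, int *diff)
{
    assert(n >= 0);
    int pred = 0;
    for (int i = 0; i < n; i++) {
        diff[i] = dc[i] - pred;
        pred = dc[i];
    }
}

/* Decoder: DC_i = DC_{i-1} + DIFF_i (the role of a per-component
   "previous DC" variable in a decoder). */
void dc_dpcm_decode(const int *diff, int n, int *dc)
{
    int pred = 0;
    for (int i = 0; i < n; i++) {
        pred += diff[i];
        dc[i] = pred;
    }
}
```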

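6. Run-length coding of the AC coefficients

All coefficients except the top-left one are run-length coded in the zigzag order determined above: each nonzero coefficient is coded together with the number of zeros that precede it.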
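A sketch of the AC run-length step, producing (run, value) pairs from a block that is already in zigzag order. The `RunLevel` type and `rle_ac` name are hypothetical; the two special symbols follow the baseline JPEG conventions: ZRL (15, 0) for a run of 16 zeros, and EOB (0, 0) when only zeros remain.

```c
#include <assert.h>

typedef struct { int run; int value; } RunLevel;  /* (zeros before, nonzero value) */

/* zz[0] is the DC coefficient and is skipped. Runs of 16 or more zeros
   before a nonzero value are split with ZRL; trailing zeros become one EOB.
   Returns the number of pairs written to out. */
int rle_ac(const int zz[64], RunLevel out[64])
{
    int n = 0, run = 0;
    for (int k = 1; k < 64; k++) {
        if (zz[k] == 0) { run++; continue; }
        while (run > 15) {                 /* ZRL stands for 16 zeros */
            out[n++] = (RunLevel){15, 0};
            run -= 16;
        }
        out[n++] = (RunLevel){run, zz[k]};
        run = 0;
    }
    if (run > 0)
        out[n++] = (RunLevel){0, 0};       /* EOB */
    assert(n <= 64);
    return n;
}
```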

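7. Variable-length entropy coding

To remove the statistical redundancy that remains in the resulting symbols, they are variable-length coded.

Huffman coding is used, with separate Huffman tables for luminance DC, luminance AC, chrominance DC and chrominance AC. Each value is sent as the Huffman code of its size category followed by its amplitude bits. See the relevant references for details.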
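The amplitude part can be made concrete. Each DC difference or nonzero AC value v is sent as a Huffman-coded size category s (the number of bits in |v|) followed by s raw bits, where negative values use the one's complement of |v|. The sketch below uses hypothetical names; `extend` mirrors the EXTEND procedure of the JPEG standard.

```c
#include <assert.h>

/* Size category SSSS: number of bits needed for |v| (0 for v == 0). */
int magnitude_category(int v)
{
    unsigned m = (unsigned)(v < 0 ? -v : v);
    int s = 0;
    while (m) { s++; m >>= 1; }
    return s;
}

/* The s bits appended after the Huffman code: v itself if v >= 0,
   otherwise v + 2^s - 1 (the one's complement of |v| in s bits). */
unsigned amplitude_bits(int v, int s)
{
    assert(s >= 0 && s <= 15);
    return (unsigned)(v >= 0 ? v : v + (1 << s) - 1);
}

/* Decoder side: a leading 0 bit means a negative value. */
int extend(unsigned bits, int s)
{
    if (s == 0) return 0;
    return bits < (1u << (s - 1)) ? (int)bits - (1 << s) + 1 : (int)bits;
}
```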

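8. Decoding

Decoding is essentially the inverse of encoding. It produces a reconstructed image f′(x,y), which differs from the original image by an error e(x,y).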

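JPEG file format overview

JPEG syntax

A JPEG file is divided into segments, each beginning with a marker that identifies the segment's content.

Every marker is 0xFF followed by a 1-byte marker code. Except for stand-alone markers such as SOI, EOI and RSTn, the marker is followed by a 2-byte length (high byte first; the count includes the two length bytes but not the marker) and then the segment's payload.

Within the entropy-coded data, every 0xFF data byte is followed by a stuffed 0x00 so that it cannot be mistaken for a marker; the decoder discards the 0x00 and keeps the 0xFF.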
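This marker structure can be walked with a few lines of C. The sketch (hypothetical `walk_markers`, not tinyjpeg's parse_JFIF()) records each marker code up to SOS or EOI, skips 0xFF fill bytes, treats SOI and RSTn as having no length field, and uses the length field to jump to the next segment.

```c
#include <assert.h>
#include <stddef.h>

static unsigned be16(const unsigned char *p) { return ((unsigned)p[0] << 8) | p[1]; }

/* Stores each marker code (the byte after 0xFF) in markers[] and returns
   how many were found, or -1 on malformed input. Stops after SOS, where
   entropy-coded data begins, or after EOI. */
int walk_markers(const unsigned char *buf, size_t size, unsigned char *markers, int max)
{
    assert(buf != NULL && markers != NULL && max > 0);
    size_t pos = 0;
    int n = 0;
    while (pos + 1 < size && n < max) {
        if (buf[pos] != 0xFF) return -1;
        while (pos < size && buf[pos] == 0xFF) pos++;       /* fill bytes */
        if (pos >= size) return -1;
        unsigned char m = buf[pos++];
        markers[n++] = m;
        if (m == 0xD8 || (m >= 0xD0 && m <= 0xD7)) continue; /* SOI, RSTn */
        if (m == 0xD9) break;                                 /* EOI */
        if (pos + 2 > size) return -1;
        unsigned len = be16(buf + pos);       /* includes the 2 length bytes */
        if (len < 2 || pos + len > size) return -1;
        if (m == 0xDA) break;                                 /* SOS */
        pos += len;
    }
    return n;
}
```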

Common markers:

| Name | Bytes | Payload | Description |
|---|---|---|---|
| SOI (Start of Image) | 0xFFD8 | none | Start of image |
| SOF0 (Start of Frame, Baseline DCT) | 0xFFC0 | variable | Indicates baseline DCT; gives the image width, height, sampling factors, etc. |
| SOF2 (Start of Frame, Progressive DCT) | 0xFFC2 | variable | Indicates progressive DCT; gives the image width, height, sampling factors, etc. |
| DHT (Define Huffman Table) | 0xFFC4 | variable | One or more Huffman tables |
| DQT (Define Quantization Table) | 0xFFDB | variable | One or more quantization tables |
| DRI (Define Restart Interval) | 0xFFDD | 2 bytes (after the length field) | Interval between RSTn markers, in MCUs |
| SOS (Start of Scan) | 0xFFDA | variable | Starts a scan of the image |
| RSTn (Restart) | 0xFFD0 to 0xFFD7 | none | (omitted) |
| APPn (Application Specific) | 0xFFE0 to 0xFFEF | variable | (omitted) |
| COM (Comment) | 0xFFFE | variable | Text comment |
| EOI (End of Image) | 0xFFD9 | none | End of image |

For lack of time, I won't walk through an example JPEG file here.

In short, decoding a JPEG file starts from its markers.

JPEG decoder program

Brief analysis of the program flow

main()

int main(int argc, char *argv[])

{

int output_format = TINYJPEG_FMT_YUV420P;

char *output_filename, *input_filename;

clock_t start_time, finish_time;

unsigned int duration;

int current_argument;

int benchmark_mode = 0;

#if TRACE

p_trace=fopen(TRACEFILE,"w");

if (p_trace==NULL)

{

printf("trace file open error!");

}

#endif

if (argc < 3)

usage();

current_argument = 1;

while (1)

{

if (strcmp(argv[current_argument], "--benchmark")==0)

benchmark_mode = 1;

else

break;

current_argument++;

}

if (argc < current_argument+2)

usage();

input_filename = argv[current_argument];

if (strcmp(argv[current_argument+1],"yuv420p")==0)

output_format = TINYJPEG_FMT_YUV420P;

else if (strcmp(argv[current_argument+1],"rgb24")==0)

output_format = TINYJPEG_FMT_RGB24;

else if (strcmp(argv[current_argument+1],"bgr24")==0)

output_format = TINYJPEG_FMT_BGR24;

else if (strcmp(argv[current_argument+1],"grey")==0)

output_format = TINYJPEG_FMT_GREY;

else

exitmessage("Bad format: need to be one of yuv420p, rgb24, bgr24, grey\n");

output_filename = argv[current_argument+2];

start_time = clock();

if (benchmark_mode)

load_multiple_times(input_filename, output_filename, output_format);

else

convert_one_image(input_filename, output_filename, output_format);

finish_time = clock();

duration = finish_time - start_time;

snprintf(error_string, sizeof(error_string),"Decoding finished in %u ticks\n", duration);

#if TRACE

fclose(p_trace);

#endif

return 0;

}

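The default output format is YUV420P.

Decoding is done by one of two functions, load_multiple_times() or convert_one_image(); the variable benchmark_mode selects which one.

The two functions are largely the same. Here is convert_one_image():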

convert_one_image()

int convert_one_image(const char *infilename, const char *outfilename, int output_format)

{

FILE *fp;

unsigned int length_of_file;

unsigned int width, height;

unsigned char *buf;

struct jdec_private *jdec;

unsigned char *components[3];

/* Load the Jpeg into memory */

fp = fopen(infilename, "rb");

if (fp == NULL)

exitmessage("Cannot open filename\n");

length_of_file = filesize(fp);

buf = (unsigned char *)malloc(length_of_file + 4);

if (buf == NULL)

exitmessage("Not enough memory for loading file\n");

fread(buf, length_of_file, 1, fp);

fclose(fp);

/* Decompress it */

jdec = tinyjpeg_init();

if (jdec == NULL)

exitmessage("Not enough memory to alloc the structure need for decompressing\n");

if (tinyjpeg_parse_header(jdec, buf, length_of_file)<0)

exitmessage(tinyjpeg_get_errorstring(jdec));

/* Get the size of the image */

tinyjpeg_get_size(jdec, &width, &height);

snprintf(error_string, sizeof(error_string),"Decoding JPEG image...\n");

if (tinyjpeg_decode(jdec, output_format) < 0)

exitmessage(tinyjpeg_get_errorstring(jdec));

/*

* Get address for each plane (not only max 3 planes is supported), and

* depending of the output mode, only some components will be filled

* RGB: 1 plane, YUV420P: 3 planes, GREY: 1 plane

*/

tinyjpeg_get_components(jdec, components);

/* Save it */

switch (output_format)

{

case TINYJPEG_FMT_RGB24:

case TINYJPEG_FMT_BGR24:

write_tga(outfilename, output_format, width, height, components);

break;

case TINYJPEG_FMT_YUV420P:

write_yuv(outfilename, width, height, components);

break;

case TINYJPEG_FMT_GREY:

write_pgm(outfilename, width, height, components);

break;

}

/* Only called this if the buffers were allocated by tinyjpeg_decode() */

tinyjpeg_free(jdec);

/* else called just free(jdec); */

free(buf);

return 0;

}

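The overall flow:

First, the whole JPEG file is read into the buffer buf.

Next, tinyjpeg_parse_header() parses the header information (the markers) in the JPEG stream to obtain what the decoder needs.

Then tinyjpeg_decode() decodes the image; the decoded planes are stored in jdec.

Finally, the result is written to a new file in the output format given on the command line.

load_multiple_times() follows the same flow, except that it decodes the image 1000 times (in a for loop) before writing the output file.

Next, part of the marker-parsing code: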

tinyjpeg_parse_header()

int tinyjpeg_parse_header(struct jdec_private *priv, const unsigned char *buf, unsigned int size)

{

int ret;

/* Identify the file */

if ((buf[0] != 0xFF) || (buf[1] != SOI))

{

snprintf(error_string, sizeof(error_string),"Not a JPG file ?\n");

return -1;

}

priv->stream_begin = buf+2;

priv->stream_length = size-2;

priv->stream_end = priv->stream_begin + priv->stream_length;

ret = parse_JFIF(priv, priv->stream_begin);

return ret;

}

static int parse_JFIF(struct jdec_private *priv, const unsigned char *stream)

{

int chuck_len;

int marker;

int sos_marker_found = 0;

int dht_marker_found = 0;

const unsigned char *next_chunck;

/* Parse marker */

while (!sos_marker_found)

{

if (*stream++ != 0xff)

goto bogus_jpeg_format;

/* Skip any padding ff byte (this is normal) */

while (*stream == 0xff)

stream++;

marker = *stream++;

chuck_len = be16_to_cpu(stream);

next_chunck = stream + chuck_len;

switch (marker)

{

case SOF:

if (parse_SOF(priv, stream) < 0)

return -1;

break;

case DQT:

if (parse_DQT(priv, stream) < 0)

return -1;

break;

case SOS:

if (parse_SOS(priv, stream) < 0)

return -1;

sos_marker_found = 1;

break;

case DHT:

if (parse_DHT(priv, stream) < 0)

return -1;

dht_marker_found = 1;

break;

case DRI:

if (parse_DRI(priv, stream) < 0)

return -1;

break;

default:

......

return -1;

}

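The overall flow:

- Check SOI.

- Check the JFIF identifier in APP0.

- Parse DQT, DHT, SOF0 and SOS to obtain the quantization tables and their IDs, the Huffman tables and their IDs, the sampling factors of each color component, which tables each component uses, and so on, in preparation for decoding. (If there is no DHT segment, default Huffman tables are used.)

Extra 0xFF fill bytes before a marker are skipped.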

parse_SOF()

static int parse_SOF(struct jdec_private *priv, const unsigned char *stream)

{

int i, width, height, nr_components, cid, sampling_factor;

int Q_table;

struct component *c;

......

print_SOF(stream);

height = be16_to_cpu(stream+3);

width = be16_to_cpu(stream+5);

nr_components = stream[7];

......

stream += 8;

for (i=0; i<nr_components; i++) {

cid = *stream++;

sampling_factor = *stream++;

Q_table = *stream++;

c = &priv->component_infos[i];

......

c->Vfactor = sampling_factor&0xf;

c->Hfactor = sampling_factor>>4;

c->Q_table = priv->Q_tables[Q_table];

......

}

priv->width = width;

priv->height = height;

......

return 0;

}

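height and width are the image height and width, cid is each component's ID, and Q_table is the ID of the quantization table the component uses. sampling_factor holds the horizontal (high 4 bits) and vertical (low 4 bits) sampling factors, and nr_components is the number of color components.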

parse_DHT()

Parsing DHT with parse_DHT():

static int parse_DHT(struct jdec_private *priv, const unsigned char *stream)

{

......

while (length>0) {

index = *stream++;

/* We need to calculate the number of bytes 'vals' will takes */

huff_bits[0] = 0;

count = 0;

for (i=1; i<17; i++) {

huff_bits[i] = *stream++;

count += huff_bits[i];

}

......

if (index & 0xf0 )

build_huffman_table(huff_bits, stream, &priv->HTAC[index&0xf]);

else

build_huffman_table(huff_bits, stream, &priv->HTDC[index&0xf]);

length -= 1;

length -= 16;

length -= count;

stream += count;

}

......

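The code-count field is always 16 bytes: byte i gives the number of codes of length i (i = 1 to 16). The bytes after these 16 are the symbol values, listed in order of increasing code length. In a DC table each symbol is a size category, i.e. the bit length of the DPCM-coded DC difference; in an AC table each symbol packs a zero-run length (high 4 bits) and a size category (low 4 bits).

The code first reads the 16 counts into huff_bits[1..16] (with huff_bits[0] = 0), then calls **build_huffman_table()** with these counts and the symbol values that follow to build the Huffman table.

The high 4 bits of index (tested with index & 0xf0) give the table class, 0 for DC and 1 for AC; the low 4 bits (index & 0xf) give the table ID, which selects the entry in HTDC[] or HTAC[].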
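How the counts and symbol values turn into actual codes: JPEG Huffman codes are canonical, so a table is fully determined by the 16 counts and the symbol list. The sketch below is a condensed form of the code-generation procedure in the standard, not tinyjpeg's build_huffman_table(); the names are hypothetical. The test uses the example luminance DC table from Annex K of the standard.

```c
#include <assert.h>

struct huff_code { unsigned char value; unsigned short code; unsigned char size; };

/* bits[1..16] = number of codes of each length (bits[0] unused, like
   huff_bits[0] = 0); vals = symbols in order of increasing code length.
   Codes of one length are consecutive integers; moving to the next
   length appends a 0 bit. Returns the number of codes. */
int build_canonical_codes(const unsigned char bits[17], const unsigned char *vals,
                          struct huff_code out[256])
{
    unsigned code = 0;
    int k = 0;
    for (int len = 1; len <= 16; len++) {
        for (int i = 0; i < bits[len]; i++, k++) {
            assert(k < 256);
            out[k].value = vals[k];
            out[k].code = (unsigned short)code++;
            out[k].size = (unsigned char)len;
        }
        code <<= 1;
    }
    return k;
}
```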

parse_DQT()

static int parse_DQT(struct jdec_private *priv, const unsigned char *stream)

{

int qi;

float *table;

const unsigned char *dqt_block_end;

......

dqt_block_end = stream + be16_to_cpu(stream);

stream += 2; /* Skip length */

while (stream < dqt_block_end)

{

qi = *stream++;

......

table = priv->Q_tables[qi];

build_quantization_table(table, stream);

stream += 64;

}

return 0;

}

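qi is the byte that precedes each table. Its low 4 bits are the table ID (0 to 3) and its high 4 bits the precision of the entries (0 for 8-bit, 1 for 16-bit), so using qi directly as an index, as in the excerpt above, only works for 8-bit tables. Quantization tables are not split into DC and AC tables: each 64-entry table covers all coefficients of a block, and typically table 0 is used for luminance and table 1 for chrominance.

build_quantization_table() is called once for each table to build it.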

parse_SOS()

unsigned int i, cid, table;

unsigned int nr_components = stream[2];

stream += 3;

for (i=0;i<nr_components;i++) {

cid = *stream++;

table = *stream++;

fprintf(p_trace,"ComponentId:%d tableAC:%d tableDC:%d\n", cid, table&0xf, table>>4);

fflush(p_trace);

}

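This gives each component's ID (cid) and the Huffman tables it uses: the high 4 bits of table select the DC table and the low 4 bits the AC table.

Decoding: tinyjpeg_decode()

Once the necessary information has been collected, decoding can start.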

int tinyjpeg_decode(struct jdec_private *priv, int pixfmt)

{

unsigned int x, y, xstride_by_mcu, ystride_by_mcu;

unsigned int bytes_per_blocklines[3], bytes_per_mcu[3];

decode_MCU_fct decode_MCU;

const decode_MCU_fct *decode_mcu_table;

const convert_colorspace_fct *colorspace_array_conv;

convert_colorspace_fct convert_to_pixfmt;

if (setjmp(priv->jump_state))

return -1;

/* To keep gcc happy initialize some array */

bytes_per_mcu[1] = 0;

bytes_per_mcu[2] = 0;

bytes_per_blocklines[1] = 0;

bytes_per_blocklines[2] = 0;

decode_mcu_table = decode_mcu_3comp_table;

switch (pixfmt) {

case TINYJPEG_FMT_YUV420P:

colorspace_array_conv = convert_colorspace_yuv420p;

if (priv->components[0] == NULL)

priv->components[0] = (uint8_t *)malloc(priv->width * priv->height);

if (priv->components[1] == NULL)

priv->components[1] = (uint8_t *)malloc(priv->width * priv->height/4);

if (priv->components[2] == NULL)

priv->components[2] = (uint8_t *)malloc(priv->width * priv->height/4);

bytes_per_blocklines[0] = priv->width;

bytes_per_blocklines[1] = priv->width/4;

bytes_per_blocklines[2] = priv->width/4;

bytes_per_mcu[0] = 8;

bytes_per_mcu[1] = 4;

bytes_per_mcu[2] = 4;

break;

case TINYJPEG_FMT_RGB24:

colorspace_array_conv = convert_colorspace_rgb24;

if (priv->components[0] == NULL)

priv->components[0] = (uint8_t *)malloc(priv->width * priv->height * 3);

bytes_per_blocklines[0] = priv->width * 3;

bytes_per_mcu[0] = 3*8;

break;

case TINYJPEG_FMT_BGR24:

colorspace_array_conv = convert_colorspace_bgr24;

if (priv->components[0] == NULL)

priv->components[0] = (uint8_t *)malloc(priv->width * priv->height * 3);

bytes_per_blocklines[0] = priv->width * 3;

bytes_per_mcu[0] = 3*8;

break;

case TINYJPEG_FMT_GREY:

decode_mcu_table = decode_mcu_1comp_table;

colorspace_array_conv = convert_colorspace_grey;

if (priv->components[0] == NULL)

priv->components[0] = (uint8_t *)malloc(priv->width * priv->height);

bytes_per_blocklines[0] = priv->width;

bytes_per_mcu[0] = 8;

break;

default:

#if TRACE

fprintf(p_trace,"Bad pixel format\n");

fflush(p_trace);

#endif

return -1;

}

xstride_by_mcu = ystride_by_mcu = 8;

if ((priv->component_infos[cY].Hfactor | priv->component_infos[cY].Vfactor) == 1) {

decode_MCU = decode_mcu_table[0];

convert_to_pixfmt = colorspace_array_conv[0];

#if TRACE

fprintf(p_trace,"Use decode 1x1 sampling\n");

fflush(p_trace);

#endif

} else if (priv->component_infos[cY].Hfactor == 1) {

decode_MCU = decode_mcu_table[1];

convert_to_pixfmt = colorspace_array_conv[1];

ystride_by_mcu = 16;

#if TRACE

fprintf(p_trace,"Use decode 1x2 sampling (not supported)\n");

fflush(p_trace);

#endif

} else if (priv->component_infos[cY].Vfactor == 2) {

decode_MCU = decode_mcu_table[3];

convert_to_pixfmt = colorspace_array_conv[3];

xstride_by_mcu = 16;

ystride_by_mcu = 16;

#if TRACE

fprintf(p_trace,"Use decode 2x2 sampling\n");

fflush(p_trace);

#endif

} else {

decode_MCU = decode_mcu_table[2];

convert_to_pixfmt = colorspace_array_conv[2];

xstride_by_mcu = 16;

#if TRACE

fprintf(p_trace,"Use decode 2x1 sampling\n");

fflush(p_trace);

#endif

}

resync(priv);

/* Don't forget that block can be either 8 or 16 lines */

bytes_per_blocklines[0] *= ystride_by_mcu;

bytes_per_blocklines[1] *= ystride_by_mcu;

bytes_per_blocklines[2] *= ystride_by_mcu;

bytes_per_mcu[0] *= xstride_by_mcu/8;

bytes_per_mcu[1] *= xstride_by_mcu/8;

bytes_per_mcu[2] *= xstride_by_mcu/8;

/* Just the decode the image by macroblock (size is 8x8, 8x16, or 16x16) */

for (y=0; y < priv->height/ystride_by_mcu; y++)

{

//trace("Decoding row %d\n", y);

priv->plane[0] = priv->components[0] + (y * bytes_per_blocklines[0]);

priv->plane[1] = priv->components[1] + (y * bytes_per_blocklines[1]);

priv->plane[2] = priv->components[2] + (y * bytes_per_blocklines[2]);

for (x=0; x < priv->width; x+=xstride_by_mcu)

{

decode_MCU(priv);

convert_to_pixfmt(priv);

priv->plane[0] += bytes_per_mcu[0];

priv->plane[1] += bytes_per_mcu[1];

priv->plane[2] += bytes_per_mcu[2];

if (priv->restarts_to_go>0)

{

priv->restarts_to_go--;

if (priv->restarts_to_go == 0)

{

priv->stream -= (priv->nbits_in_reservoir/8);

resync(priv);

if (find_next_rst_marker(priv) < 0)

return -1;

}

}

}

}

......

return 0;

}

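First, the horizontal and vertical sampling factors of the Y component determine the MCU (minimum coded unit) size, and hence the number of 8×8 blocks per MCU, along with the decode and color-conversion functions to use.

decode_MCU() is then called for each MCU. MCUs with different sampling factors are decoded by different functions, for example:

- decode_MCU_2x2_3planes handles 4:2:0 sampling

- decode_MCU_2x1_3planes handles 4:2:2 sampling

- decode_MCU_1x1_3planes handles 4:4:4 sampling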
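The relationship between sampling factors and MCU layout can be summarized in a small helper. It is a sketch with hypothetical names, assuming both chroma components use 1×1 sampling as in the decode_MCU_*_3planes functions; partial MCUs at the right and bottom edges are counted.

```c
#include <assert.h>

struct mcu_geometry { int mcu_w, mcu_h, y_blocks, mcus_x, mcus_y; const char *chroma; };

/* H, V: horizontal/vertical sampling factors of the Y component. */
struct mcu_geometry mcu_layout(int H, int V, int width, int height)
{
    assert(H >= 1 && H <= 2 && V >= 1 && V <= 2);
    struct mcu_geometry g;
    g.mcu_w = 8 * H;
    g.mcu_h = 8 * V;
    g.y_blocks = H * V;                              /* 8x8 Y blocks per MCU */
    g.mcus_x = (width + g.mcu_w - 1) / g.mcu_w;      /* round up */
    g.mcus_y = (height + g.mcu_h - 1) / g.mcu_h;
    if (H == 2 && V == 2) g.chroma = "4:2:0";
    else if (H == 2) g.chroma = "4:2:2";
    else if (V == 2) g.chroma = "4:4:0";
    else g.chroma = "4:4:4";
    return g;
}
```

Unlike this helper, the row loop in tinyjpeg_decode() runs to priv->height/ystride_by_mcu, which rounds down, so a partial MCU row at the bottom of an image whose height is not a multiple of the MCU height is not decoded.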

Taking decode_MCU_2x2_3planes as an example:

static void decode_MCU_2x2_3planes(struct jdec_private *priv)

{

// Y

process_Huffman_data_unit(priv, cY);

IDCT(&priv->component_infos[cY], priv->Y, 16);

process_Huffman_data_unit(priv, cY);

IDCT(&priv->component_infos[cY], priv->Y+8, 16);

process_Huffman_data_unit(priv, cY);

IDCT(&priv->component_infos[cY], priv->Y+64*2, 16);

process_Huffman_data_unit(priv, cY);

IDCT(&priv->component_infos[cY], priv->Y+64*2+8, 16);

// Cb

process_Huffman_data_unit(priv, cCb);

IDCT(&priv->component_infos[cCb], priv->Cb, 8);

// Cr

process_Huffman_data_unit(priv, cCr);

IDCT(&priv->component_infos[cCr], priv->Cr, 8);

}

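In outline:

**process_Huffman_data_unit()** decodes the quantized DCT coefficients of one 8×8 block, and **IDCT()** dequantizes them with the component's Q_table and applies the inverse DCT to obtain Y, U or V samples. Both are called from decode_MCU().

At every RSTn marker, the DC predictors (previous_DC) are reset to 0 and the bit reservoir is cleared (in resync()), so that decoding restarts cleanly.

The detailed code is too long to list here.

Once the YUV samples of an MCU are available, they are converted to the requested color space and stored via priv. Decoding is complete after all MCUs have been processed, and the file is then written in the requested format.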

Zigzag ordering

Decoding has to undo the zigzag ordering. The program does this with the following table:

static const unsigned char zigzag[64] =

{

0, 1, 5, 6, 14, 15, 27, 28,

2, 4, 7, 13, 16, 26, 29, 42,

3, 8, 12, 17, 25, 30, 41, 43,

9, 11, 18, 24, 31, 40, 44, 53,

10, 19, 23, 32, 39, 45, 52, 54,

20, 22, 33, 38, 46, 51, 55, 60,

21, 34, 37, 47, 50, 56, 59, 61,

35, 36, 48, 49, 57, 58, 62, 63

};

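For each position of the 8×8 block in row-major order, the table gives that coefficient's index in the zigzag sequence.

The three structs defined in the program

The decoder's state is organized into three structs, all defined in tinyjpeg-internal.h.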

huffman_table

struct huffman_table

{

/* Fast look up table, using HUFFMAN_HASH_NBITS bits we can have directly the symbol,

* if the symbol is <0, then we need to look into the tree table */

short int lookup[HUFFMAN_HASH_SIZE];

/* code size: give the number of bits of a symbol is encoded */

unsigned char code_size[HUFFMAN_HASH_SIZE];

/* some place to store value that is not encoded in the lookup table

* FIXME: Calculate if 256 value is enough to store all values

*/

uint16_t slowtable[16-HUFFMAN_HASH_NBITS][256];

};

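The Huffman table struct. lookup[] is a fast lookup table indexed by the next HUFFMAN_HASH_NBITS bits of the stream, giving the decoded symbol directly, and code_size[] gives the length of that symbol's code. Codes too long for the lookup table are found through slowtable[].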
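The idea behind lookup[] and code_size[] can be sketched as follows: for every code no longer than the lookup width, all entries whose leading bits equal that code are filled with its symbol, so a decoder can peek at a fixed number of bits and find the symbol in one step. `LOOKUP_BITS` is a stand-in for HUFFMAN_HASH_NBITS, whose actual value is not shown here; the function name is hypothetical.

```c
#include <assert.h>

#define LOOKUP_BITS 9   /* hypothetical stand-in for HUFFMAN_HASH_NBITS */

/* bits[1..16] = code counts per length, vals = symbols (DHT layout).
   Entries not covered by a short code stay -1: those bit patterns start
   a longer code and need a slower path (tinyjpeg uses slowtable). */
void build_lookup(const unsigned char bits[17], const unsigned char *vals,
                  short lookup[1 << LOOKUP_BITS],
                  unsigned char code_size[1 << LOOKUP_BITS])
{
    for (int i = 0; i < (1 << LOOKUP_BITS); i++) { lookup[i] = -1; code_size[i] = 0; }
    unsigned code = 0;
    int k = 0;
    for (int len = 1; len <= 16; len++) {
        for (int i = 0; i < bits[len]; i++, k++, code++) {
            if (len > LOOKUP_BITS) continue;          /* too long for the table */
            int shift = LOOKUP_BITS - len;
            unsigned first = code << shift;           /* code followed by 0s */
            for (unsigned j = 0; j < (1u << shift); j++) {
                assert(first + j < (1u << LOOKUP_BITS));
                lookup[first + j] = vals[k];
                code_size[first + j] = (unsigned char)len;
            }
        }
        code <<= 1;                                   /* canonical: next length */
    }
}
```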

component

struct component

{

unsigned int Hfactor;

unsigned int Vfactor;

float *Q_table; /* Pointer to the quantisation table to use */

struct huffman_table *AC_table;

struct huffman_table *DC_table;

short int previous_DC; /* Previous DC coefficient */

short int DCT[64]; /* DCT coef */

#if SANITY_CHECK

unsigned int cid;

#endif

};

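The per-component struct (one per color component). Hfactor and Vfactor are the horizontal and vertical sampling factors, and the two huffman_table pointers refer to the component's AC and DC tables.

previous_DC holds the DC coefficient of the previous block of the same component and is used to undo the DPCM coding of the DC coefficient.

DCT[64] holds the 64 DCT coefficients of the current 8×8 block.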

jdec_private

struct jdec_private

{

/* Public variables */

uint8_t *components[COMPONENTS];

unsigned int width, height; /* Size of the image */

unsigned int flags;

/* Private variables */

const unsigned char *stream_begin, *stream_end;

unsigned int stream_length;

const unsigned char *stream; /* Pointer to the current stream */

unsigned int reservoir, nbits_in_reservoir;

struct component component_infos[COMPONENTS];

float Q_tables[COMPONENTS][64]; /* quantization tables */

struct huffman_table HTDC[HUFFMAN_TABLES]; /* DC huffman tables */

struct huffman_table HTAC[HUFFMAN_TABLES]; /* AC huffman tables */

int default_huffman_table_initialized;

int restart_interval;

int restarts_to_go; /* MCUs left in this restart interval */

int last_rst_marker_seen; /* Rst marker is incremented each time */

/* Temp space used after the IDCT to store each components */

uint8_t Y[64*4], Cr[64], Cb[64];

jmp_buf jump_state;

/* Internal Pointer use for colorspace conversion, do not modify it !!! */

uint8_t *plane[COMPONENTS];

};

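The main decoder struct. The main program uses it to hold the whole decoding state, including the decoded image planes.

Its members include the image width and height, the stream pointers and length, the quantization tables, the Huffman tables, and so on.

The three structs can be seen as three nested layers: jdec_private contains arrays of component and huffman_table, and each component points to its own quantization table and Huffman tables. This way of organizing structs is a useful pattern to learn from.

Command-line arguments

The program's command-line arguments are:

--benchmark (optional)  input file name (with extension)  output format  output file name (without extension)

The first argument may be omitted. If present, benchmark mode is enabled: the image is decoded 1000 times before the output is written.

Lab tasks

Task 1

Step through the JPEG decoder in the debugger. Decode the input JPG file and save the output as a YUV file that can be viewed in YUVViewer.

The input is the test.jpg (1024×1024) provided for the lab.

The original source has no code for writing a single YUV file; it only writes the Y, U and V components to three separate files: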

static void write_yuv(const char *filename, int width, int height, unsigned char **components)

{

FILE *F;

char temp[1024];

snprintf(temp, 1024, "%s.Y", filename);

F = fopen(temp, "wb");

fwrite(components[0], width, height, F);

fclose(F);

snprintf(temp, 1024, "%s.U", filename);

F = fopen(temp, "wb");

fwrite(components[1], width*height/4, 1, F);

fclose(F);

snprintf(temp, 1024, "%s.V", filename);

F = fopen(temp, "wb");

fwrite(components[2], width*height/4, 1, F);

fclose(F);

}

To write a single YUV file, add the following code:

// in tinyjpeg.h

enum tinyjpeg_fmt {

TINYJPEG_FMT_GREY = 1,

TINYJPEG_FMT_BGR24,

TINYJPEG_FMT_RGB24,

TINYJPEG_FMT_YUV420P,

TINYJPEG_FMT_YUV,//newly added

};

......

// in main()

input_filename = argv[current_argument];

if (strcmp(argv[current_argument+1],"yuv420p")==0)

output_format = TINYJPEG_FMT_YUV420P;

else if (strcmp(argv[current_argument+1],"rgb24")==0)

output_format = TINYJPEG_FMT_RGB24;

else if (strcmp(argv[current_argument+1],"bgr24")==0)

output_format = TINYJPEG_FMT_BGR24;

else if (strcmp(argv[current_argument+1],"grey")==0)

output_format = TINYJPEG_FMT_GREY;

else if (strcmp(argv[current_argument+1],"yuv")==0)

output_format = TINYJPEG_FMT_YUV;//newly added

else

exitmessage("Bad format: need to be one of yuv420p, rgb24, bgr24, grey, yuv\n");

output_filename = argv[current_argument+2];

......

// in convert_one_image()

switch (output_format)

{

case TINYJPEG_FMT_RGB24:

case TINYJPEG_FMT_BGR24:

write_tga(outfilename, output_format, width, height, components);

break;

case TINYJPEG_FMT_YUV420P:

write_yuv(outfilename, width, height, components);

break;

case TINYJPEG_FMT_GREY:

write_pgm(outfilename, width, height, components);

break;

case TINYJPEG_FMT_YUV:

write_yuv_single(outfilename, width, height, components);//newly added

break;

}

......

// in load_multiple_times()

switch (output_format)

{

case TINYJPEG_FMT_RGB24:

case TINYJPEG_FMT_BGR24:

write_tga(outfilename, output_format, width, height, components);

break;

case TINYJPEG_FMT_YUV420P:

write_yuv(outfilename, width, height, components);

break;

case TINYJPEG_FMT_GREY:

write_pgm(outfilename, width, height, components);

break;

case TINYJPEG_FMT_YUV:

write_yuv_single(outfilename, width, height, components);//newly added

break;

}

......

static void usage(void)

{

fprintf(stderr, "Usage: loadjpeg [options] \n" );

fprintf(stderr, "options:\n");

fprintf(stderr, " --benchmark - Convert 1000 times the same image\n");

fprintf(stderr, "format:\n");

fprintf(stderr, " yuv420p - output 3 files .Y,.U,.V\n");

fprintf(stderr, " rgb24 - output a .tga image\n");

fprintf(stderr, " bgr24 - output a .tga image\n");

fprintf(stderr, " grey - output a .pgm image\n");

fprintf(stderr, " yuv - output a .yuv image\n");//newly added

exit(1);

}

......

tinyjpeg_decode()

decode_mcu_table = decode_mcu_3comp_table;

switch (pixfmt) {

case TINYJPEG_FMT_YUV:

case TINYJPEG_FMT_YUV420P:

colorspace_array_conv = convert_colorspace_yuv420p;

if (priv->components[0] == NULL)

priv->components[0] = (uint8_t *)malloc(priv->width * priv->height);

if (priv->components[1] == NULL)

priv->components[1] = (uint8_t *)malloc(priv->width * priv->height/4);

if (priv->components[2] == NULL)

priv->components[2] = (uint8_t *)malloc(priv->width * priv->height/4);

bytes_per_blocklines[0] = priv->width;

bytes_per_blocklines[1] = priv->width/4;

bytes_per_blocklines[2] = priv->width/4;

bytes_per_mcu[0] = 8;

bytes_per_mcu[1] = 4;

bytes_per_mcu[2] = 4;

break;

......

/**

* Save a buffer in yuv file

* newly added

*/

static void write_yuv_single(const char *filename, int width, int height, unsigned char **components)

{

FILE *F;

char temp[1024];

snprintf(temp, 1024, "%s.yuv", filename);

F = fopen(temp, "wb");

fwrite(components[0], width, height, F);

fwrite(components[1], width*height/4, 1, F);

fwrite(components[2], width*height/4, 1, F);

fclose(F);

}

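We added a TINYJPEG_FMT_YUV value to enum tinyjpeg_fmt. When the format argument is "yuv", the newly added write_yuv_single() is called and writes a single 4:2:0 YUV file: the Y plane followed by the U and V planes.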
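To check the output in YUVViewer, it helps to know the exact layout write_yuv_single() produces. A small sketch (hypothetical names) computing the plane sizes and offsets of one planar 4:2:0 frame, assuming even width and height as the width*height/4 chroma size does:

```c
#include <assert.h>
#include <stddef.h>

struct yuv420_layout { size_t y_size, uv_size, u_offset, v_offset, frame_size; };

/* Y plane (width x height), then U and V planes (width/2 x height/2 each). */
struct yuv420_layout yuv420_layout_for(size_t width, size_t height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    struct yuv420_layout l;
    l.y_size = width * height;
    l.uv_size = (width / 2) * (height / 2);
    l.u_offset = l.y_size;
    l.v_offset = l.y_size + l.uv_size;
    l.frame_size = l.y_size + 2 * l.uv_size;
    return l;
}
```

For the 1024×1024 test image, the .yuv file should therefore be 1,572,864 bytes, with U starting at byte 1,048,576 and V at byte 1,310,720.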

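The command-line arguments are therefore:

test.jpg yuv test

--benchmark is omitted, since decoding the image 1000 times would make the program rather slow.

The output is shown below:

Task 2

1. Understanding the code

My understanding of the code and the structs is described above.

2. The TRACE feature

Almost every part of the program contains preprocessor blocks like this: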

#if TRACE

...

#endif

TRACE records important information while the program runs, such as the parsed DHT and DQT segments and the sampling mode used.

In main() we find:

#if TRACE

p_trace=fopen(TRACEFILE,"w");

if (p_trace==NULL)

{

printf("trace file open error!");

}

#endif

......

#if TRACE

fclose(p_trace);

#endif

so all of this information is written to TRACEFILE.

The line in tinyjpeg.h

#define TRACEFILE "trace_jpeg.txt"

sets the name of TRACEFILE.

The trace file records the marker parsing, the contents of the Huffman tables, and so on.

To turn TRACE off, change #define TRACE 1 in tinyjpeg.h to:

#define TRACE 0

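The preprocessor then treats every #if TRACE as #if 0, so none of the TRACE blocks are compiled.

After this change, the IDE greys out all TRACE blocks to show that they are inactive.

TRACE is very useful during testing, for finding errors and inspecting intermediate information.

Task 3

Write all quantization matrices and all Huffman code tables to txt files.

The program already keeps these tables in variables, so we only need to add code that prints the Huffman tables and the quantization tables.

To keep the original flow intact, this code is placed in new #if check_table blocks.

Added code:

// added to tinyjpeg.h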

#define check_table 1

FILE *Q_table;//add by nxn

FILE *H_table;//add by nxn

#define QTABLEFILE "qtable_jpeg.txt"//added

#define HTABLEFILE "htable_jpeg.txt"//added

......

// added to main()

#if check_table

Q_table=fopen(QTABLEFILE,"w");

if (Q_table==NULL)

{

printf("Q_table file open error!");

}

H_table=fopen(HTABLEFILE,"w");

if (H_table==NULL)

{

printf("H_table file open error!");

}

#endif

......

#if check_table

fclose(Q_table);

fclose(H_table);

#endif

......

// added to build_quantization_table()

for (i=0; i<8; i++) {

for (j=0; j<8; j++) {

#if check_table

fprintf(Q_table, "%d\t", ref_table[*zz]);//added: print the table entry

fflush(Q_table);

if (j == 7)

{

fprintf(Q_table,"\n");

fflush(Q_table);

}

#endif

*qtable++ = ref_table[*zz++] * aanscalefactor[i] * aanscalefactor[j];

}

}

......

// added inside the loop in parse_DQT()

#if check_table

fprintf(Q_table, "Quantization_table [%d]:\n", qi);//added: print the quantization table ID

fflush(Q_table);

#endif

......

// added to parse_DHT()

#if check_table

fprintf(H_table,"Huffman table %s[%d] length=%d\n", (index&0xf0)?"AC":"DC", index&0xf, count);//added: print the Huffman table class and ID

fflush(H_table);

#endif

......

// added to build_huffman_table()

#if check_table

fprintf(H_table,"val=%2.2x code=%8.8x codesize=%2.2d\n", val, code, code_size);//added: print the Huffman table entries

fflush(H_table);

#endif

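The quantization tables and Huffman tables are written to qtable_jpeg.txt and htable_jpeg.txt respectively.

The output is shown below:

Tasks 4 and 5

Output the DC image and compute its probability distribution; output the image of one AC coefficient and compute its probability distribution.

First, define in tinyjpeg.h: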

FILE *DCFILE;//added

FILE *ACFILE;//added

#define ACDC_output 1//added

#define DCoutputFILE "dc_jpeg.yuv"//added

#define ACoutputFILE "ac_jpeg.yuv"//added

Add code to main() to open and close the files:

#if ACDC_output

DCFILE=fopen(DCoutputFILE,"wb");

if (DCFILE==NULL)

{

printf("DC output file open error!");

}

ACFILE=fopen(ACoutputFILE,"wb");

if (ACFILE==NULL)

{

printf("AC output file open error!");

}

#endif

......

#if ACDC_output

fclose(DCFILE);

fclose(ACFILE);

#endif

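The output itself is added directly in tinyjpeg_decode(). In the DCT array, DCT[0] is the DC coefficient and DCT[1], DCT[2], ... are the AC coefficients; here we take DCT[1].

The DC values are assumed to lie in [-512, 512]; adding 512 and dividing by 4 maps them to 0 to 255.

Since the AC values are usually small, 128 is added to make them easier to see.

One Y value is written per decoded MCU. For the 1024×1024 input this gives a 128×128 image when each MCU is a single 8×8 Y block (1×1 sampling). With 2×2 sampling, decode_MCU() decodes four Y blocks per 16×16 MCU and DCT[] holds only the last of them, so the image would be 64×64.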
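The mapping just described, written as two small helpers. This is a sketch with hypothetical names; unlike the plain casts in the code that follows, it clamps the results, because converting a value outside 0 to 255 to unsigned char wraps around modulo 256 (an AC value of -200 would become 184 instead of 0).

```c
#include <assert.h>

/* Clamps to the 0..255 range of an 8-bit sample. */
static unsigned char clamp_u8(int v) { return (unsigned char)(v < 0 ? 0 : v > 255 ? 255 : v); }

/* DC: shift [-512, 512] up by 512, divide by 4, round half up. */
unsigned char dc_to_pixel(int dc)
{
    return clamp_u8((dc + 512 + 2) / 4);
}

/* AC: add 128 so that a zero coefficient maps to mid-grey. */
unsigned char ac_to_pixel(int ac)
{
    return clamp_u8(ac + 128);
}
```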

The relevant code:

int tinyjpeg_decode(struct jdec_private *priv, int pixfmt)

{

#if ACDC_output

unsigned char DCbuf, ACbuf, uvbuf = (unsigned char)128;

float DC;

int k;

int ycount = 0;

#endif

......

decode_MCU(priv);

#if ACDC_output

DC=(priv->component_infos->DCT[0]+512.0)/4;// map DCT[0] from [-512, 512] to 0-255

DCbuf=(unsigned char)(DC+0.5);

fwrite(&DCbuf,1,1,DCFILE);

ACbuf=(unsigned char)(priv->component_infos->DCT[1]+128);

fwrite(&ACbuf,1,1,ACFILE);

ycount++;

#endif

.....

#endif

#if ACDC_output

for (k = 0; k < ycount/4*2; k++)

{

fwrite(&uvbuf, sizeof(unsigned char), 1, DCFILE);

fwrite(&uvbuf, sizeof(unsigned char), 1, ACFILE);//added 4:2:0 output

}

#endif

return 0;

}

The output is shown below:

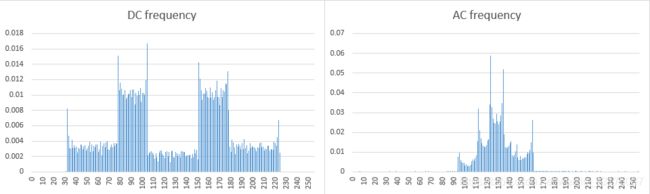

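The AC image mainly shows the edges (regions of change) in the original image. In general, choosing a higher-frequency coefficient such as DCT[2] or DCT[3] as the AC value gives an increasingly flat AC image.

The probability distribution of each resulting image is then analyzed with a separate program:

#include "iostream"

#include

The final results are shown below (the AC image uses DCT[1]):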
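The analysis program above is truncated in this copy. As a stand-in, here is a minimal C sketch (hypothetical `byte_distribution`, not the author's C++ program) that computes the probability of each 8-bit value. Only the first width × height bytes of dc_jpeg.yuv or ac_jpeg.yuv, i.e. the Y plane, should be passed in, since the U and V planes are filled with the constant 128.

```c
#include <assert.h>
#include <stddef.h>

/* p[v] = (number of bytes equal to v) / n, for v = 0..255. */
void byte_distribution(const unsigned char *data, size_t n, double p[256])
{
    assert(n > 0);
    size_t count[256] = {0};
    for (size_t i = 0; i < n; i++)
        count[data[i]]++;
    for (int v = 0; v < 256; v++)
        p[v] = (double)count[v] / (double)n;
}
```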