Cityscapes 格式和COCO格式相互转换脚本(InstancesId.png 和Instance.json相互转换)

代码地址:

【注】 如果你只是想将Cityscapes数据集转换到COCO的json的话可以直接采用Detectron或者maskrcnn-benchmark的脚本就可以直接转换。

本文主要针对要把自己的数据集或者只有Instance标注图片的数据集转换到json格式,更易于嵌入到当下网络中。

数据格式

首先需要解释一下Cityscapes数据集和COCO数据集的格式

以上是原图像

大小为:2048 × \times × 1024 × \times × 3

命名为:frankfurt_000000_000294_leftImg8bit.png (我们的代码不需要按照这个格式)

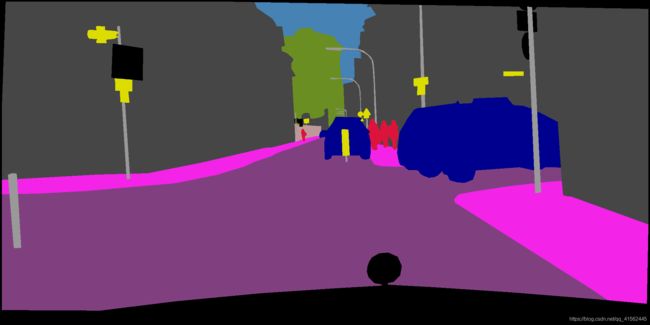

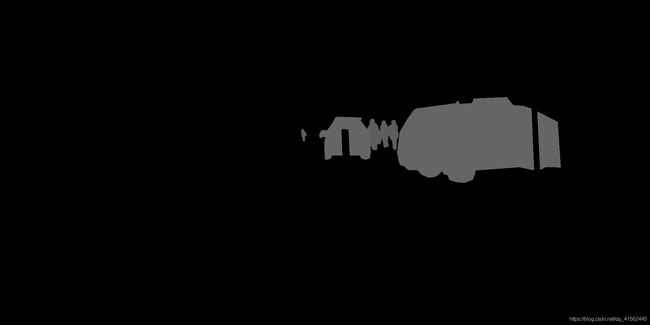

以上是可视化的图像

大小为:2048 × \times × 1024 × \times × 3

命名为:frankfurt_000000_000294_gtFine_color.png (我们的代码不需要此图像)

像素值:array([ 0, 20, 35, 60, 64, 70, 107, 128, 130, 142, 153, 180, 190,220, 232, 244], dtype=uint8) 【用cv2.imread()读图,再用np.unique()输出】

以上是可视化的图像 大小为:2048 $\times$ 1024 $\times$ 3 命名为:frankfurt_000000_000294_gtFine_color.png (我们的代码不需要此图像) 像素值:array([ 0, 20, 35, 60, 64, 70, 107, 128, 130, 142, 153, 180, 190,220, 232, 244], dtype=uint8)

以上是可视化的图像 大小为:2048 $\times$ 1024 $\times$ 3 命名为:frankfurt_000000_000294_gtFine_color.png (我们的代码不需要此图像) 像素值:array([ 0, 20, 35, 60, 64, 70, 107, 128, 130, 142, 153, 180, 190,220, 232, 244], dtype=uint8)

CITYSCAPES转COCO代码如下:

import cv2

import numpy as np

import os, glob

import datetime

import json

import os

import re

import fnmatch

from PIL import Image

import numpy as np

from pycococreatortools import pycococreatortools

ROOT_DIR = '/home/huang/dataset/cityscapes/'

IMAGE_DIR = os.path.join(ROOT_DIR, "leftImg8bit/val/frankfurt")

ANNOTATION_DIR = os.path.join(ROOT_DIR, "gtFine/val/frankfurt")

INSTANCE_DIR = os.path.join(ROOT_DIR, "frankfurt")

# ROOT_DIR = '/home/huang/dataset/iShape/antenna/dataset_ln/train'

# IMAGE_DIR = os.path.join(ROOT_DIR, "image/")

# ANNOTATION_DIR = os.path.join(ROOT_DIR, "instance_map/")

# INSTANCE_DIR = os.path.join(ROOT_DIR, "instance/")

CATEGORIES = [

{

'id': 1,

'name': '1',

'supercategory': '1',

},

]

background_label = list(range(-1, 24, 1)) + list(range(29, 34, 1))

def masks_generator(imges):

idx = 0

for pic_name in imges:

annotation_name = pic_name.split('.')[0] + '.png'

annotation = cv2.imread(os.path.join(ANNOTATION_DIR, annotation_name), -1)

name = pic_name.split('.')[0]

h, w = annotation.shape[:2]

ids = np.unique(annotation)

for id in ids:

if id in background_label:

continue

instance_id = id

class_id = instance_id // 1000

if class_id == 1:

instance_class = '1'

else:

continue

instance_mask = np.zeros((h, w, 3), dtype=np.uint8)

mask = annotation == instance_id

instance_mask[mask] = 255

mask_name = name + '_' + instance_class + '_' + str(idx) + '.png'

cv2.imwrite(os.path.join(INSTANCE_DIR, mask_name), instance_mask)

idx += 1

def filter_for_pic(files):

file_types = ['*.jpeg', '*.jpg', '*.png']

file_types = r'|'.join([fnmatch.translate(x) for x in file_types])

files = [f for f in files if re.match(file_types, f)]

# files = [os.path.join(root, f) for f in files]

return files

def filter_for_instances(root, files, image_filename):

file_types = ['*.png']

file_types = r'|'.join([fnmatch.translate(x) for x in file_types])

files = [f for f in files if re.match(file_types, f)]

basename_no_extension = os.path.splitext(os.path.basename(image_filename))[0]

file_name_prefix = basename_no_extension + '.*'

# files = [os.path.join(root, f) for f in files]

files = [f for f in files if re.match(file_name_prefix, os.path.splitext(os.path.basename(f))[0])]

return files

def main():

# for root, _, files in os.walk(ANNOTATION_DIR):

files = os.listdir(IMAGE_DIR)

image_files = filter_for_pic(files)

masks_generator(image_files)

coco_output = {

"categories": CATEGORIES,

"images": [],

"annotations": []

}

image_id = 1

segmentation_id = 1

files = os.listdir(INSTANCE_DIR)

instance_files = filter_for_pic(files)

# go through each image

for image_filename in image_files:

image_path = os.path.join(IMAGE_DIR, image_filename)

image = Image.open(image_path)

image_info = pycococreatortools.create_image_info(

image_id, os.path.basename(image_filename), image.size)

coco_output["images"].append(image_info)

# filter for associated png annotations

# for root, _, files in os.walk(INSTANCE_DIR):

annotation_files = filter_for_instances(INSTANCE_DIR, instance_files, image_filename)

# go through each associated annotation

for annotation_filename in annotation_files:

annotation_path = os.path.join(INSTANCE_DIR, annotation_filename)

print(annotation_path)

class_id = [x['id'] for x in CATEGORIES if x['name'] in annotation_filename][0]

category_info = {

'id': class_id, 'is_crowd': 'crowd' in image_filename}

binary_mask = np.asarray(Image.open(annotation_path).convert('1')).astype(np.uint8)

annotation_info = pycococreatortools.create_annotation_info(

segmentation_id, image_id, category_info, binary_mask,

image.size, tolerance=2)

if annotation_info is not None:

coco_output["annotations"].append(annotation_info)

segmentation_id = segmentation_id + 1

image_id = image_id + 1

with open('{}/coco_format_instances_2017.json'.format(ROOT_DIR), 'w') as output_json_file:

json.dump(coco_output, output_json_file)

if __name__ == "__main__":

main()

COCO转CITYSCAPES代码如下:

import cv2

import numpy as np

import os

import json

import numpy as np

from collections import defaultdict

import matplotlib.pyplot as plt

ROOT_DIR = "/home/huang/dataset/cells_coco"

ANNOTATION_FILE = os.path.join(ROOT_DIR, "annotations/val.json")

with open(ANNOTATION_FILE, 'r', encoding='utf-8') as train_new:

val = json.load(train_new)

images = [i['id'] for i in val['images']]

img_anno = defaultdict(list)

for anno in val['annotations']:

for img_id in images:

if anno['image_id'] == img_id:

img_anno[img_id].append(anno)

imgid_file = {

}

for im in val['images']:

imgid_file[im['id']] = im['file_name']

for img_idx in img_anno:

instance_png = np.zeros((522, 775), dtype=np.uint8)

for idx, ann in enumerate(img_anno[img_idx]):

im_mask = np.zeros((522, 775), dtype=np.uint8)

mask = []

for an in ann['segmentation']:

ct = np.expand_dims(np.array(an), 0).astype(int)

contour = np.stack((ct[:, ::2], ct[:, 1::2])).T

mask.append(contour)

imm = cv2.drawContours(im_mask, mask, -1, 1, -1)

imm = imm * (1000 * anno['category_id'] + idx)

instance_png = instance_png + imm

cv2.imwrite(os.path.join(ROOT_DIR, imgid_file[img_idx].split('.')[0]+".png"), instance_png)

# plt.imshow(instance_png)