MNIST Handwritten Digit Recognition with Keras and TensorBoard (Full Code Included)

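This post builds the network with Keras's functional API (Model) and uses TensorBoard to plot how various quantities change during training. It also uses a few training and image-processing tricks, described in detail below. (The full code is at the end.)

1. Import the required packages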

from __future__ import print_function, division

from keras.datasets import mnist

from keras.layers import Input, Dense, Reshape, Flatten, Dropout

from keras.layers import BatchNormalization, Activation, ZeroPadding2D, Conv2D, Conv2DTranspose,MaxPooling2D

from keras.layers.advanced_activations import LeakyReLU

from keras.layers.convolutional import UpSampling2D

from keras.models import Sequential, Model

from keras.optimizers import Adam

from keras.utils.np_utils import to_categorical

import matplotlib.pyplot as plt

from keras.preprocessing.image import ImageDataGenerator

from sklearn.model_selection import train_test_split

from keras.callbacks import ReduceLROnPlateau, TensorBoard

import sys

import numpy as np

2. Load the MNIST dataset and preprocess it

2.1 Load the dataset with from keras.datasets import mnist

(x_train, y_train),(x_test, y_test) = mnist.load_data()

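The input to our 2D convolutional network needs four dimensions, (batch_size, height, width, channels), where:

batch_size is the number of images fed in per batch

height is the image height

width is the image width

channels is the number of channels

2.2 Normalization

mnist.load_data() returns images of shape (num_samples, 28, 28), so we need to convert them to the form (batch_size, height, width, channels) and scale the pixel values to the range [0, 1].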

batch_size = 64  # number of samples per batch

epochs = 30  # number of training epochs

(x_train, y_train),(x_test, y_test) = mnist.load_data()  # load the dataset

x_train = np.expand_dims(x_train,axis=3) / 255.  # add a channel dimension and normalize

x_test = np.expand_dims(x_test,axis=3) / 255.
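
To make the shape change concrete, here is a minimal sketch on a stand-in array, so it runs without downloading MNIST. The array fake_images and its size of 5 are assumptions for illustration; only np.expand_dims and the division by 255 come from the code above.

```python
import numpy as np

# Stand-in for x_train: 5 grayscale images of 28x28 pixels, dtype uint8 (hypothetical data).
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

# Add a trailing channel axis, then scale pixel values from [0, 255] to [0, 1].
prepared = np.expand_dims(fake_images, axis=3) / 255.

print(fake_images.shape)  # (5, 28, 28)
print(prepared.shape)     # (5, 28, 28, 1)
print(prepared.dtype)     # float64
```

Dividing a uint8 array by a Python float promotes it to floating point, so no explicit cast is needed before the division.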

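2.3 One-hot encode the labels

The dataset contains the ten digits 0-9, so this is a 10-class classification problem, which fixes the network's final output shape at (batch_size, 10).

The labels y need to be converted to one-hot form, for example [0,1,0,0,0,0,0,0,0,0] for the digit 1. We use to_categorical for this: it converts a vector of integer class labels into a binary (0/1) matrix in which each row is the one-hot encoding of one label.

y_train = to_categorical(y_train, num_classes = 10)  # one-hot encode the labels, e.g. [0,1,0,0,0,0,0,0,0,0]; num_classes is the number of classes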

y_test = to_categorical(y_test, num_classes = 10)
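
As an illustration of what the encoding produces, the following NumPy-only sketch builds the same kind of matrix by indexing an identity matrix. The helper one_hot is a hypothetical name, not part of Keras, and is only meant to show the idea behind to_categorical.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) array with a single 1 per row."""
    labels = np.asarray(labels, dtype=int)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(num_classes, dtype="float32")[labels]

encoded = one_hot([1, 0, 9], num_classes=10)
print(encoded[0])     # [0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]
print(encoded.shape)  # (3, 10)

# argmax along the class axis recovers the original integer labels.
decoded = encoded.argmax(axis=1)
```

The argmax step is also how a predicted probability vector from the softmax output is turned back into a digit.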

2.4 Split into training and validation sets (using train_test_split)

x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size = 0.1, random_state=42)
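
With test_size = 0.1, the 60000 MNIST training images are divided into 54000 for training and 6000 for validation. The sketch below shows the idea of a shuffled split with NumPy; split_indices is a hypothetical helper, and it does not reproduce the exact shuffle order that train_test_split produces for random_state=42.

```python
import numpy as np

def split_indices(n_samples, val_fraction, seed):
    """Shuffle sample indices and split them into train and validation parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_val = int(round(n_samples * val_fraction))
    return order[n_val:], order[:n_val]

train_idx, val_idx = split_indices(n_samples=60000, val_fraction=0.1, seed=42)
print(len(train_idx), len(val_idx))  # 54000 6000
```

Indexing both x_train and y_train with the same index arrays keeps images and labels aligned, which is what train_test_split does when given both arrays at once.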

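3. Build the network with Keras

3.1 Build the neural network

# Start building the network structure

# Initialize the Input

input_ = Input(shape=(28,28,1,))

# Define the convolution, pooling, and Dropout layers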

x = Conv2D(filters=32, kernel_size=(3, 3),activation='relu',kernel_initializer='he_normal')(input_)

x = Conv2D(32, kernel_size=(3, 3),activation='relu',kernel_initializer='he_normal')(x)

x = MaxPooling2D((2, 2))(x)

x = Dropout(0.20)(x)

x = Conv2D(64, (3, 3), activation='relu',padding='same',kernel_initializer='he_normal')(x)

x = Conv2D(64, (3, 3), activation='relu',padding='same',kernel_initializer='he_normal')(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

x = Dropout(0.25)(x)

x = Conv2D(128, (3, 3), activation='relu',padding='same',kernel_initializer='he_normal')(x)

x = Dropout(0.25)(x)

# From here on, switch to fully connected layers

x = Flatten()(x)

x = Dense(128, activation='relu')(x)

x= BatchNormalization()(x)

x = Dropout(0.25)(x)

output= Dense(10,activation='softmax')(x)

model = Model(input_, output)  # create the model

model.summary()  # print the layer and parameter summary

model.compile(optimizer='Adam',  # compile the model

loss = 'categorical_crossentropy',

metrics=['accuracy']

)
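
To check the architecture by hand, the following plain-Python sketch walks through the layers above and computes each output shape and the total parameter count. It assumes the Keras defaults of stride 1 and 'valid' padding where padding is not given; the helper names are hypothetical. The totals should match what model.summary() reports.

```python
def conv_out(size, kernel, padding):
    """Spatial output size of a stride-1 convolution."""
    return size if padding == "same" else size - kernel + 1

def conv_params(kernel, in_ch, out_ch):
    """Kernel weights plus one bias per output channel."""
    return kernel * kernel * in_ch * out_ch + out_ch

# (layer type, output channels, padding) in the order used above
layers = [
    ("conv", 32, "valid"),
    ("conv", 32, "valid"),
    ("pool", None, None),
    ("conv", 64, "same"),
    ("conv", 64, "same"),
    ("pool", None, None),
    ("conv", 128, "same"),
]

size, channels, total = 28, 1, 0
for kind, out_ch, padding in layers:
    if kind == "conv":
        total += conv_params(3, channels, out_ch)
        size, channels = conv_out(size, 3, padding), out_ch
    else:  # 2x2 max pooling halves height and width
        size //= 2
    print(kind, (size, size, channels))

flat = size * size * channels  # Flatten
total += flat * 128 + 128      # Dense(128)
total += 4 * 128               # BatchNormalization: gamma, beta, moving mean, moving variance
total += 128 * 10 + 10         # Dense(10)
print(flat, total)             # 4608 730602
```

Dropout layers add no parameters, and most of the weights sit in the first Dense layer (4608 * 128 + 128 = 589952).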

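3.2 Augment the images

This step improves the model's generalization by producing more varied training samples. Because the generator yields small batches on the fly, it also keeps memory use low. Here each image is randomly rotated by up to 15 degrees, zoomed by up to 10%, and shifted horizontally and vertically by up to 0.1 times the image width and height.

Create the generator with ImageDataGenerator: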

datagen = ImageDataGenerator(

featurewise_center=False, # set input mean to 0 over the dataset

samplewise_center=False, # set each sample mean to 0

featurewise_std_normalization=False, # divide inputs by std of the dataset

samplewise_std_normalization=False, # divide each input by its std

zca_whitening=False, # apply ZCA whitening

rotation_range=15, # randomly rotate images in the range (degrees, 0 to 180)

zoom_range = 0.1, # Randomly zoom image

width_shift_range=0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip=False, # randomly flip images

vertical_flip=False) # randomly flip images

datagen.fit(x_train)
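
To show what these ranges mean in practice, here is a small sketch that draws one set of random transform parameters the way the settings above describe them. sample_augmentation is a hypothetical helper and a simplification: it uses a single zoom factor, and it does not apply the transform to an image.

```python
import random

def sample_augmentation(rng, rotation_range=15, zoom_range=0.1,
                        width_shift_range=0.1, height_shift_range=0.1,
                        width=28, height=28):
    """Draw one set of random transform parameters within the configured ranges."""
    return {
        "rotation_deg": rng.uniform(-rotation_range, rotation_range),
        "zoom": rng.uniform(1 - zoom_range, 1 + zoom_range),
        "shift_x_px": rng.uniform(-width_shift_range, width_shift_range) * width,
        "shift_y_px": rng.uniform(-height_shift_range, height_shift_range) * height,
    }

rng = random.Random(0)
samples = [sample_augmentation(rng) for _ in range(1000)]
print(samples[0])
```

For 28x28 images, a shift range of 0.1 corresponds to at most 2.8 pixels in each direction, which is small enough to keep the digit inside the frame.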

3.3 Save logs with TensorBoard

tbCallBack = TensorBoard(log_dir="./model",histogram_freq=5,write_grads=True)  # write the log files to the ./model directory

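3.4 Use ReduceLROnPlateau to update the learning rate during training

This callback lowers the learning rate when the monitored metric has stopped improving for a given number of epochs. The metric name val_acc matches the training log shown in section 4.1; Keras 2.3 and later report it as val_accuracy.

learning_rate_reduction = ReduceLROnPlateau(monitor='val_acc',  # metric to monitor

patience=3,  # reduce the learning rate after 3 epochs without improvement

verbose=1,  # print a message on each update

factor=0.5,  # lr = 0.5 * lr

min_lr=0.0001)  # lower bound on the learning rate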
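
The schedule this produces can be sketched in plain Python. The function below is a simplified model of the callback's logic, not the Keras implementation: it ignores min_delta and cooldown, and the starting learning rate of 0.001 is an assumption based on Adam's default. The val_acc values are made up for illustration.

```python
def simulate_reduce_lr(val_acc_history, lr=0.001, factor=0.5,
                       patience=3, min_lr=0.0001):
    """Return the learning rate in effect after each epoch's check."""
    best = float("-inf")
    wait = 0
    lrs = []
    for acc in val_acc_history:
        if acc > best:
            # The metric improved: remember it and reset the counter.
            best = acc
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                # No improvement for `patience` epochs: shrink lr, but not below min_lr.
                lr = max(lr * factor, min_lr)
                wait = 0
        lrs.append(lr)
    return lrs

# Hypothetical validation accuracies that plateau twice.
history = [0.98, 0.99, 0.99, 0.99, 0.99, 0.991, 0.99, 0.99, 0.99]
lrs = simulate_reduce_lr(history)
print(lrs)
```

Starting from 0.001 with factor 0.5, repeated halving gives 0.0005, 0.00025 and 0.000125, after which min_lr clamps the value at 0.0001.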

3.5 Train and test the model

history = model.fit_generator(datagen.flow(x_train,y_train, batch_size=batch_size),

epochs = epochs, validation_data = (x_val,y_val),

verbose = 1, steps_per_epoch=x_train.shape[0] // batch_size

, callbacks=[learning_rate_reduction,tbCallBack])

model.evaluate(x_test, y_test)
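
The steps_per_epoch argument is plain integer arithmetic, worked through below with the values from this post (54000 training images after the validation split, batch size 64).

```python
n_train = 54000  # 60000 MNIST training images minus the 10% validation split
batch_size = 64

# Floor division: only full batches are counted toward one epoch.
steps_per_epoch = n_train // batch_size
leftover = n_train - steps_per_epoch * batch_size
print(steps_per_epoch, leftover)  # 843 48
```

Each epoch therefore runs 843 batches, and the 48 images that do not fill a whole batch fall outside that count for the epoch.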

4. Final results

4.1 Training results

loss: 0.0168 - acc: 0.9947 - val_loss: 0.0154 - val_acc: 0.9957

4.2 Test set results

The test set accuracy reaches 0.9958; model.evaluate returns [loss, accuracy]:

[0.012207253835405572, 0.9958]

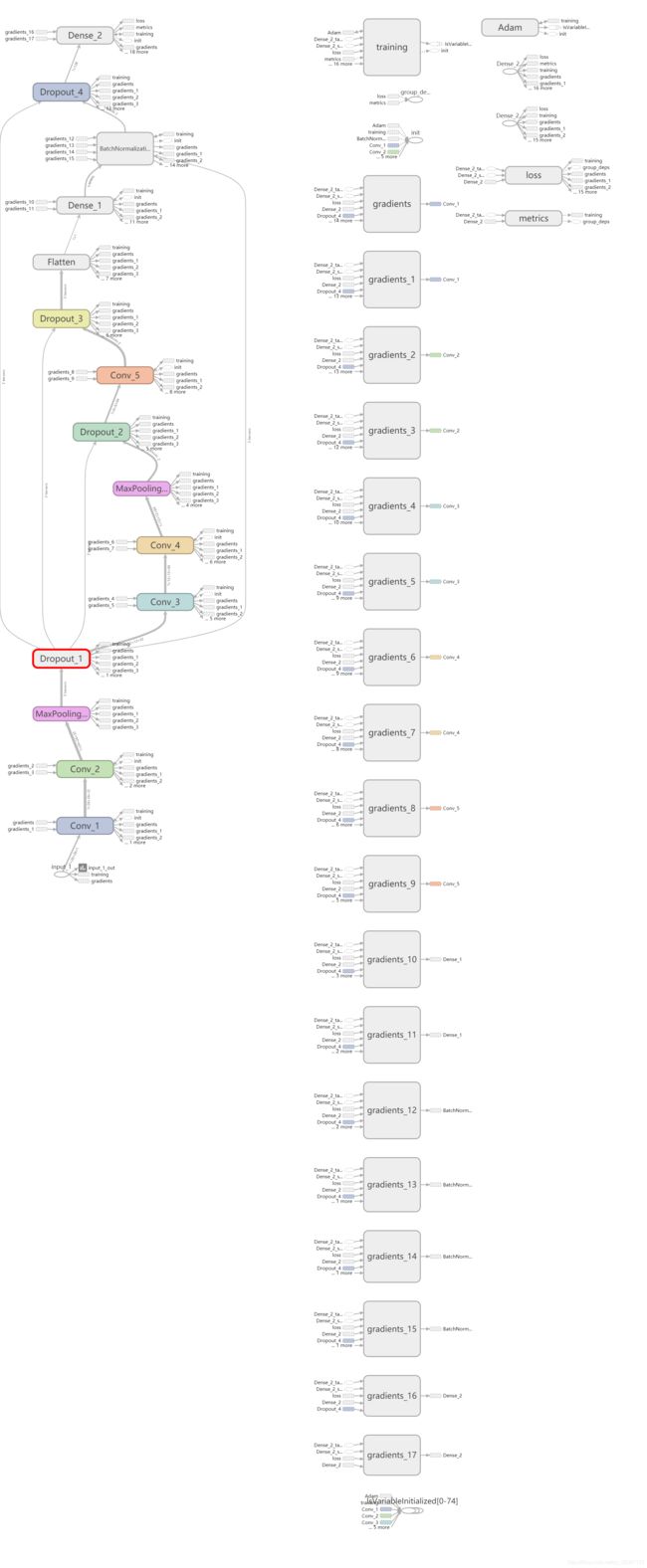
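
To put the accuracy in concrete terms, this short calculation converts it into a number of misclassified images, assuming the standard MNIST test set of 10000 images.

```python
result = [0.012207253835405572, 0.9958]  # values reported above: [loss, accuracy]
n_test = 10000                           # size of the MNIST test set

loss, accuracy = result
misclassified = round((1 - accuracy) * n_test)
print(misclassified)  # 42
```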

4.3 TensorBoard visualization: model graph

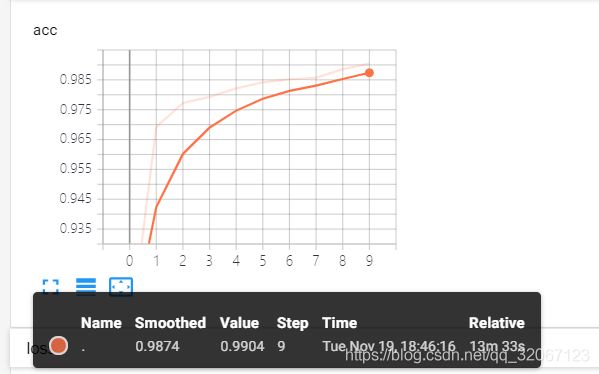

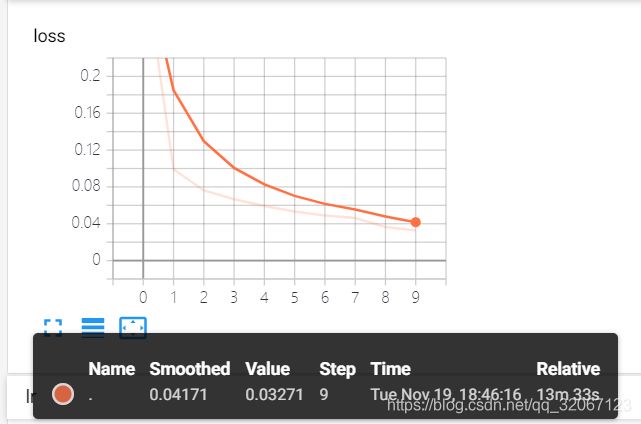

4.3 tensorboard可视化—loss、acc、lr

4.3.1 训练集的acc、loss:

4.3.2 lr

4.3.3 测试集的acc、loss

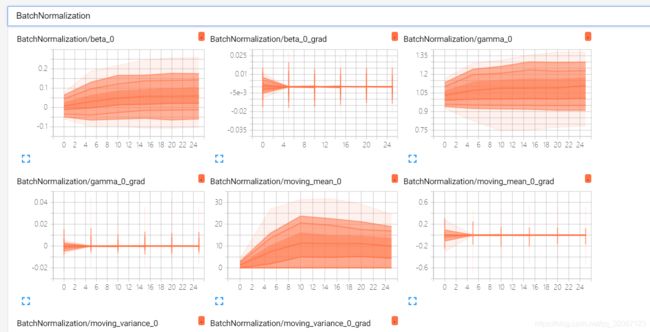

4.3 tensorboard可视化—权重和偏置值(以BN的为例)

5. Full code

from __future__ import print_function, division

from keras.datasets import mnist

from keras.layers import Input, Dense, Reshape, Flatten, Dropout

from keras.layers import BatchNormalization, Activation, ZeroPadding2D, Conv2D, Conv2DTranspose,MaxPooling2D

from keras.layers.advanced_activations import LeakyReLU

from keras.layers.convolutional import UpSampling2D

from keras.models import Sequential, Model

from keras.optimizers import Adam

from keras.utils.np_utils import to_categorical

import matplotlib.pyplot as plt

from keras.preprocessing.image import ImageDataGenerator

from sklearn.model_selection import train_test_split

from keras.callbacks import ReduceLROnPlateau

import sys

from keras.callbacks import TensorBoard

import numpy as np

input_ = Input(shape=(28,28,1,))

x = Conv2D(filters=32, kernel_size=(3, 3),activation='relu',kernel_initializer='he_normal',name='Conv_1')(input_)

x = Conv2D(32, kernel_size=(3, 3),activation='relu',kernel_initializer='he_normal',name='Conv_2')(x)

x = MaxPooling2D((2, 2),name='MaxPooling_1')(x)

x = Dropout(0.20,name='Dropout_1')(x)

x = Conv2D(64, (3, 3), activation='relu',padding='same',kernel_initializer='he_normal',name='Conv_3')(x)

x = Conv2D(64, (3, 3), activation='relu',padding='same',kernel_initializer='he_normal',name='Conv_4')(x)

x = MaxPooling2D(pool_size=(2, 2),name='MaxPooling_2')(x)

x = Dropout(0.25,name='Dropout_2')(x)

x = Conv2D(128, (3, 3), activation='relu',padding='same',kernel_initializer='he_normal',name='Conv_5')(x)

x = Dropout(0.25,name='Dropout_3')(x)

x = Flatten(name='Flatten')(x)

x = Dense(128, activation='relu',name='Dense_1')(x)

x= BatchNormalization(name='BatchNormalization')(x)

x = Dropout(0.25,name='Dropout_4')(x)

output= Dense(10,activation='softmax',name='Dense_2')(x)

model = Model(input_, output)

model.summary()

model.compile(optimizer='Adam',

loss = 'categorical_crossentropy',

metrics=['accuracy']

)

datagen = ImageDataGenerator(

featurewise_center=False, # set input mean to 0 over the dataset

samplewise_center=False, # set each sample mean to 0

featurewise_std_normalization=False, # divide inputs by std of the dataset

samplewise_std_normalization=False, # divide each input by its std

zca_whitening=False, # apply ZCA whitening

rotation_range=15, # randomly rotate images in the range (degrees, 0 to 180)

zoom_range = 0.1, # Randomly zoom image

width_shift_range=0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip=False, # randomly flip images

vertical_flip=False) # randomly flip images

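learning_rate_reduction = ReduceLROnPlateau(monitor='val_acc',  # metric to monitor

patience=3,  # reduce the learning rate after 3 epochs without improvement

verbose=1,  # print a message on each update

factor=0.5,  # lr = 0.5 * lr

min_lr=0.0001)  # lower bound on the learning rate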

batch_size = 60

epochs = 30

(x_train, y_train),(x_test, y_test) = mnist.load_data()

x_train = np.expand_dims(x_train,axis=3) / 255.

x_test = np.expand_dims(x_test,axis=3) / 255.

y_train = to_categorical(y_train, num_classes = 10)  # one-hot encode the labels, e.g. [0,1,0,0,0,0,0,0,0,0]

y_test = to_categorical(y_test, num_classes = 10)

x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size = 0.1, random_state=42)

tbCallBack = TensorBoard(log_dir="./model1",histogram_freq=5,write_grads=True)

datagen.fit(x_train)

history = model.fit_generator(datagen.flow(x_train,y_train, batch_size=batch_size),

epochs = epochs, validation_data = (x_val,y_val),

verbose = 1, steps_per_epoch=x_train.shape[0] // batch_size

, callbacks=[learning_rate_reduction,tbCallBack])

model.evaluate(x_test, y_test)