Cat vs. Dog Classification with AlexNet


Preface

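I ran into a lot of problems while training this network, so let me grumble a little first: I don't have the hardware to work with the huge ImageNet 2012 dataset. As a result I took a few detours looking for a suitable dataset and finally settled on the cat-and-dog dataset hosted on Kaggle, since a binary classification problem should be somewhat easier to train. I have put the experiment results and code on Kaggle (the page sometimes fails to load). The .ipynb file is also available on Baidu Cloud; after downloading it, open it with Jupyter. The links are below: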

| Content | Link |
|---|---|
| Dataset | link |
| Kaggle experiment notebook | link |
| Baidu Cloud .ipynb file | link (extraction code: di7c) |

OK, let's get started!

Introduction to AlexNet

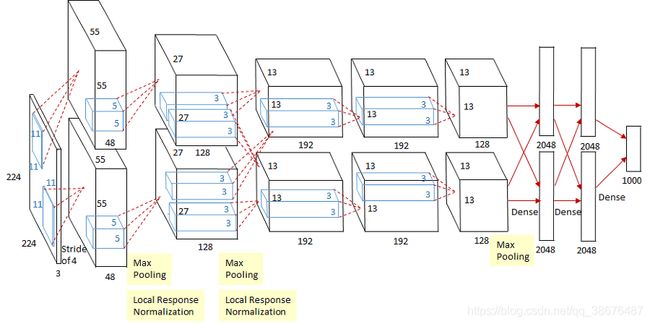

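AlexNet is a convolutional neural network proposed in 2012 by Alex Krizhevsky, Ilya Sutskever and Geoffrey E. Hinton. It won the ILSVRC 2012 image classification challenge and kicked off the deep learning boom. As usual with neural networks, a diagram is the most intuitive way to see the architecture.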

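It looks complicated, but much of that comes from the hardware limits of the time: the network was split into two halves trained on two GPUs, with each GPU holding half of the convolution kernels. Compared with LeNet, covered in the previous post, it brings several improvements, summarized in the table below: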

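| Technique | AlexNet | LeNet |
|---|---|---|
| ReLU, multiple GPUs | Faster training | Slower training |
| Local response normalization | Improves accuracy, reduces overfitting | Not used |
| Data augmentation, dropout | Reduces overfitting | Not used |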
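Much of the "faster training" row comes from ReLU. The AlexNet paper reports that on CIFAR-10 a four-layer network with ReLUs reached 25% training error six times faster than the same network with tanh units. The plain-Python sketch below is for illustration only. It compares the derivative of the sigmoid, which never exceeds 0.25 and shrinks toward 0 for large inputs, with the derivative of ReLU, which stays at 1 for every positive input.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    # d/dx sigmoid(x) = s * (1 - s); its maximum is 0.25, reached at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    # 1 for positive inputs, 0 otherwise (0 is used at x = 0 here)
    return 1.0 if x > 0 else 0.0


for x in (0.0, 2.0, 5.0):
    print(f"x={x}: sigmoid'={sigmoid_grad(x):.4f}, relu'={relu_grad(x)}")
```

Because the gradient flowing back through a saturated sigmoid is multiplied by a small factor at every layer, deep sigmoid networks learn slowly, while ReLU passes the gradient through unchanged wherever the unit is active.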

The controversial item is Local Response Normalization (LRN): later work showed that it contributes little. I also used LRN in my first experiment, but the results were poor, so I switched to Batch Normalization (BN).
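For reference, the AlexNet paper defines LRN across channels at each spatial position. Each activation a_i is divided by (k + α · Σ a_j²)^β, where the sum runs over the n neighbouring channels j from max(0, i − n/2) to min(N − 1, i + n/2). The paper uses k = 2, n = 5, α = 10⁻⁴ and β = 0.75. The plain-Python sketch below applies that formula to the channel values at a single pixel. It illustrates the formula only and is not TensorFlow's implementation.

```python
def lrn_across_channels(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Local response normalization at one spatial position.

    a: list of activations, one per channel (length N). Defaults are the
    constants reported in the AlexNet paper.
    """
    N = len(a)
    out = []
    for i in range(N):
        lo = max(0, i - n // 2)        # first neighbouring channel
        hi = min(N - 1, i + n // 2)    # last neighbouring channel
        sq_sum = sum(a[j] ** 2 for j in range(lo, hi + 1))
        out.append(a[i] / (k + alpha * sq_sum) ** beta)
    return out


print(lrn_across_channels([1.0, 2.0, 3.0]))
```

`tf.nn.local_response_normalization` computes the same kind of expression, with `depth_radius` playing the role of n/2. As far as I know, its defaults are `depth_radius=5, bias=1, alpha=1, beta=0.5`. The commented-out `Lambda(tf.nn.local_response_normalization)` lines in the model code below therefore do not use the paper's constants unless those are passed explicitly.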

Network Architecture

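The structure can be read in detail from the figure, so I won't repeat it here. Instead, here is the summary of the AlexNet model built with Keras. This summary was generated with the default `classes=1000`, hence the 1000-unit output layer: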

```
Model: "AlexNet"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_3 (InputLayer)         [(None, 224, 224, 3)]     0
_________________________________________________________________
zero_padding2d_2 (ZeroPaddin (None, 227, 227, 3)       0
_________________________________________________________________
conv_block_1 (Conv2D)        (None, 55, 55, 96)        34944
_________________________________________________________________
max_pooling_1 (MaxPooling2D) (None, 27, 27, 96)        0
_________________________________________________________________
batch_normalization_12 (Batc (None, 27, 27, 96)        384
_________________________________________________________________
conv_block_2 (Conv2D)        (None, 27, 27, 256)       614656
_________________________________________________________________
max_pooling_2 (MaxPooling2D) (None, 13, 13, 256)       0
_________________________________________________________________
batch_normalization_13 (Batc (None, 13, 13, 256)       1024
_________________________________________________________________
conv_block_3 (Conv2D)        (None, 13, 13, 384)       885120
_________________________________________________________________
max_pooling_3 (MaxPooling2D) (None, 6, 6, 384)         0
_________________________________________________________________
batch_normalization_14 (Batc (None, 6, 6, 384)         1536
_________________________________________________________________
conv_block_4 (Conv2D)        (None, 6, 6, 384)         1327488
_________________________________________________________________
conv_block_5 (Conv2D)        (None, 6, 6, 256)         884992
_________________________________________________________________
max_pooling_5 (MaxPooling2D) (None, 2, 2, 256)         0
_________________________________________________________________
batch_normalization_15 (Batc (None, 2, 2, 256)         1024
_________________________________________________________________
flatten (Flatten)            (None, 1024)              0
_________________________________________________________________
fc_1 (Dense)                 (None, 4096)              4198400
_________________________________________________________________
dropout_1 (Dropout)          (None, 4096)              0
_________________________________________________________________
batch_normalization_16 (Batc (None, 4096)              16384
_________________________________________________________________
fc_2 (Dense)                 (None, 4096)              16781312
_________________________________________________________________
dropout_2 (Dropout)          (None, 4096)              0
_________________________________________________________________
batch_normalization_17 (Batc (None, 4096)              16384
_________________________________________________________________
dense_2 (Dense)              (None, 1000)              4097000
=================================================================
Total params: 28,860,648
Trainable params: 28,842,280
Non-trainable params: 18,368
```

The summary shows the output shape and parameter count of every layer. Since we are not training on ImageNet 2012, a single GPU is enough here.
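The shapes and parameter counts in the summary can be checked by hand. A convolution or pooling layer maps a side length W to floor((W + 2P − K) / S) + 1. A convolution has K·K·C_in·C_out weights plus C_out biases. The plain-Python sketch below replays the layer settings from the model code. It assumes that Keras' `'same'` mode with stride 1 amounts to padding 2 for 5×5 kernels and padding 1 for 3×3 kernels. It also explains why the flattened vector has only 1024 elements. This version adds `max_pooling_3` after `conv_block_3`, so the last feature map is 2×2×256. The original paper pools only after the first, second and fifth convolutions and ends at 6×6×256.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv/pool layer: floor((W + 2P - K) / S) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_params(kernel, c_in, c_out):
    """Weights (kernel * kernel * c_in * c_out) plus one bias per filter."""
    return kernel * kernel * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out


# (layer name, kernel, stride, padding) as in the model code;
# padding 2 / 1 is what 'same' means for 5x5 / 3x3 kernels with stride 1
LAYERS = [
    ("conv_block_1", 11, 4, 0),
    ("max_pooling_1", 3, 2, 0),
    ("conv_block_2", 5, 1, 2),
    ("max_pooling_2", 3, 2, 0),
    ("conv_block_3", 3, 1, 1),
    ("max_pooling_3", 3, 2, 0),
    ("conv_block_4", 3, 1, 1),
    ("conv_block_5", 3, 1, 1),
    ("max_pooling_5", 3, 2, 0),
]


def trace_sizes(size=227):
    """Side length after each layer, starting from the zero-padded 227x227 input."""
    sizes = {}
    for name, kernel, stride, padding in LAYERS:
        size = conv_out(size, kernel, stride, padding)
        sizes[name] = size
    return sizes


sizes = trace_sizes()
flat = sizes["max_pooling_5"] ** 2 * 256
print(sizes)
print("flatten:", flat)
print("conv_block_1 params:", conv_params(11, 3, 96))
print("fc_1 params:", dense_params(flat, 4096))
```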

Notes

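The input images are 224x224x3 (HWC), but the first layer actually operates on 227x227x3 (HWC) images. An 11x11 convolution with stride 4 and no padding yields exactly (227 − 11) / 4 + 1 = 55 positions per side on a 227x227 input, which matches the 55x55 output of the first layer. The input is therefore zero-padded first: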

```python
x = ZeroPadding2D(((3, 0), (3, 0)))(img_input)
```
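`ZeroPadding2D(((3, 0), (3, 0)))` adds 3 rows at the top and 3 columns on the left only, so each side grows from 224 to 227. The plain-Python sketch below mimics that on a tiny 2-D list. The helper name `pad_top_left` is made up for illustration. The sketch also checks the stride arithmetic of the 11×11, stride-4 first convolution: 227 gives a whole number of positions, while 224 does not.

```python
def pad_top_left(image, rows, cols, value=0):
    """Hypothetical helper mimicking ZeroPadding2D(((rows, 0), (cols, 0))) on an H x W list."""
    width = len(image[0]) + cols
    top = [[value] * width for _ in range(rows)]           # new rows above the image
    body = [[value] * cols + list(row) for row in image]   # new columns on the left
    return top + body


def positions(size, kernel=11, stride=4):
    """(size - kernel) / stride + 1 as a float; a whole number means no pixels are left over."""
    return (size - kernel) / stride + 1


padded = pad_top_left([[1, 2], [3, 4]], rows=3, cols=3)
for row in padded:
    print(row)
print(positions(227), positions(224))
```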

Here is the full code:

- Building the network model:

```python
# Define the network architecture
import tensorflow as tf
from tensorflow.keras.layers import (Conv2D, Flatten, Lambda, MaxPooling2D, Dropout,
                                     Input, Dense, ZeroPadding2D, BatchNormalization)
# Public tf.keras APIs instead of the private tensorflow.python.keras modules
from tensorflow.keras import backend, initializers
from tensorflow.keras.models import Model
from tensorflow.keras.utils import get_source_inputs


def alexnet(input_shape=(224, 224, 3), input_tensor=None, classes=1000):
    if input_tensor is None:
        img_input = Input(shape=input_shape)
    else:
        if not backend.is_keras_tensor(input_tensor):
            img_input = Input(tensor=input_tensor, shape=input_shape)
        else:
            img_input = input_tensor

    # Pad 224x224 to 227x227: 3 rows on top, 3 columns on the left
    x = ZeroPadding2D(((3, 0), (3, 0)))(img_input)

    # Block 1
    x = Conv2D(filters=96, kernel_size=(11, 11),
               kernel_initializer=initializers.RandomNormal(stddev=0.01),
               strides=4, padding='valid', activation='relu', name='conv_block_1')(x)
    x = MaxPooling2D(pool_size=(3, 3), strides=2, name='max_pooling_1')(x)
    # x = Lambda(tf.nn.local_response_normalization, name='lrn_1')(x)
    x = BatchNormalization()(x)

    # Block 2
    x = Conv2D(filters=256, kernel_size=(5, 5),
               kernel_initializer=initializers.RandomNormal(stddev=0.01),
               strides=1, padding='same', activation='relu', name='conv_block_2')(x)
    x = MaxPooling2D(pool_size=(3, 3), strides=2, name='max_pooling_2')(x)
    # x = Lambda(tf.nn.local_response_normalization, name='lrn_2')(x)
    x = BatchNormalization()(x)

    # Block 3
    x = Conv2D(filters=384, kernel_size=(3, 3),
               kernel_initializer=initializers.RandomNormal(stddev=0.01),
               strides=1, padding='same', activation='relu', name='conv_block_3')(x)
    x = MaxPooling2D(pool_size=(3, 3), strides=2, name='max_pooling_3')(x)
    x = BatchNormalization()(x)

    # Blocks 4 and 5
    x = Conv2D(filters=384, kernel_size=(3, 3),
               kernel_initializer=initializers.RandomNormal(stddev=0.01),
               strides=1, padding='same', activation='relu', name='conv_block_4')(x)
    x = Conv2D(filters=256, kernel_size=(3, 3),
               kernel_initializer=initializers.RandomNormal(stddev=0.01),
               strides=1, padding='same', activation='relu', name='conv_block_5')(x)
    x = MaxPooling2D(pool_size=(3, 3), strides=2, name='max_pooling_5')(x)
    x = BatchNormalization()(x)

    # Flatten the convolutional feature maps into a vector for the fully connected layers
    x = Flatten(name='flatten')(x)

    # Fully connected layers
    x = Dense(4096, name='fc_1', activation='relu')(x)
    x = Dropout(0.4, name='dropout_1')(x)
    x = BatchNormalization()(x)
    x = Dense(4096, name='fc_2', activation='relu')(x)
    x = Dropout(0.4, name='dropout_2')(x)
    x = BatchNormalization()(x)
    x = Dense(classes, activation='softmax')(x)

    if input_tensor is not None:
        inputs = get_source_inputs(input_tensor)
    else:
        inputs = img_input

    model = Model(inputs, x, name='AlexNet')
    return model


model = alexnet(classes=2)
model.summary()
```

The commented-out lines in the code show how LRN would be used; feel free to experiment with them if you're interested.

- Loading the dataset:

Here I use the Kaggle online platform and its hosted dataset directly. Remember to change the paths if you run it yourself.

```python
import os

# Paths on Kaggle; change them to match your own environment
train_data_dir = r'../input/cat-and-dog/training_set/training_set'
test_data_dir = r'../input/cat-and-dog/test_set/test_set'

IMG_WEIGHT = 224   # image width
IMG_HEIGHT = 224
IMG_CHANEL = 3

floders = os.listdir(train_data_dir)
NUM_Categories = len(os.listdir(train_data_dir))
print(NUM_Categories)  # there should be 2 classes

for floder in floders:
    path = train_data_dir + '/' + floder
    print(floder.split('-')[-1])  # check that the labels are read correctly

import cv2
from PIL import Image
import numpy as np

# os.listdir order is arbitrary: the test set must list its class folders in the same order
floders = os.listdir(train_data_dir)
image_data = []    # stores the images
image_labels = []  # stores the labels
type_dict = {}     # maps label index -> class name
index = -1         # label index / dictionary key

for floder in floders:
    index += 1  # labels start from 0
    path = train_data_dir + '/' + floder
    print('loading ' + path)
    type_dict[index] = floder.split('-')[-1]
    images = os.listdir(path)
    for img in images:
        try:  # skip files that cannot be read as images
            # cv2.imread returns BGR; the test set is loaded the same way, so channels stay consistent
            image = cv2.imread(path + '/' + img)
            img_fromarray = Image.fromarray(image, 'RGB')
            img_resize = img_fromarray.resize((IMG_WEIGHT, IMG_HEIGHT))
            image_data.append(np.array(img_resize))
            image_labels.append(index)
        except Exception as err:  # e.g. cv2.imread returned None for a non-image file
            print(err)
            print('Error in ' + img)
```
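The loop above assigns label indices in whatever order `os.listdir` returns the class folders. The test-set loop later does the same, so the labels only line up if both directories list their folders in the same order. A more defensive variant is sketched below using only the standard library. It sorts the folder names and filters by file extension instead of relying on try/except. The function `collect_samples` and the extension set are assumptions for illustration. The images would still be read and resized as in the code above.

```python
import os

IMAGE_EXTS = {".jpg", ".jpeg", ".png"}  # assumed set of image extensions


def collect_samples(root):
    """Return ([(path, label), ...], {label: class_name}) for root/<class>/<image>.

    Class folders are sorted, so the same folder names always get the same labels.
    """
    class_names = sorted(
        d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))
    )
    label_map = {i: name for i, name in enumerate(class_names)}
    samples = []
    for label, name in label_map.items():
        folder = os.path.join(root, name)
        for fname in sorted(os.listdir(folder)):
            # skip stray non-image files instead of catching read errors later
            if os.path.splitext(fname)[1].lower() in IMAGE_EXTS:
                samples.append((os.path.join(folder, fname), label))
    return samples, label_map
```

Calling it on the training and test directories gives identical `{0: ..., 1: ...}` mappings, as long as both contain the same class folder names.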

- Data conversion and train/validation split:

```python
import numpy as np
from tensorflow import keras
from sklearn.model_selection import train_test_split

image_data = np.array(image_data, np.float32)
image_labels = np.array(image_labels, np.int64)  # np.int was removed in NumPy 1.24
print(image_data.shape, image_labels.shape)

X_train, X_val, y_train, y_val = train_test_split(
    image_data, image_labels, train_size=0.7, random_state=42, shuffle=True)

del image_data    # free variables that are no longer needed to avoid running out of memory
del image_labels

X_train = X_train / 255.0  # normalize to [0, 1]
X_val = X_val / 255.0

y_train = keras.utils.to_categorical(y_train, NUM_Categories)  # one-hot labels
y_val = keras.utils.to_categorical(y_val, NUM_Categories)

print("X_train.shape", X_train.shape)
print("X_valid.shape", X_val.shape)
print("y_train.shape", y_train.shape)
print("y_valid.shape", y_val.shape)
```
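The `del` statements matter because these arrays are large. An (N, 224, 224, 3) float32 array needs N × 224 × 224 × 3 × 4 bytes. `X_train / 255.0` allocates a new array instead of dividing in place, so both copies exist for a moment. The sketch below just does that arithmetic. N = 8000 is a hypothetical round number, not the exact size of the dataset.

```python
def array_nbytes(n_images, height=224, width=224, channels=3, itemsize=4):
    """Bytes for an (n, height, width, channels) array; itemsize 4 = float32, 1 = uint8."""
    return n_images * height * width * channels * itemsize


GIB = 1024 ** 3
n = 8000  # hypothetical image count, for illustration only

print(f"float32: {array_nbytes(n) / GIB:.2f} GiB")
print(f"uint8:   {array_nbytes(n, itemsize=1) / GIB:.2f} GiB")
```

Two common ways to lower that peak are keeping the images as uint8 until they are fed to the model, or dividing a float32 array in place with `X_train /= 255.0`. Both are suggestions, not what the original notebook does.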

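- Visualizing the number of samples per class: the goal is to check the proportion of each class. A class with too few samples would slow convergence and give the model too few examples to learn that class's features, which hurts generalization.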

```python
import os
import matplotlib.pyplot as plt


def visual_train_data(train_path, classes):
    """
    :param train_path: path to the training data
    :param classes: label dictionary, e.g. classes = {0: 'Speed limit (20km/h)',
                                                      1: 'Speed limit (30km/h)',
                                                      2: 'Speed limit (50km/h)',
                                                      3: 'Speed limit (60km/h)',
                                                      4: 'Speed limit (70km/h)'}
    :return: None
    """
    folders = os.listdir(train_path)
    train_num = []
    class_num = []
    index = 0
    for folder in folders:
        train_files = os.listdir(train_path + '/' + folder)
        train_num.append(len(train_files))
        class_num.append(classes[index])
        index += 1
    zipped_lists = zip(train_num, class_num)
    sorted_pair = sorted(zipped_lists)  # sort by image count
    tuples = zip(*sorted_pair)  # unzip back into two sequences
    # A roundabout way to do it: zip, sort, unzip, then iterate over the tuples
    train_num, class_num = [list(t) for t in tuples]
    plt.figure(figsize=(21, 10))
    plt.bar(class_num, train_num)
    plt.xticks(class_num, rotation='vertical')
    plt.show()


visual_train_data(train_data_dir, type_dict)
```
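The zip/sort/unzip steps in `visual_train_data` simply sort the class names by image count. A more direct equivalent is sketched below. It sorts by count and then by name, just like sorting `(count, name)` tuples. It also adds a simple imbalance ratio (largest class divided by smallest) that summarizes what the bar chart is meant to reveal. Both helpers are hypothetical additions, and the counts in the example are made up.

```python
def sort_classes_by_count(counts):
    """counts: {class_name: n_images}. Returns (names, numbers) sorted by count, then name."""
    pairs = sorted(counts.items(), key=lambda item: (item[1], item[0]))
    names = [name for name, _ in pairs]
    numbers = [num for _, num in pairs]
    return names, numbers


def imbalance_ratio(counts):
    """Largest class size divided by smallest; 1.0 means perfectly balanced."""
    return max(counts.values()) / min(counts.values())


example = {"dogs": 120, "cats": 100}  # made-up counts for illustration
print(sort_classes_by_count(example))
print(imbalance_ratio(example))
```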

- Training configuration:

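```python
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.optimizers import Adam

lr = 0.0001
epochs = 20
# The old Adam(lr=..., decay=...) arguments are deprecated and newer TF/Keras versions reject `decay`;
# InverseTimeDecay with decay_steps=1 gives the same lr / (1 + decay * step) schedule
lr_schedule = tf.keras.optimizers.schedules.InverseTimeDecay(
    initial_learning_rate=lr, decay_steps=1, decay_rate=lr / (epochs / 0.5))
opt = Adam(learning_rate=lr_schedule)

model = alexnet(classes=2)
model.compile(loss='categorical_crossentropy', optimizer=opt, metrics=['acc'])

aug = ImageDataGenerator(  # data augmentation to improve generalization
    rotation_range=10,
    zoom_range=0.15,
    width_shift_range=0.1,
    height_shift_range=0.1,
    shear_range=0.15,
    horizontal_flip=False,
    vertical_flip=False,
    fill_mode='nearest'
)

# Note: `aug` is not passed to fit(), so no augmentation is applied in this run.
# To use it: model.fit(aug.flow(X_train, y_train, batch_size=50), epochs=epochs, validation_data=(X_val, y_val))
history = model.fit(X_train, y_train, batch_size=50,
                    epochs=epochs, validation_data=(X_val, y_val))
```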
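How much does `decay = lr / (epochs / 0.5)` actually change the learning rate? In the legacy Keras optimizers, the decay is applied per update step as lr_t = lr / (1 + decay · t). `InverseTimeDecay` uses the same formula when `decay_steps=1`. The sketch below evaluates it. `steps_per_epoch = 112` is a hypothetical value (roughly 5,600 training images at batch size 50), so treat the result as an order-of-magnitude check.

```python
def legacy_decayed_lr(initial_lr, decay, step):
    """Learning rate after `step` updates: initial_lr / (1 + decay * step)."""
    return initial_lr / (1.0 + decay * step)


lr = 0.0001
epochs = 20
decay = lr / (epochs / 0.5)   # 2.5e-06, as in the training configuration
steps_per_epoch = 112         # hypothetical: about 5,600 images / batch_size 50

final = legacy_decayed_lr(lr, decay, steps_per_epoch * epochs)
print(f"decay={decay:.1e}, final lr={final:.3e}")
```

Under that assumption, the rate drops by only about half a percent over the whole run, so the decay term has little practical effect here.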

- Loading the test set and evaluating:

```python
del X_train  # again, free memory to avoid running out of RAM
del y_train
del X_val
del y_val

import os
import cv2
from PIL import Image
import numpy as np
from tensorflow import keras

# Labels only match the training set if the class folders are listed in the same order
floders = os.listdir(test_data_dir)
test_data = []    # stores the images
test_labels = []  # stores the labels
test_dict = {}    # maps label index -> class name
index = -1        # label index / dictionary key

for floder in floders:
    index += 1  # labels start from 0
    path = test_data_dir + '/' + floder
    print('loading ' + path)
    test_dict[index] = floder.split('-')[-1]
    images = os.listdir(path)
    for img in images:
        try:  # skip files that cannot be read as images
            image = cv2.imread(path + '/' + img)
            img_fromarray = Image.fromarray(image, 'RGB')
            img_resize = img_fromarray.resize((IMG_WEIGHT, IMG_HEIGHT))
            test_data.append(np.array(img_resize))
            test_labels.append(index)
        except Exception as err:
            print(err)
            print('Error in ' + img)

test_data = np.array(test_data, np.float32)
test_labels = np.array(test_labels, np.int64)  # np.int was removed in NumPy 1.24
print(test_data.shape, test_labels.shape)

test_data = test_data / 255.0  # normalize to [0, 1]
test_labels = keras.utils.to_categorical(test_labels, NUM_Categories)

model.evaluate(test_data, test_labels)
```

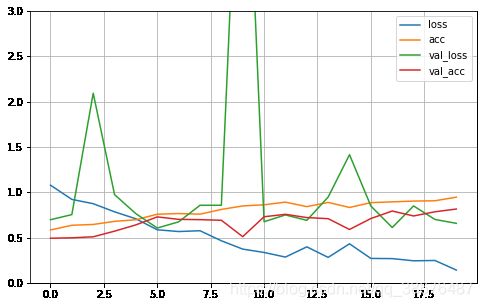

- Final accuracy on the test set:

```
64/64 [==============================] - 12s 171ms/step - loss: 0.6805 - acc: 0.8052
[0.680515706539154, 0.805239737033844]
```
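The test loss here, about 0.68, is close to ln 2 ≈ 0.693. That is the cross-entropy of a model that always predicts 50/50, even though accuracy is about 80%. One possible reading is that the two metrics measure different things. Accuracy only checks whether the arg-max class is correct, while cross-entropy heavily penalizes confident mistakes. A handful of very confident wrong predictions can therefore keep the loss high. The plain-Python sketch below computes both metrics for one-hot labels. It roughly follows Keras' categorical cross-entropy, including clipping probabilities to [1e-7, 1 − 1e-7]. It is an illustration, not the Keras source, and the example predictions are made up.

```python
import math


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(t * log(p)), with p clipped to [eps, 1 - eps]."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        total -= sum(ti * math.log(min(max(pi, eps), 1 - eps)) for ti, pi in zip(t, p))
    return total / len(y_true)


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted arg-max class matches the label."""
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


labels = [[1, 0], [0, 1], [0, 1], [1, 0]]
# made-up predictions: three confident and correct, the last one confidently wrong
preds = [[0.99, 0.01], [0.02, 0.98], [0.03, 0.97], [0.001, 0.999]]
print(accuracy(labels, preds), categorical_crossentropy(labels, preds))
print(categorical_crossentropy([[1, 0]], [[0.5, 0.5]]), math.log(2))
```

In this toy example, accuracy is 75%, yet the single confident mistake pushes the loss above ln 2.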

- Visualizing the training process:

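```python
import pandas as pd
import matplotlib.pyplot as plt

# Plot loss, acc, val_loss and val_acc for every epoch
pd.DataFrame(history.history).plot(figsize=(8, 5))
plt.grid(True)
plt.gca().set_ylim(0, 3)
plt.show()

model.save('my_model.h5')  # HDF5 format
```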
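`model.save` stores the weights from the last epoch, which is not necessarily the epoch with the best validation accuracy. `history.history` is a plain dict of per-epoch lists. With `metrics=['acc']`, its keys are `'loss'`, `'acc'`, `'val_loss'` and `'val_acc'`, so the best epoch can be read off directly, as in the sketch below. The numbers in the example are made up. `tf.keras.callbacks.ModelCheckpoint(..., save_best_only=True)` is the built-in way to keep that model during training.

```python
def best_epoch(history, metric="val_acc", mode="max"):
    """Return (1-based epoch, value) of the best entry in history[metric]."""
    values = history[metric]
    pick = max if mode == "max" else min
    idx = pick(range(len(values)), key=lambda i: values[i])
    return idx + 1, values[idx]


# Made-up numbers shaped like history.history, for illustration only
fake_history = {
    "loss": [0.90, 0.60, 0.45, 0.40],
    "val_loss": [0.80, 0.62, 0.58, 0.66],
    "acc": [0.55, 0.68, 0.77, 0.81],
    "val_acc": [0.58, 0.70, 0.76, 0.74],
}
print(best_epoch(fake_history))
print(best_epoch(fake_history, "val_loss", mode="min"))
```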

9. Summary:

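Training a model from scratch is really hard, so it's worth looking into transfer learning. Personally, I think datasets and hardware are currently the biggest challenges in deep learning. The main thing I learned from AlexNet is how to reduce overfitting, but my theoretical derivations are still weak, so I'll try to add some theory in future posts. The next post will probably cover ZFNet, although it makes only small changes to AlexNet, such as replacing the first layer's 11x11 kernels with 7x7 ones and training on a single GPU.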

10. References:

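Book:

Title: 深度学习:卷积神经网络从入门到精通 (Deep Learning: Convolutional Neural Networks from Beginner to Expert)

Authors: 李玉鑑, 张婷, 单传辉, 刘兆英

ISBN: 9787111602798

Edition: 1-1

Word count: 258

Publisher: 机械工业出版社 (China Machine Press)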

Links:

[1]https://blog.csdn.net/qq_35912099/article/details/107237182

[2]https://zhuanlan.zhihu.com/p/141530560

[3]https://blog.csdn.net/DeepLearningJay/article/details/107971526