Handwritten Digit Recognition with LeNet

Introduction

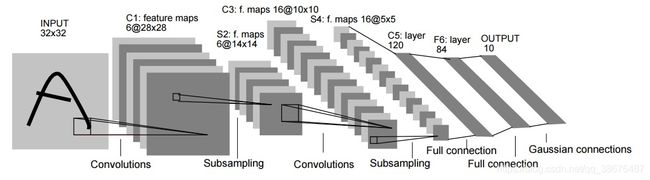

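LeNet, proposed by Y. LeCun et al. in 1998, is widely regarded as the first practical convolutional neural network. Today the name usually refers to LeNet-5. Its defining feature is that convolutional layers and subsampling layers together form the basic building blocks of the network: it has 3 convolutional layers, 2 subsampling layers, and 2 fully connected layers. LeNet was originally designed to recognize handwritten and printed characters. It performed very well and was widely used for reading handwritten checks at banks in the United States.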

Network Architecture

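- C1 is a convolutional layer with 6 feature maps, produced by convolving the input image with six 5x5 kernels.

- S2 is a subsampling layer with 6 feature maps, obtained by average-pooling the C1 feature maps with a 2x2 window and a stride of 2, then applying a sigmoid activation.

- C3 is a convolutional layer with 16 feature maps, produced by convolving S2 with sixteen 5x5 kernels.

- S4 is a subsampling layer with 16 feature maps, obtained by average-pooling the C3 feature maps with a 2x2 window and a stride of 2, then applying a sigmoid activation.

- C5 is a convolutional layer with 120 feature maps, produced by convolving S4 with 120 5x5 kernels.

- F6 is a fully connected layer with 84 neurons and a hyperbolic tangent (tanh) activation.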
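The layer list above implies a specific feature-map size at each stage. The sketch below works those sizes out, assuming a 32x32 input and unpadded ("valid") convolutions and pooling windows; the helper name `conv_out` is made up for this illustration.

```python
def conv_out(size, kernel, stride=1):
    """Side length after an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


size = 32  # LeNet input is 32x32
shapes = {}
# (layer name, window size, stride, number of feature maps)
for name, kernel, stride, maps in [
    ("C1", 5, 1, 6),
    ("S2", 2, 2, 6),
    ("C3", 5, 1, 16),
    ("S4", 2, 2, 16),
    ("C5", 5, 1, 120),
]:
    size = conv_out(size, kernel, stride)
    shapes[name] = (size, size, maps)
    print(f"{name}: {size}x{size}x{maps}")
```

Because S4 is 5x5 and the C5 kernels are also 5x5, each C5 feature map collapses to a single value, which is why C5 can feed directly into the fully connected layer F6.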

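Notes

As the figure shows, LeNet expects 32x32 input images. This post uses the MNIST handwritten digit dataset, whose images are 28x28, so each image has to be zero-padded up to 32x32. The following layer does the padding. Note that `((4, 0), (4, 0))` means ((top, bottom), (left, right)), so it adds four rows at the top and four columns on the left; `((2, 2), (2, 2))` would give the same output size with the digit centered.

    x = ZeroPadding2D(((4, 0), (4, 0)))(img_input)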
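To see what this padding does without building a model, here is a small NumPy sketch. It uses `np.pad` as a stand-in for the Keras layer (both take the same ((top, bottom), (left, right)) layout for a 2D image), and the all-ones array is only a placeholder for a real MNIST digit.

```python
import numpy as np

image = np.ones((28, 28))  # placeholder for one 28x28 MNIST digit

# Same layout as ZeroPadding2D(((4, 0), (4, 0))): 4 rows on top, 4 columns on the left
top_left = np.pad(image, ((4, 0), (4, 0)), mode="constant", constant_values=0)

# Symmetric alternative: 2 pixels on every side, digit stays centered
centered = np.pad(image, ((2, 2), (2, 2)), mode="constant", constant_values=0)

print(top_left.shape, centered.shape)  # both are 32x32
print(top_left[:4].sum())              # the 4 new top rows are all zeros
```

Both variants produce a 32x32 input, so the rest of the network is unaffected by the choice; only the position of the digit inside the frame differs.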

Building LeNet with Keras

I wrote this using the VGG16 implementation in Keras as a reference.

    import matplotlib.pyplot as plt
    import pandas as pd
    import tensorflow as tf
    from sklearn.model_selection import train_test_split
    from tensorflow import keras
    from tensorflow.keras import backend, layers
    from tensorflow.keras.layers import ZeroPadding2D


    def letNet5(input_shape=(28, 28, 1), input_tensor=None, classes=10):
        # Create the input layer, or wrap the tensor supplied by the caller
        if input_tensor is None:
            img_input = layers.Input(shape=input_shape)
        else:
            if not backend.is_keras_tensor(input_tensor):
                img_input = layers.Input(tensor=input_tensor, shape=input_shape)
            else:
                img_input = input_tensor

        # Zero-pad 28x28 images to 32x32 (4 rows on top, 4 columns on the left)
        x = ZeroPadding2D(((4, 0), (4, 0)))(img_input)

        # C1 + S2: convolution, average pooling, then sigmoid
        x = layers.Conv2D(6, (5, 5), strides=1, padding='valid', name='block1_conv1')(x)
        x = layers.AveragePooling2D((2, 2), (2, 2), name='block1_pool')(x)
        x = layers.Activation('sigmoid')(x)

        # C3 + S4: convolution, average pooling, then sigmoid
        x = layers.Conv2D(16, (5, 5), strides=1, padding='valid', name='block1_conv2')(x)
        x = layers.AveragePooling2D((2, 2), (2, 2), name='block2_pool')(x)
        x = layers.Activation('sigmoid')(x)

        # C5: 120 feature maps of size 1x1
        x = layers.Conv2D(120, (5, 5), strides=1, padding='valid', name='block3_conv1', activation='sigmoid')(x)

        # F6 and the output layer
        x = layers.Flatten(name='flatten')(x)
        x = layers.Dense(84, activation='tanh')(x)
        x = layers.Dense(classes, activation='softmax')(x)

        # Make sure the model starts from the original source of input_tensor
        if input_tensor is not None:
            inputs = keras.utils.get_source_inputs(input_tensor)
        else:
            inputs = img_input

        model = keras.Model(inputs, x, name='lenet5')
        return model

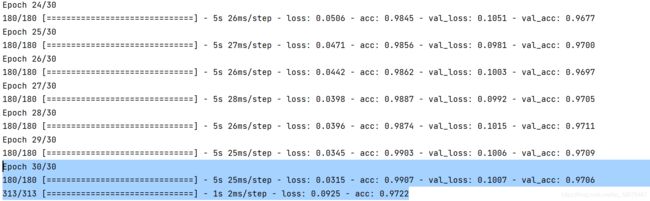

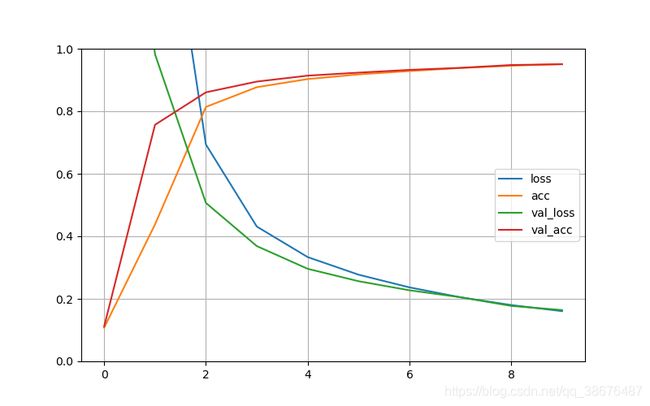
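As a sanity check on the model above, the number of trainable parameters can be worked out by hand. The sketch below assumes the layer sizes used in the code (one input channel, 10 classes); the helper names and the `dense_84` / `dense_out` labels are made up for this illustration and are not Keras layer names.

```python
def conv_params(kernel, in_maps, out_maps):
    """Weights plus biases of a conv layer that connects every input map to every output map."""
    return kernel * kernel * in_maps * out_maps + out_maps


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


params = {
    "block1_conv1": conv_params(5, 1, 6),
    "block1_conv2": conv_params(5, 6, 16),
    "block3_conv1": conv_params(5, 16, 120),
    "dense_84": dense_params(120, 84),   # F6; the input is the flattened 1x1x120 C5 output
    "dense_out": dense_params(84, 10),   # softmax output layer
}
total = sum(params.values())

for name, count in params.items():
    print(f"{name}: {count}")
print("total:", total)
```

Padding, pooling, activation, and flatten layers have no trainable parameters, so the total should agree with what `model.summary()` reports. It is somewhat larger than in the 1998 paper because a Keras `Conv2D` layer connects each of the 16 second-stage maps to all 6 input maps, whereas the original C3 used a sparse connection table.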

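Training and Testing

I split the original training set into 70% for training and 30% for validation. For the test set, the one that ships with MNIST is used as is.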
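To make the split sizes concrete, here is a NumPy-only sketch of a shuffled 70/30 split over the 60,000 MNIST training samples. It illustrates the idea only: the index-based approach and the variable names are assumptions of this sketch, and it will not reproduce the exact shuffle that `train_test_split` produces for the same seed.

```python
import numpy as np

n_samples = 60000                     # size of the MNIST training set
rng = np.random.default_rng(100)      # fixed seed so the split is repeatable
indices = rng.permutation(n_samples)  # shuffled sample indices

n_train = n_samples * 7 // 10         # 70% for training
train_idx = indices[:n_train]
val_idx = indices[n_train:]           # remaining 30% for validation

print(len(train_idx), len(val_idx))
```

With this split the model trains on 42,000 images and is validated on 18,000, while the 10,000 MNIST test images stay untouched until the final evaluation. The full training script follows.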

    if __name__ == '__main__':
        from tensorflow.keras.datasets import mnist

        NUM_CATEGORIES = 10

        (X_train, y_train), (X_test, y_test) = mnist.load_data()

        # Keep 70% of the original training set for training, 30% for validation
        X_train, X_val, y_train, y_val = train_test_split(
            X_train, y_train, train_size=0.7, random_state=100, shuffle=True)

        # Scale pixel values to [0, 1]
        X_train = X_train / 255
        X_val = X_val / 255
        X_test = X_test / 255

        # One-hot encode the labels
        y_train = tf.keras.utils.to_categorical(y_train, NUM_CATEGORIES)
        y_val = tf.keras.utils.to_categorical(y_val, NUM_CATEGORIES)
        y_test = tf.keras.utils.to_categorical(y_test, NUM_CATEGORIES)

        epochs = 30  # feel free to lower this; at 30 the model starts to overfit a little

        model = letNet5()
        model.compile(loss='categorical_crossentropy',
                      optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
                      metrics=['acc'])
        history = model.fit(X_train, y_train, batch_size=100, epochs=epochs,
                            validation_data=(X_val, y_val))

        # Plot the loss and accuracy curves for training and validation
        pd.DataFrame(history.history).plot(figsize=(8, 5))
        plt.grid(True)
        plt.gca().set_ylim(0, 1)
        plt.show()

        # Returns [loss, accuracy] on the MNIST test set
        pred = model.evaluate(X_test, y_test)

Output:

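References

Reference book:

Title: 深度学习:卷积神经网络从入门到精通 (Deep Learning: Convolutional Neural Networks from Beginner to Master)

Authors: 李玉鑑, 张婷, 单传辉, 刘兆英

ISBN: 9787111602798

Edition: 1-1

Word count: 258

Publisher: 机械工业出版社 (China Machine Press)

Reference links:

[1] https://keras-cn-twkun.readthedocs.io/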