http://blog.csdn.net/u013991521/article/details/52781423

1、故障现象

客服同事反馈平台系统运行缓慢,网页卡顿严重,多次重启系统后问题依然存在,使用top命令查看服务器情况,发现CPU占用率过高。

2、CPU占用过高问题定位

2.1、定位问题进程

使用top命令查看资源占用情况,发现pid为14063的进程占用了大量的CPU资源,CPU占用率高达776.1%,内存占用率也达到了29.8%

[ylp@ylp-web-01~]$ toptop -14:51:10up233days,11:40,7users, load average:6.85,5.62,3.97Tasks:192total,2running,190sleeping,0stopped,0zombie%Cpu(s):97.3us,0.3sy,0.0ni,2.5id,0.0wa,0.0hi,0.0si,0.0stKiB Mem :16268652total,5114392free,6907028used,4247232buff/cacheKiB Swap:4063228total,3989708free,73520used.8751512avail Mem PID USER PR NI VIRT RES SHR S%CPU%MEMTIME+ COMMAND14063ylp20092604884.627g11976S776.129.8117:41.66java

1

2

3

4

5

6

7

8

9

2.2、定位问题线程

使用ps -mp pid -o THREAD,tid,time命令查看该进程的线程情况,发现该进程的多个线程占用率很高

[ylp@ylp-web-01 ~]$ps-mp14063-oTHREAD,tid,timeUSER%CPUPRISCNTWCHANUSERSYSTEMTIDTIMEylp361------02:05:58ylp0.019-futex_--14063 00:00:00ylp0.019-poll_s--14064 00:00:00ylp44.519----14065 00:15:30ylp44.519----14066 00:15:30ylp44.419----14067 00:15:29ylp44.519----14068 00:15:30ylp44.519----14069 00:15:30ylp44.519----14070 00:15:30ylp44.519----14071 00:15:30ylp44.619----14072 00:15:32ylp2.219-futex_--14073 00:00:46ylp0.019-futex_--14074 00:00:00ylp0.019-futex_--14075 00:00:00ylp0.019-futex_--14076 00:00:00ylp0.719-futex_--14077 00:00:15

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

从输出信息可以看出,14065~14072之间的线程CPU占用率都很高

2.3、查看问题线程堆栈

挑选TID为14065的线程,查看该线程的堆栈情况,先将线程id转为16进制,使用printf “%x\n” tid命令进行转换

[ylp@ylp-web-01~]$printf"%x\n"1406536f1

1

2

再使用jstack命令打印线程堆栈信息,命令格式:jstack pid |grep tid -A 30

[ylp@ylp-web-01~]$ jstack14063|grep36f1 -A30"GC task thread#0 (ParallelGC)"prio=10tid=0x00007fa35001e800nid=0x36f1runnable"GC task thread#1 (ParallelGC)"prio=10tid=0x00007fa350020800nid=0x36f2runnable"GC task thread#2 (ParallelGC)"prio=10tid=0x00007fa350022800nid=0x36f3runnable"GC task thread#3 (ParallelGC)"prio=10tid=0x00007fa350024000nid=0x36f4runnable"GC task thread#4 (ParallelGC)"prio=10tid=0x00007fa350026000nid=0x36f5runnable"GC task thread#5 (ParallelGC)"prio=10tid=0x00007fa350028000nid=0x36f6runnable"GC task thread#6 (ParallelGC)"prio=10tid=0x00007fa350029800nid=0x36f7runnable"GC task thread#7 (ParallelGC)"prio=10tid=0x00007fa35002b800nid=0x36f8runnable"VM Periodic Task Thread"prio=10tid=0x00007fa3500a8800nid=0x3700waitingoncondition JNIglobalreferences:392

1

2

3

4

5

6

7

8

9

10

11

12

从输出信息可以看出,此线程是JVM的gc线程。此时可以基本确定是内存不足或内存泄露导致gc线程持续运行,导致CPU占用过高。

所以接下来我们要找的内存方面的问题

3、内存问题定位

3.1、使用jstat -gcutil命令查看进程的内存情况

[ylp@ylp-web-01~]$ jstat -gcutil14063200010S0 S1EOP YGC YGCT FGC FGCT GCT0.000.00100.0099.9926.314221.9172181484.8301506.7470.000.00100.0099.9926.314221.9172181484.8301506.7470.000.00100.0099.9926.314221.9172191496.5671518.4840.000.00100.0099.9926.314221.9172191496.5671518.4840.000.00100.0099.9926.314221.9172191496.5671518.4840.000.00100.0099.9926.314221.9172191496.5671518.4840.000.00100.0099.9926.314221.9172191496.5671518.4840.000.00100.0099.9926.314221.9172201505.4391527.3550.000.00100.0099.9926.314221.9172201505.4391527.3550.000.00100.0099.9926.314221.9172201505.4391527.355``` 从输出信息可以看出,Eden区内存占用100%,Old区内存占用99.99%,FullGC的次数高达220次,并且频繁FullGC,FullGC的持续时间也特别长,平均每次FullGC耗时6.8秒(1505.439/220)。根据这些信息,基本可以确定是程序代码上出现了问题,可能存在不合理创建对象的地方####3.2、分析堆栈使用jstat命令查看进程的堆栈情况

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

[ylp@ylp-web-01 ~]$ jstat 14063 >>jstat.out

“`

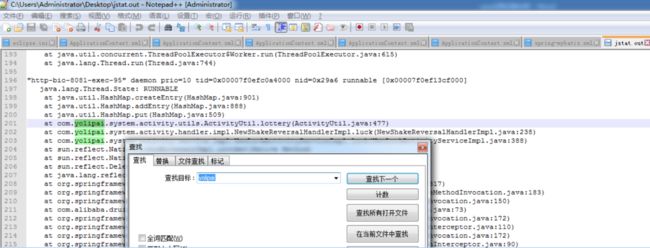

把jstat.out文件从服务器拿到本地后,用编辑器查找带有项目目录并且线程状态是RUNABLE的相关信息,从图中可以看出ActivityUtil.java类的447行正在使用HashMap.put()方法

3.3、代码定位

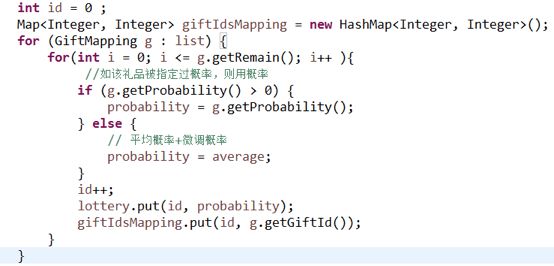

打开项目工程,找到ActivityUtil类的477行,代码如下:

找到相关同事了解后,这段代码会从数据库中获取配置,并根据数据库中remain的值进行循环,在循环中会一直对HashMap进行put操作。

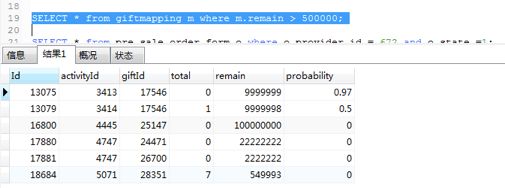

查询数据库中的配置,发现remain的数量巨大

至此,问题定位完毕。