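WebMagic is an open-source Java crawler framework. How to use WebMagic itself is not the focus of this article; for details, see the official documentation at http://webmagic.io/docs/.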

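This article integrates Spring Boot, WebMagic, and MyBatis: WebMagic crawls the data, and MyBatis persists the crawled data to a MySQL database. The source code provided here can serve as scaffolding for a Java crawler project.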

1. Add the Maven dependencies

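    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>hyzx</groupId>
        <artifactId>qbasic-crawler</artifactId>
        <version>1.0.0</version>

        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>1.5.21.RELEASE</version>
        </parent>

        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <!-- The original sets one more property to "true" here; its name is not recoverable from the source text. -->
            <java.version>1.8</java.version>
            <maven.compiler.plugin.version>3.8.1</maven.compiler.plugin.version>
            <maven.resources.plugin.version>3.1.0</maven.resources.plugin.version>
            <mysql.connector.version>5.1.47</mysql.connector.version>
            <druid.spring.boot.starter.version>1.1.17</druid.spring.boot.starter.version>
            <mybatis.spring.boot.starter.version>1.3.4</mybatis.spring.boot.starter.version>
            <fastjson.version>1.2.58</fastjson.version>
            <commons.lang3.version>3.9</commons.lang3.version>
            <joda.time.version>2.10.2</joda.time.version>
            <webmagic.core.version>0.7.3</webmagic.core.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-devtools</artifactId>
                <scope>runtime</scope>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-configuration-processor</artifactId>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>${mysql.connector.version}</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>druid-spring-boot-starter</artifactId>
                <version>${druid.spring.boot.starter.version}</version>
            </dependency>
            <dependency>
                <groupId>org.mybatis.spring.boot</groupId>
                <artifactId>mybatis-spring-boot-starter</artifactId>
                <version>${mybatis.spring.boot.starter.version}</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>${fastjson.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.commons</groupId>
                <artifactId>commons-lang3</artifactId>
                <version>${commons.lang3.version}</version>
            </dependency>
            <dependency>
                <groupId>joda-time</groupId>
                <artifactId>joda-time</artifactId>
                <version>${joda.time.version}</version>
            </dependency>
            <dependency>
                <groupId>us.codecraft</groupId>
                <artifactId>webmagic-core</artifactId>
                <version>${webmagic.core.version}</version>
                <exclusions>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-log4j12</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>${maven.compiler.plugin.version}</version>
                    <configuration>
                        <source>${java.version}</source>
                        <target>${java.version}</target>
                        <encoding>${project.build.sourceEncoding}</encoding>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-resources-plugin</artifactId>
                    <version>${maven.resources.plugin.version}</version>
                    <configuration>
                        <encoding>${project.build.sourceEncoding}</encoding>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                    <!-- The original configuration sets two options to "true" here; their names are not recoverable from the source text. -->
                    <executions>
                        <execution>
                            <goals>
                                <goal>repackage</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>

        <repositories>
            <repository>
                <id>public</id>
                <name>aliyun nexus</name>
                <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
                <releases>
                    <enabled>true</enabled>
                </releases>
            </repository>
        </repositories>

        <pluginRepositories>
            <pluginRepository>
                <id>public</id>
                <name>aliyun nexus</name>
                <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
                <releases>
                    <enabled>true</enabled>
                </releases>
                <snapshots>
                    <enabled>false</enabled>
                </snapshots>
            </pluginRepository>
        </pluginRepositories>
    </project>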

2. Project configuration file application.properties

Configure the MySQL data source, the Druid connection pool, and the location of the MyBatis mapper files.

    # MySQL data source
    spring.datasource.name=mysql
    spring.datasource.type=com.alibaba.druid.pool.DruidDataSource
    spring.datasource.driver-class-name=com.mysql.jdbc.Driver
    spring.datasource.url=jdbc:mysql://192.168.0.63:3306/gjhzjl?useUnicode=true&characterEncoding=utf8&useSSL=false&allowMultiQueries=true
    spring.datasource.username=root
    spring.datasource.password=root

    # Druid connection pool
    spring.datasource.druid.initial-size=5
    spring.datasource.druid.min-idle=5
    spring.datasource.druid.max-active=10
    spring.datasource.druid.max-wait=60000
    spring.datasource.druid.validation-query=SELECT 1 FROM DUAL
    spring.datasource.druid.test-on-borrow=false
    spring.datasource.druid.test-on-return=false
    spring.datasource.druid.test-while-idle=true
    spring.datasource.druid.time-between-eviction-runs-millis=60000
    spring.datasource.druid.min-evictable-idle-time-millis=300000
    spring.datasource.druid.max-evictable-idle-time-millis=600000

    # MyBatis
    mybatis.mapperLocations=classpath:mapper/**/*.xml
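The pool settings above are related to one another: the initial and idle sizes should not exceed the maximum, and the minimum eviction idle time should not exceed the maximum. The following is a minimal sketch of a sanity check for those relationships, using only `java.util.Properties`. The class name `DruidPoolSettings` and the rules in `isConsistent` are illustrative assumptions, not part of the article's project or of Druid itself.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper: checks that the Druid pool values are mutually consistent.
public class DruidPoolSettings {

    private static final String PREFIX = "spring.datasource.druid.";

    // Parses text in application.properties format.
    public static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException ex) {
            throw new UncheckedIOException(ex);
        }
        return props;
    }

    private static long number(Properties props, String key) {
        return Long.parseLong(props.getProperty(PREFIX + key).trim());
    }

    // Assumed rules: sizes fit within max-active, and min idle time <= max idle time.
    public static boolean isConsistent(Properties props) {
        return number(props, "initial-size") <= number(props, "max-active")
                && number(props, "min-idle") <= number(props, "max-active")
                && number(props, "min-evictable-idle-time-millis")
                        <= number(props, "max-evictable-idle-time-millis");
    }

    public static void main(String[] args) {
        String text = String.join("\n",
                "# Druid connection pool",
                "spring.datasource.druid.initial-size=5",
                "spring.datasource.druid.min-idle=5",
                "spring.datasource.druid.max-active=10",
                "spring.datasource.druid.min-evictable-idle-time-millis=300000",
                "spring.datasource.druid.max-evictable-idle-time-millis=600000");
        System.out.println(isConsistent(parse(text)));
    }
}
```

With the values from the article the check passes; lowering `max-active` below `min-idle` would make it fail.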

3. Database table structure

    CREATE TABLE `cms_content` (
      `contentId` varchar(40) NOT NULL COMMENT 'Content ID',
      `title` varchar(150) NOT NULL COMMENT 'Title',
      `content` longtext COMMENT 'Article content',
      `releaseDate` datetime NOT NULL COMMENT 'Release date',
      PRIMARY KEY (`contentId`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='CMS content table';

4. Entity class

    import java.util.Date;

    // Maps one row of the cms_content table.
    public class CmsContentPO {

        private String contentId;
        private String title;
        private String content;
        private Date releaseDate;

        public String getContentId() {
            return contentId;
        }

        public void setContentId(String contentId) {
            this.contentId = contentId;
        }

        public String getTitle() {
            return title;
        }

        public void setTitle(String title) {
            this.title = title;
        }

        public String getContent() {
            return content;
        }

        public void setContent(String content) {
            this.content = content;
        }

        public Date getReleaseDate() {
            return releaseDate;
        }

        public void setReleaseDate(Date releaseDate) {
            this.releaseDate = releaseDate;
        }
    }

5. Mapper interface

    public interface CrawlerMapper {

        // Inserts one record and returns the number of affected rows.
        int addCmsContent(CmsContentPO record);
    }

6. CrawlerMapper.xml file

The mapper file defines the insert statement for `addCmsContent`:

    insert into cms_content (contentId, title, releaseDate, content)
    values (
      #{contentId,jdbcType=VARCHAR},
      #{title,jdbcType=VARCHAR},
      #{releaseDate,jdbcType=TIMESTAMP},
      #{content,jdbcType=LONGVARCHAR}
    )

7. Zhihu page processor class ZhihuPageProcessor

This class parses the Zhihu HTML pages that the crawler fetches.

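    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.processor.PageProcessor;

    @Component
    public class ZhihuPageProcessor implements PageProcessor {

        // Retry failed requests 3 times and wait 1 second between requests.
        private Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

        @Override
        public void process(Page page) {
            // Queue every link on the page that points to an answer.
            page.addTargetRequests(page.getHtml().links().regex("https://www\\.zhihu\\.com/question/\\d+/answer/\\d+.*").all());
            // Extract the question title and the answer text.
            page.putField("title", page.getHtml().xpath("//h1[@class='QuestionHeader-title']/text()").toString());
            page.putField("answer", page.getHtml().xpath("//div[@class='QuestionAnswer-content']/tidyText()").toString());
            if (page.getResultItems().get("title") == null) {
                // A list page has no title: skip it so the pipeline does no further processing.
                page.setSkip(true);
            }
        }

        @Override
        public Site getSite() {
            return site;
        }
    }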
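To see which links the regular expression in `process` is meant to follow, the expression can be tried on its own with `java.util.regex`. This is a minimal sketch: the class name `AnswerLinkFilter` and the sample URLs are made up for illustration, and it requires a full match, which may differ in detail from how WebMagic's selector applies the pattern.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper: applies the processor's link pattern to plain strings.
public class AnswerLinkFilter {

    // Same expression as in ZhihuPageProcessor.
    private static final Pattern ANSWER_URL =
            Pattern.compile("https://www\\.zhihu\\.com/question/\\d+/answer/\\d+.*");

    // Keeps only the links that match the answer-page pattern in full.
    public static List<String> filter(List<String> links) {
        List<String> matched = new ArrayList<>();
        for (String link : links) {
            if (ANSWER_URL.matcher(link).matches()) {
                matched.add(link);
            }
        }
        return matched;
    }

    public static void main(String[] args) {
        // Sample URLs are illustrative only.
        List<String> links = Arrays.asList(
                "https://www.zhihu.com/question/12345/answer/67890",
                "https://www.zhihu.com/question/12345",
                "https://www.zhihu.com/explore");
        System.out.println(filter(links));
    }
}
```

Only the first sample URL is an answer page, so it is the only one kept; the question page and the explore page are not queued by this pattern.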

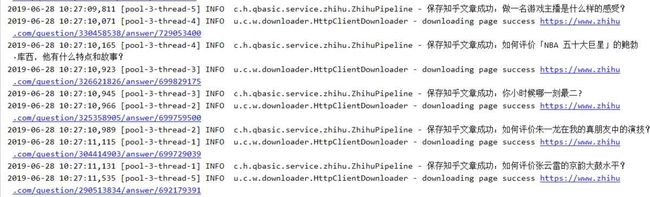

8. Zhihu data pipeline class ZhihuPipeline

This class stores the data parsed from the Zhihu HTML pages in the MySQL database.

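    import java.util.Date;
    import java.util.UUID;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.ResultItems;
    import us.codecraft.webmagic.Task;
    import us.codecraft.webmagic.pipeline.Pipeline;

    @Component
    public class ZhihuPipeline implements Pipeline {

        private static final Logger LOGGER = LoggerFactory.getLogger(ZhihuPipeline.class);

        @Autowired
        private CrawlerMapper crawlerMapper;

        @Override
        public void process(ResultItems resultItems, Task task) {
            String title = resultItems.get("title");
            String answer = resultItems.get("answer");

            CmsContentPO contentPO = new CmsContentPO();
            contentPO.setContentId(UUID.randomUUID().toString());
            contentPO.setTitle(title);
            contentPO.setReleaseDate(new Date());
            contentPO.setContent(answer);

            try {
                // Report success only if a row was actually inserted.
                boolean success = crawlerMapper.addCmsContent(contentPO) > 0;
                if (success) {
                    LOGGER.info("Saved Zhihu article: {}", title);
                } else {
                    LOGGER.warn("Zhihu article was not saved: {}", title);
                }
            } catch (Exception ex) {
                LOGGER.error("Failed to save Zhihu article", ex);
            }
        }
    }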
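Because the pipeline assigns a random UUID as `contentId`, a page that is crawled again in a later run may be stored a second time under a new key. One possible alternative, offered here as an assumption rather than the article's approach, is to derive the key from the page URL so that the same page always maps to the same ID. The class name `ContentIds` and the sample URLs are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.UUID;

// Hypothetical helper: builds a stable content ID from a page URL.
public class ContentIds {

    // Returns a name-based UUID string (36 characters), so it fits varchar(40).
    public static String fromUrl(String url) {
        return UUID.nameUUIDFromBytes(url.getBytes(StandardCharsets.UTF_8)).toString();
    }

    public static void main(String[] args) {
        // Sample URL is illustrative only.
        String url = "https://www.zhihu.com/question/12345/answer/67890";
        // The same URL always yields the same ID.
        System.out.println(fromUrl(url).equals(fromUrl(url)));
    }
}
```

With such an ID, inserting an already stored page would violate the primary key on `contentId`; the pipeline's existing `catch` block would then log the failure instead of creating a duplicate row.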

9. Zhihu crawler task class ZhihuTask

This class starts the crawler once every ten minutes.

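    import java.util.concurrent.Executors;
    import java.util.concurrent.ScheduledExecutorService;
    import java.util.concurrent.TimeUnit;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.Spider;

    @Component
    public class ZhihuTask {

        // Log under this class's own name (the original used ZhihuPipeline.class).
        private static final Logger LOGGER = LoggerFactory.getLogger(ZhihuTask.class);

        @Autowired
        private ZhihuPipeline zhihuPipeline;

        @Autowired
        private ZhihuPageProcessor zhihuPageProcessor;

        private ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

        public void crawl() {
            // Scheduled task: crawl once every 10 minutes.
            timer.scheduleWithFixedDelay(() -> {
                Thread.currentThread().setName("zhihuCrawlerThread");
                try {
                    Spider.create(zhihuPageProcessor)
                            // Start crawling from https://www.zhihu.com/explore
                            .addUrl("https://www.zhihu.com/explore")
                            // Store the crawled data in the database
                            .addPipeline(zhihuPipeline)
                            // Crawl with 2 threads
                            .thread(2)
                            // Start the spider asynchronously; this call returns immediately
                            .start();
                } catch (Exception ex) {
                    LOGGER.error("Scheduled Zhihu crawl thread failed", ex);
                }
            }, 0, 10, TimeUnit.MINUTES);
        }
    }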
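The `try`/`catch` inside the scheduled task matters: with `scheduleWithFixedDelay`, an exception that escapes the task suppresses all later executions. The following is a minimal sketch of that behaviour using only the JDK; the class name `FixedDelayDemo`, the simulated failure, and the millisecond delays are illustrative assumptions that stand in for the ten-minute crawl.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: a failing first run does not stop later runs when the exception is caught.
public class FixedDelayDemo {

    // Waits until the task has run `times` times, then returns the number of runs observed.
    public static int runWithFailure(int times, long delayMillis) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        AtomicInteger runs = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(times);

        timer.scheduleWithFixedDelay(() -> {
            try {
                if (runs.incrementAndGet() == 1) {
                    // Stand-in for a crawl that fails.
                    throw new IllegalStateException("simulated crawl failure");
                }
            } catch (Exception ex) {
                // Catching here keeps the schedule alive.
                System.err.println("run failed: " + ex.getMessage());
            } finally {
                done.countDown();
            }
        }, 0, delayMillis, TimeUnit.MILLISECONDS);

        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
        } finally {
            timer.shutdownNow();
        }
        return runs.get();
    }

    public static void main(String[] args) {
        System.out.println("runs: " + runWithFailure(3, 20));
    }
}
```

Removing the `catch` block in this sketch would leave the task stuck after its first run, which is the failure mode the article's task class guards against.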

10. Spring Boot application class

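    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.CommandLineRunner;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    @MapperScan(basePackages = "com.hyzx.qbasic.dao")
    public class Application implements CommandLineRunner {

        @Autowired
        private ZhihuTask zhihuTask;

        public static void main(String[] args) {
            SpringApplication.run(Application.class, args);
        }

        @Override
        public void run(String... strings) throws Exception {
            // Start crawling Zhihu once the application context is ready.
            zhihuTask.crawl();
        }
    }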
