Task 01- LR-Softmax-MLP

Task 01 Linear Regression-Softmax-MLP

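1 Linear regression

1.1 What it is

Linear regression can be viewed as a single-layer neural network and is one of the simplest machine learning models: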

$$y = x_1 w_1 + x_2 w_2 + \dots + x_n w_n + b = \mathbf{w}^{T}\mathbf{x} + b$$

where $\mathbf{w}$ is the weight vector and $b$ is the bias.
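As a minimal sketch of the formula above, the prediction for one sample is a dot product plus a bias. The function name `linreg` and the sample numbers are illustrative assumptions, not part of the original notes.

```python
def linreg(x, w, b):
    """Return x_1*w_1 + ... + x_n*w_n + b for a single sample x."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    return sum(x_i * w_i for x_i, w_i in zip(x, w)) + b


# Hypothetical example with two features.
y_hat = linreg([1.0, 2.0], [2.0, -3.0], 4.0)
print(y_hat)
```
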

1.2 Model training

- Loss: mean squared error, averaged over the $n$ training samples

$$L(\mathbf{w}, b) = \frac{1}{n}\sum_{i=1}^{n}\frac{1}{2}\left(\mathbf{w}^{T}\mathbf{x}^{(i)} + b - y^{(i)}\right)^{2}$$

- Optimization: mini-batch stochastic gradient descent (SGD)

$$(\mathbf{w}, b) \leftarrow (\mathbf{w}, b) - \frac{\alpha}{|\beta|}\sum_{i\in\beta}\partial_{(\mathbf{w}, b)}\, l^{(i)}(\mathbf{w}, b)$$

or, writing $\boldsymbol{\theta} = (\mathbf{w}, b)$,

$$\boldsymbol{\theta} \leftarrow \boldsymbol{\theta} - \frac{\alpha}{|\beta|}\sum_{i\in\beta}\nabla_{\boldsymbol{\theta}}\, l^{(i)}(\boldsymbol{\theta})$$

$$\nabla_{\boldsymbol{\theta}}\, l^{(i)}(\boldsymbol{\theta}) = \begin{bmatrix} x_1^{(i)} \\ x_2^{(i)} \\ 1 \end{bmatrix}\left(\hat{y}^{(i)} - y^{(i)}\right)$$

The gradient is written for the two-feature case, with per-sample loss $l^{(i)} = \frac{1}{2}\left(\hat{y}^{(i)} - y^{(i)}\right)^{2}$ and prediction $\hat{y}^{(i)} = \mathbf{w}^{T}\mathbf{x}^{(i)} + b$.

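where $\alpha$ is the learning rate, $\beta$ is the mini-batch (the set of sample indices used in one step), and $|\beta|$ is the batch size.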
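The loss and the update rule above can be sketched in plain Python as follows. The helper names `predict`, `mse_loss` and `sgd_step`, the synthetic noise-free data, the learning rate 0.1 and the batch size 10 are all assumptions chosen for illustration; they do not come from the original notes.

```python
import random


def predict(x, w, b):
    """y_hat = w^T x + b for one sample."""
    return sum(x_j * w_j for x_j, w_j in zip(x, w)) + b


def mse_loss(X, y, w, b):
    """L(w, b) = (1/n) * sum_i 0.5 * (w^T x_i + b - y_i)^2."""
    return sum(0.5 * (predict(x, w, b) - t) ** 2 for x, t in zip(X, y)) / len(X)


def sgd_step(X, y, w, b, batch, lr):
    """One update: (w, b) <- (w, b) - lr/|batch| * sum of per-sample gradients."""
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for i in batch:
        err = predict(X[i], w, b) - y[i]   # y_hat - y
        for j, x_j in enumerate(X[i]):
            grad_w[j] += x_j * err         # gradient with respect to w_j
        grad_b += err                      # gradient with respect to b
    scale = lr / len(batch)
    new_w = [w_j - scale * g for w_j, g in zip(w, grad_w)]
    return new_w, b - scale * grad_b


# Hypothetical noise-free data generated from y = 2*x1 - 3*x2 + 4.
rng = random.Random(0)
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(100)]
y = [2.0 * x1 - 3.0 * x2 + 4.0 for x1, x2 in X]

w, b = [0.0, 0.0], 0.0
initial_loss = mse_loss(X, y, w, b)
for epoch in range(200):
    indices = list(range(len(X)))
    rng.shuffle(indices)                   # new random mini-batches every epoch
    for start in range(0, len(indices), 10):
        w, b = sgd_step(X, y, w, b, indices[start:start + 10], lr=0.1)
final_loss = mse_loss(X, y, w, b)
print(initial_loss, final_loss)
```

Because the data are generated without noise, the learned `w` and `b` should approach the generating values 2, -3 and 4 in this setup.
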

1.3 Representation

From Dive into Deep Learning (《动手学深度学习》)

1.0.0 Study code

    import time


    # define a timer class to record time
    class Timer:
        """Record multiple running times."""

        def __init__(self):
            self.times = []
            self.start()

        def start(self):
            # start the timer
            self.start_time = time.time()

        def stop(self):
            # stop the timer and record the elapsed time in the list
            self.times.append(time.time() - self.start_time)
            return self.times[-1]

        def avg(self):
            # return the average recorded time (requires at least one stop())
            return sum(self.times) / len(self.times)

        def sum(self):
            # return the total recorded time; the sum() called here is the built-in
            return sum(self.times)

2 Softmax

2.0 Logistic regression

- Information entropy

$$H(X) = -\sum_{i=1}^{n} p(x_i)\log p(x_i)$$

- Relative entropy (Kullback-Leibler divergence): measures the difference between two probability distributions

$$KL(P\,\|\,Q) = \sum_{x} P(x)\log\frac{P(x)}{Q(x)}$$

- Cross entropy

$$H(p, q) = -\sum_{i} p(x_i)\log q(x_i)$$

Cross entropy = information entropy + relative entropy, that is, $H(p, q) = H(p) + KL(p\,\|\,q)$.

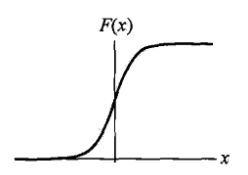
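The three quantities above satisfy the identity $H(p, q) = H(p) + KL(p\,\|\,q)$, which can be checked numerically. The sketch below uses natural logarithms; the function names and the two example distributions are assumptions made for illustration.

```python
import math


def entropy(p):
    """H(p) = -sum_i p_i * log(p_i); terms with p_i = 0 contribute 0."""
    return -sum(p_i * math.log(p_i) for p_i in p if p_i > 0)


def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i * log(q_i)."""
    return -sum(p_i * math.log(q_i) for p_i, q_i in zip(p, q) if p_i > 0)


def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i * log(p_i / q_i)."""
    return sum(p_i * math.log(p_i / q_i) for p_i, q_i in zip(p, q) if p_i > 0)


# Hypothetical distributions over three outcomes.
p = [0.5, 0.25, 0.25]
q = [0.4, 0.4, 0.2]
print(entropy(p), cross_entropy(p, q), kl_divergence(p, q))
```

The divergence is zero when both arguments are the same distribution and positive otherwise, which is why minimizing cross entropy against fixed labels also minimizes the KL divergence.
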

- Logistic distribution: $F(x) = \frac{1}{1 + e^{-(x - \mu)/\gamma}}$; its graph is an S-shaped curve (sigmoid curve).

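- Odds: the ratio of the probability that an event occurs to the probability that it does not occur, $\frac{p}{1-p}$.

- Log-odds (the logit function):

$$\operatorname{logit}(p) = \log\frac{p}{1-p}$$

- Logistic regression (for classification):

$$\operatorname{logit}(p) = \mathbf{w}^{T}\mathbf{x}$$

Solving for $p$ gives $p = \frac{1}{1 + e^{-\mathbf{w}^{T}\mathbf{x}}}$.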
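The relation between the logit function and the sigmoid curve can be sketched as follows: the sigmoid inverts the logit, so a linear score is turned into a probability. The names `logit`, `sigmoid` and `predict_proba` and the example weights are assumptions for illustration, and the plain `math.exp` call is only suitable for scores of moderate size.

```python
import math


def logit(p):
    """Log-odds: log(p / (1 - p)) for 0 < p < 1."""
    return math.log(p / (1.0 - p))


def sigmoid(z):
    """Inverse of logit: 1 / (1 + exp(-z)); very negative z would overflow."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, w):
    """Logistic regression: logit(p) = w . x, hence p = sigmoid(w . x)."""
    return sigmoid(sum(w_j * x_j for w_j, x_j in zip(w, x)))


# Hypothetical weights and input; here w . x = 0.5 - 0.5 = 0.
p = predict_proba([1.0, 2.0], [0.5, -0.25])
print(p, logit(p))
```
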

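2.1 What it is

Softmax regression is also a single-layer neural network. Linear regression predicts continuous values, whereas softmax regression predicts discrete values with multiple outputs (classification tasks).

2.2 Model computation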

$$
\begin{aligned}
o_1 &= x_1 w_{11} + x_2 w_{21} + x_3 w_{31} + x_4 w_{41} + b_1,\\
o_2 &= x_1 w_{12} + x_2 w_{22} + x_3 w_{32} + x_4 w_{42} + b_2,\\
o_3 &= x_1 w_{13} + x_2 w_{23} + x_3 w_{33} + x_4 w_{43} + b_3.
\end{aligned}
$$

$$\hat{y}_1, \hat{y}_2, \hat{y}_3 = \text{softmax}(o_1, o_2, o_3)$$

$$\hat{y}_1 = \frac{\exp(o_1)}{\sum_{i=1}^3 \exp(o_i)},\quad \hat{y}_2 = \frac{\exp(o_2)}{\sum_{i=1}^3 \exp(o_i)},\quad \hat{y}_3 = \frac{\exp(o_3)}{\sum_{i=1}^3 \exp(o_i)}.$$

or, in matrix form,

$$
\begin{aligned}
\boldsymbol{O} &= \boldsymbol{X} \boldsymbol{W} + \boldsymbol{b},\\
\boldsymbol{\hat{Y}} &= \text{softmax}(\boldsymbol{O}).
\end{aligned}
$$
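A minimal sketch of this computation for one sample with four features and three classes is given below. The weights and inputs are made-up numbers, and subtracting the maximum score before exponentiating is a numerical-stability choice added here (it does not change the result); it is not something the original notes specify.

```python
import math


def linear(x, W, b):
    """o_j = sum_i x_i * W[i][j] + b_j, with W of shape (inputs, outputs)."""
    return [sum(x_i * W[i][j] for i, x_i in enumerate(x)) + b[j]
            for j in range(len(b))]


def softmax(o):
    """y_hat_j = exp(o_j) / sum_k exp(o_k)."""
    m = max(o)  # shifting by the max leaves the ratios unchanged and avoids overflow
    exps = [math.exp(o_j - m) for o_j in o]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 4-feature, 3-class example.
x = [1.0, 2.0, 3.0, 4.0]
W = [[0.1, 0.2, 0.3],
     [0.0, 0.1, 0.0],
     [0.2, 0.0, 0.1],
     [0.1, 0.1, 0.1]]
b = [0.0, 0.0, 0.0]
y_hat = softmax(linear(x, W, b))
print(y_hat)
```

The outputs are positive and sum to 1, so they can be read as class probabilities, and the predicted class is the index of the largest entry.
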

- Loss function: cross entropy

$$H\left(\boldsymbol{y}^{(i)}, \boldsymbol{\hat{y}}^{(i)}\right) = -\sum_{j=1}^{q} y_j^{(i)} \log \hat{y}_j^{(i)}$$

$$\ell(\boldsymbol{\Theta}) = \frac{1}{n} \sum_{i=1}^{n} H\left(\boldsymbol{y}^{(i)}, \boldsymbol{\hat{y}}^{(i)}\right).$$
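For one-hot labels, only the log-probability of the true class contributes to the sum. The sketch below computes the per-sample cross entropy and its mean over a batch; the function names and the two example samples are assumptions for illustration.

```python
import math


def cross_entropy(y, y_hat):
    """H(y, y_hat) = -sum_j y_j * log(y_hat_j) for one sample."""
    return -sum(y_j * math.log(p_j) for y_j, p_j in zip(y, y_hat) if y_j > 0)


def mean_cross_entropy(Y, Y_hat):
    """l(Theta) = (1/n) * sum_i H(y_i, y_hat_i)."""
    return sum(cross_entropy(y, y_hat) for y, y_hat in zip(Y, Y_hat)) / len(Y)


# Hypothetical one-hot labels and predicted probabilities for two samples.
Y = [[1, 0, 0], [0, 0, 1]]
Y_hat = [[0.5, 0.25, 0.25], [0.25, 0.25, 0.5]]
loss = mean_cross_entropy(Y, Y_hat)
print(loss)
```

In this example each true class receives probability 0.5, so the mean loss equals $\log 2$.
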

3 MLP (Multi-Layer Perceptron)

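3.1 What it is

A multi-layer perceptron adds one or more hidden layers to a single-layer neural network. The hidden layers sit between the input layer and the output layer.

3.2 Model computation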

$$
\begin{aligned}
\boldsymbol{H} &= \boldsymbol{X} \boldsymbol{W}_h + \boldsymbol{b}_h,\\
\boldsymbol{O} &= \boldsymbol{H} \boldsymbol{W}_o + \boldsymbol{b}_o,
\end{aligned}
$$
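The two equations above can be sketched as two consecutive affine maps over a batch. The sketch implements them exactly as written, so no activation function is applied between the layers; the helper names, shapes and numbers are assumptions chosen for illustration.

```python
def affine(X, W, b):
    """Return X W + b for a batch X (rows are samples); b is added to every row."""
    return [[sum(x_i * W[i][j] for i, x_i in enumerate(row)) + b[j]
             for j in range(len(b))]
            for row in X]


def mlp_forward(X, W_h, b_h, W_o, b_o):
    """H = X W_h + b_h, then O = H W_o + b_o."""
    H = affine(X, W_h, b_h)      # hidden layer, shape (samples, hidden units)
    return affine(H, W_o, b_o)   # output layer, shape (samples, outputs)


# Hypothetical shapes: 2 samples, 2 inputs, 3 hidden units, 1 output.
X = [[1.0, 2.0], [0.0, 1.0]]
W_h = [[1.0, 0.0, 1.0],
       [0.0, 1.0, 1.0]]
b_h = [0.0, 1.0, 0.0]
W_o = [[1.0], [2.0], [3.0]]
b_o = [1.0]
O = mlp_forward(X, W_h, b_h, W_o, b_o)
print(O)
```
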