A Hands-On Guide to Real-Time Face Recognition: Scan Your Face, Check Your Looks

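Installing dlib

Problem

dlib is a modern open-source C++ toolkit of machine learning algorithms, best known for its face detection and recognition. It has close to 10,000 stars on GitHub and is an excellent open-source framework. Installing the C++ version of dlib is fairly cumbersome. For the Python version there are piles of installation guides online, and I'd go so far as to say that 99% of them are wrong (don't ask how I know; I've tried them several times, on my own machine and on a server). Below I'll walk through how to install dlib.

Solution

First, a word on searching: make Google your first choice (Baidu really is bad for this). If you can't reach Google, search Baidu for "谷歌访问助手" (Google Access Helper) and install that browser extension; after that you can use Google for free (the simplest way I know of).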

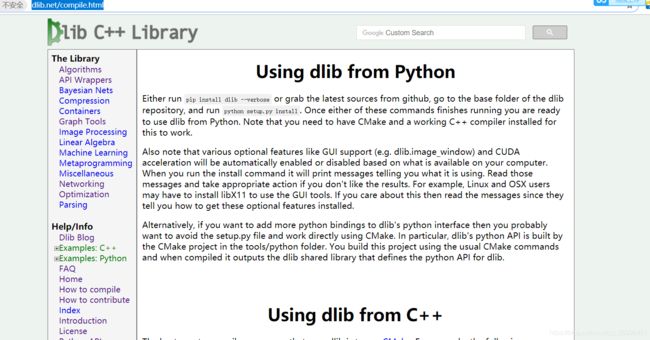

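What I really want to say is this: go straight to dlib's official documentation. Whoever wrote an installation tutorial, it won't be as authoritative as the official docs, and reading them saves you a lot of time you would otherwise spend in search engines.

As the screenshot shows, the official site says a plain pip install is all it takes. The catch is that building dlib needs a C++ compiler and CMake, so follow the steps below.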

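1. Make sure your Windows machine has a C++ build environment. I recommend installing Visual Studio 2017; the detailed installation process is described here: https://www.jianshu.com/p/ed645d7b2c8a

2. Install Anaconda. I recommend everyone install this gem: its built-in virtual environment manager is excellent, and it is the de facto standard for AI work these days; anyone who uses it knows. Downloads from the official conda site can be very slow, though, so use a mirror.

3. Next, install CMake. It's simple: pip install cmake does the job.

4. Finally, install dlib with pip install dlib. This step hides a big trap: the install itself will most likely succeed, but import dlib will probably fail because the version is too new. At the time of writing, pip installs the latest dlib, 19.18.0, by default; pin it to 19.17.0 instead with pip install dlib==19.17.0. Don't underestimate this small problem: it cost one of the technical leads on our team a whole day. I was lucky; when I installed dlib, 19.18.0 hadn't been released yet.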
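Before (or after) pinning the version, it helps to check which dlib build pip actually installed. The sketch below uses hypothetical helpers of my own (installed_version, check_dlib), not part of dlib: they read pip's package metadata through the standard-library importlib.metadata (Python 3.8+), so they work even when import dlib itself fails. The 19.17.0 pin is simply the version recommended in step 4.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

# Version pinned in step 4 (the author's recommendation, not an official one).
# installed_version / check_dlib are hypothetical helpers, not dlib APIs.
RECOMMENDED_DLIB = "19.17.0"


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a pip package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def check_dlib(recommended: str = RECOMMENDED_DLIB) -> str:
    """Describe whether the installed dlib matches the pinned version.

    Only pip metadata is read; dlib is never imported, so a build that
    fails on `import dlib` can still be diagnosed.
    """
    found = installed_version("dlib")
    if found is None:
        return f"dlib is not installed; try: pip install dlib=={recommended}"
    if found != recommended:
        return f"dlib {found} is installed; if import fails, try: pip install dlib=={recommended}"
    return f"dlib {found} is installed (matches the pinned version)"


if __name__ == "__main__":
    print(check_dlib())
```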

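Those are all the steps needed to install dlib. Many blogs will have you install all sorts of extras such as Boost; that's simply unnecessary, and the official site doesn't mention them at all.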

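Getting started with face verification

Problem

Face verification is essentially what happens at the security check in a train station: you hold up your ID card, strike a pose, and the check confirms that the photo on the ID is really you, i.e. that your photo isn't a "photo scam". In the trade this is called 1:1 face verification.

Small as this process looks, it involves a lot of detail; preprocessing the photo alone takes several steps.

1. Face cropping

In a photo of you, locate your face and crop it out.

2. Face alignment

In your photo the face may be turned to the side or the head tilted up, which hurts the model's recognition accuracy, so the face needs to be straightened (aligned) first.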
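Both preprocessing steps boil down to a little geometry. The sketch below is my own pure-Python illustration, not the author's code and not a dlib API (clamp_box and eye_alignment are hypothetical names). It assumes the 0-based index layout of the 68-point model used later (points 36-41 and 42-47 are the two eyes) and uses the common "level the eyes" approach to alignment, which only corrects in-plane head tilt, not a face turned sideways.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical helpers (not part of dlib). 0-based indices in the 68-point
# layout of shape_predictor_68_face_landmarks.dat: 36-41 outline the eye on
# the left side of the image, 42-47 the eye on the right side.
LEFT_EYE = range(36, 42)
RIGHT_EYE = range(42, 48)


def clamp_box(left: int, top: int, right: int, bottom: int,
              width: int, height: int) -> Tuple[int, int, int, int]:
    """Clip a detection box to the image so it is safe to use for cropping.

    A detection near the border can extend past the image (e.g. a negative
    left), and negative indices would silently wrap around in a NumPy slice.
    """
    return max(0, left), max(0, top), min(width, right), min(height, bottom)


def centroid(points: Sequence[Point]) -> Point:
    """Mean x and y of a group of landmarks."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def eye_alignment(landmarks: Sequence[Point]) -> Tuple[float, Point]:
    """Return (angle_degrees, eyes_center) for leveling the eyes.

    The angle is that of the line from the left-eye center to the
    right-eye center in image coordinates (y grows downward). Rotating the
    image counter-clockwise by this angle about eyes_center levels the eyes.
    """
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    lx, ly = centroid([landmarks[i] for i in LEFT_EYE])
    rx, ry = centroid([landmarks[i] for i in RIGHT_EYE])
    angle = math.degrees(math.atan2(ry - ly, rx - lx))
    return angle, ((lx + rx) / 2, (ly + ry) / 2)
```

With dlib and OpenCV the pieces would connect roughly like this (not tested here): build `landmarks = [(shape.part(i).x, shape.part(i).y) for i in range(68)]`, call `angle, center = eye_alignment(landmarks)`, then rotate with `M = cv2.getRotationMatrix2D(center, angle, 1.0)` and `cv2.warpAffine(img, M, (img.shape[1], img.shape[0]))`, whose positive angles mean counter-clockwise rotation. For cropping, `l, t, r, b = clamp_box(d.left(), d.top(), d.right(), d.bottom(), img.shape[1], img.shape[0])` followed by `img[t:b, l:r]`. If you'd rather not roll your own, dlib's Python bindings also provide `dlib.get_face_chip`, which crops and aligns a face from a predicted shape in one call.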

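Here, combining dlib with OpenCV, I wrote a real-time script that detects faces in each webcam frame and locates the 68 facial landmarks (eyebrows, eyes, nose, mouth and jawline). The script draws the landmarks; they are the points that alignment is computed from.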

```python
import time

import cv2
import dlib

# Open the default webcam (device 0)
vc = cv2.VideoCapture(0)

# dlib's built-in HOG + linear SVM frontal face detector
detector = dlib.get_frontal_face_detector()

# dlib's 68-point landmark predictor, using the model trained by the dlib author
predictor = dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")

while True:
    # Grab a single frame of video; stop if the camera returns nothing
    ret, img = vc.read()
    if not ret:
        break

    # OpenCV delivers BGR frames; dlib expects RGB
    rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

    start = time.time()
    # Upsample the image once (helps find smaller faces), no threshold adjustment.
    # run() also returns a confidence score and sub-detector index for each face.
    dets, scores, idx = detector.run(rgb, 1, 0)
    print("faces:", len(dets), "scores:", scores, "time: %.3fs" % (time.time() - start))

    for k, d in enumerate(dets):
        width = d.right() - d.left()
        height = d.bottom() - d.top()
        # print("face", k + 1, "area:", width * height)

        # Predict the 68 landmarks inside the detected face box
        shape = predictor(rgb, d)

        # Mark each of the 68 landmarks with a filled green dot on the displayed frame
        for i in range(68):
            cv2.circle(img, (shape.part(i).x, shape.part(i).y), 4, (0, 255, 0), -1)

    # Display the resulting image
    cv2.imshow('Video', img)

    # Hit 'q' on the keyboard to quit!
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release handle to the webcam
vc.release()
cv2.destroyAllWindows()
```

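The landmark model file, shape_predictor_68_face_landmarks.dat, has to be downloaded from the dlib website: http://dlib.net/files/

Once you have downloaded the model and placed it next to the script, copy the code into your IDE and it runs as-is.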
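On dlib.net/files the model is distributed as a bzip2 archive (shape_predictor_68_face_landmarks.dat.bz2), while dlib.shape_predictor needs the decompressed .dat file. On Windows without a bz2 tool, the Python standard library can unpack it. A minimal sketch; decompress_bz2 is a helper name I made up, not a dlib function:

```python
import bz2
import shutil
from pathlib import Path
from typing import Optional


def decompress_bz2(src: str, dst: Optional[str] = None) -> Path:
    """Decompress a .bz2 file and return the output path (hypothetical helper).

    By default the output sits next to the input with the .bz2 suffix
    dropped, e.g. shape_predictor_68_face_landmarks.dat.bz2 ->
    shape_predictor_68_face_landmarks.dat.
    """
    src_path = Path(src)
    dst_path = Path(dst) if dst else src_path.with_suffix("")
    # Stream in chunks so the whole model file is never held in memory at once
    with bz2.open(src_path, "rb") as fin, open(dst_path, "wb") as fout:
        shutil.copyfileobj(fin, fout)
    return dst_path


if __name__ == "__main__":
    print(decompress_bz2("shape_predictor_68_face_landmarks.dat.bz2"))
```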

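Face verification

Take your reference (template) photo plus a new photo of yourself and feed both into the model; that is all face verification needs. The model returns two 128-dimensional vectors, and the Euclidean distance between them measures how similar the faces are (the smaller the distance, the more alike). dlib's model is a ResNet based on ResNet-34 (with some layers removed and fewer filters per layer); I've tested it many times and it works well. To reach production quality, though, you'll have to tune it yourself.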
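The comparison step itself is just a Euclidean distance between two 128-D vectors plus a threshold. The sketch below uses plain Python lists so it runs without dlib; in real use the vectors would come from dlib (for example `dlib.face_recognition_model_v1(...).compute_face_descriptor(img, shape)`) or from `face_recognition.face_encodings`. The 0.6 cut-off is the rule of thumb from dlib's own example code, not a tuned value, and the function names are my own.

```python
import math
from typing import Sequence

# Rule of thumb from dlib's examples: descriptors closer than 0.6 usually
# belong to the same person. Lower it to reduce false accepts.
DEFAULT_THRESHOLD = 0.6


def face_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two face descriptors (smaller = more alike)."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    return math.dist(a, b)


def is_same_person(a: Sequence[float], b: Sequence[float],
                   threshold: float = DEFAULT_THRESHOLD) -> bool:
    """1:1 verification: accept when the distance is below the threshold."""
    return face_distance(a, b) < threshold


if __name__ == "__main__":
    # Toy 128-D vectors standing in for real descriptors
    id_photo = [0.0] * 128
    live_photo = [0.0] * 127 + [0.5]
    print(face_distance(id_photo, live_photo))   # 0.5
    print(is_same_person(id_photo, live_photo))  # True
```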

I've written a real-time face verification script as well. As usual, just copy it and run it.

```python
import cv2
import face_recognition
import numpy as np

# Real-time face recognition on live webcam video, adapted from the
# face_recognition project's webcam demo. Two basic performance tweaks:
#   1. Optionally process each frame at reduced resolution (SCALE below),
#      while still displaying it at full resolution
#   2. Only detect faces in every other frame of video
# OpenCV (cv2) is only needed to read from the webcam and draw the results;
# the face_recognition library itself does not require it.

# Get a reference to webcam #0 (the default one)
video_capture = cv2.VideoCapture(0)

# Load your reference ("template") photo and learn how to recognize it
known_image = face_recognition.load_image_file("cut.jpg")
known_locations = face_recognition.face_locations(known_image)
if not known_locations:
    raise SystemExit("No face found in cut.jpg")
known_face_encoding = face_recognition.face_encodings(known_image, known_locations)[0]

# Known face encodings and their display names; append more pairs
# (another person's encoding and name) to recognize more people
known_face_encodings = [known_face_encoding]
known_face_names = ["zh"]

# Processing scale: 1.0 = full resolution (the setting used here),
# 0.25 = quarter size (faster, but small or distant faces may be missed)
SCALE = 1.0
TOLERANCE = 0.5  # maximum face distance accepted as a match

# Initialize some variables
face_locations = []
face_names = []
process_this_frame = True

while True:
    # Grab a single frame of video; stop if the camera returns nothing
    ret, frame = video_capture.read()
    if not ret:
        break

    # Only process every other frame of video to save time
    if process_this_frame:
        small_frame = cv2.resize(frame, (0, 0), fx=SCALE, fy=SCALE)
        # Convert BGR (OpenCV) to RGB (face_recognition). Unlike a [:, :, ::-1]
        # view, cvtColor returns a contiguous array, which some dlib builds require.
        rgb_small_frame = cv2.cvtColor(small_frame, cv2.COLOR_BGR2RGB)

        # Find all the faces and face encodings in the current frame of video
        face_locations = face_recognition.face_locations(rgb_small_frame)
        face_encodings = face_recognition.face_encodings(rgb_small_frame, face_locations)

        face_names = []
        for face_encoding in face_encodings:
            # See if the face is a match for the known face(s)
            matches = face_recognition.compare_faces(known_face_encodings, face_encoding, tolerance=TOLERANCE)
            # Use the known face with the smallest distance to the new face
            face_distances = face_recognition.face_distance(known_face_encodings, face_encoding)
            print("face_distances:", face_distances)
            best_match_index = np.argmin(face_distances)
            name = known_face_names[best_match_index] if matches[best_match_index] else "Unknown"
            face_names.append(name)

    process_this_frame = not process_this_frame

    # Display the results
    for (top, right, bottom, left), name in zip(face_locations, face_names):
        # Scale face locations back up, since detection ran on the scaled frame
        top, right, bottom, left = (int(v / SCALE) for v in (top, right, bottom, left))

        # Draw a box around the face
        cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

        # Draw a label with a name below the face
        cv2.rectangle(frame, (left, bottom - 35), (right, bottom), (0, 0, 255), cv2.FILLED)
        font = cv2.FONT_HERSHEY_DUPLEX
        cv2.putText(frame, name, (left + 6, bottom - 6), font, 1.0, (255, 255, 255), 1)

    # Display the resulting image
    cv2.imshow('Video', frame)

    # Hit 'q' on the keyboard to quit!
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release handle to the webcam
video_capture.release()
cv2.destroyAllWindows()
```

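This script actually uses face_recognition, a higher-level library built on top of dlib. Run pip install face_recognition first, save your reference photo next to the script as cut.jpg, and it's ready to run.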
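Inside the loop, the script compares each detected face against every known encoding and keeps the nearest one only if it is within tolerance=0.5; face_recognition.compare_faces amounts to checking face_distance <= tolerance. Here is that decision rewritten as a pure-Python sketch (identify is a hypothetical helper name, not a face_recognition API), which also shows how a dictionary of names scales the demo to several people:

```python
import math
from typing import Dict, Sequence


def identify(known: Dict[str, Sequence[float]], candidate: Sequence[float],
             tolerance: float = 0.5, unknown: str = "Unknown") -> str:
    """Return the name of the nearest known encoding, or `unknown` (hypothetical helper).

    Mirrors the demo's logic: find the known face with the smallest
    Euclidean distance, then accept it only if distance <= tolerance.
    """
    best_name, best_dist = unknown, math.inf
    for name, encoding in known.items():
        dist = math.dist(encoding, candidate)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= tolerance else unknown


if __name__ == "__main__":
    known = {"zh": [0.0] * 128, "colleague": [1.0] * 128}
    print(identify(known, [0.0] * 127 + [0.3]))  # zh
    print(identify(known, [0.5] * 128))          # Unknown
```

A lower tolerance makes matching stricter (fewer false matches, more "Unknown" labels); for reference, face_recognition's own default for compare_faces is 0.6.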

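As for the face verification code I wrote directly against dlib, I've turned it into a service that my team already uses in several places; it further optimizes dlib's face recognition to the point where it can go into production. If you want the full code, follow "暮秋梵星" and reply "dlib" to receive production-ready face recognition code.

That's all for today. More Python tips are on the way; stay tuned.

If this helps you even a little, that's what keeps me writing.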