[PyTorch] Neural Networks - Convolution Layers - Study Notes

Video link

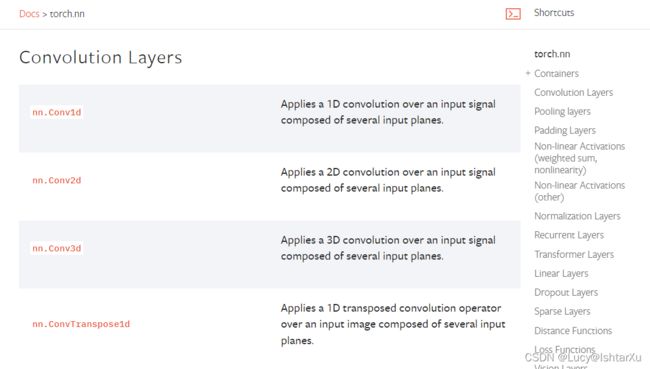

Open the Convolution Layers page of the official PyTorch documentation.

The most commonly used layer is nn.Conv2d. Click on it:

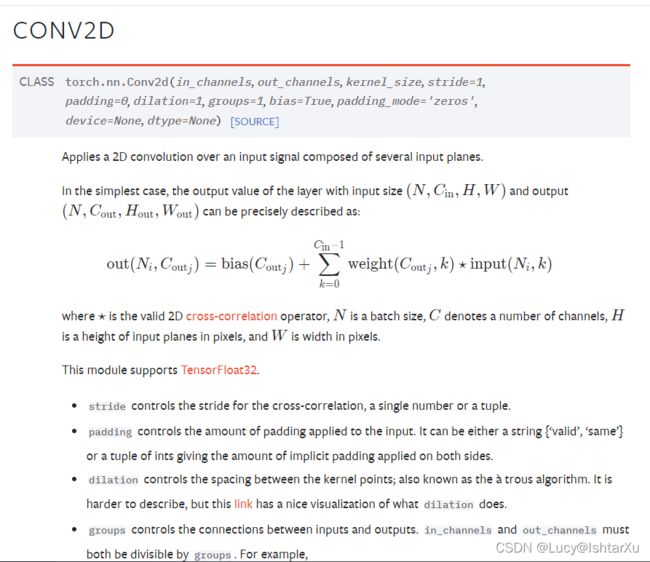

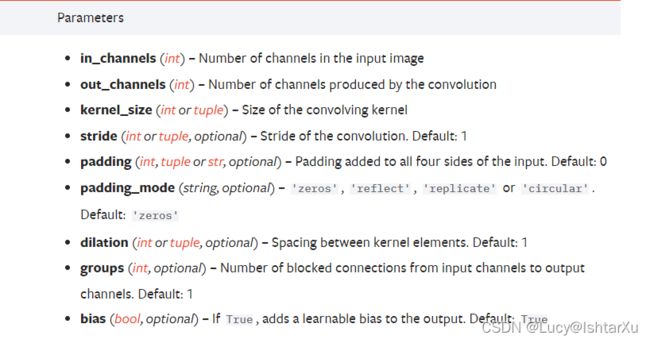

```
class torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)
```
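To make the signature concrete, here is a plain-Python sketch of what Conv2d computes for one sample: each output channel slides its kernel over all input channels, multiplies element-wise, sums, and adds a bias (strictly a cross-correlation; the kernel is not flipped). It is a simplified sketch assuming square kernels, dilation=1, groups=1 and zero padding; the name `naive_conv2d` and the nested-list layout `[channel][row][col]` are hypothetical, not PyTorch API.

```python
def naive_conv2d(x, weight, bias, stride=1, padding=0):
    """Hypothetical sketch of a Conv2d forward pass on one sample (no batch dim).

    x:      input, nested lists of shape [C_in][H][W]
    weight: kernels, nested lists of shape [C_out][C_in][K][K] (square kernels)
    bias:   one value per output channel, length C_out
    Assumes dilation=1, groups=1 and zero padding.
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0][0])

    # Zero-pad every input channel on all four sides.
    padded = [
        [[0.0] * (w + 2 * padding) for _ in range(padding)]
        + [[0.0] * padding + list(row) + [0.0] * padding for row in channel]
        + [[0.0] * (w + 2 * padding) for _ in range(padding)]
        for channel in x
    ]

    h_out = (h + 2 * padding - k) // stride + 1
    w_out = (w + 2 * padding - k) // stride + 1

    out = []
    for oc in range(len(weight)):
        plane = []
        for i in range(h_out):
            row = []
            for j in range(w_out):
                # Window starts at (i*stride, j*stride); sum over all input channels.
                acc = bias[oc]
                for ic in range(c_in):
                    for di in range(k):
                        for dj in range(k):
                            acc += weight[oc][ic][di][dj] * padded[ic][i * stride + di][j * stride + dj]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


# Usage: one 3x3 input channel, one 2x2 kernel of ones, zero bias.
x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]
w = [[[[1, 1], [1, 1]]]]
print(naive_conv2d(x, w, bias=[0]))  # [[[12, 16], [24, 28]]]
```

A 3-channel 32×32 input with six 3×3 kernels gives a 6×30×30 result with this sketch, matching the shapes printed further down.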

Some parameters that are less obvious:

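- groups: grouped convolution, which splits the input and output channels into independent groups; rarely needed in basic models

- bias: whether to add a learnable bias to the output; usually left as True (the default)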
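One way to see what groups and bias do is to look at the parameter count. The PyTorch Conv2d documentation gives the weight shape as (out_channels, in_channels / groups, kernel_h, kernel_w) and the bias shape as (out_channels,). A minimal sketch, assuming a square kernel; `conv2d_param_count` is a hypothetical helper, not part of PyTorch:

```python
def conv2d_param_count(in_channels, out_channels, kernel_size, groups=1, bias=True):
    """Hypothetical helper: number of learnable parameters in a Conv2d layer.

    Assumes a square kernel. Weight shape per the docs:
    (out_channels, in_channels // groups, kernel_size, kernel_size);
    bias, if enabled, has shape (out_channels,).
    """
    if in_channels % groups or out_channels % groups:
        raise ValueError("in_channels and out_channels must both be divisible by groups")
    weight = out_channels * (in_channels // groups) * kernel_size * kernel_size
    return weight + (out_channels if bias else 0)


print(conv2d_param_count(3, 6, 3))              # 168: 6*3*3*3 weights + 6 biases
print(conv2d_param_count(3, 6, 3, groups=3))    # 60: each kernel sees only 1 input channel
print(conv2d_param_count(3, 6, 3, bias=False))  # 162
```

With groups=3, each group of 2 output channels only looks at 1 of the 3 input channels, so the weight count drops to a third.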

Write some code to initialize the model:

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader

# CIFAR-10 test split as tensors; download=True fetches it if ./dataset is empty
dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # Assigning the layer to self registers it as a submodule and makes it usable in other methods such as forward()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x

model = Model()
print(model)
```

The output is:

```
D:\Anaconda3\envs\pytorch\python.exe D:/研究生/代码尝试/nn_conv2d.py
Model(
  (conv1): Conv2d(3, 6, kernel_size=(3, 3), stride=(1, 1))
)

Process finished with exit code 0
```

Next, feed the data from the dataloader, i.e. the images, into the model:

```python
for data in dataloader:
    imgs, targets = data
    output = model(imgs)
    print(imgs.shape)
    print(output.shape)
```

The output (first two lines) is:

```
torch.Size([64, 3, 32, 32])
torch.Size([64, 6, 30, 30])
```

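As you can see, the batch size here is 64, the input has 3 channels, and after the convolution there are 6 channels. The height and width shrink from 32 to 30 because a 3×3 kernel with stride 1 and no padding fits into 32 - 3 + 1 = 30 positions along each dimension. The convolution kernels do not need to be initialized by hand; PyTorch initializes their weights automatically.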
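Both observations can be checked with a little arithmetic. The sketch below uses the output-size formula from the PyTorch Conv2d documentation and the default initialization range the same page states (weights and biases drawn from U(-√k, √k) with k = groups / (C_in · kernel_h · kernel_w)). The function names are hypothetical and a square kernel is assumed.

```python
import math


def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Hypothetical helper: output height (or width) of Conv2d, per the formula in the PyTorch docs."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def default_init_bound(in_channels, kernel_size, groups=1):
    """Hypothetical helper: sqrt(k), the half-width of Conv2d's default uniform init range.

    k = groups / (in_channels * kernel_size * kernel_size), square kernel assumed.
    """
    k = groups / (in_channels * kernel_size * kernel_size)
    return math.sqrt(k)


print(conv2d_out_size(32, 3))              # 30, as in the printed shape
print(conv2d_out_size(32, 3, padding=1))   # 32: padding=1 keeps the size
print(conv2d_out_size(32, 3, stride=2))    # 15
print(default_init_bound(3, 3))            # about 0.192, i.e. 1 / sqrt(27)
```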

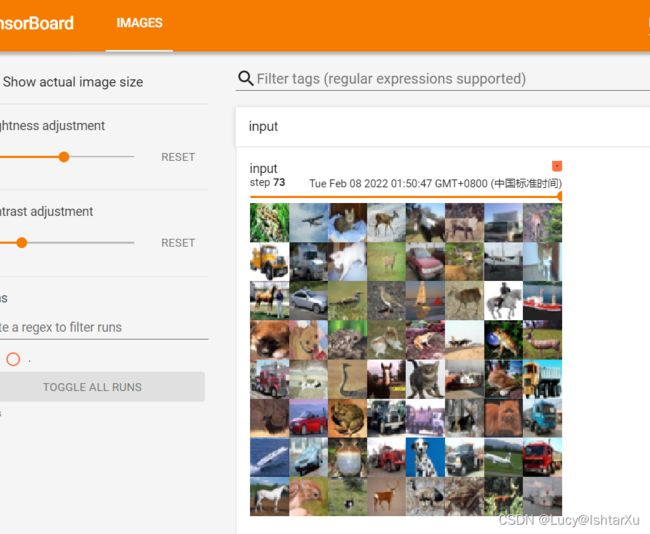

Now visualize the results with TensorBoard. Here is the complete code:

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# CIFAR-10 test split as tensors; download=True fetches it if ./dataset is empty
dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # Assigning the layer to self registers it as a submodule and makes it usable in forward()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x

model = Model()
writer = SummaryWriter("./logs")

step = 0
for data in dataloader:
    imgs, targets = data
    output = model(imgs)
    print(imgs.shape)
    print(output.shape)
    # Input size: torch.Size([64, 3, 32, 32])
    writer.add_images("input", imgs, step)
    # Output size: torch.Size([64, 6, 30, 30]), but 6-channel images cannot be displayed,
    # so reshape to 3 channels: [64, 6, 30, 30] -> [xxx, 3, 30, 30]
    # (-1 lets PyTorch infer the first dimension)
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step = step + 1

writer.close()  # flush the remaining events to ./logs
```
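The reshape only regroups channels into extra images: each sample's 6 feature maps become two 3-channel "images", so the total element count is unchanged and 64 samples turn into 128 images. CIFAR-10's test split has 10,000 images, so with batch_size=64 the loop runs 157 times and, since DataLoader keeps the partial batch by default (drop_last=False), the last batch holds only 16 images, which reshape to 32. A small pure-Python sketch of that arithmetic; `reshaped_batch_size` is a hypothetical helper:

```python
import math


def reshaped_batch_size(batch_size, channels, target_channels=3):
    """Hypothetical helper: the first dimension torch.reshape infers for (-1, target_channels, H, W).

    The batch_size * channels feature maps must split evenly into groups of
    target_channels; otherwise torch.reshape would raise an error.
    """
    total_maps = batch_size * channels
    if total_maps % target_channels:
        raise ValueError("feature maps cannot be split evenly into 3-channel images")
    return total_maps // target_channels


num_images = 10000  # size of the CIFAR-10 test split
batch_size = 64
num_batches = math.ceil(num_images / batch_size)  # partial last batch is kept
last_batch = num_images - (num_batches - 1) * batch_size

print(num_batches, last_batch)             # 157 16
print(reshaped_batch_size(batch_size, 6))  # 128
print(reshaped_batch_size(last_batch, 6))  # 32
```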

As usual, start TensorBoard:

```
tensorboard --logdir=logs
```