sklearn-SVM 模型保存、交叉验证与网格搜索

目录

一、sklearn-SVM

1、SVM模型训练

2、SVM模型参数输出

3、SVM模型保存与读取

二、交叉验证与网络搜索

1、交叉验证

1)、k折交叉验证(Standard Cross Validation)

2)、留一法交叉验证(leave-one-out)

3)、打乱划分交叉验证(shufflfle-split cross-validation)

2、交叉验证与网络搜索

1)简单网格搜索: 遍历法

2)其他情况

一、sklearn-SVM

1、SVM模型训练

需要注意的是,我们传入的数据是2维度的(数量,特征),标签是一维度的。

示例代码:

# -*- coding: utf-8 -*-

from __future__ import print_function

import numpy as np

from sklearn.svm import SVC

from sklearn import metrics

# --------------------加载数据---------------------

Xtrain = np.load("./data/data/3D/svm训练x.npy")

ytrain = np.load("./data/data/3D/svm训练y.npy")

Xtest = np.load("./data/data/3D/svm测试x.npy")

ytest = np.load("./data/data/3D/svm测试y.npy")

# 调用SVC, 即SVM

clf = SVC(C=10, decision_function_shape='ovr', kernel='rbf')

# 训练

clf.fit(Xtrain,ytrain)

# 预测

predicted=clf.predict(Xtest)

print('预测值:',predicted[0:10]) # 输出结果是对应数据的标签

print('实际值:',ytest[0:10])

# 获取训练集的准确率

train_score = clf.score(Xtrain,ytrain)

print("训练集:",train_score)

# 获取验证集的准确率

test_score = clf.score(Xtest,ytest)

print("测试集:",test_score)

# 采用混淆矩阵(metrics)计算各种评价指标

print('精准值:',metrics.precision_score(ytest, predicted, average='weighted'))

print('召回率:',metrics.recall_score(ytest, predicted, average='weighted'))

print('F1:',metrics.f1_score(ytest, predicted, average='weighted'))

print("准确率:",np.mean(ytest == predicted))

# 分类报告

class_report=metrics.classification_report(ytest, predicted, target_names=["class 1","class 2","class 3",'class 4'])

print(class_report)

# 输出混淆矩阵

confusion_matrix=metrics.confusion_matrix(ytest, predicted)

print('--混淆矩阵--')

print(confusion_matrix)输出:

# 预测值: [1. 1. 1. 1. 1. 1. 1. 0. 1. 1.]

# 实际值: [1. 1. 1. 1. 1. 1. 1. 0. 1. 0.]

# 训练集: 0.8335901386748844

# 测试集: 0.8317925012840267

# 精准值: 0.8288307806194355

# 召回率: 0.8317925012840267

# F1: 0.8211867046380255

# 准确率: 0.8317925012840267

# precision recall f1-score support

#

# class 1 0.81 0.56 0.66 1140

# class 2 0.84 0.95 0.89 2727

# class 3 0.00 0.00 0.00 3

# class 4 1.00 1.00 1.00 24

#

# avg / total 0.83 0.83 0.82 3894

#

# --混淆矩阵--

# [[ 637 503 0 0]

# [ 149 2578 0 0]

# [ 0 3 0 0]

# [ 0 0 0 24]]

#

# Process finished with exit code 02、SVM模型参数输出

直接print()即可。

示例代码:

# -*- coding: utf-8 -*-

from sklearn.svm import SVC

# 调用SVC, 即SVM

clf = SVC(C=10, decision_function_shape='ovr', kernel='rbf')

print(clf)

# 输出

# D:\anaconda3\python.exe D:/me-zt/0.py

# SVC(C=10, cache_size=200, class_weight=None, coef0=0.0,

# decision_function_shape='ovr', degree=3, gamma='auto', kernel='rbf',

# max_iter=-1, probability=False, random_state=None, shrinking=True,

# tol=0.001, verbose=False)

#

# Process finished with exit code 0

3、SVM模型保存与读取

- joblib 方法保存

# -*- coding: utf-8 -*-

from __future__ import print_function

import numpy as np

from sklearn.svm import SVC

from sklearn.externals import joblib # jbolib模块

# 最新版本:import joblib

# --------------------加载数据---------------------

Xtrain = np.load("./data/data/3D/svm训练x.npy")

ytrain = np.load("./data/data/3D/svm训练y.npy")

Xtest = np.load("./data/data/3D/svm测试x.npy")

ytest = np.load("./data/data/3D/svm测试y.npy")

# 调用SVC, 即SVM

clf = SVC(C=10, decision_function_shape='ovr', kernel='rbf')

# 训练

clf.fit(Xtrain,ytrain)

# 预测

predicted=clf.predict(Xtest)

print('预测值:',predicted[0:10]) # 输出结果是对应数据的标签

print('实际值:',ytest[0:10])

# 保存Model

joblib.dump(clf, './clf.pkl')

# 读取Model

clf_1 = joblib.load('./clf.pkl')

# 测试读取后的Model

print(clf_1.predict(Xtest[0:10]))-

pickle 方法保存

有时候可能采用 joblib 保存的模型在读取的时候会出现错误。此时利用 pickle 方法保存读取正常。

from thundersvm import SVC

import pickle

svm_model = SVC(C=1, kernel = 'rbf')

# 保存模型

with open('./log/knn.pkl',"wb") as f:

pickle.dump(svm_model,f)

# 读取模型

with open('./log/knn_pickle.pkl',"rb") as f:

clf_1 = pickle.load(f)二、交叉验证与网络搜索

1、交叉验证

1)、k折交叉验证(Standard Cross Validation)

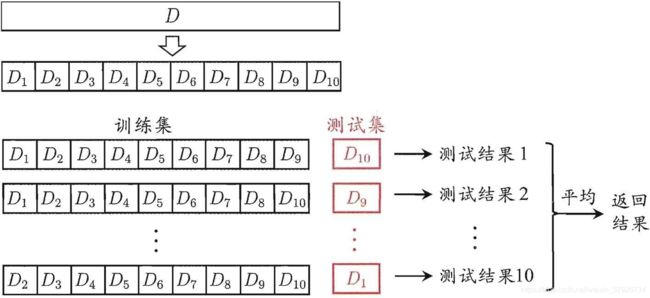

k通常取5或者10,如果取10,则表示再原始数据集上,进行10次划分,每次划分都进行以此训练、评估,对5次划分结果求取平均值作为最终的评价结果。10折交叉验证的原理图如下所示(引用地址:Python中sklearn实现交叉验证_嵌入式技术的博客-CSDN博客_sklearn 交叉验证):

代码:

# -*- coding: utf-8 -*-

from __future__ import print_function

import numpy as np

from sklearn.svm import SVC

from sklearn.model_selection import cross_val_score

# --------------------加载数据---------------------

Xtrain = np.load("./data/data/3D/svm训练x.npy")

ytrain = np.load("./data/data/3D/svm训练y.npy")

Xtest = np.load("./data/data/3D/svm测试x.npy")

ytest = np.load("./data/data/3D/svm测试y.npy")

# 调用SVC, 即SVM

clf = SVC(C=10, decision_function_shape='ovr', kernel='rbf')

# 以下指令中的cross_val_score自动数据进行k折交叉验证,

# 注意:cv默认是3,可以修改cv为5或10,即表示5或10折交叉验证,

scores = cross_val_score(clf,Xtrain,ytrain,n_jobs=5,cv=5) # 同时工作的cpu个数(-1代表全部)

# 打印分析结果,由于cv为5,即5折交叉验证,所以第一个输出为 5 个指标值

print("Cross validation scores:{}".format(scores))

print("Mean cross validation score:{:2f}".format(scores.mean()))

# 输出

# Cross validation scores:[0.81410256 0.83568678 0.81643132 0.83161954 0.83547558]

# Mean cross validation score:0.826663★ 引入交叉验证分离器(cross-validation splitter)方式 -分层k折交叉验证 ★

代码:

# -*- coding: utf-8 -*-

from __future__ import print_function

import numpy as np

from sklearn.svm import SVC

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import KFold

# --------------------加载数据---------------------

Xtrain = np.load("./data/data/3D/svm训练x.npy")

ytrain = np.load("./data/data/3D/svm训练y.npy")

Xtest = np.load("./data/data/3D/svm测试x.npy")

ytest = np.load("./data/data/3D/svm测试y.npy")

# 调用SVC, 即SVM

clf = SVC(C=10, decision_function_shape='ovr', kernel='rbf')

# 引入交叉分离器

kfold = KFold(n_splits=5) # 不分层k折交叉验证,此处为 5

# kfold = KFold(n_splits=5, shuffle=True, random_state=0) # 将数据打乱来代替分层的方式

scores = cross_val_score(clf,Xtrain,ytrain,cv=kfold)

# for train, test in kfold.split(X=Xtrain):

# print("%s-%s" % (train, test))2)、留一法交叉验证(leave-one-out)

★ 引入交叉验证分离器(cross-validation splitter)方式 -分层k折交叉验证 ★

代码:

# -*- coding: utf-8 -*-

from __future__ import print_function

import numpy as np

from sklearn.svm import SVC

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import LeaveOneOut

# --------------------加载数据---------------------

Xtrain = np.load("./data/data/3D/svm训练x.npy")

ytrain = np.load("./data/data/3D/svm训练y.npy")

Xtest = np.load("./data/data/3D/svm测试x.npy")

ytest = np.load("./data/data/3D/svm测试y.npy")

# 调用SVC, 即SVM

clf = SVC(C=10, decision_function_shape='ovr', kernel='rbf')

# 引入交叉分离器

loo = LeaveOneOut()

scores = cross_val_score(clf,Xtrain,ytrain,cv=loo)3)、打乱划分交叉验证(shufflfle-split cross-validation)

★ 引入交叉验证分离器(cross-validation splitter)方式 -分层k折交叉验证 ★

在打乱划分交叉验证中,每次划分为训练集取样 train_size 个点,为测试集取样 test_size 个(不相交的)点。重复 n_iter 次。

示例图:

![]()

代码:

# -*- coding: utf-8 -*-

from __future__ import print_function

import numpy as np

from sklearn.svm import SVC

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import ShuffleSplit

# --------------------加载数据---------------------

Xtrain = np.load("./data/data/3D/svm训练x.npy")

ytrain = np.load("./data/data/3D/svm训练y.npy")

Xtest = np.load("./data/data/3D/svm测试x.npy")

ytest = np.load("./data/data/3D/svm测试y.npy")

# 调用SVC, 即SVM

clf = SVC(C=10, decision_function_shape='ovr', kernel='rbf')

# 引入交叉分离器

shuffle_split = ShuffleSplit(test_size=1000, train_size=10, n_splits=10)

scores = cross_val_score(clf,Xtrain,ytrain,cv=shuffle_split)2、交叉验证与网络搜索

1)简单网格搜索: 遍历法

代码:

import numpy as np

import pandas as pd

from sklearn.model_selection import GridSearchCV

from sklearn.svm import SVC

# --------------------加载数据---------------------

Xtrain = np.load("./data/data/3D/svm训练x.npy")

ytrain = np.load("./data/data/3D/svm训练y.npy")

Xtest = np.load("./data/data/3D/svm测试x.npy")

ytest = np.load("./data/data/3D/svm测试y.npy")

#调用SVC()

clf = SVC(decision_function_shape='ovr', kernel='rbf')

# 这里要实验的超参数有两个 3 个 svg__gama 和 3 个 svg__C 一共 9 种组合

param_grid = {'C': [0.1, 1, 100], 'gamma': [0.1, 10, 100]}

# 创建一个网格搜索: 9 组参数组合,5 折交叉验证

grid_search = GridSearchCV(clf, param_grid, cv=5)

# 开始搜索

grid_search.fit(Xtrain,ytrain)

grid_search.score(Xtrain,ytrain) # 输出精度

# grid_search.best_params_ 获得最优参数

# grid_search.best_score_ 保存的是交叉验证的平均精度,是在训练集上进行交叉验证得到的

print(grid_search.best_params_,grid_search.best_score_) # 最优参数

print(grid_search.best_estimator_) # 访问最佳参数对应的模型,它是在整个训练集上训练得到的

results = pd.DataFrame(grid_search.cv_results_) # 网格搜索的结果,将字典转换成 pandas 数据框后再查看

print(results)

# ★★★以下为最优模型在测试集上的得分以及模型保存★★★

# 获取最优模型

optimal_SVM = grid_search.best_estimator_

# 预测

predicted = optimal_SVM.predict(Xtest)

print('预测值:', predicted[0:21]) # 输出结果是对应数据的标签

print('实际值:', ytest[0:21])

# 获取验证集的准确率

test_score = optimal_SVM.score(Xtest, ytest)

print("测试集:", test_score)

# 保存Model

joblib.dump(optimal_SVM, './optimal_SVM.pkl')输出:

# {'C': 100, 'gamma': 0.1} 0.8703133025166924

#

# SVC(C=100, cache_size=200, class_weight=None, coef0=0.0,

# decision_function_shape='ovr', degree=3, gamma=0.1, kernel='rbf',

# max_iter=-1, probability=False, random_state=None, shrinking=True,

# tol=0.001, verbose=False)

#

# mean_fit_time ... std_train_score

# 0 12.477704 ... 0.002534

# 1 35.585408 ... 0.000101

# 2 35.656311 ... 0.000101

# 3 10.782130 ... 0.001715

# 4 47.109424 ... 0.000000

# 5 49.324211 ... 0.000000

# 6 10.869440 ... 0.001241

# 7 49.921145 ... 0.000000

# 8 50.169114 ... 0.000000

#

# [9 rows x 22 columns]

#

# Process finished with exit code 02)其他情况

- 在非网格的空间中搜索

GridSearchCV 的 param_grid 可以是字典组成的列表(a list of dictionaries)。列表中的每个字典可扩展为一个独立的网格。

param_grid =

[{'kernel': ['rbf'],'C': [0.001, 0.01, 0.1, 1, 10, 100],'gamma': [0.001, 0.01, 0.1, 1, 10, 100]},{'kernel': ['linear'],'C': [0.001, 0.01, 0.1, 1, 10, 100]}]- 使用不同的交叉验证策略进行网格搜索

将数据单次划分为训练集和测试集,这可能会导致结果不稳定,过于依赖数据的此次划分。

嵌套交叉验证(nested cross-validation):不是只将原始数据一次划分为训练集和测试集,而是使用交叉验证进行多次划分。

scores = cross_val_score(GridSearchCV(SVC(), param_grid, cv=5), iris.data, iris.target, cv=5)参考:

1、https://blog.csdn.net/xylbill97/article/details/106012517

2、https://blog.csdn.net/Suyebiubiu/article/details/102985349

3、K折交叉验证(KFold)常见使用方法_微学苑