Implementation with TensorFlow

# Load packages (the code below uses the TensorFlow 1.x graph API: tf.placeholder and tf.Session)

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

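# Define hyperparameters, i.e. settings chosen before training rather than learned by the model (for example the learning rate, the number of hidden-layer neurons, and the batch size)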

learning_rate = 0.01

max_train_steps = 1000

log_step = 5

# Training set

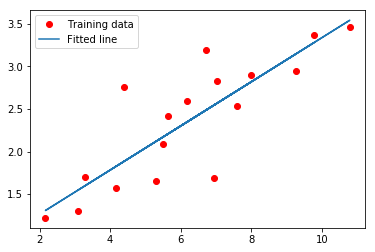

train_X = np.array([[3.3],[4.4],[5.5],[6.71],[6.93],[4.168],[9.779],[6.182],[7.59],[2.167], [7.042],[10.791],[5.313],[7.997],[5.654],[9.27],[3.1]], dtype=np.float32)

train_Y = np.array([[1.7],[2.76],[2.09],[3.19],[1.694],[1.573],[3.366],[2.596],[2.53], [1.221],[2.827],[3.465],[1.65],[2.904],[2.42],[2.94],[1.3]], dtype=np.float32)

total_samples = train_X.shape[0]

# Build the model

# Input data

X = tf.placeholder(tf.float32, [None, 1])

# Model parameters

W = tf.Variable(tf.random_normal([1,1]), name="weight")

b = tf.Variable(tf.zeros([1]), name="bias")

# Predicted value

Y = tf.matmul(X, W) + b

# Actual value

Y_ = tf.placeholder(tf.float32, [None, 1])

# Mean squared error loss

loss = tf.reduce_sum(tf.pow(Y-Y_, 2)) / (total_samples)

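# Gradient descent optimizer (the whole training set is fed at every step, so this is batch rather than stochastic gradient descent)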

optimizer = tf.train.GradientDescentOptimizer(learning_rate)

# Minimize the loss

train_op = optimizer.minimize(loss)
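To make the role of `train_op` more concrete, here is a rough pure-Python sketch of what one gradient descent update on this mean squared error loss amounts to. It is not how TensorFlow implements `minimize` internally; the helper names `mse` and `gradient_step` are illustrative, and W and b are assumed to start at 0.0, whereas the code above draws the initial W from a normal distribution.

```python
# Pure-Python sketch of batch gradient descent on the MSE loss.
# Assumption: w and b start at 0.0 (the TensorFlow code initializes W randomly).
xs = [3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167,
      7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1]
ys = [1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53,
      1.221, 2.827, 3.465, 1.65, 2.904, 2.42, 2.94, 1.3]
n = len(xs)
learning_rate = 0.01
max_train_steps = 1000


def mse(w, b):
    """Mean squared error: sum((w*x + b - y)^2) / n, the same quantity as `loss`."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def gradient_step(w, b):
    """One update: move w and b against the gradient of the MSE."""
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n  # d(loss)/dw
    grad_b = 2.0 * sum(errors) / n                             # d(loss)/db
    return w - learning_rate * grad_w, b - learning_rate * grad_b


w, b = 0.0, 0.0
initial_loss = mse(w, b)
for _ in range(max_train_steps):
    w, b = gradient_step(w, b)
final_loss = mse(w, b)
print("loss %.4f -> %.4f, w=%.4f, b=%.4f" % (initial_loss, final_loss, w, b))
```

Because the starting point differs from the random initialization used with TensorFlow, the printed numbers will not match a TensorFlow run exactly, but the loss should fall in the same way.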

with tf.Session() as sess:

    # Initialize all global variables

    sess.run(tf.global_variables_initializer())

    print("Start training:")

    # Train step by step

    for step in range(max_train_steps):

        sess.run(train_op, feed_dict={X: train_X, Y_: train_Y})

        # Logging: every log_step steps, print the loss, weight, and bias

        if step % log_step == 0:

            c = sess.run(loss, feed_dict={X: train_X, Y_: train_Y})

            print("Step%d:%d, loss==%.4f, W==%.4f, b==%.4f"%(step, max_train_steps, c, sess.run(W), sess.run(b)))

    # Final loss

    final_loss = sess.run(loss, feed_dict={X: train_X, Y_: train_Y})

    print("Step:%d, loss==%.4f, W==%.4f, b==%.4f"%(max_train_steps, final_loss, sess.run(W), sess.run(b)))

    # Final model

    weight, bias = sess.run([W, b])

    print("Linear Regression Model: Y==%.4f*X+%.4f"%(weight, bias))

Start training:

Step0:1000, loss==1.1118, W==0.1942, b==0.1869

Step5:1000, loss==0.1962, W==0.3341, b==0.2140

Step10:1000, loss==0.1952, W==0.3331, b==0.2210

Step15:1000, loss==0.1942, W==0.3321, b==0.2280

Step20:1000, loss==0.1932, W==0.3312, b==0.2349

Step25:1000, loss==0.1923, W==0.3302, b==0.2417

Step30:1000, loss==0.1914, W==0.3293, b==0.2484

Step35:1000, loss==0.1905, W==0.3283, b==0.2551

......

Step965:1000, loss==0.1543, W==0.2596, b==0.7421

Step970:1000, loss==0.1542, W==0.2595, b==0.7428

Step975:1000, loss==0.1542, W==0.2594, b==0.7435

Step980:1000, loss==0.1542, W==0.2593, b==0.7441

Step985:1000, loss==0.1542, W==0.2593, b==0.7448

Step990:1000, loss==0.1542, W==0.2592, b==0.7454

Step995:1000, loss==0.1542, W==0.2591, b==0.7461

Step:1000, loss==0.1542, W==0.2590, b==0.7466

Linear Regression Model: Y==0.2590*X+0.7466
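In the log, the loss is still creeping down and b is still rising at step 1000, which suggests that gradient descent has not fully converged. As a cross-check, a line fitted to a single feature has a closed-form least-squares solution. The sketch below computes it with the standard library only and compares its loss with the values reported in the log; the names `mse`, `w_ols`, `b_ols`, `w_gd`, and `b_gd` are illustrative and do not appear in the original code.

```python
# Closed-form simple linear regression (ordinary least squares), standard library only.
xs = [3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167,
      7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1]
ys = [1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53,
      1.221, 2.827, 3.465, 1.65, 2.904, 2.42, 2.94, 1.3]
n = len(xs)


def mse(w, b):
    """Mean squared error of the line y = w*x + b on the training set."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


mean_x = sum(xs) / n
mean_y = sum(ys) / n

# Slope = covariance(x, y) / variance(x); the intercept makes the line pass through the means.
s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
s_xx = sum((x - mean_x) ** 2 for x in xs)
w_ols = s_xy / s_xx
b_ols = mean_y - w_ols * mean_x

# Values reported at step 1000 in the training log
w_gd, b_gd = 0.2590, 0.7466

print("closed form:      W=%.4f, b=%.4f, loss=%.4f" % (w_ols, b_ols, mse(w_ols, b_ols)))
print("gradient descent: W=%.4f, b=%.4f, loss=%.4f" % (w_gd, b_gd, mse(w_gd, b_gd)))
```

If the two losses are close, the trained model is already near the best possible line, and more steps or a different learning rate would mainly refine b.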

# Visualization

%matplotlib inline

plt.plot(train_X, train_Y, 'ro', label='Training data')

plt.plot(train_X, weight * train_X + bias, label='Fitted line')

# Add a legend

plt.legend()

# Show the figure

plt.show()