Common Convolutional Networks in TensorFlow (Residual Network, ResNet)

Common networks in TensorFlow

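Contents

- Common networks in TensorFlow

- 1. Overview of common convolutional networks in TensorFlow

- 2. The role of 1x1 convolution kernels

- 3. ResNet (Residual Network)

- 4. CIFAR100 in practice (based on ResNet18)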

1. Overview of common convolutional networks in TensorFlow

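- LeNet-5: 2 convolutional layers, 2 subsampling (downsampling) layers, 3 fully connected layers

- AlexNet: a deeper network that uses max-pooling layers in place of LeNet's subsampling layers

- VGG: computationally expensive; stacks of convolution + pooling layers followed by fully connected layers (like the network in the earlier "convolutional neural networks in TensorFlow in practice" example)

- GoogLeNet: applies convolution kernels of different sizes in parallel within a single layer

- ResNet: effectively solves the training difficulty caused by adding layers (as the network gets deeper, gradients from the later layers have trouble propagating back to the earlier layers)

- DenseNet: whereas a ResNet shortcut connects a layer to only one earlier layer, DenseNet connects each layer to all preceding layers (within a dense block), concatenating their feature maps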
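The mechanisms that distinguish GoogLeNet and DenseNet in the list above can be sketched in a few lines of Keras. This is a simplified illustration, not the published configurations: the function names `inception_like` and `dense_like`, the filter counts and the growth rate are hypothetical, and the real DenseNet uses BN-ReLU-Conv units plus transition layers.

```python
import tensorflow as tf
from tensorflow.keras import layers


def inception_like(x, f1=16, f3=16, f5=16, fpool=16):
    """Simplified Inception-style module: kernels of different sizes in parallel."""
    b1 = layers.Conv2D(f1, 1, padding='same', activation='relu')(x)
    b3 = layers.Conv2D(f3, 3, padding='same', activation='relu')(x)
    b5 = layers.Conv2D(f5, 5, padding='same', activation='relu')(x)
    bp = layers.MaxPool2D(3, strides=1, padding='same')(x)
    bp = layers.Conv2D(fpool, 1, padding='same', activation='relu')(bp)
    # All branches keep the same height and width, so they can be
    # concatenated along the channel axis
    return layers.concatenate([b1, b3, b5, bp], axis=-1)


def dense_like(x, num_layers=3, growth=12):
    """Simplified DenseNet-style block: each layer sees all earlier feature maps."""
    features = [x]
    for _ in range(num_layers):
        # concatenate needs at least two inputs, so the first layer uses x directly
        inp = layers.concatenate(features, axis=-1) if len(features) > 1 else features[0]
        out = layers.Conv2D(growth, 3, padding='same', activation='relu')(inp)
        features.append(out)
    return layers.concatenate(features, axis=-1)


x = tf.random.normal([2, 32, 32, 8])
print(inception_like(x).shape)  # (2, 32, 32, 64): 4 branches x 16 channels
print(dense_like(x).shape)      # (2, 32, 32, 44): 8 + 3 * 12 channels
```

Unlike ResNet, which adds the shortcut to the output, DenseNet concatenates, so the channel count grows by `growth` with every layer in the block.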

2. The role of 1x1 convolution kernels

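- Reducing or increasing dimensionality: a 1x1 convolution changes only the number of channels, leaving height and width unchanged (with stride 1)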
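To see that a 1x1 convolution only mixes channels, the sketch below compares a Keras `Conv2D` with a 1x1 kernel against a plain per-pixel matrix multiplication written with `tf.einsum`. The input shape and the filter count are arbitrary choices for illustration.

```python
import tensorflow as tf
from tensorflow.keras import layers

x = tf.random.normal([4, 32, 32, 64])  # [batch, height, width, channels]

# 1x1 convolution reducing 64 channels to 16 (no bias, to keep the comparison simple)
conv = layers.Conv2D(16, kernel_size=1, use_bias=False)
y = conv(x)
print(y.shape)  # (4, 32, 32, 16): height and width unchanged, channels 64 -> 16

# The kernel has shape (1, 1, 64, 16); at every pixel it acts as a 64x16 matrix
w = conv.get_weights()[0][0, 0]
manual = tf.einsum('bhwc,cd->bhwd', x, w)
print(float(tf.reduce_max(tf.abs(y - manual))))  # close to 0 (rounding error only)
```

The layer has only 64 * 16 = 1024 weights, which is why 1x1 convolutions are a cheap way to shrink the channel count before an expensive 3x3 or 5x5 convolution.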

3. ResNet (Residual Network)

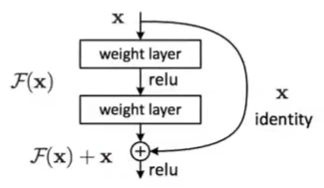

- Network structure: shown in the figure above.

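- Basic idea: as layers are added, accuracy does not keep improving (this starts to happen at around 22 layers). ResNet adds a shortcut connection around the layers beyond the maximum trainable depth. When those extra layers train poorly, the network can effectively ignore them, so it keeps at least the accuracy reached at that depth limit; when the extra layers do learn something useful, the network exceeds that accuracy.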

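- Meaning of "residual": let H(x) be the mapping from the input x to the block's final output, and F(x) the function computed by the convolutional layers in between. Because the shortcut adds x back, H(x) = F(x) + x, so the stacked layers only have to learn the residual $F(x) = H(x) - x$.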
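A quick numerical check of H(x) = F(x) + x: if the layers computing F(x) output zero (forced here by zero-initialized weights, an artificial setting chosen only for illustration), the block passes its input through unchanged, which is how extra layers can be "ignored". The input is made non-negative because this sketch applies ReLU after the addition, as the BasicBlock code in this section does.

```python
import tensorflow as tf
from tensorflow.keras import layers

# F(x): two 3x3 convolutions whose kernels are zero (biases default to zero too)
f = tf.keras.Sequential([
    layers.Conv2D(8, (3, 3), padding='same', kernel_initializer='zeros'),
    layers.Conv2D(8, (3, 3), padding='same', kernel_initializer='zeros'),
])

# Non-negative input, e.g. the output of an earlier ReLU
x = tf.nn.relu(tf.random.normal([2, 16, 16, 8]))

fx = f(x)                # F(x) = 0 everywhere
hx = tf.nn.relu(fx + x)  # H(x) = relu(F(x) + x)
print(float(tf.reduce_max(tf.abs(fx))))      # 0.0
print(float(tf.reduce_max(tf.abs(hx - x))))  # 0.0: the block is an identity mapping
```

In practice the weights are not exactly zero, but learning F(x) close to 0 is much easier for the optimizer than learning an identity mapping from scratch with plain stacked layers.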

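- A single residual unit (the structure in the figure above) is usually called a basic residual block (Basic Block), and a group of Basic Blocks is called a Res Block. A Basic Block can be implemented as follows: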

```python
import tensorflow as tf
from tensorflow.keras import layers, Sequential


class BasicBlock(layers.Layer):

    def __init__(self, filter_num, stride=1):
        super(BasicBlock, self).__init__()
        # A convolution layer, a batch-normalization layer and a ReLU layer
        # are usually grouped into one unit
        self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')

        self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()

        # Make the shortcut's shape match the main path's output so they can be added
        if stride != 1:
            self.downsample = Sequential()
            self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))
            self.downsample.add(layers.BatchNormalization())
        else:
            self.downsample = lambda x: x

    def call(self, inputs, training=None):
        residual = self.downsample(inputs)

        conv1 = self.conv1(inputs)
        bn1 = self.bn1(conv1, training=training)
        relu1 = self.relu(bn1)

        conv2 = self.conv2(relu1)
        bn2 = self.bn2(conv2, training=training)

        # Add the shortcut connection
        add = layers.add([bn2, residual])
        out = self.relu(add)

        return out
```

A Res Block can be implemented as follows:

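```python
from tensorflow import keras


# Method of the model class: stacks `blocks` residual blocks into one Res Block
def _build_resblock(self, block, filter_num, blocks, stride=1):
    res_blocks = keras.Sequential()
    # Only the first block may downsample (stride > 1)
    res_blocks.add(block(filter_num, stride))
    # The remaining blocks keep the spatial size unchanged
    for _ in range(1, blocks):
        res_blocks.add(block(filter_num, stride=1))
    return res_blocks
```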
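Since each Res Block is just a stack of Basic Blocks, the depth that gives ResNet18 its name can be counted from the number of Basic Blocks per Res Block. This sketch assumes the usual counting convention: the stem convolution, the two 3x3 convolutions inside each Basic Block and the final fully connected layer are counted, while the 1x1 shortcut convolutions are not. `resnet_depth` is a hypothetical helper, and `[3, 4, 6, 3]` is the block layout commonly used for ResNet34.

```python
def resnet_depth(layer_dims, convs_per_block=2):
    """Count weighted layers: 1 stem conv + 2 convs per Basic Block + 1 fully connected."""
    return 1 + sum(layer_dims) * convs_per_block + 1


print(resnet_depth([2, 2, 2, 2]))  # 18 (ResNet18)
print(resnet_depth([3, 4, 6, 3]))  # 34 (ResNet34)
```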

4. CIFAR100 in practice (based on ResNet18)

- The `strides` parameter explained
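The `strides` argument sets how far the kernel moves at each step, so a stride of 2 roughly halves the height and width. For an n x n input and a k x k kernel, Keras gives an output size of ceil(n / s) with `padding='same'` and floor((n - k) / s) + 1 with `padding='valid'` (the default). The sketch below checks these formulas against `Conv2D` on a 32x32 input; `expected_size` is a hypothetical helper.

```python
import math

import tensorflow as tf
from tensorflow.keras import layers


def expected_size(n, k, s, padding):
    """Output height/width of a k x k convolution with stride s on an n x n input."""
    if padding == 'same':
        return math.ceil(n / s)
    return (n - k) // s + 1  # 'valid': no zero padding


x = tf.random.normal([1, 32, 32, 3])
shapes = {}
for stride in (1, 2):
    for padding in ('same', 'valid'):
        y = layers.Conv2D(8, (3, 3), strides=stride, padding=padding)(x)
        shapes[(stride, padding)] = tuple(y.shape)
        print(stride, padding, tuple(y.shape), expected_size(32, 3, stride, padding))
# 1 same  (1, 32, 32, 8) 32
# 1 valid (1, 30, 30, 8) 30
# 2 same  (1, 16, 16, 8) 16
# 2 valid (1, 15, 15, 8) 15
```

This is why the ResNet code passes `stride=2` to the first Basic Block of a Res Block when it wants to downsample: with `padding='same'`, a stride of 2 halves the feature map (rounding up).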

- ResNet18 code (the main script below imports it from `resnet.py`)

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential


# Design the BasicBlock
class BasicBlock(layers.Layer):

    def __init__(self, filter_num, stride=1):
        # filter_num is the number of convolution kernels (output channels)
        super(BasicBlock, self).__init__()
        self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')

        self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()

        # Change the shape of the shortcut data to match the output (required for the addition)
        if stride != 1:
            self.downsample = Sequential()
            self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))
        else:
            self.downsample = lambda x: x

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out, training=training)

        identity = self.downsample(inputs)  # shortcut
        output = layers.add([out, identity])  # add the shortcut to the output
        output = tf.nn.relu(output)

        return output


# Design the ResNet model, built from Res Blocks
class ResNet(keras.Model):

    # layer_dims: number of BasicBlocks in each Res Block; num_class: total number of classes
    def __init__(self, layer_dims, num_class=100):
        super(ResNet, self).__init__()
        # First layer of ResNet18 (the first layer is not a Res Block)
        self.stem = Sequential([
            layers.Conv2D(64, (3, 3), strides=(1, 1)),
            layers.BatchNormalization(),
            layers.Activation('relu'),
            layers.MaxPool2D(pool_size=(2, 2), strides=(1, 1), padding='same')
        ])

        # Four Res Blocks
        self.layer1 = self.build_resblock(64, layer_dims[0])
        self.layer2 = self.build_resblock(128, layer_dims[1], stride=2)  # stride=2 downsamples (any value > 1 does)
        self.layer3 = self.build_resblock(256, layer_dims[2], stride=2)
        self.layer4 = self.build_resblock(512, layer_dims[3], stride=2)

        # Last layers of ResNet18; layer4 outputs [b, h, w, 512]
        self.avgpool = layers.GlobalAveragePooling2D()
        self.fc = layers.Dense(num_class)

    def call(self, inputs, training=None):
        x = self.stem(inputs, training=training)

        x = self.layer1(x, training=training)
        x = self.layer2(x, training=training)
        x = self.layer3(x, training=training)
        x = self.layer4(x, training=training)

        x = self.avgpool(x)  # output is [b, 512]
        x = self.fc(x)  # output is [b, 100] (logits)

        return x

    def build_resblock(self, filter_num, blocks, stride=1):
        res_blocks = Sequential()
        # Only the first BasicBlock may downsample
        res_blocks.add(BasicBlock(filter_num, stride))
        for _ in range(1, blocks):
            res_blocks.add(BasicBlock(filter_num, stride=1))
        return res_blocks


def resnet18():
    return ResNet([2, 2, 2, 2])  # ResNet18: number of BasicBlocks in each of the four Res Blocks
```
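Following a 32x32 CIFAR100 image through the layers above shows why the 1x1 downsampling shortcut lines up with the main path before `layers.add`. The sketch only does the size arithmetic in plain Python; `conv_out` is a hypothetical helper applying the Keras output-size rules (ceil(n / s) for `'same'`, floor((n - k) / s) + 1 for `'valid'`), and the stem convolution uses the default `'valid'` padding, as in the code.

```python
import math


def conv_out(n, k, s, padding):
    """Output height/width of a k x k conv/pool with stride s on an n x n input."""
    return math.ceil(n / s) if padding == 'same' else (n - k) // s + 1


h = 32
h = conv_out(h, 3, 1, 'valid')  # stem Conv2D(64, (3, 3)), default padding='valid'
h = conv_out(h, 2, 1, 'same')   # stem MaxPool2D(2, strides=1, padding='same')
sizes = {'stem': h}

for name, stride in [('layer1', 1), ('layer2', 2), ('layer3', 2), ('layer4', 2)]:
    main = conv_out(h, 3, stride, 'same')  # conv1 of the first BasicBlock
    # 1x1 downsample shortcut (only built when stride != 1)
    short = conv_out(h, 1, stride, 'valid') if stride != 1 else h
    assert main == short  # both paths must have the same size for layers.add
    h = main
    sizes[name] = h

print(sizes)  # {'stem': 30, 'layer1': 30, 'layer2': 15, 'layer3': 8, 'layer4': 4}
```

After `GlobalAveragePooling2D`, the [b, 4, 4, 512] feature map becomes [b, 512], and the Dense layer maps it to [b, 100] logits.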

- Main function code

```python
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TF info/warning logs; must be set before importing tensorflow

import tensorflow as tf
from tensorflow.keras import optimizers, datasets

from resnet import resnet18  # the ResNet18 code above, saved as resnet.py


def pre_process(x, y):
    # Scale pixels from [0, 255] to [-0.5, 0.5]
    x = tf.cast(x, dtype=tf.float32) / 255. - 0.5
    y = tf.cast(y, dtype=tf.int32)
    return x, y


batch_size = 128

(x, y), (x_test, y_test) = datasets.cifar100.load_data()
# Squeeze the labels from [N, 1] to [N]
y = tf.squeeze(y, axis=1)
y_test = tf.squeeze(y_test, axis=1)

train_db = tf.data.Dataset.from_tensor_slices((x, y))
train_db = train_db.shuffle(1000).map(pre_process).batch(batch_size)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.map(pre_process).batch(batch_size)

sample = next(iter(train_db))
print('sample:', sample[0].shape, sample[1].shape)  # sanity check on one batch


def main():
    model = resnet18()
    model.build(input_shape=(None, 32, 32, 3))
    model.summary()  # print parameter information
    optimizer = optimizers.Adam(learning_rate=1e-3)

    for epoch in range(300):

        for step, (x, y) in enumerate(train_db):
            with tf.GradientTape() as tape:
                # training=True so BatchNormalization uses batch statistics
                output = model(x, training=True)
                y_onehot = tf.one_hot(y, depth=100)
                loss = tf.keras.losses.categorical_crossentropy(y_onehot, output, from_logits=True)
                loss = tf.reduce_mean(loss)

            grads = tape.gradient(loss, model.trainable_variables)
            optimizer.apply_gradients(zip(grads, model.trainable_variables))

            if step % 100 == 0:
                print(epoch, step, 'loss:', float(loss))

        # Test
        total_num = 0
        total_correct = 0
        for x, y in test_db:
            output = model(x, training=False)
            prob = tf.nn.softmax(output, axis=1)
            pred = tf.argmax(prob, axis=1)
            pred = tf.cast(pred, dtype=tf.int32)

            correct = tf.cast(tf.equal(pred, y), dtype=tf.int32)
            correct = tf.reduce_sum(correct)

            total_num += x.shape[0]
            total_correct += int(correct)

        accuracy = total_correct / total_num * 100
        print(epoch, 'accuracy:', accuracy, '%')


if __name__ == '__main__':
    main()
```
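Two details of the training and test loops can be checked on toy tensors. First, softmax is monotonic within each row, so `argmax` of the raw logits gives the same predictions as `argmax` of the probabilities; the softmax in the test loop is optional. Second, `tf.keras.losses.sparse_categorical_crossentropy` on integer labels gives the same per-sample loss as one-hot encoding followed by `categorical_crossentropy`, which would let the training loop skip `tf.one_hot`. The logits and labels below are made-up values.

```python
import tensorflow as tf

# Made-up logits for 3 samples and 3 classes, with integer labels
logits = tf.constant([[2.0, 0.5, -1.0],
                      [0.1, 0.2, 3.0],
                      [1.0, 4.0, 0.0]])
labels = tf.constant([0, 2, 2], dtype=tf.int32)

# Predictions with and without softmax
pred_prob = tf.cast(tf.argmax(tf.nn.softmax(logits, axis=1), axis=1), tf.int32)
pred_logits = tf.cast(tf.argmax(logits, axis=1), tf.int32)
print(pred_prob.numpy(), pred_logits.numpy())  # [0 2 1] [0 2 1]

# Accuracy computed the same way as in the test loop
correct = int(tf.reduce_sum(tf.cast(tf.equal(pred_logits, labels), tf.int32)))
accuracy = correct / labels.shape[0] * 100
print(correct, accuracy)  # correct == 2 out of 3 samples

# One-hot + categorical crossentropy vs. sparse crossentropy on integer labels
dense_loss = tf.keras.losses.categorical_crossentropy(
    tf.one_hot(labels, depth=3), logits, from_logits=True)
sparse_loss = tf.keras.losses.sparse_categorical_crossentropy(
    labels, logits, from_logits=True)
print(float(tf.reduce_max(tf.abs(dense_loss - sparse_loss))))  # close to 0
```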