附上资源下载链接:pytorch实现cnn手写识别-深度学习文档类资源-CSDN文库

1.代码

import torch.nn as nn

import torch

from torch.utils.data import DataLoader

import torchvision

import matplotlib.pyplot as plt

from tqdm import tqdm

# 神经网络模型

class Net(nn.Module):

def __init__(self):

# 调用父类的构造函数

super(Net, self).__init__()

# 将方法进行包装

self.model = torch.nn.Sequential(

# 像素是 28x28

nn.Conv2d(in_channels=1, out_channels=16, kernel_size=3, stride=1, padding=1), # 卷积层

# (28像素-3卷积核大小+2*1pad)/1步长 + 1 = 28

nn.ReLU(), # 激活函数

nn.MaxPool2d(kernel_size=2, stride=2), # 池化层

# (28像素-2池化核大小)/2步长 + 1 = 14

# 像素是 14x14

nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, stride=1, padding=1), # 卷积层

# (14像素-3卷积核大小+2*1pad)/1步长 + 1 = 14

nn.ReLU(), # 激活函数

nn.MaxPool2d(kernel_size=2, stride=2), # 池化层

# (14像素-2池化核大小)/2步长 + 1 = 7

# 像素是 7x7

nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=1, padding=1), # 卷积层

nn.ReLU(), # 激活函数

nn.Flatten(), # 展平

nn.Linear(in_features=7 * 7 * 64, out_features=128), # 全连接层

nn.ReLU(), # 激活函数

nn.Linear(in_features=128, out_features=10), # 全连接层

nn.Softmax(dim=1) # 多分类器

)

# forward方法是必须要重写的,它是实现模型的功能,实现各个层之间的连接关系的核心

def forward(self, input):

output = self.model(input)

return output

# 超参数设置

BATCH_SIZE = 250

EPOCHS = 10

# 创建实例

NET = Net()

# 利用gpu进行加速,没有的话就用cpu

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# 图像处理成张量并进行归一化处理

transform = torchvision.transforms.Compose([torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(mean=[0.5], std=[0.5])])

preTrainData = torchvision.datasets.MNIST('./data/', train=True, transform=transform, download=True)

preTestData = torchvision.datasets.MNIST('./data/', train=False, transform=transform)

trainData = DataLoader(dataset=preTrainData, batch_size=BATCH_SIZE, shuffle=True)

testData = DataLoader(dataset=preTestData, batch_size=BATCH_SIZE)

NET.to(device) # gpu或cpu

criterion = nn.CrossEntropyLoss() # 交叉熵损失函数,与softmax激活函数一起用,一起构成softmax损失函数;分类器

optimizer = torch.optim.Adam(NET.parameters()) # 调用优化器Adam调整参数

history = {'Test Loss': [], 'Test Accuracy': []} # 用字典来存储测试损失历史值和精度历史值

for epoch in range(EPOCHS): # 循环10次

processBar = tqdm(trainData, unit='batch_idx') # 进度条过程可视化

NET.train(True)

for batch_idx, (trainImgs, labels) in enumerate(processBar): # 迭代器

trainImgs = trainImgs.to(device)

labels = labels.to(device)

outputs = NET.forward(trainImgs) # 输入图片数据,进行训练

loss = criterion(outputs, labels) # 计算损失值

predictions = torch.argmax(outputs, dim=1)

accuracy = torch.sum(predictions == labels) / labels.shape[0]

optimizer.zero_grad()

loss.backward()

optimizer.step()

processBar.set_description("[%d/%d] Loss: %.4f, Acc: %.4f" %

(epoch+1, EPOCHS, loss.item(), accuracy.item()))

if batch_idx == len(processBar) - 1:

correct, totalLoss = 0, 0

NET.train(False)

with torch.no_grad():

for testImgs, labels in testData:

testImgs = testImgs.to(device)

labels = labels.to(device)

outputs = NET(testImgs)

loss = criterion(outputs, labels)

predictions = torch.argmax(outputs, dim=1)

totalLoss += loss

correct += torch.sum(predictions == labels)

testAccuracy = correct / (BATCH_SIZE * len(testData))

testLoss = totalLoss / len(testData)

processBar.set_description("[%d/%d] Loss: %.4f, Acc: %.4f, Test Loss: %.4f, Test Acc: %.4f" %

(epoch+1, EPOCHS, loss.item(), accuracy.item(), testLoss.item(), testAccuracy.item()))

testAccuracy = correct / (BATCH_SIZE * len(testData))

testLoss = totalLoss / len(testData)

history['Test Loss'].append(testLoss.item())

history['Test Accuracy'].append(testAccuracy.item())

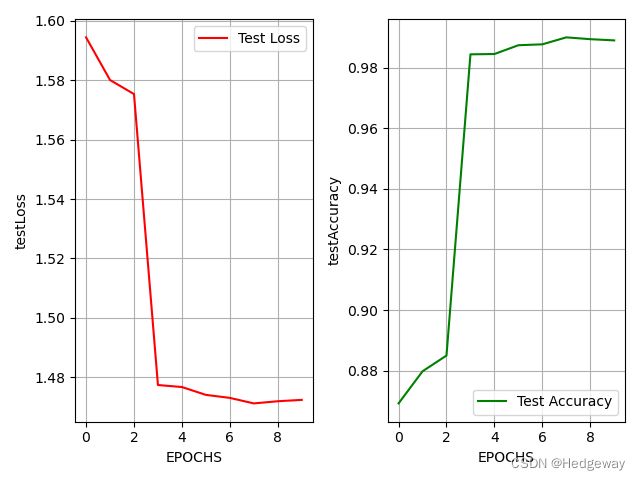

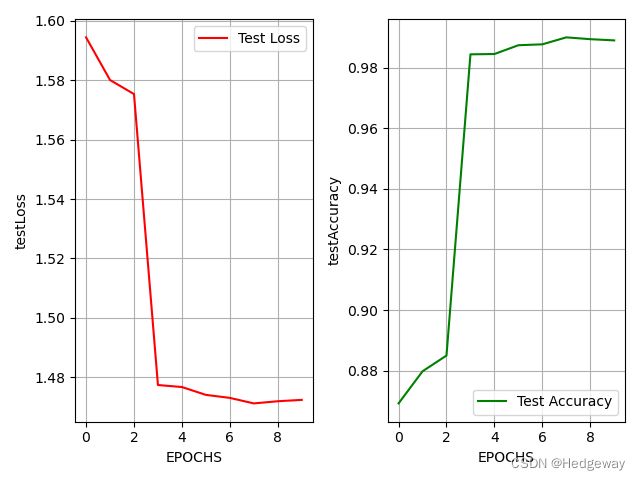

# 测试结果可视化

plt.subplot(1, 2, 1)

plt.plot(history['Test Loss'], color='red', label='Test Loss')

plt.legend(loc='best')

plt.grid(True)

plt.xlabel('EPOCHS')

plt.ylabel('testLoss')

plt.subplot(1, 2, 2)

plt.plot(history['Test Accuracy'], color='green', label='Test Accuracy')

plt.legend(loc='best')

plt.grid(True)

plt.xlabel('EPOCHS')

plt.ylabel('testAccuracy')

plt.show()

# 保存模型

torch.save(NET, './model.pth')

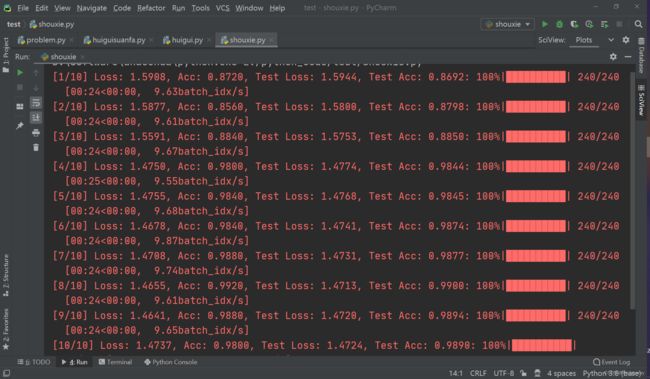

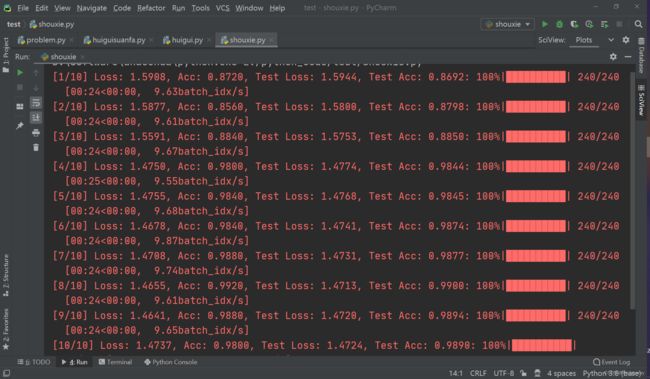

2.训练过程可视化

3.测试结果