【深度学习实战】【nlp-beginner】基于深度学习的文本分类

任务说明:NLP-Beginner:自然语言处理入门练习 任务二

数据下载:Sentiment Analysis on Movie Reviews

参考资料:

- Convolutional Neural Networks for Sentence Classificatio

- PyTorch官方文档

- 关于深度学习与自然语言处理的一些基础知识:【深度学习实战】从零开始深度学习(四):RNN与自然语言处理

- TorchText文本数据集读取操作

代码:NLP-Beginner-Exercise

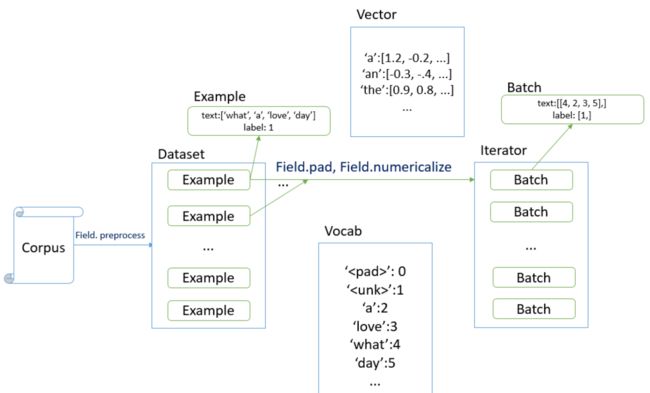

该任务主要利用torchtext这个包来进行。

目录

-

- 导入需要的包

- 创建Field对象

- 从文件中读取数据——TabularDataset

- 构建词表

- 生成迭代器

- 创建网络模型

- 模型训练

- 模型结果(一)

- 模型改进——使用预训练好的词向量

- 模型结果(二)

- 模型再改进——使用LSTM

导入需要的包

import torch

from torch import nn, optim

import torch.nn.functional as F

from torchtext import data

from torchtext.data import Field, TabularDataset, BucketIterator

import time

这里主要用到torchtext的四个模块:

- Field——用来定义文本预处理的模式

- Example——数据样本,“文本+标签”

- TabularDataset——用于从文件中读取数据

- BucketIterator——迭代器,生成Batch

创建Field对象

fix_length = 40

# 创建Field对象

TEXT = Field(sequential = True, lower=True, fix_length = fix_length)

LABEL = Field(sequential = False, use_vocab = False)

Field参数说明(摘自参考资料4):

- sequential——是否把数据表示成序列,如果是False, 不能使用分词 默认值: True.

- use_vocab——是否使用词典对象. 如果是False 数据的类型必须已经是数值类型. 默认值: True.

- init_token——每一条数据的起始字符 默认值: None. eos_token——每条数据的结尾字符 默认值: None.

- fix_length——修改每条数据的长度为该值,不够的用pad_token补全. 默认值: None.

- tensor_type——把数据转换成的tensor类型 默认值: torch.LongTensor.

- preprocessing——在分词之后和数值化之前使用的管道,默认值: None.

- postprocessing——数值化之后和转化成tensor之前使用的管道,默认值: None.

- lower——是否把数据转化为小写。默认值: False.

- tokenize——分词函数. 默认值: string.split().

- include_lengths——是否返回一个已经补全的最小batch的元组和和一个包含每条数据长度的列表 . 默认值: False.

- batch_first——Whether to produce tensors with the batch dimension

- first. 默认值: False. pad_token——用于补全的字符. 默认值: “

”. - unk_token——不存在词典里的字符. 默认值: “

”. - pad_first——是否补全第一个字符. 默认值:False.

Field几个重要的方法(摘自参考资料4):

- pad(minibatch)——在一个batch对齐每条数据

- build_vocab()—— 建立词典

- numericalize()——把文本数据数值化,返回tensor

从文件中读取数据——TabularDataset

torchtext的TabularDataset可以用于从CSV、TSV或者JSON文件中读取数据。

TabularDataset参数说明

- path——文件存储路径

- format——读取文件的格式(CSV/TSV/JSON)

- fields——tuple(str, Field)。如果使用列表,格式必须是CSV或TSV,列表的值应该是(name, Field)的元组。字段的顺序应该与CSV或TSV文件中的列相同,而(name, None)的元组表示将被忽略的列。如果使用dict,键应该是JSON键或CSV/TSV列的子集,值应该是(名称、字段)的元组。不存在于输入字典中的键将被忽略。这允许用户从JSON/CSV/TSV键名重命名列,还允许选择要加载的列子集。

- skip_header——是否要跳过表的第一行(表头)

- csv_reader_params——读取csv或者tsv所需要的一些参数。具体可以参考csv.reader

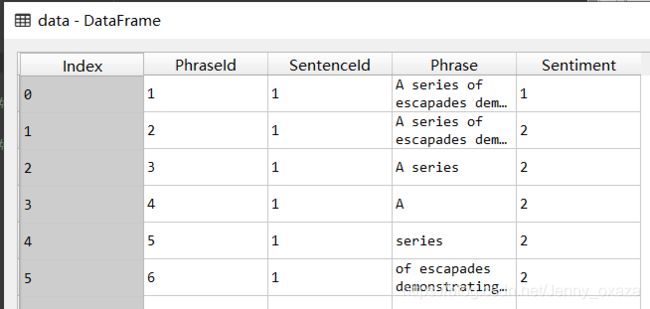

我们可以先看一下数据的样子

实际上我们只需要知道文本内容(即Phrase列)和标签(即Sentiment列)就可以了,设置fields读取器如下:

fields = [('PhraseId', None), ('SentenceId', None), ('Phrase', TEXT), ('Sentiment', LABEL)]

设置好读取器后,可以利用TabularDataset读取数据:

# 从文件中读取数据

fields = [('PhraseId', None), ('SentenceId', None), ('Phrase', TEXT), ('Sentiment', LABEL)]

dataset, test = TabularDataset.splits(path = './', format = 'tsv',

train = 'train.tsv', test = 'test.tsv',

skip_header = True, fields = fields)

train, vali = dataset.split(0.7)

这里需要注意的是,我们读进来的train.tsv文件其实是包含训练集和测试集的,利用dataset.split(0.7)将其分成训练集和测试集,数据样本数的比例的是7:3。

构建词表

torchtext.data.Field对象提供了build_vocab方法来帮助构建词表。build_vocab()方法的参数参考Vocab

从训练集中构建词表的代码如下。文本数据的词表最多包含10000个词,并删除了出现频次不超过10的词。

# 构建词表

TEXT.build_vocab(train, max_size=10000, min_freq=10)

LABEL.build_vocab(train)

生成迭代器

trochtext提供了BucketIterator,可以帮助我们批处理所有文本并将词替换成词的索引。如果序列的长度差异很大,则填充将消耗大量浪费的内存和时间。BucketIterator可以将每个批次的相似长度的序列组合在一起,以最小化填充。

# 生成向量的批数据

bs = 64

train_iter, vali_iter = BucketIterator.splits((train, vali), batch_size = bs,

device= torch.device('cpu'),

sort_key=lambda x: len(x.Phrase),

sort_within_batch=False,

shuffle = True,

repeat = False)

创建网络模型

class EmbNet(nn.Module):

def __init__(self,emb_size,hidden_size1,hidden_size2=400):

super().__init__()

self.embedding = nn.Embedding(emb_size,hidden_size1)

self.fc = nn.Linear(hidden_size2,3)

self.log_softmax = nn.LogSoftmax(dim = -1)

def forward(self,x):

embeds = self.embedding(x).view(x.size(0),-1)

out = self.fc(embeds)

out = self.log_softmax(out)

return out

model = EmbNet(len(TEXT.vocab.stoi), 20)

model = model.cuda()

optimizer = optim.Adam(model.parameters(),lr=0.001)

模型包括三层,首先是一个embedding层,它接收两个参数,即词表的大小和希望为每个单词创建的word embedding的维度。对于一个句子来说,所有的词的word embedding向量收尾相接(扁平化),通过一个线性层和一个log_softmax层得到最后的分类。

这里的优化器选择Adam,在实践中的效果比SGD要好很多。

模型训练

# 训练模型

def fit(epoch, model, data_loader, phase = 'training'):

if phase == 'training':

model.train()

if phase == 'validation':

model.eval()

running_loss = 0.0

running_correct = 0.0

for batch_idx, batch in enumerate(data_loader):

text, target = batch.Phrase, batch.Sentiment

# if torch.cuda.is_available():

# text, target = text.cuda(), target.cuda()

if phase == 'training':

optimizer.zero_grad()

output = model(text)

loss = F.cross_entropy(output, target)

running_loss += F.cross_entropy(output, target, reduction='sum').item()

preds = output.data.max(dim=1, keepdim = True)[1]

running_correct += preds.eq(target.data.view_as(preds)).cpu().sum()

if phase == 'training':

loss.backward()

optimizer.step()

loss = running_loss/len(data_loader.dataset)

accuracy = 100. * running_correct/len(data_loader.dataset)

print(f'{phase} loss is {loss:{5}.{4}} and {phase} accuracy is {running_correct}/{len(data_loader.dataset)}{accuracy:{10}.{4}}')

return loss, accuracy

train_losses , train_accuracy = [],[]

val_losses , val_accuracy = [],[]

t0 = time.time()

for epoch in range(1, 100):

print('epoch no. {} :'.format(epoch) + '-'* 15)

epoch_loss, epoch_accuracy = fit(epoch, model, train_iter,phase='training')

val_epoch_loss, val_epoch_accuracy = fit(epoch, model, vali_iter,phase='validation')

train_losses.append(epoch_loss)

train_accuracy.append(epoch_accuracy)

val_losses.append(val_epoch_loss)

val_accuracy.append(val_epoch_accuracy)

tend = time.time()

print('总共用时:{} s'.format(tend-t0))

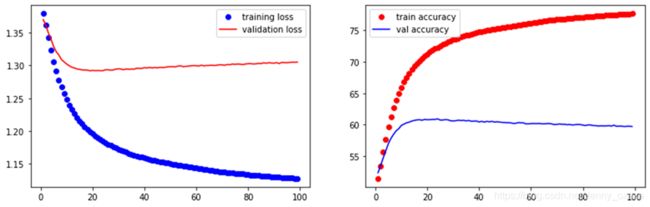

模型结果(一)

模型一共训练了100轮,在val的准确率大致在60%左右。

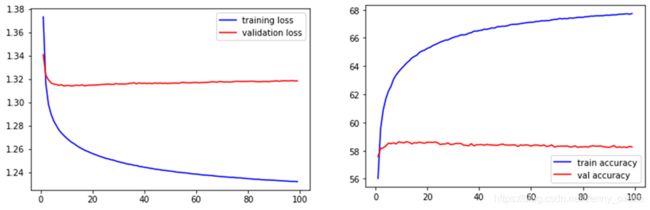

模型改进——使用预训练好的词向量

很多时候,在处理特定领域的NLP任务时,使用预训练好的词向量会非常有用。通常使用预训练的词向量包括下面三个步骤。

下载词向量

TEXT.build_vocab(train, vectors=GloVe(name='6B', dim=300), max_size=10000, min_freq=20)

在模型中加载词向量

model = EmbNet(len(TEXT.vocab.stoi),300, 300*fix_length)

# 利用预训练好的词向量

model.embedding.weight.data = TEXT.vocab.vectors

冻结embedding层

# 冻结embedding层的权重

model.embedding.weight.requires_grad = False

optimizer = optim.Adam([ param for param in model.parameters() if param.requires_grad == True],lr=0.001)

模型结果(二)

模型再改进——使用LSTM

## 创建模型

class LSTM(nn.Module):

def __init__(self, vocab, hidden_size, n_cat, bs = 1, nl = 2):

super().__init__()

self.hidden_size = hidden_size

self.bs = bs

self.nl = nl

self.n_vocab = len(vocab)

self.n_cat = n_cat

self.e = nn.Embedding(self.n_vocab, self.hidden_size)

self.rnn = nn.LSTM(self.hidden_size, self.hidden_size, self.nl)

self.fc2 = nn.Linear(self.hidden_size, self.n_cat)

self.sofmax = nn.LogSoftmax(dim = -1)

def forward(self, x):

bs = x.size()[1]

if bs != self.bs:

self.bs = bs

e_out = self.e(x)

h0, c0 = self.init_paras()

rnn_o, _ = self.rnn(e_out, (h0, c0))

rnn_o = rnn_o[-1]

fc = self.fc2(rnn_o)

out = self.sofmax(fc)

return out

def init_paras(self):

h0 = Variable(torch.zeros(self.nl, self.bs, self.hidden_size))

c0 = Variable(torch.zeros(self.nl, self.bs, self.hidden_size))

return h0, c0

model = LSTM(TEXT.vocab, hidden_size = 300, n_cat = 5, bs = bs)

# 利用预训练好的词向量

model.e.weight.data = TEXT.vocab.vectors

# 冻结embedding层的权重

model.e.weight.requires_grad = False

optimizer = optim.Adam([ param for param in model.parameters() if param.requires_grad == True],lr=0.001)