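Blind Recognition of Digitally Modulated Signals Based on Deep Learning

Overview

Modulation recognition has significant research value in both military and civilian applications: correctly identifying a signal's modulation scheme is a prerequisite for the receiver to demodulate and decode it. Blind modulation recognition takes a received signal of unknown modulation type and classifies it automatically. Being able to pick out the modulation format among many complex and varied signals helps improve communication efficiency. Deep-learning-based blind modulation recognition draws on the strengths of deep learning in image classification, and has reached very high levels of stability and accuracy.

This experiment pairs modulated signals of different signal-to-noise ratios (SNR) and different modulation types with different networks. AlexNet and GoogLeNet, both of which classify well, are chosen as the networks under study. They are built with Huawei's MindSpore framework so that they run efficiently, and their classification results are reported.

AlexNet

AlexNet was proposed by Hinton's group in 2012 and raised classification accuracy from about 70% with traditional methods to over 80%. It consists of five convolutional layers followed by three fully connected layers (the feature maps are flattened before the first of these), and a final softmax layer outputs the class.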

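AlexNet uses a parallel structure and the ReLU activation function, and randomly drops 50% of the neurons in the first two fully connected layers (dropout). This guards against overfitting and improves the network's ability to generalize, while lowering network complexity and improving speed and classification accuracy; these are AlexNet's particular strengths for image classification. To keep the data shapes of successive layers consistent, the output size of a feature map after convolution or pooling is computed as

$$N = \frac{W - F + 2P}{S} + 1$$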

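where N is the height and width of the output feature map after convolution or pooling, the input image is W×W, the convolution or pooling kernel is F×F, S is the stride, and P is the number of zero-padded rows or columns.

GoogLeNet

In 2014 GoogLeNet stood out in the ILSVRC competition with an accuracy of 93.33%. Its novel structure lets the network grow deeper while remaining several times smaller than AlexNet, so for a given compute budget GoogLeNet delivers better performance.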

GoogLeNet introduces the Inception module and auxiliary classifiers, among other components.

Inception module in GoogLeNet

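The Inception module uses a parallel structure: the input feature map is fed into four branches at once, and the branch outputs, which capture different scales, are concatenated along the depth (channel) dimension. The four branches are a 1×1 convolution, a 3×3 convolution, a 5×5 convolution, and a 3×3 max-pooling layer. Three of the branches also use a 1×1 convolution to reduce the channel dimension, which cuts the number of parameters and the computational cost.

GoogLeNet auxiliary classifier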
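To make the effect of the 1×1 dimensionality reduction in the Inception module concrete, the following minimal sketch counts convolution weights for a 5×5 branch with and without a preceding 1×1 reduction. The channel counts (192 input, 16 reduced, 32 output) are the ones passed to the first Inception block in the GoogLeNet code in this article; the helper name `conv_params` is hypothetical and biases are ignored.

```python
def conv_params(in_ch, out_ch, k):
    """Number of weights in a k x k convolution (biases ignored)."""
    return k * k * in_ch * out_ch


# Channel counts taken from Inception(192, 64, 96, 128, 16, 32, 32).
in_ch, red_ch, out_ch = 192, 16, 32

# 5x5 convolution applied directly to all 192 input channels.
direct = conv_params(in_ch, out_ch, 5)

# 1x1 reduction to 16 channels first, then the 5x5 convolution.
reduced = conv_params(in_ch, red_ch, 1) + conv_params(red_ch, out_ch, 5)

print("direct 5x5 weights: ", direct)
print("with 1x1 reduction: ", reduced)
```

With these channel counts the reduced branch needs roughly a tenth of the weights of the direct convolution, which is the saving the paragraph on the Inception module describes.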

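The added auxiliary classifiers help prevent vanishing gradients: they provide feedback from the intermediate layers and pass additional gradient to the earlier layers. The two auxiliary classifiers have the same structure; each applies average pooling, followed by a 1×1 convolution with ReLU activation to reduce the number of parameters.

AlexNet

Using the output-size formula given earlier, the feature-map sizes are computed and the AlexNet structure parameters are set as follows.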
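As a rough check of these feature-map sizes, the sketch below applies N = (W - F + 2P)/S + 1 layer by layer to the AlexNet defined in this article (an 11×11 stride-4 convolution, 3×3 stride-2 pooling, and a 224×224 input as set in the dataset function). It assumes that `pad_mode="same"` gives an output of ceil(W/S) and that `pad_mode="valid"` corresponds to P = 0 with the division rounded down; the function names are hypothetical.

```python
import math


def out_size(w, f, s, p=0):
    """N = (W - F + 2P) / S + 1, rounded down when the division is not exact."""
    return (w - f + 2 * p) // s + 1


def same_out_size(w, s):
    """Output size under "same" padding, assumed here to be ceil(W / S)."""
    return math.ceil(w / s)


def alexnet_feature_sizes(w=224):
    """Spatial size after each stage of the AlexNet in this article."""
    sizes = {}
    w = same_out_size(w, 4)   # conv1: 11x11, stride 4, "same"
    sizes["conv1"] = w
    w = out_size(w, 3, 2)     # max pool: 3x3, stride 2, "valid"
    sizes["pool1"] = w
    w = same_out_size(w, 1)   # conv2: 5x5, stride 1, "same"
    sizes["conv2"] = w
    w = out_size(w, 3, 2)
    sizes["pool2"] = w
    w = same_out_size(w, 1)   # conv3 to conv5: 3x3, stride 1, "same"
    sizes["conv3-5"] = w
    w = out_size(w, 3, 2)
    sizes["pool3"] = w
    return sizes


for stage, size in alexnet_feature_sizes().items():
    print(f"{stage}: {size} x {size}")
```

Under these assumptions the last pooling stage yields a 6×6 map with 256 channels, which matches the `6 * 6 * 256` input size of the first fully connected layer in the network code.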

Network construction code

    """AlexNet."""
    import numpy as np
    import mindspore.nn as nn
    from mindspore.ops import operations as P
    from mindspore.ops import functional as F
    from mindspore.common.tensor import Tensor
    import mindspore.common.dtype as mstype


    def conv(in_channels, out_channels, kernel_size, stride=1, padding=0, pad_mode="valid", has_bias=True):
        """Create a 2-D convolution layer."""
        return nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size, stride=stride, padding=padding,
                         has_bias=has_bias, pad_mode=pad_mode)


    def fc_with_initialize(input_channels, out_channels, has_bias=True):
        """Create a fully connected layer."""
        return nn.Dense(input_channels, out_channels, has_bias=has_bias)


    class DataNormTranspose(nn.Cell):
        """Normalize an NHWC image tensor per channel and transpose it to NCHW.

        Each channel is normalized as channel = (channel - mean) / std, using
        fixed (R, G, B) mean and std values scaled to the 0-255 pixel range.
        """

        def __init__(self):
            super(DataNormTranspose, self).__init__()
            self.mean = Tensor(np.array([0.485 * 255, 0.456 * 255, 0.406 * 255]).reshape((1, 1, 1, 3)), mstype.float32)
            self.std = Tensor(np.array([0.229 * 255, 0.224 * 255, 0.225 * 255]).reshape((1, 1, 1, 3)), mstype.float32)

        def construct(self, x):
            x = (x - self.mean) / self.std
            # NHWC -> NCHW
            x = F.transpose(x, (0, 3, 1, 2))
            return x


    class AlexNet(nn.Cell):
        """
        AlexNet
        """

        def __init__(self, num_classes=4, channel=3, phase='train', include_top=True, off_load=False):
            super(AlexNet, self).__init__()
            self.off_load = off_load
            if self.off_load is True:
                # normalize and transpose inside the network instead of in the data pipeline
                self.data_trans = DataNormTranspose()
            self.conv1 = conv(channel, 64, 11, stride=4, pad_mode="same", has_bias=True)
            self.conv2 = conv(64, 128, 5, pad_mode="same", has_bias=True)
            self.conv3 = conv(128, 192, 3, pad_mode="same", has_bias=True)
            self.conv4 = conv(192, 256, 3, pad_mode="same", has_bias=True)
            self.conv5 = conv(256, 256, 3, pad_mode="same", has_bias=True)
            self.relu = P.ReLU()
            self.max_pool2d = nn.MaxPool2d(kernel_size=3, stride=2, pad_mode='valid')
            self.include_top = include_top
            if self.include_top:
                # Passed to nn.Dropout as its first argument; with the keep_prob-style
                # API used in this article this is the keep probability, so 1.0
                # disables dropout in the test phase.
                dropout_ratio = 0.65
                if phase == 'test':
                    dropout_ratio = 1.0
                self.flatten = nn.Flatten()
                self.fc1 = fc_with_initialize(6 * 6 * 256, 4096)
                self.fc2 = fc_with_initialize(4096, 4096)
                self.fc3 = fc_with_initialize(4096, num_classes)
                self.dropout = nn.Dropout(dropout_ratio)

        def construct(self, x):
            """define network"""
            if self.off_load is True:
                x = self.data_trans(x)
            x = self.conv1(x)
            x = self.relu(x)
            x = self.max_pool2d(x)
            x = self.conv2(x)
            x = self.relu(x)
            x = self.max_pool2d(x)
            x = self.conv3(x)
            x = self.relu(x)
            x = self.conv4(x)
            x = self.relu(x)
            x = self.conv5(x)
            x = self.relu(x)
            x = self.max_pool2d(x)
            if not self.include_top:
                return x
            x = self.flatten(x)
            x = self.fc1(x)
            x = self.relu(x)
            x = self.dropout(x)
            x = self.fc2(x)
            x = self.relu(x)
            x = self.dropout(x)
            x = self.fc3(x)
            return x

AlexNet configuration parameters (partial)

GoogLeNet

The parameters are as follows.

In the GoogLeNet structure, the input image first passes through convolution and pooling layers and then through the inception 3, inception 4 and inception 5 stages, with auxiliary classifiers attached at inception 4a and inception 4d. Dropout, flattening and softmax then produce the output probability distribution.

Network construction code

    """GoogLeNet"""
    import mindspore.nn as nn
    from mindspore.common.initializer import TruncatedNormal
    from mindspore.ops import operations as P


    def weight_variable():
        """Weight variable."""
        return TruncatedNormal(0.02)


    class Conv2dBlock(nn.Cell):
        """
        Basic convolutional block: Conv2d, BatchNorm2d, ReLU.

        Args:
            in_channels (int): Input channel.
            out_channels (int): Output channel.
            kernel_size (int): Input kernel size. Default: 1
            stride (int): Stride of the convolution. Default: 1.
            padding (int): Implicit paddings on both sides of the input. Default: 0.
            pad_mode (str): Padding mode. Optional values are "same", "valid", "pad". Default: "same".

        Returns:
            Tensor, output tensor.
        """

        def __init__(self, in_channels, out_channels, kernel_size=1, stride=1, padding=0, pad_mode="same"):
            super(Conv2dBlock, self).__init__()
            self.conv = nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size, stride=stride,
                                  padding=padding, pad_mode=pad_mode, weight_init=weight_variable())
            self.bn = nn.BatchNorm2d(out_channels, eps=0.001)
            self.relu = nn.ReLU()

        def construct(self, x):
            x = self.conv(x)
            x = self.bn(x)
            x = self.relu(x)
            return x


    class Inception(nn.Cell):
        """
        Inception Block
        """

        def __init__(self, in_channels, n1x1, n3x3red, n3x3, n5x5red, n5x5, pool_planes):
            super(Inception, self).__init__()
            # branch 1: 1x1 convolution
            self.b1 = Conv2dBlock(in_channels, n1x1, kernel_size=1)
            # branch 2: 1x1 reduction followed by a 3x3 convolution
            self.b2 = nn.SequentialCell([Conv2dBlock(in_channels, n3x3red, kernel_size=1),
                                         Conv2dBlock(n3x3red, n3x3, kernel_size=3, padding=0)])
            # branch 3: 1x1 reduction; note that this code uses a 3x3 kernel here,
            # although the text describes this branch as a 5x5 convolution
            self.b3 = nn.SequentialCell([Conv2dBlock(in_channels, n5x5red, kernel_size=1),
                                         Conv2dBlock(n5x5red, n5x5, kernel_size=3, padding=0)])
            # branch 4: 3x3 max pooling followed by a 1x1 convolution
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=1, pad_mode="same")
            self.b4 = Conv2dBlock(in_channels, pool_planes, kernel_size=1)
            # concatenate the branch outputs along the channel axis
            self.concat = P.Concat(axis=1)

        def construct(self, x):
            branch1 = self.b1(x)
            branch2 = self.b2(x)
            branch3 = self.b3(x)
            cell = self.maxpool(x)
            branch4 = self.b4(cell)
            return self.concat((branch1, branch2, branch3, branch4))


    class GoogLeNet(nn.Cell):
        """
        GoogLeNet architecture
        """

        def __init__(self, num_classes, include_top=True):
            super(GoogLeNet, self).__init__()
            self.conv1 = Conv2dBlock(3, 64, kernel_size=7, stride=2, padding=0)
            self.maxpool1 = nn.MaxPool2d(kernel_size=3, stride=2, pad_mode="same")
            self.conv2 = Conv2dBlock(64, 64, kernel_size=1)
            self.conv3 = Conv2dBlock(64, 192, kernel_size=3, padding=0)
            self.maxpool2 = nn.MaxPool2d(kernel_size=3, stride=2, pad_mode="same")
            self.block3a = Inception(192, 64, 96, 128, 16, 32, 32)
            self.block3b = Inception(256, 128, 128, 192, 32, 96, 64)
            self.maxpool3 = nn.MaxPool2d(kernel_size=3, stride=2, pad_mode="same")
            self.block4a = Inception(480, 192, 96, 208, 16, 48, 64)
            self.block4b = Inception(512, 160, 112, 224, 24, 64, 64)
            self.block4c = Inception(512, 128, 128, 256, 24, 64, 64)
            self.block4d = Inception(512, 112, 144, 288, 32, 64, 64)
            self.block4e = Inception(528, 256, 160, 320, 32, 128, 128)
            self.maxpool4 = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode="same")
            self.block5a = Inception(832, 256, 160, 320, 32, 128, 128)
            self.block5b = Inception(832, 384, 192, 384, 48, 128, 128)
            # defined here but not applied in construct() below
            self.dropout = nn.Dropout(keep_prob=0.8)
            self.include_top = include_top
            if self.include_top:
                self.mean = P.ReduceMean(keep_dims=True)
                self.flatten = nn.Flatten()
                self.classifier = nn.Dense(1024, num_classes, weight_init=weight_variable(),
                                           bias_init=weight_variable())

        def construct(self, x):
            """construct"""
            x = self.conv1(x)
            x = self.maxpool1(x)
            x = self.conv2(x)
            x = self.conv3(x)
            x = self.maxpool2(x)
            x = self.block3a(x)
            x = self.block3b(x)
            x = self.maxpool3(x)
            x = self.block4a(x)
            x = self.block4b(x)
            x = self.block4c(x)
            x = self.block4d(x)
            x = self.block4e(x)
            x = self.maxpool4(x)
            x = self.block5a(x)
            x = self.block5b(x)
            if not self.include_top:
                return x
            # global average pooling over the spatial axes
            x = self.mean(x, (2, 3))
            x = self.flatten(x)
            x = self.classifier(x)
            return x

GoogLeNet network training parameters (partial)

AlexNet model training

Defining the dataset function

    """Produce the dataset"""
    import mindspore.dataset as ds
    import mindspore.dataset.vision.c_transforms as CV


    def create_dataset_imagenet(cfg, dataset_path, batch_size=32, repeat_num=1, training=True,
                                shuffle=True, sampler=None, class_indexing=None):
        """
        Create a train or eval dataset from an ImageNet-style image folder.

        Args:
            cfg: configuration object (not used inside this function).
            dataset_path(string): the path of dataset.
            batch_size(int): the batch size of dataset. Default: 32
            repeat_num(int): the repeat times of dataset. Default: 1
            training(bool): whether dataset is used for train or eval. Default: True
            shuffle(bool): whether to shuffle the dataset. Default: True
            sampler: sampler passed to ImageFolderDataset. Default: None
            class_indexing: class-name-to-index mapping passed to ImageFolderDataset. Default: None

        Returns:
            dataset
        """
        data_set = ds.ImageFolderDataset(dataset_path, shuffle=shuffle, sampler=sampler,
                                         class_indexing=class_indexing)
        image_size = 224

        # define map operations
        transform_img = []
        if training:
            transform_img = [
                CV.RandomCropDecodeResize(image_size, scale=(0.08, 1.0), ratio=(0.75, 1.333)),
                CV.RandomHorizontalFlip(prob=0.5)
            ]
        else:
            transform_img = [
                CV.Decode(),
                CV.Resize((256, 256)),
                CV.CenterCrop(image_size)
            ]
        data_set = data_set.map(input_columns="image", operations=transform_img)
        data_set = data_set.batch(batch_size, drop_remainder=True)

        # apply dataset repeat operation
        if repeat_num > 1:
            data_set = data_set.repeat(repeat_num)
        return data_set

Instantiating the model

    import time

    import mindspore.nn as nn
    from mindspore import Tensor
    from mindspore.train import Model
    from mindspore.nn.metrics import Accuracy
    from mindspore.train.callback import ModelCheckpoint, CheckpointConfig, LossMonitor, TimeMonitor
    from mindspore.train.loss_scale_manager import DynamicLossScaleManager, FixedLossScaleManager
    from A_2AlexNet import AlexNet
    from A_3CreatDs import create_dataset_imagenet
    from A_4Generator_lr import get_lr
    from A_5Get_param_groups import get_param_groups
    from A_6Config import Config_Net as config

    # ds & net
    _off_load = True
    train_ds_path = '../datasets/train_10dB'
    ds_train = create_dataset_imagenet(config, train_ds_path, config.batch_size, training=True)
    network = AlexNet(config.num_classes, phase='train', off_load=_off_load)
    metrics = {"Accuracy": Accuracy()}
    step_per_epoch = ds_train.get_dataset_size()
    loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction="mean")
    lr = get_lr(config)
    opt = nn.Momentum(params=get_param_groups(network),
                      learning_rate=Tensor(lr),
                      momentum=config.momentum,
                      weight_decay=config.weight_decay,
                      loss_scale=config.loss_scale)
    loss_scale_manager = FixedLossScaleManager(config.loss_scale, drop_overflow_update=False)
    model = Model(network, loss_fn=loss, optimizer=opt, metrics=metrics, loss_scale_manager=loss_scale_manager)

    # save ckpt
    ckpt_save_dir = config.save_checkpoint_path
    time_cb = TimeMonitor(data_size=step_per_epoch)
    # note: save_checkpoint_steps is counted in steps, not epochs
    config_ck = CheckpointConfig(save_checkpoint_steps=config.save_checkpoint_epochs,
                                 keep_checkpoint_max=config.keep_checkpoint_max)
    ckpoint_cb = ModelCheckpoint(prefix="alexnet", directory=ckpt_save_dir, config=config_ck)

    # train
    model.train(config.epoch_size, ds_train, callbacks=[time_cb, ckpoint_cb, LossMonitor()])
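The training script takes its learning rate from `get_lr(config)` in `A_4Generator_lr`, which is not listed in this article. As an illustration only, the sketch below shows one common way to produce a per-step learning-rate list: a linear warmup followed by cosine decay. The function name `get_lr_sketch`, the config fields `epoch_size`, `warmup_epochs` and `lr_max`, and the demo values are all hypothetical and are not taken from the author's configuration.

```python
import math
from types import SimpleNamespace


def get_lr_sketch(cfg, steps_per_epoch):
    """Return one learning rate per training step (warmup, then cosine decay)."""
    total_steps = cfg.epoch_size * steps_per_epoch
    warmup_steps = cfg.warmup_epochs * steps_per_epoch
    lrs = []
    for step in range(total_steps):
        if step < warmup_steps:
            # linear ramp from lr_max / warmup_steps up to lr_max
            lr = cfg.lr_max * (step + 1) / warmup_steps
        else:
            # cosine decay from lr_max towards zero over the remaining steps
            progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
            lr = 0.5 * cfg.lr_max * (1.0 + math.cos(math.pi * progress))
        lrs.append(lr)
    return lrs


# Hypothetical demo values, chosen only to make the example runnable.
demo_cfg = SimpleNamespace(epoch_size=10, warmup_epochs=1, lr_max=0.01)
schedule = get_lr_sketch(demo_cfg, steps_per_epoch=54)
print(len(schedule), schedule[0], max(schedule), schedule[-1])
```

A list like this would typically be converted to a float32 array before being wrapped in `Tensor(lr)`, as the optimizer call in the script does; whether the author's `get_lr` follows this particular schedule is not stated in the article.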

Validation

    import mindspore.nn as nn
    from mindspore import Model, load_checkpoint, load_param_into_net
    from A_2AlexNet import AlexNet
    from A_3CreatDs import create_dataset_imagenet
    from A_6Config import Config_Net as config

    # eval
    eval_ds_path = '../datasets/5dB_eval'  # evaluation dataset
    eval_dataset = create_dataset_imagenet(cfg=config, dataset_path=eval_ds_path, batch_size=50, training=False)
    net_eval = AlexNet(config.num_classes, phase='test', off_load=True)

    ckpt_path = '../ckpt/A/5dB/alexnet-100_54_0.12973762.ckpt'  # trained checkpoint
    param_dict = load_checkpoint(ckpt_path)
    load_param_into_net(net_eval, param_dict)
    net_eval.set_train(False)

    loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction="mean")
    metrics = {'accuracy': nn.Accuracy(),
               'ConfusionMatrix': nn.ConfusionMatrix(config.num_classes)}
    model_eval = Model(network=net_eval, loss_fn=loss, metrics=metrics)
    eval_result = model_eval.eval(eval_dataset)
    # eval_result holds both the accuracy and the confusion matrix
    print("eval result: ", eval_result)
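The validation script collects a confusion matrix alongside the accuracy. As a small illustration of how per-class and overall recognition rates can be read off such a matrix, the sketch below assumes rows are true classes and columns are predicted classes. The 3×3 matrix is made-up demo data, not a result from these experiments, and the helper names are hypothetical.

```python
def per_class_recall(cm):
    """Fraction of each true class (row) that was predicted correctly."""
    recalls = []
    for i, row in enumerate(cm):
        row_total = sum(row)
        recalls.append(row[i] / row_total if row_total else 0.0)
    return recalls


def overall_accuracy(cm):
    """Correct predictions (the diagonal) divided by all samples."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total if total else 0.0


# Made-up demo matrix: rows = true class, columns = predicted class.
demo_cm = [
    [48, 2, 0],
    [5, 40, 5],
    [0, 0, 50],
]

print("per-class recall:", per_class_recall(demo_cm))
print("overall accuracy:", overall_accuracy(demo_cm))
```

Off-diagonal entries show which classes are confused with each other, which is how statements such as "16QAM is partly misclassified as 64QAM" are read from the confusion matrices in the results.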

GoogLeNet training

    import time
    from mindspore.nn.optim.momentum import Momentum
    from mindspore.train.callback import ModelCheckpoint, CheckpointConfig, LossMonitor, TimeMonitor
    from mindspore.train.model import Model
    from mindspore.train.loss_scale_manager import DynamicLossScaleManager, FixedLossScaleManager
    from mindspore import Tensor
    from G_2GoogLeNet import GoogLeNet
    from G_3Dateset import create_dataset_imagenet
    from G_4Lossfun import CrossEntropySmooth
    from G_5Config import Config_Net as cfg
    from G_6Get_lr import get_lr


    def get_param_groups(network):
        """ get param groups """
        decay_params = []
        no_decay_params = []
        for x in network.trainable_params():
            parameter_name = x.name
            if parameter_name.endswith('.bias'):
                # biases do not use weight decay
                no_decay_params.append(x)
            elif parameter_name.endswith('.gamma'):
                # BN weights do not use weight decay; be careful: x does not include BN for now
                no_decay_params.append(x)
            elif parameter_name.endswith('.beta'):
                # BN biases do not use weight decay; be careful: x does not include BN for now
                no_decay_params.append(x)
            else:
                decay_params.append(x)
        return [{'params': no_decay_params, 'weight_decay': 0.0}, {'params': decay_params}]


    # ds & net
    train_ds_path = '../datasets/2dB_train'
    train_ds = create_dataset_imagenet(train_ds_path, training=True, batch_size=cfg.batch_size)
    batch_num = train_ds.get_dataset_size()
    net_train = GoogLeNet(num_classes=cfg.num_classes)
    lr = get_lr(cfg)
    opt = Momentum(params=get_param_groups(net_train),
                   learning_rate=Tensor(lr),  # cfg.lr_init
                   momentum=cfg.momentum,
                   weight_decay=cfg.weight_decay,
                   loss_scale=cfg.loss_scale)
    loss = CrossEntropySmooth(sparse=True, reduction="mean", smooth_factor=cfg.label_smooth_factor,
                              num_classes=cfg.num_classes)
    loss_scale_manager = FixedLossScaleManager(cfg.loss_scale, drop_overflow_update=False)
    model = Model(net_train, loss_fn=loss, optimizer=opt, metrics={'acc'},
                  keep_batchnorm_fp32=False, loss_scale_manager=loss_scale_manager)

    # save ckpt
    ckpt_save_dir = cfg.save_checkpoint_path
    # note: save_checkpoint_steps is counted in steps, not epochs
    config_ck = CheckpointConfig(save_checkpoint_steps=cfg.save_checkpoint_epochs,
                                 keep_checkpoint_max=cfg.keep_checkpoint_max)
    ckpoint_cb = ModelCheckpoint(prefix="googlenet", directory=ckpt_save_dir, config=config_ck)
    loss_cb = LossMonitor()
    time_cb = TimeMonitor(data_size=batch_num)
    cbs = [time_cb, ckpoint_cb, loss_cb]

    # train
    model.train(cfg.epoch_size, train_ds, callbacks=cbs)

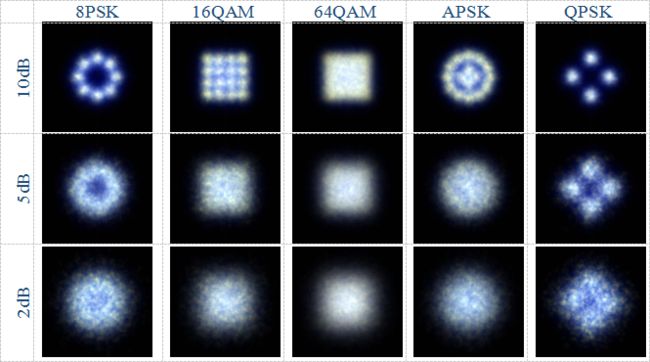
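The GoogLeNet training script uses a `CrossEntropySmooth` loss imported from `G_4Lossfun`, which is not listed in this article. The following pure-Python sketch illustrates the general idea of label-smoothed cross entropy for a single sample. It assumes the common convention in which the true class receives a target of 1 - smooth_factor and the remaining mass is shared equally among the other classes; the function name and the exact smoothing convention are assumptions, not the author's implementation.

```python
import math


def smoothed_cross_entropy(logits, label, smooth_factor=0.1):
    """Cross entropy between softmax(logits) and a label-smoothed target."""
    num_classes = len(logits)
    on_value = 1.0 - smooth_factor
    off_value = smooth_factor / (num_classes - 1)

    # numerically stable log-softmax
    max_logit = max(logits)
    log_sum = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    log_probs = [z - log_sum for z in logits]

    # smoothed target distribution; its entries sum to 1
    target = [on_value if i == label else off_value for i in range(num_classes)]
    return -sum(t * lp for t, lp in zip(target, log_probs))


# A confident, correct prediction for class 0 among five classes.
demo_logits = [10.0, 0.0, 0.0, 0.0, 0.0]
print("without smoothing:", smoothed_cross_entropy(demo_logits, 0, smooth_factor=0.0))
print("with smoothing:   ", smoothed_cross_entropy(demo_logits, 0, smooth_factor=0.1))
```

With smoothing, even a very confident correct prediction keeps a non-negligible loss, which discourages the network from producing extreme logits.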

Experimental results

Grayscale images

Grayscale image dataset types

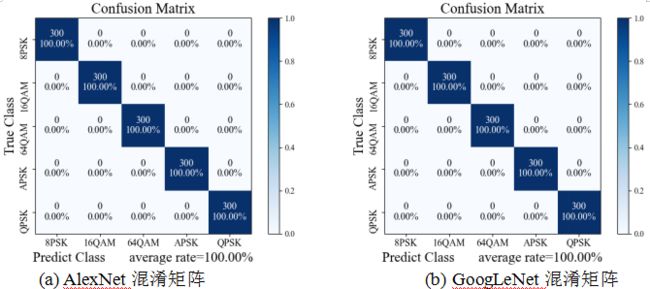

Confusion matrices of the different networks on the grayscale image dataset

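On the grayscale image dataset, all samples have an SNR of 10 dB and the model parameters were fine-tuned experimentally, so the initial overall accuracies reach 81.27%, 85.47% and 86.00% for the respective networks. Every network recognizes QPSK well, whereas 16QAM, 64QAM, 8PSK and APSK are recognized poorly; this is related to the number of sample points of each modulation type and the shape of the generated images.

Grayscale-enhanced images

Next, AlexNet and GoogLeNet are trained and validated on grayscale-enhanced images, to see how much combining the images with a distance-attenuation model improves the image features.

Grayscale-enhanced image dataset types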

Confusion matrices of the different networks on the grayscale-enhanced image dataset

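The results show that with AlexNet, 8PSK, APSK and QPSK are recognized with over 90% accuracy, but some 16QAM samples are misclassified as 64QAM and APSK, and some 64QAM samples are misidentified as QPSK and 16QAM. With GoogLeNet, 8PSK, APSK and QPSK are also recognized well, but 16QAM and 64QAM are partly confused with each other because their images are similar. Overall, the grayscale-enhanced images perform better than the plain grayscale images.

Three-channel image results

Because RGB images match the input format of the classification networks more closely, the networks are trained and validated on RGB datasets at SNRs of 2 dB, 5 dB and 10 dB.

Three-channel image dataset types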

Confusion matrices of the different networks on the 2 dB RGB dataset

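At the low SNR of 2 dB the overall recognition rate is low: the image features of several modulation types are blurred and overlap, which makes feature extraction difficult. The effect is most pronounced for APSK with AlexNet, where most images are misidentified as 8PSK. GoogLeNet extracts features differently and does not show this behaviour, but its overall recognition rate is also low.

Confusion matrices of the different networks on the 5 dB RGB dataset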

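At an SNR of 5 dB the situation improves greatly: AlexNet and GoogLeNet reach average recognition rates of 97.87% and 98.13%, respectively. At this SNR the networks correctly identify the vast majority of blindly received modulated signals, which indicates considerable practical value and potential for further development.

Confusion matrices of the different networks on the 10 dB RGB dataset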

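The confusion matrices of AlexNet and GoogLeNet at an image SNR of 10 dB show that both networks recognize the modulated signals with 100% accuracy. At 10 dB the networks extract the features of the different modulation images very accurately, so the trained deep-learning models can classify blindly received modulation images precisely.

Time complexity analysis

Time complexity is a measure of both the network and the capability of the experimental hardware. Each network was trained over multiple rounds on the sample data and the training times were averaged. The experiments ran on an Intel Core i7-8700K CPU @ 3.70GHz under Windows 7. The average computation time of each network is as follows.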

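This experiment was carried out on a CPU. Where higher speed and performance are required, the networks can instead be trained on a GPU or an Ascend processor, both of which are better suited to processing massive amounts of data. Besides its compute capability, the Ascend processor also integrates closely with the framework.

Summary

The recognition accuracies obtained in the experiments are plotted for comparison.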

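At high SNR, AlexNet's recognition accuracy rises step by step from just over 80% on grayscale images, through grayscale-enhanced images, to 100% on three-channel images, and GoogLeNet's rises from 85% to 100%. This shows that the image processing steps had a positive effect on the useful features of the images. For RGB images, the two networks reach recognition rates of about 80% and 90%, respectively, at an SNR of 2 dB, and both reach 100% at 10 dB, a large improvement.

The experiments show that three-channel images give higher recognition rates than grayscale images and that modulated signals with a higher SNR are recognized more reliably. Of the two networks, AlexNet computes faster and GoogLeNet is more accurate; with three-channel images at an SNR of 10 dB, both networks reach a recognition rate of 100%.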