Syncing MySQL data to Elasticsearch (ES)

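Two common approaches

1. Logstash

Logstash works well for syncing historical data. With a five-field cron schedule the shortest sync interval is once per minute, so use it with caution if you need near-real-time data.

2. canal

Real-time sync; not demonstrated in this article.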

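Syncing with Logstash

Logstash characteristics:

- No development required; you only need to install and configure Logstash.

- Anything you can express in SQL can be synced, since Logstash fetches the data with a SQL query.

- Supports a full sync on every run, or an incremental sync based on a specific column (such as an auto-increment ID or a modification time).

- The sync frequency is configurable; with a five-field cron schedule the shortest interval is once per minute (use with caution if you need near-real-time data).

- Rows that are physically deleted from the database are not deleted from ES. A workaround is to add a logical-delete column such as IsDelete to the table to mark deleted rows.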
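The logical-delete workaround in the last point can be sketched in Python. This is only an illustration of the idea, not something Logstash provides out of the box: the function name `to_action`, the column name `is_delete`, and the `("index" | "delete", id, source)` tuple shape are all assumptions made for this example.

```python
def to_action(row):
    """Map one database row to a simplified (action, doc_id, source) tuple.

    Assumption: the table has a hypothetical is_delete column where 1 marks
    a logically deleted row. Such rows become "delete" actions; all other
    rows are indexed under their primary key.
    """
    doc_id = str(row["id"])
    if row.get("is_delete") == 1:
        return ("delete", doc_id, None)
    # The flag itself does not need to be stored in the document.
    source = {key: value for key, value in row.items() if key != "is_delete"}
    return ("index", doc_id, source)


rows = [
    {"id": 1, "name": "a", "is_delete": 0},
    {"id": 2, "name": "b", "is_delete": 1},
]
actions = [to_action(row) for row in rows]
```

Because a logically deleted row still exists in the table and its modification time changes when the flag is set, the incremental query still picks it up, which is what makes this workaround possible.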

How it works

Logstash queries the database on a schedule and writes the returned rows to ES.
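The polling idea can be illustrated with a small, self-contained Python simulation. It is a sketch under stated assumptions, not how Logstash is implemented: an in-memory SQLite table stands in for MySQL, a plain dict stands in for the ES index, and `sync_once` is a hypothetical helper name.

```python
import sqlite3


def sync_once(conn, index, last_value):
    """Run one polling cycle and return the new tracking value.

    Rows whose last_time is >= last_value are copied into index, keyed by
    primary key, so a row that is read twice overwrites its old document.
    """
    rows = conn.execute(
        "SELECT id, name, num, last_time FROM product "
        "WHERE last_time >= ? ORDER BY last_time ASC",
        (last_value,),
    ).fetchall()
    for row_id, name, num, last_time in rows:
        index[str(row_id)] = {"id": row_id, "name": name, "num": num, "lastTime": last_time}
        last_value = last_time  # remember the value from the last row seen
    return last_value


conn = sqlite3.connect(":memory:")  # stand-in for the MySQL database
conn.execute(
    "CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT, num INTEGER, last_time TEXT)"
)
conn.executemany(
    "INSERT INTO product VALUES (?, ?, ?, ?)",
    [
        (1, "香甜水蜜桃", 50, "2022-08-12 14:42:17"),
        (2, "红红的火龙果", 90, "2022-08-12 15:17:36"),
    ],
)

index = {}  # stand-in for the Elasticsearch index
checkpoint = sync_once(conn, index, "1970-01-01 00:00:00")

# A later update moves last_time forward, so the next cycle picks the row up.
conn.execute("UPDATE product SET num = 49, last_time = '2022-08-12 15:54:58' WHERE id = 1")
checkpoint = sync_once(conn, index, checkpoint)
```

Note that the `>=` comparison re-reads the row that produced the previous checkpoint; writing each document under its primary key keeps that harmless.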

Logstash setup steps

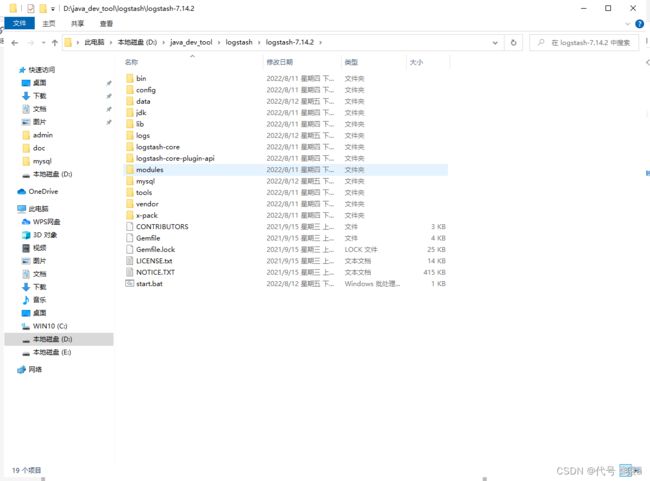

1. Download and install

The Logstash version must match your ES version.

Download: https://www.elastic.co/cn/downloads/past-releases#logstash

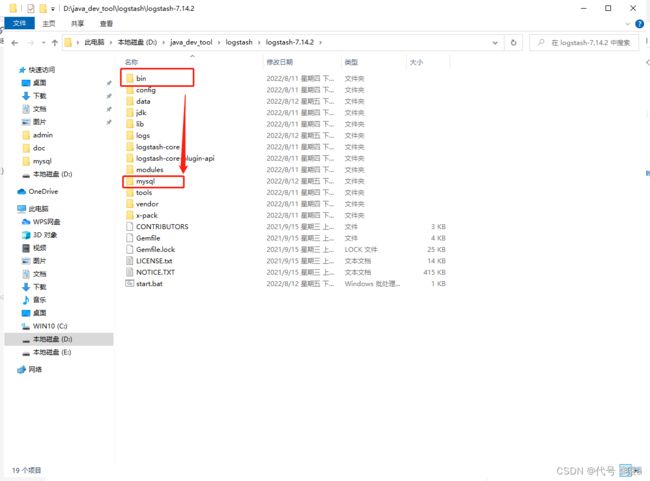

2. Configure

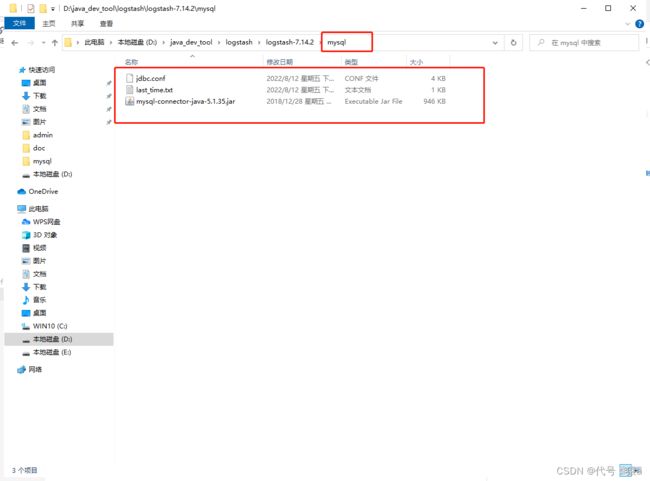

- Create a "mysql" folder in the same directory as bin.

- In the new "mysql" folder, create the files jdbc.conf and last_time.txt, and copy in the MySQL JDBC driver jar.

- Edit jdbc.conf.

Single-table sync

input {

stdin {}

jdbc {

type => "jdbc"

# Database connection URL

jdbc_connection_string => "jdbc:mysql://192.168.1.1:3306/TestDB?characterEncoding=UTF-8&autoReconnect=true"

# Database username and password

jdbc_user => "username"

jdbc_password => "pwd"

# Path to the MySQL JDBC driver jar

jdbc_driver_library => "mysql/mysql-connector-java-5.1.34.jar"

# the name of the driver class for mysql

jdbc_driver_class => "com.mysql.jdbc.Driver"

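# Number of attempts to connect to the database

connection_retry_attempts => "3"

# Validate the connection before use (default: false)

jdbc_validate_connection => "true"

# How often to validate the connection, in seconds (default: 3600)

jdbc_validation_timeout => "3600"

# Enable paged queries (default: false)

jdbc_paging_enabled => "true"

# Rows per page (default: 100000; lower it if rows are wide and updated frequently)

jdbc_page_size => "500"

# statement holds the SQL query; for complex SQL, point statement_filepath at a file containing the query instead

# sql_last_value is a built-in parameter holding the tracking_column value from the last row of the previous run; here that is lastTime

# statement_filepath => "mysql/jdbc.sql"

# Note: datetime values returned by MySQL may not match the date format in the ES mapping, which makes indexing fail, so format them in the SQL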

statement => "SELECT t.id as id,t.`name` as name,t.num as num,t.create_by as createBy,DATE_FORMAT(t.create_time,'%Y-%m-%d %H:%i:%s') as createTime,t.update_by as updateBy,DATE_FORMAT(t.update_time,'%Y-%m-%d %H:%i:%s') as updateTime ,DATE_FORMAT(t.last_time,'%Y-%m-%d %H:%i:%s') as lastTime FROM product as t WHERE DATE_FORMAT(t.last_time,'%Y-%m-%d %H:%i:%s') >= DATE_FORMAT(:sql_last_value,'%Y-%m-%d %H:%i:%s') order by t.last_time asc"

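# Whether to lowercase column names (default: true); set to false to keep the case of the aliases, e.g. when documents are serialized/deserialized into objects

lowercase_column_names => false

# Value can be any of: fatal, error, warn, info, debug; the default is info

sql_log_level => warn

#

# Whether to save run state; when true, the tracking_column value from the last run is saved to the file given by last_run_metadata_path

record_last_run => true

# Set to true to track the value of tracking_column; when false, sql_last_value is the time the query was last run

use_column_value => true

# Column to track for incremental sync; must be a column (or alias) in the query result

tracking_column => "lastTime"

# Value can be any of: numeric, timestamp; the default is "numeric"

tracking_column_type => timestamp

# Where the record_last_run state is stored

last_run_metadata_path => "mysql/last_time.txt"

# Whether to discard the state in last_run_metadata_path; must be false for incremental sync

clean_run => false

#

# Sync schedule in cron syntax (minute hour day-of-month month day-of-week); "* * * * *" runs the query once per minute

schedule => "* * * * *"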

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

# convert changes a field's type; here the TotalMoney field is converted to float

mutate {

convert => {

"TotalMoney" => "float"

}

}

}

output {

elasticsearch {

# host => "192.168.1.1"

# port => "9200"

# ES cluster addresses

hosts => ["192.168.1.1:9200", "192.168.1.2:9200", "192.168.1.3:9200"]

# Index name; must be lowercase

index => "consumption"

# Document ID; use the table's primary key (here the id column selected by the statement)

document_id => "%{id}"

}

stdout {

codec => json_lines

}

}
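The `schedule` value in the configuration above is a five-field cron expression. As a reading aid, the following Python sketch labels each field; `describe_schedule` and `CRON_FIELDS` are hypothetical names for this illustration and are not part of Logstash.

```python
# Field order of a standard five-field cron expression.
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_schedule(expression):
    """Split a five-field cron expression into a dict of named fields."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected {len(CRON_FIELDS)} fields, got {len(parts)}")
    return dict(zip(CRON_FIELDS, parts))


every_minute = describe_schedule("* * * * *")
every_five_minutes = describe_schedule("*/5 * * * *")
```

For example, `"*/5 * * * *"` has `*/5` in the minute field and `*` everywhere else, so the query runs every five minutes.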

Multi-table sync

input {

stdin {}

jdbc {

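# For multi-table sync, type tells the tables apart; naming it "database_table" is recommended, and each jdbc block needs its own type

type => "TestDB_DetailTab"

# Other settings omitted; see the single-table configuration

# ...

# ...

# Where the record_last_run state is stored

last_run_metadata_path => "mysql/last_id.txt"

# Whether to discard the state in last_run_metadata_path; must be false for incremental sync

clean_run => false

#

# Sync schedule in cron syntax (minute hour day-of-month month day-of-week); "* * * * *" runs the query once per minute

schedule => "* * * * *"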

}

jdbc {

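# For multi-table sync, type tells the tables apart; naming it "database_table" is recommended, and each jdbc block needs its own type

type => "TestDB_Tab2"

# For multi-table sync, give each jdbc block a different last_run_metadata_path so the saved states do not overwrite each other

# Other settings omitted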

# ...

# ...

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

}

output {

# The type tested in output must match the type set in the corresponding jdbc block

if [type] == "TestDB_DetailTab" {

elasticsearch {

# host => "192.168.1.1"

# port => "9200"

# ES cluster addresses

hosts => ["192.168.1.1:9200", "192.168.1.2:9200", "192.168.1.3:9200"]

# Index name; must be lowercase

index => "detailtab1"

# Document ID (the database primary key is recommended)

document_id => "%{KeyId}"

}

}

if [type] == "TestDB_Tab2" {

elasticsearch {

# host => "192.168.1.1"

# port => "9200"

# ES cluster addresses

hosts => ["192.168.1.1:9200", "192.168.1.2:9200", "192.168.1.3:9200"]

# Index name; must be lowercase

index => "detailtab2"

# Document ID (the database primary key is recommended)

document_id => "%{KeyId}"

}

}

stdout {

codec => json_lines

}

}
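The conditional routing in the output section above amounts to a lookup from the event's `type` to a target index. A minimal Python sketch of that logic follows; the names `INDEX_BY_TYPE` and `route` are made up for this illustration.

```python
# Mapping taken from the multi-table configuration: jdbc type -> ES index.
INDEX_BY_TYPE = {
    "TestDB_DetailTab": "detailtab1",
    "TestDB_Tab2": "detailtab2",
}


def route(event):
    """Return the index an event would be written to, or None if no branch matches."""
    return INDEX_BY_TYPE.get(event.get("type"))


first = route({"type": "TestDB_DetailTab", "KeyId": 1})
second = route({"type": "TestDB_Tab2", "KeyId": 7})
unmatched = route({"type": "something_else"})
```

An event whose `type` matches neither branch is not written to any index, which is why each jdbc block's `type` has to match the string tested in the output section exactly.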

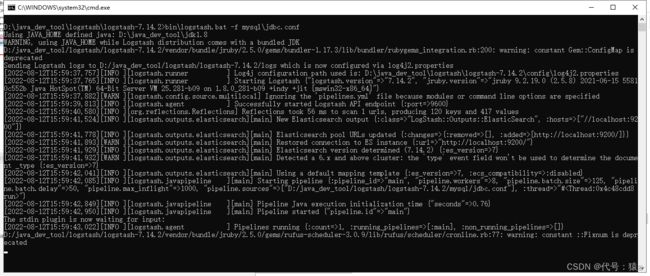

[Windows] bin\logstash.bat -f mysql\jdbc.conf

[Linux] nohup ./bin/logstash -f mysql/jdbc.conf &

Example

Create the ES index in advance. I use easy-es, which created it automatically on startup.

- Database table and initial data

CREATE TABLE `product` (

`id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'Primary key',

`name` varchar(255) DEFAULT NULL COMMENT 'Name',

`num` int(10) DEFAULT NULL COMMENT 'Quantity',

`last_time` datetime DEFAULT NULL COMMENT 'Last modified time',

`create_time` datetime DEFAULT NULL COMMENT 'Creation time',

`update_time` datetime DEFAULT NULL COMMENT 'Update time',

`create_by` varchar(255) DEFAULT NULL COMMENT 'Created by',

`update_by` varchar(255) DEFAULT NULL COMMENT 'Updated by',

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=3 DEFAULT CHARSET=utf8mb4;

INSERT INTO `es`.`product`(`id`, `name`, `num`, `last_time`, `create_time`, `update_time`, `create_by`, `update_by`) VALUES (1, '香甜水蜜桃', 50, '2022-08-12 14:42:17', '2022-08-12 10:47:56', NULL, 'qts', NULL);

INSERT INTO `es`.`product`(`id`, `name`, `num`, `last_time`, `create_time`, `update_time`, `create_by`, `update_by`) VALUES (2, '红红的火龙果', 90, '2022-08-12 15:17:36', '2022-08-12 14:12:41', NULL, 'qts', NULL);

- ES index

{

"product": {

"aliases": {

"ee_default_alias": {}

},

"mappings": {

"properties": {

"@timestamp": {

"type": "date"

},

"@version": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"createBy": {

"type": "keyword"

},

"createTime": {

"type": "date",

"format": "yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis"

},

"id": {

"type": "long"

},

"lastTime": {

"type": "date",

"format": "yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis"

},

"name": {

"type": "text",

"analyzer": "ik_smart",

"search_analyzer": "ik_max_word"

},

"num": {

"type": "integer"

},

"type": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

}

}

},

"settings": {

"index": {

"routing": {

"allocation": {

"include": {

"_tier_preference": "data_content"

}

}

},

"number_of_shards": "1",

"provided_name": "product",

"creation_date": "1660287566474",

"number_of_replicas": "1",

"uuid": "ey2A7AYKQB2OBvBUN-fN3Q",

"version": {

"created": "7140299"

}

}

}

}

}
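The `createTime` and `lastTime` fields in this mapping only accept `yyyy-MM-dd HH:mm:ss`, `yyyy-MM-dd`, or epoch milliseconds, which is why the query formats datetimes with `DATE_FORMAT`. The Python sketch below approximates that check so values can be tested before indexing. It is a simplification (for instance, it only treats integers as epoch milliseconds), and `matches_mapping_format` is a hypothetical helper name.

```python
from datetime import datetime

# Python equivalents of the first two patterns in the mapping's "format".
STRING_FORMATS = ("%Y-%m-%d %H:%M:%S", "%Y-%m-%d")


def matches_mapping_format(value):
    """Roughly check a value against yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis."""
    if isinstance(value, int) and not isinstance(value, bool):
        return True  # treated as epoch_millis
    if not isinstance(value, str):
        return False
    for fmt in STRING_FORMATS:
        try:
            datetime.strptime(value, fmt)
            return True
        except ValueError:
            continue
    return False


formatted_ok = matches_mapping_format("2022-08-12 15:17:36")
iso_rejected = matches_mapping_format("2022-08-12T07:56:00.108Z")
```

An ISO 8601 string such as `2022-08-12T07:56:00.108Z` does not match any of the three patterns, so a datetime sent in that form would be rejected by this mapping.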

- sql

SELECT

t.id AS id,

t.`name` AS name,

t.num AS num,

t.create_by AS createBy,

DATE_FORMAT( t.create_time, '%Y-%m-%d %H:%i:%s' ) AS createTime,

t.update_by AS updateBy,

DATE_FORMAT( t.update_time, '%Y-%m-%d %H:%i:%s' ) AS updateTime,

DATE_FORMAT( t.last_time, '%Y-%m-%d %H:%i:%s' ) AS lastTime

FROM

product AS t

WHERE

DATE_FORMAT( t.last_time, '%Y-%m-%d %H:%i:%s' ) >= DATE_FORMAT(:sql_last_value, '%Y-%m-%d %H:%i:%s' )

ORDER BY

t.last_time ASC

- jdbc.conf

input {

stdin {}

jdbc {

type => "jdbc"

# Database connection URL

jdbc_connection_string => "jdbc:mysql://localhost:3306/es?characterEncoding=UTF-8&autoReconnect=true"

# Database username and password

jdbc_user => "root"

jdbc_password => "root"

# Path to the MySQL JDBC driver jar

jdbc_driver_library => "D:\java_dev_tool\logstash\logstash-7.14.2\mysql\mysql-connector-java-5.1.35.jar"

# the name of the driver class for mysql

jdbc_driver_class => "com.mysql.jdbc.Driver"

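# Number of attempts to connect to the database

connection_retry_attempts => "3"

# Validate the connection before use (default: false)

jdbc_validate_connection => "true"

# How often to validate the connection, in seconds (default: 3600)

jdbc_validation_timeout => "3600"

# Enable paged queries (default: false)

jdbc_paging_enabled => "true"

# Rows per page (default: 100000; lower it if rows are wide and updated frequently)

jdbc_page_size => "500"

# statement holds the SQL query; for complex SQL, point statement_filepath at a file containing the query instead

# sql_last_value is a built-in parameter holding the tracking_column value from the last row of the previous run; here that is lastTime

# statement_filepath => "mysql/jdbc.sql"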

statement => "SELECT t.id as id,t.`name` as name,t.num as num,t.create_by as createBy,DATE_FORMAT(t.create_time,'%Y-%m-%d %H:%i:%s') as createTime,t.update_by as updateBy,DATE_FORMAT(t.update_time,'%Y-%m-%d %H:%i:%s') as updateTime ,DATE_FORMAT(t.last_time,'%Y-%m-%d %H:%i:%s') as lastTime FROM product as t WHERE DATE_FORMAT(t.last_time,'%Y-%m-%d %H:%i:%s') >= DATE_FORMAT(:sql_last_value,'%Y-%m-%d %H:%i:%s') order by t.last_time asc"

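# Whether to lowercase column names (default: true); set to false to keep the case of the aliases, e.g. when documents are serialized/deserialized into objects

lowercase_column_names => false

# Value can be any of: fatal, error, warn, info, debug; the default is info

sql_log_level => warn

#

# Whether to save run state; when true, the tracking_column value from the last run is saved to the file given by last_run_metadata_path

record_last_run => true

# Set to true to track the value of tracking_column; when false, sql_last_value is the time the query was last run

use_column_value => true

# Column to track for incremental sync; must be a column (or alias) in the query result

tracking_column => "lastTime"

# Value can be any of: numeric, timestamp; the default is "numeric"

tracking_column_type => timestamp

# Where the record_last_run state is stored

last_run_metadata_path => "D:\java_dev_tool\logstash\logstash-7.14.2\mysql\last_time.txt"

# Whether to discard the state in last_run_metadata_path; must be false for incremental sync

clean_run => false

#

# Sync schedule in cron syntax (minute hour day-of-month month day-of-week); "* * * * *" runs the query once per minute

schedule => "* * * * *"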

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

# convert changes a field's type; here the TotalMoney field is converted to float

mutate {

convert => {

"TotalMoney" => "float"

}

}

}

output {

elasticsearch {

# host => "localhost"

# port => "9200"

# ES cluster addresses

hosts => ["localhost:9200"]

# Index name; must be lowercase

index => "product"

# Document ID (the database primary key is recommended)

document_id => "%{id}"

}

stdout {

codec => json_lines

}

}

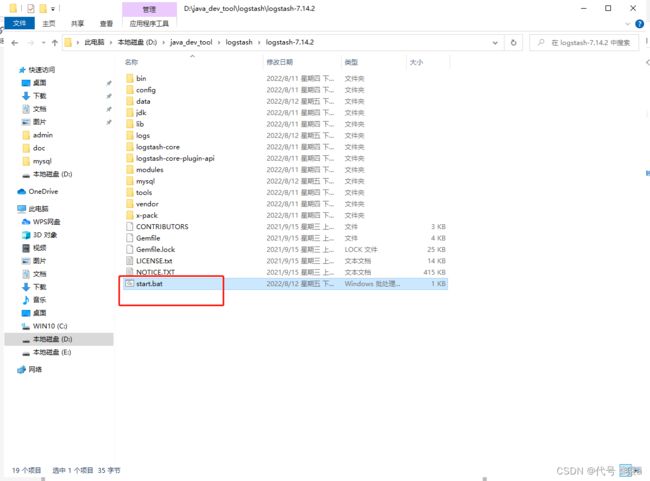

- start.bat, placed in the same directory as bin

bin\logstash.bat -f mysql\jdbc.conf

After Logstash starts, query the index to check the sync: http://localhost:9200/product/_search

- Result

{

"took": 177,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 2,

"relation": "eq"

},

"max_score": 1.0,

"hits": [

{

"_index": "product",

"_type": "_doc",

"_id": "2",

"_score": 1.0,

"_source": {

"id": 2,

"lastTime": "2022-08-12 15:17:36",

"name": "红红的火龙果",

"updateBy": null,

"@version": "1",

"@timestamp": "2022-08-12T07:56:00.108Z",

"type": "jdbc",

"createBy": "qts",

"createTime": "2022-08-12 14:12:41",

"updateTime": null,

"num": 90

}

},

{

"_index": "product",

"_type": "_doc",

"_id": "1",

"_score": 1.0,

"_source": {

"id": 1,

"lastTime": "2022-08-12 15:54:58",

"name": "香甜水蜜桃",

"updateBy": null,

"@version": "1",

"@timestamp": "2022-08-12T07:57:00.212Z",

"type": "jdbc",

"createBy": "qts",

"createTime": "2022-08-12 10:47:56",

"updateTime": null,

"num": 50

}

}

]

}

}
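To check a response like the one above without reading the raw JSON, the hits can be reduced to a few key fields. The Python sketch below works on an already-parsed response; `summarize_hits` is a hypothetical helper, and `sample` is a trimmed copy of the result shown above. Fetching the live response (for example with `urllib.request.urlopen` against the `_search` URL and `json.load`) is left out so the example runs without a cluster.

```python
def summarize_hits(response):
    """Return (total, [(doc_id, name, lastTime), ...]) from a _search response dict."""
    hits = response["hits"]
    rows = [
        (hit["_id"], hit["_source"]["name"], hit["_source"]["lastTime"])
        for hit in hits["hits"]
    ]
    return hits["total"]["value"], rows


# Trimmed copy of the search result shown above.
sample = {
    "hits": {
        "total": {"value": 2, "relation": "eq"},
        "hits": [
            {"_id": "2", "_source": {"name": "红红的火龙果", "lastTime": "2022-08-12 15:17:36"}},
            {"_id": "1", "_source": {"name": "香甜水蜜桃", "lastTime": "2022-08-12 15:54:58"}},
        ],
    }
}

total, rows = summarize_hits(sample)
```

Comparing `lastTime` here with `last_time` in the database is a quick way to confirm that an update made after the initial load was picked up by a later polling cycle.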

Reference: https://zxiaofan.blog.csdn.net/article/details/86708490