Algorithm Notes Collection

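Table of Contents

- 1. FPGrowth: Frequent Itemset Mining

- 2. FTRL: Online Learning

- 3. GMM (Gaussian Mixture Model)

- 4. HMM: Hidden Markov Model

- 5. LightGBM

- 6. LOF: Local Outlier Factor

- 7. Louvain: Community Detection

- 8. One-Class SVM

- 9. PCA + DBSCAN

- 10. SimHash

- 11. Edit Distance

- 12. Word Segmentation Tools

- 13. Isolation Forest

- 14. Locality-Sensitive Hashing

- 15. Locality Sensitive

- 16. Time-Series Anomaly Detection

- 17. GNN: Graph Neural Networks

- 18. Entropy: Information Entropy

- 19. User Profiling

- 20. AutoEncoder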

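Note: this document archives my notes on the various algorithms I run into day to day. It is not part of a series; anyone interested is welcome to use it.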

https://blog.csdn.net/circle2015/article/details/102771232

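1. FPGrowth: Frequent Itemset Mining

Can be used to mine relationships between items.

Well explained, and includes Spark Scala code:

https://blog.csdn.net/zongzhiyuan/article/details/77883641

https://blog.csdn.net/baixiangxue/article/details/80335469

On average, FP-Growth is far more efficient than Apriori, but that efficiency is not guaranteed because it depends on the dataset. Suppose the frequent itemsets in a dataset share no common items. Then every itemset hangs directly off the root node, and no compressed storage is achieved. The FP-tree also carries extra overhead (the header table and node links), so it ends up needing even more storage. Before using FP-Growth, analyze the data to see whether it is a good fit.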
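To make the FP-tree idea concrete, here is a minimal pure-Python sketch of FP-Growth. It illustrates the algorithm only and is not the Spark implementation from the links above. It assumes that items are strings (ties in support are broken alphabetically) and that `min_support` is an absolute count. The names `FPNode`, `build_tree` and `fp_growth` are hypothetical. Each transaction's frequent items are inserted in descending-support order, so shared prefixes collapse into one path. That sharing is exactly the compression that is lost when transactions have no common items.

```python
from collections import defaultdict


class FPNode:
    """One FP-tree node: an item, its count, and links to parent/children."""

    def __init__(self, item, parent):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}


def build_tree(weighted_transactions, min_support):
    """Build an FP-tree from (items, count) pairs.

    Returns (header, frequent): header maps item -> its tree nodes,
    frequent maps item -> support. Returns None if nothing is frequent.
    """
    support = defaultdict(int)
    for items, count in weighted_transactions:
        for item in set(items):
            support[item] += count
    frequent = {i: s for i, s in support.items() if s >= min_support}
    if not frequent:
        return None
    root = FPNode(None, None)
    header = defaultdict(list)
    for items, count in weighted_transactions:
        # Keep only frequent items, most frequent first, so prefixes are shared
        ordered = sorted((i for i in set(items) if i in frequent),
                         key=lambda i: (-frequent[i], i))
        node = root
        for item in ordered:
            if item not in node.children:
                child = FPNode(item, node)
                node.children[item] = child
                header[item].append(child)
            node = node.children[item]
            node.count += count
    return header, frequent


def fp_growth(weighted_transactions, min_support, suffix=frozenset()):
    """Return {frozenset(itemset): support} for all frequent itemsets."""
    result = {}
    built = build_tree(weighted_transactions, min_support)
    if built is None:
        return result
    header, frequent = built
    for item, item_support in frequent.items():
        itemset = suffix | {item}
        result[itemset] = item_support
        # Conditional pattern base: the prefix path above every node of `item`
        conditional = []
        for node in header[item]:
            path = []
            parent = node.parent
            while parent.item is not None:  # stop at the root
                path.append(parent.item)
                parent = parent.parent
            if path:
                conditional.append((path, node.count))
        # Recursively mine the conditional FP-tree for longer itemsets
        result.update(fp_growth(conditional, min_support, itemset))
    return result


transactions = [
    ["bread", "milk"],
    ["bread", "diaper", "beer", "eggs"],
    ["milk", "diaper", "beer", "cola"],
    ["bread", "milk", "diaper", "beer"],
    ["bread", "milk", "diaper", "cola"],
]
patterns = fp_growth([(t, 1) for t in transactions], min_support=3)
for itemset, support in sorted(patterns.items(), key=lambda kv: (-kv[1], sorted(kv[0]))):
    print(sorted(itemset), support)
```

Weighted `(items, count)` pairs let the same function mine both the original data (every count is 1) and the conditional pattern bases, where counts come from tree nodes.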

2. FTRL: Online Learning

https://www.cnblogs.com/EE-NovRain/p/3810737.html
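Below is a minimal sketch of the per-coordinate FTRL-Proximal update (McMahan et al., 2013) for online logistic regression. It assumes sparse features passed as a dict of feature -> value, with labels in {0, 1}. The class name `FTRLProximal` and the hyperparameter defaults (`alpha`, `beta`, `l1`, `l2`) are illustrative assumptions, not values taken from the linked post. Weights are never stored; each one is recomputed from the accumulators z and n. Any coordinate with |z| <= l1 is held at exactly zero, which is how FTRL produces sparse models while learning online.

```python
import math
from collections import defaultdict


class FTRLProximal:
    """Online logistic regression trained with per-coordinate FTRL-Proximal."""

    def __init__(self, alpha=0.1, beta=1.0, l1=1.0, l2=1.0):
        self.alpha, self.beta, self.l1, self.l2 = alpha, beta, l1, l2
        self.z = defaultdict(float)  # accumulated gradients minus sigma * w
        self.n = defaultdict(float)  # accumulated squared gradients

    def _weight(self, i):
        z = self.z[i]
        if abs(z) <= self.l1:
            return 0.0  # L1 keeps this coordinate exactly at zero
        sign = 1.0 if z > 0 else -1.0
        return -(z - sign * self.l1) / (
            (self.beta + math.sqrt(self.n[i])) / self.alpha + self.l2)

    def predict(self, x):
        """x: dict feature -> value. Returns P(y = 1 | x)."""
        wx = sum(self._weight(i) * v for i, v in x.items())
        wx = max(min(wx, 35.0), -35.0)  # avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-wx))

    def update(self, x, y):
        """One online step on example (x, y) with y in {0, 1}."""
        p = self.predict(x)
        for i, v in x.items():
            w = self._weight(i)
            g = (p - y) * v  # gradient of the log loss w.r.t. w_i
            sigma = (math.sqrt(self.n[i] + g * g) - math.sqrt(self.n[i])) / self.alpha
            self.z[i] += g - sigma * w
            self.n[i] += g * g
        return p


model = FTRLProximal(l1=0.1)
stream = [({"a": 1.0}, 1), ({"b": 1.0}, 0)] * 200
for x, y in stream:
    model.update(x, y)
print(model.predict({"a": 1.0}), model.predict({"b": 1.0}))
```

Each example touches only its own non-zero features. That is why this per-coordinate form suits very high-dimensional sparse data such as click logs.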

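3. GMM (Gaussian Mixture Model)

- An easy-to-understand explanation of the GMM model and how it is trained

- Machine Learning (14): the GMM (Gaussian mixture model) algorithm with a Python example

- How can the Gaussian mixture model (GMM) be explained simply?

- Getting started with Spark 2.1.0: Gaussian mixture model (GMM) clustering

- Gaussian mixture model clustering with Spark in Java

- Spark 2.0 machine learning series, part 10: clustering (Gaussian mixture model, GMM)

- Chi-square test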
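To show what "training a GMM" means, here is a minimal sketch of the EM algorithm for a one-dimensional mixture of K Gaussians in plain Python. Spark's `GaussianMixture` does the multivariate version at scale. The function name `gmm_em_1d`, the starting means, the shared initial variance and the fixed iteration count are all assumptions made for illustration. The E-step computes each point's responsibility under every component. The M-step then re-estimates weights, means and variances from those soft assignments.

```python
import math


def normal_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def gmm_em_1d(data, means, n_iter=100, min_var=1e-6):
    """Fit a 1-D Gaussian mixture with EM, starting from the given means.

    Returns (weights, means, variances).
    """
    k = len(means)
    means = list(means)
    weights = [1.0 / k] * k
    overall_mean = sum(data) / len(data)
    overall_var = sum((x - overall_mean) ** 2 for x in data) / len(data)
    variances = [overall_var] * k  # start every component with the data variance
    for _ in range(n_iter):
        # E-step: responsibility of component j for each point x
        resp = []
        for x in data:
            p = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(p)
            resp.append([pj / total for pj in p])
        # M-step: re-estimate parameters from the soft assignments
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            variances[j] = max(
                sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj,
                min_var)  # floor keeps a collapsing component from dividing by 0
    return weights, means, variances


data = [-2.1, -1.9, -2.0, -2.2, -1.8, 3.0, 3.2, 2.9, 3.1, 2.8, 3.0]
weights, means, variances = gmm_em_1d(data, means=[-1.0, 1.0])
print([round(w, 2) for w in weights], [round(m, 2) for m in means])
```

Unlike k-means, every point contributes to every component through its responsibility. The hard cluster label is just the component with the largest responsibility.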

4. HMM: Hidden Markov Model

- https://stackoverflow.com/questions/39207657/hidden-markov-model-hmm-with-apache-spark

- GitHub code that can be used:

- https://github.com/ldanduo/HMM/blob/master/HMM_train.py

- https://github.com/johnnatanjaramillo/Spark-HMM/tree/master/src/main/scala/org/com/jonas/hmm

- https://www.jianshu.com/p/c80ca0aa4213

- https://www.cnblogs.com/luxiaoxun/archive/2013/05/12/3074510.html

- https://www.jb51.net/article/137068.htm

- https://blog.csdn.net/danliwoo/article/details/82731157

- https://www.cnblogs.com/robin2ML/p/7340033.html

- https://blog.csdn.net/djph26741/article/details/101520579

- https://blog.csdn.net/csj941227/article/details/78564486
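Once an HMM's parameters have been trained, the most likely hidden-state sequence for an observation sequence is found with the Viterbi algorithm. Below is a minimal plain-Python sketch. It assumes the model is given as probability dicts `start_p`, `trans_p` and `emit_p`, with every probability > 0 so that the logs are defined. The toy "Healthy/Fever" parameters are the textbook example and do not come from the linked code. Log-probabilities are used so that long sequences do not underflow.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (best_path, log_prob) for an HMM given as probability dicts."""
    # best[t][s]: log-prob of the best path that ends in state s at time t
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]])
             for s in states}]
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # Best predecessor r for state s at time t
            prev, score = max(
                ((r, best[t - 1][r] + math.log(trans_p[r][s])) for r in states),
                key=lambda item: item[1])
            best[t][s] = score + math.log(emit_p[s][observations[t]])
            back[t][s] = prev
    # Follow the back-pointers from the best final state
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]


states = ("Healthy", "Fever")
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {"Healthy": {"Healthy": 0.7, "Fever": 0.3},
           "Fever": {"Healthy": 0.4, "Fever": 0.6}}
emit_p = {"Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
          "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6}}
path, log_prob = viterbi(["normal", "cold", "dizzy"], states, start_p, trans_p, emit_p)
print(path, math.exp(log_prob))
```

For these parameters the decoded path is Healthy, Healthy, Fever, with probability 0.6·0.5 · 0.7·0.4 · 0.3·0.6 = 0.01512.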

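5. LightGBM

- LightGBM documentation (Chinese)

- Deploying LightGBM models in Java

- Building a real-time online prediction system with Java + LightGBM

- Storing a LightGBM model as PMML and calling it from Java

- How to build a machine learning model with Apache Spark and LightGBM to predict credit card fraud

- Example: Python

- Updates to the official Spark package

- Microsoft's official Spark package

- Microsoft's official LightGBM package

- Issue with the official Spark package's Maven dependency

- Official documentation for the Spark package

Using the Spark package throws the following error: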

User class threw exception: java.lang.NoClassDefFoundError: Lcom/microsoft/ml/lightgbm/SWIGTYPE_p_void;java.lang.NoClassDefFoundError: Lcom/microsoft/ml/lightgbm/SWIGTYPE_p_void;

The cause is explained here:

https://github.com/Azure/mmlspark/issues/482

6. LOF: Local Outlier Factor

- https://blog.csdn.net/wangyibo0201/article/details/51705966

- https://blog.csdn.net/Jasminexjf/article/details/88240598

- https://blog.csdn.net/qq_37667364/article/details/91125932

- https://github.com/hibayesian/spark-lof

- https://www.cnblogs.com/bonelee/p/9848019.html

- https://scikit-learn.org/stable/modules/generated/sklearn.neighbors.LocalOutlierFactor.html#sklearn.neighbors.LocalOutlierFactor
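To make the definition concrete, here is a brute-force sketch of the Local Outlier Factor in plain Python. It follows the standard definition (k-distance, reachability distance, local reachability density) that scikit-learn's `LocalOutlierFactor` is also based on. The function name `lof_scores` and the O(n²) neighbour search are illustrative assumptions. For simplicity it ignores ties at the k-distance and assumes no duplicate points. A score near 1 means a point is about as dense as its neighbours; a score well above 1 flags an outlier.

```python
import math


def lof_scores(points, k):
    """Return the LOF score of every point (brute force, O(n^2))."""
    n = len(points)
    dist = [[math.dist(points[i], points[j]) for j in range(n)] for i in range(n)]
    # k nearest neighbours of each point, excluding the point itself
    neighbours = [sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
                  for i in range(n)]
    # k-distance: distance to the k-th nearest neighbour
    k_dist = [dist[i][neighbours[i][-1]] for i in range(n)]

    def reach_dist(i, j):
        # Reachability distance of i from j: never smaller than j's k-distance
        return max(k_dist[j], dist[i][j])

    # Local reachability density = 1 / mean reachability distance to neighbours
    lrd = [k / sum(reach_dist(i, j) for j in neighbours[i]) for i in range(n)]
    # LOF = mean neighbour density divided by the point's own density
    return [sum(lrd[j] for j in neighbours[i]) / (k * lrd[i]) for i in range(n)]


points = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (5, 5)]
scores = lof_scores(points, k=3)
print([round(s, 2) for s in scores])
```

Because LOF compares each point with the density of its own neighbourhood, it can flag points that are outliers only locally, next to a dense cluster. A single global distance threshold would miss them.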

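7. Louvain: Community Detection

- A blog post introducing various graph algorithms; it points out some performance problems in the DGA library

- Official documentation of the DGA library

- The full DGA library, supports Spark 2.0

- DGA's standalone Louvain library, does not support Spark 2.0

- A version by another developer built on the library above, supports Spark 2.0

- A Java version

- Hierarchical community detection with Spark (testing the Louvain algorithm): it just runs the official code above, but explains what each field means

- Python package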

8. One-Class SVM

- https://www.cnblogs.com/wj-1314/p/10701708.html

```python
# %matplotlib inline   (uncomment when running inside Jupyter)
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.font_manager
from matplotlib.lines import Line2D
from sklearn import svm

xx, yy = np.meshgrid(np.linspace(-5, 5, 500), np.linspace(-5, 5, 500))

# Generate training data: two blobs around (2, 2) and (-2, -2)
X = 0.3 * np.random.randn(100, 2)
X_train = np.r_[X + 2, X - 2]
# Generate new regular observations from the same distribution
X = 0.3 * np.random.randn(100, 2)
X_test = np.r_[X + 2, X - 2]
# Generate some abnormal novel observations
X_outliers = np.random.uniform(low=0.1, high=4, size=(20, 2))

# Fit the model
clf = svm.OneClassSVM(nu=0.1, kernel='rbf', gamma=0.1)
clf.fit(X_train)
# predict() returns +1 for inliers and -1 for outliers
y_pred_train = clf.predict(X_train)
y_pred_test = clf.predict(X_test)
y_pred_outliers = clf.predict(X_outliers)
n_error_train = y_pred_train[y_pred_train == -1].size
n_error_test = y_pred_test[y_pred_test == -1].size
n_error_outlier = y_pred_outliers[y_pred_outliers == 1].size

# shape[0] is the number of samples (.size would count every coordinate)
n_train, n_test, n_outliers = X_train.shape[0], X_test.shape[0], X_outliers.shape[0]
print("size of train: %s, number of error: %s" % (n_train, n_error_train))
print("size of test: %s, number of error: %s" % (n_test, n_error_test))
print("size of outliers: %s, number of error: %s" % (n_outliers, n_error_outlier))

# Plot the decision function, the learned frontier and the points
Z = clf.decision_function(np.c_[xx.ravel(), yy.ravel()])
Z = Z.reshape(xx.shape)
plt.title("Novelty Detection")
plt.contourf(xx, yy, Z, levels=np.linspace(Z.min(), 0, 7), cmap=plt.cm.PuBu)
plt.contour(xx, yy, Z, levels=[0, Z.max()], colors='palevioletred')
s = 40
b1 = plt.scatter(X_train[:, 0], X_train[:, 1], c='white', s=s, edgecolors='k')
b2 = plt.scatter(X_test[:, 0], X_test[:, 1], c='blueviolet', s=s, edgecolors='k')
c = plt.scatter(X_outliers[:, 0], X_outliers[:, 1], c='gold', s=s, edgecolors='k')
plt.axis('tight')
plt.xlim((-5, 5))
plt.ylim((-5, 5))
# A proxy line stands in for the contour in the legend
# (ContourSet.collections is deprecated in recent Matplotlib versions)
frontier = Line2D([0], [0], color='palevioletred')
plt.legend([frontier, b1, b2, c],
           ["learned frontier", "training observations",
            "new regular observations", "new abnormal observations"],
           loc="upper left",
           prop=matplotlib.font_manager.FontProperties(size=11))
plt.xlabel(
    "error train: %d/%d; errors novel regular: %d/%d; errors novel abnormal: %d/%d" % (
        n_error_train, n_train, n_error_test, n_test, n_error_outlier, n_outliers))
plt.show()
```

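9. PCA + DBSCAN

- Spark ML: PCA (principal component analysis)

- Clustering geolocation data with Spark and DBSCAN

- Scala code for DBSCAN

- Running DBSCAN on Spark: what does industrial-grade code look like?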

```xml
<dependency>
    <groupId>org.scalanlp</groupId>
    <artifactId>nak_2.11</artifactId>
    <version>1.3</version>
</dependency>
```
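The Spark resources above target large-scale use. To show the algorithm itself, here is a minimal single-machine DBSCAN sketch in plain Python with brute-force region queries. The function name `dbscan`, the label convention (-1 for noise, clusters numbered from 0) and the Euclidean metric are assumptions made for illustration. In a PCA + DBSCAN pipeline, the input points would be the PCA-reduced vectors.

```python
import math
from collections import deque

NOISE = -1


def dbscan(points, eps, min_pts):
    """Cluster points; returns one label per point (NOISE = -1)."""
    n = len(points)
    labels = [None] * n  # None = not visited yet

    def region_query(i):
        # All points within eps of point i, including i itself
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        neighbours = region_query(i)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        cluster += 1  # i is a core point: start a new cluster
        labels[i] = cluster
        queue = deque(neighbours)
        while queue:
            j = queue.popleft()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable but not core
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = region_query(j)
            if len(j_neighbours) >= min_pts:
                queue.extend(j_neighbours)  # j is also core: keep expanding
    return labels


points = [(0, 0), (0, 0.5), (0.5, 0), (0.5, 0.5),
          (10, 10), (10, 10.5), (10.5, 10), (10.5, 10.5),
          (5, 5)]
labels = dbscan(points, eps=0.8, min_pts=3)
print(labels)
```

Every region query here scans all n points, so the sketch is O(n²). Distributed implementations such as the ones linked above mainly differ in how they partition space so that each query only touches nearby points.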

10. SimHash

- Introduction to SimHash and a Java implementation

- https://github.com/commoncrawl/commoncrawl/blob/master/src/main/java/org/commoncrawl/util/shared/SimHash.java

- Java: SimHash principles and implementation
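Below is a minimal SimHash sketch in plain Python. It assumes whitespace tokenisation, term frequency as each token's weight, and a 64-bit token hash taken from MD5. The linked Java implementations may use different tokenisers, weights and hash functions, and the names `token_hash`, `simhash` and `hamming_distance` are hypothetical. Each token's hash votes +weight or -weight on every bit, and the sign of each bit's total becomes the fingerprint bit. As a result, similar documents tend to end up with fingerprints that differ in only a few bits.

```python
import hashlib
from collections import Counter


def token_hash(token, bits=64):
    # Stable 64-bit hash of a token, taken from its MD5 digest
    digest = hashlib.md5(token.encode("utf-8")).digest()
    return int.from_bytes(digest[:bits // 8], "big")


def simhash(text, bits=64):
    """SimHash fingerprint of a text, using term frequency as the weight."""
    votes = [0] * bits
    for token, weight in Counter(text.split()).items():
        h = token_hash(token, bits)
        for b in range(bits):
            votes[b] += weight if (h >> b) & 1 else -weight
    # Bit b of the fingerprint is 1 when the weighted vote for it is positive
    return sum(1 << b for b in range(bits) if votes[b] > 0)


def hamming_distance(a, b):
    return bin(a ^ b).count("1")


doc_a = "the quick brown fox jumps over the lazy dog"
doc_b = "the quick brown fox jumped over the lazy dog"
doc_c = "spark runs lightgbm models on a yarn cluster for fraud detection"
print(hamming_distance(simhash(doc_a), simhash(doc_b)),
      hamming_distance(simhash(doc_a), simhash(doc_c)))
```

For near-duplicate detection, two documents are usually treated as duplicates when the Hamming distance between their fingerprints is at most a small threshold. The threshold is a tuning choice.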

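11. Edit Distance

Edit distance is a dynamic-programming problem.

- LeetCode 72. Edit Distance

- Implementing text edit distance in Scala

- How to measure the similarity between two strings

- Edit distance

- Edit distance (edit distance)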

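```scala
/**
 * Returns the similarity of two strings in [0, 1]:
 * 1 - editDistance / length of the longer string.
 * @param s1 first string
 * @param s2 second string
 * @return similarity score
 */
def editDistOptimizedSimilarity(s1: String, s2: String): Double = {
  val longest = math.max(if (s1 == null) 0 else s1.length, if (s2 == null) 0 else s2.length)
  if (longest == 0) 1.0 // two empty strings are identical (avoids 0 / 0)
  else 1 - editDistOptimized(s1, s2).toDouble / longest
}

/**
 * Edit (Levenshtein) distance with a rolling two-row DP table:
 * time stays O(m * n), but extra space drops from O(m * n) to O(n).
 * @param s1 first string
 * @param s2 second string
 * @return minimum number of insertions, deletions and substitutions
 *         that turn s1 into s2; see https://www.jianshu.com/p/10519b584b50
 */
def editDistOptimized(s1: String, s2: String): Int = {
  // If either string is null or empty, the distance is the length of the other
  if (s1 == null || s1.isEmpty) return if (s2 == null) 0 else s2.length
  if (s2 == null || s2.isEmpty) return s1.length

  val s1_length = s1.length + 1
  val s2_length = s2.length + 1
  // Only two rows are kept: the previous row and the current row
  val matrix = Array.ofDim[Int](2, s2_length)
  // Row 0: turning "" into the first j chars of s2 takes j insertions
  for (j <- 0 until s2_length) {
    matrix(0)(j) = j
  }
  // Indices of the previous and current rows; swapped after every row
  var last_index = 0
  var current_index = 1
  var tmp = 0
  var cost = 0
  for (i <- 1 until s1_length) {
    // Column 0: turning the first i chars of s1 into "" takes i deletions
    matrix(current_index)(0) = i
    for (j <- 1 until s2_length) {
      // Substituting a character is free when the two characters match
      cost = if (s1.charAt(i - 1) == s2.charAt(j - 1)) 0 else 1
      // dp(i)(j) = min(dp(i-1)(j) + 1, dp(i)(j-1) + 1, dp(i-1)(j-1) + cost)
      matrix(current_index)(j) = math.min(
        math.min(matrix(last_index)(j) + 1, matrix(current_index)(j - 1) + 1),
        matrix(last_index)(j - 1) + cost)
    }
    // Swap the two row indices so the same arrays are reused
    tmp = last_index
    last_index = current_index
    current_index = tmp
  }
  // After the final swap, last_index points at the last computed row
  matrix(last_index)(s2_length - 1)
}
```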

12. Word Segmentation Tools

Covers ICTCLAS, Jieba, Ansj, THULAC, FNLP, Stanford CoreNLP and LTP:

https://www.cnblogs.com/en-heng/p/6225117.html

13. Isolation Forest

- A distributed implementation of the Isolation Forest anomaly detection algorithm

- Isolation Forest algorithm principles explained in detail

- 0x14 Anomaly mining: Isolation Forest

- isolation forest

- Links on GitHub

- My own GitHub repository
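Below is a minimal single-machine sketch of Isolation Forest in plain Python. It follows the outline of the original algorithm: random axis-aligned splits, a subsample size ψ (256 by default), and the anomaly score s(x) = 2^(-E[h(x)] / c(ψ)). Here c(n) = 2H(n-1) - 2(n-1)/n is the average path length of an unsuccessful binary-search-tree search, with H(i) ≈ ln(i) + 0.5772. The function names, the fixed random seed and the tree count are assumptions, and the sketch needs at least two data points. Scores close to 1 indicate anomalies; scores clearly below 0.5 indicate normal points.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c(n):
    """Average path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_itree(data, depth, max_depth, rng):
    # Leaf: store its size so the path length can be corrected by c(size)
    if depth >= max_depth or len(data) <= 1:
        return {"size": len(data)}
    dim = rng.randrange(len(data[0]))
    lo = min(x[dim] for x in data)
    hi = max(x[dim] for x in data)
    if lo == hi:
        return {"size": len(data)}
    split = rng.uniform(lo, hi)  # random split between min and max
    return {"dim": dim, "split": split,
            "left": build_itree([x for x in data if x[dim] < split], depth + 1, max_depth, rng),
            "right": build_itree([x for x in data if x[dim] >= split], depth + 1, max_depth, rng)}


def path_length(x, node, depth=0):
    if "size" in node:
        return depth + c(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)


def isolation_forest_scores(data, n_trees=100, sample_size=256, seed=0):
    """Anomaly score in (0, 1] for every point in data (needs len(data) >= 2)."""
    rng = random.Random(seed)
    psi = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(psi))  # height limit used by the algorithm
    trees = [build_itree(rng.sample(data, psi), 0, max_depth, rng) for _ in range(n_trees)]
    scores = []
    for x in data:
        mean_path = sum(path_length(x, t) for t in trees) / n_trees
        scores.append(2 ** (-mean_path / c(psi)))
    return scores


points = [(i * 0.1, j * 0.1) for i in range(5) for j in range(4)] + [(8.0, 8.0)]
scores = isolation_forest_scores(points)
print(round(scores[-1], 2), round(max(scores[:-1]), 2))
```

The isolated point at (8, 8) is usually separated by the very first random split, so its average path is short and its score is high. Points inside the dense grid need many splits to isolate.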

14. Locality-Sensitive Hashing

- Spark Locality Sensitive Hashing (LSH)

- Locality-Sensitive Hashing

- An introduction to the Locality-Sensitive Hashing (LSH) method

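```scala
import org.apache.spark.ml.feature.BucketedRandomProjectionLSH
import org.apache.spark.ml.linalg.Vectors
import org.apache.spark.sql.SparkSession

// Assumed wrapper: spark and movieEmbMap (movieId -> embedding) come from the
// surrounding project code; embeddings are 10-dimensional to match sampleEmb
def embeddingLSH(spark: SparkSession, movieEmbMap: Map[String, Array[Float]]): Unit = {
  val movieEmbSeq = movieEmbMap.toSeq.map(item => (item._1, Vectors.dense(item._2.map(f => f.toDouble))))
  val movieEmbDF = spark.createDataFrame(movieEmbSeq).toDF("movieId", "emb")

  // LSH bucket model: random projections, bucket width 0.1, 3 hash tables
  val bucketProjectionLSH = new BucketedRandomProjectionLSH()
    .setBucketLength(0.1)
    .setNumHashTables(3)
    .setInputCol("emb")
    .setOutputCol("bucketId")
  val bucketModel = bucketProjectionLSH.fit(movieEmbDF)
  val embBucketResult = bucketModel.transform(movieEmbDF)
  println("movieId, emb, bucketId schema:")
  embBucketResult.printSchema()
  println("movieId, emb, bucketId data result:")
  embBucketResult.show(10, truncate = false)

  // Approximate nearest-neighbour search for a sample embedding
  println("Approximately searching for 5 nearest neighbors of the sample embedding:")
  val sampleEmb = Vectors.dense(0.795, 0.583, 1.120, 0.850, 0.174, -0.839, -0.0633, 0.249, 0.673, -0.237)
  bucketModel.approxNearestNeighbors(movieEmbDF, sampleEmb, 5).show(truncate = false)
}
```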

Code taken from the book 《深度学习推荐系统实践》 ("Deep Learning Recommender Systems in Practice"):

https://github.com/wzhe06/SparrowRecSys

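15. Locality Sensitive

- How to solve the embedding cold-start problem in deep recommender systems? (Wang Zhe's column)

- Graph Embedding methods you have to learn in deep learning (Wang Zhe's column)

- Embedding

- The embedding layer in neural networks

- Neural network embeddings explained

- What is the Embedding layer used for in deep learning?

- 10-Minute AI: Everything Can Be Embedded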

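16. Time-Series Anomaly Detection

- From the principles of time-series anomaly detection algorithms to thoughts on applying them (very comprehensive)

- An overview of time-series anomaly detection algorithms (a broad summary)

- Anomaly detection in time-series data (covers machine-learning methods)

- Time-series decomposition algorithm: STL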

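- STL: anomaly detection based on time-series decomposition (mentions a follow-up secondary check)

- Dynamic Time Warping: the DTW algorithm

- The principle and implementation of DTW

- The DTW algorithm and tricks for computing it quickly

- In depth: how Ctrip forecasts business volume with ARIMA time-series analysis

- Time-series models (ARIMA): a Python implementation

- Time-series forecasting with ARIMA models in Python 3

- Exponential smoothing in time-series analysis (Holt-Winters, with code), fairly easy to understand

- Exponential smoothing

- Building an anomaly alerting model for food-delivery order volume forecasting in practice (a Meituan article worth referencing)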
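The DTW articles above all build the same dynamic-programming table. Below is a minimal O(n·m) sketch in plain Python. It assumes 1-D numeric series and uses the absolute difference as the local cost, without a warping-window constraint or the speed-up tricks from the "fast computation" article. `D[i][j]` is the cheapest cost of aligning the first i points of `a` with the first j points of `b`.

```python
import math


def dtw_distance(a, b):
    """Dynamic Time Warping distance between two 1-D sequences."""
    n, m = len(a), len(b)
    # D[i][j]: minimal cumulative cost of aligning a[:i] with b[:j]
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: diagonal match, repeat b[j-1], repeat a[i-1]
            D[i][j] = cost + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    return D[n][m]


series = [0, 1, 2, 3, 2, 1, 0]
shifted = [0, 0, 1, 2, 3, 2, 1, 0]  # same shape, delayed by one step
print(dtw_distance(series, shifted))
```

For these two series the function returns 0.0, because warping lets the first point of `series` align with both leading zeros of `shifted`. A point-by-point distance is not even defined here, since the lengths differ.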

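17. GNN: Graph Neural Networks

- GNN article series

- GNN tutorial: GraphSAGE

- Official GCN code

- Code analysis of graph convolutional networks (GCN), TensorFlow version; a very good walkthrough

- Keras version of GCN, quite good

- Walkthrough of the Keras version's code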

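18. Entropy: Information Entropy

- Computing information entropy

- Information entropy and its Python implementation

Information entropy measures how disordered a dataset is: the larger the value, the more disordered the data.

The larger the entropy, the greater the uncertainty of the random variable.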
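Below is the standard Shannon definition behind these two statements, for a variable taking n values with probabilities p_i (log base 2 gives the result in bits). It is followed by a worked example on the class labels of the dataset in the code that follows (2 "yes", 3 "no"). H reaches its maximum, log2 n, exactly when all n values are equally likely.

```latex
H(X) = -\sum_{i=1}^{n} p_i \log_2 p_i, \qquad 0 \le H(X) \le \log_2 n

H = -\left(\tfrac{2}{5}\log_2\tfrac{2}{5} + \tfrac{3}{5}\log_2\tfrac{3}{5}\right) \approx 0.971
```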

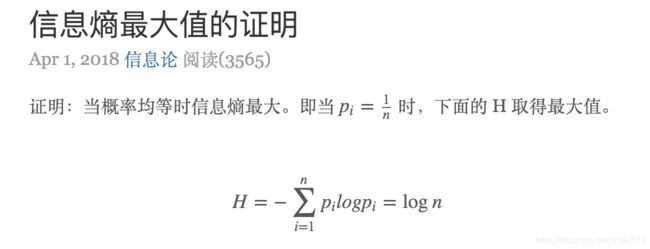

The first function computes the information entropy; the second creates the sample dataset.

```python
import math


def cacShannonEnt(dataset):
    """Shannon entropy (base 2) of the class labels in the last column."""
    numEntries = len(dataset)
    labelCounts = {}
    # Count how many samples carry each class label
    for featVec in dataset:
        currentLabel = featVec[-1]
        if currentLabel not in labelCounts:
            labelCounts[currentLabel] = 0
        labelCounts[currentLabel] += 1
    shannonEnt = 0.0
    # H = -sum(p * log2(p)) over all labels
    for key in labelCounts:
        prob = float(labelCounts[key]) / numEntries
        shannonEnt -= prob * math.log(prob, 2)
    return shannonEnt


def CreateDataSet():
    # Toy dataset: two binary features plus a yes/no class label
    dataset = [[1, 1, 'yes'],
               [1, 1, 'yes'],
               [1, 0, 'no'],
               [0, 1, 'no'],
               [0, 1, 'no']]
    labels = ['no surfacing', 'flippers']
    return dataset, labels


myDat, labels = CreateDataSet()
print(cacShannonEnt(myDat))  # about 0.971 for 2 'yes' and 3 'no'
```

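- Proof of the maximum value of information entropy

- Relative entropy, also known as KL divergence (Kullback–Leibler divergence)

- Understanding cross-entropy in machine learning: the intuition behind it, explained thoroughly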
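Below is a small sketch relating entropy, cross-entropy and KL divergence for discrete distributions, in plain Python with log base 2. The definitions are cross-entropy H(p, q) = -Σ p_i log q_i and KL divergence D_KL(p‖q) = Σ p_i log(p_i / q_i) = H(p, q) - H(p). The function names are illustrative. Terms with p_i = 0 are skipped by convention, and q_i must be > 0 wherever p_i > 0.

```python
import math


def entropy(p):
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    # Requires q_i > 0 wherever p_i > 0
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.4, 0.6]  # "true" distribution: the yes/no label frequencies of the dataset
q = [0.5, 0.5]  # a model's predicted distribution
print(entropy(p), cross_entropy(p, q), kl_divergence(p, q))
```

Minimising cross-entropy against fixed true labels p is therefore the same as minimising the KL divergence. H(p) does not depend on the model, so the two objectives differ only by a constant.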

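19. User Profiling

- User profiling at China Literature (阅文): exploration and practice

- AI algorithms used in building user profiles

- A summary of user profiling

- If you work on users, you can't get around profiling!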

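20. AutoEncoder

The main idea is to encode and then decode, with the encoder and decoder kept symmetric; the network structure itself can be customized.

- Implementing a simple autoencoder in TensorFlow

- Implementing an AutoEncoder in TensorFlow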
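To illustrate the encode then decode idea without TensorFlow, here is a minimal sketch of a linear autoencoder in plain Python. A 2-D input is encoded to a 1-D code h = w_enc·x and decoded back as x̂ = h·w_dec, so the decoder mirrors the encoder. It is trained by full-batch gradient descent on the mean squared reconstruction error. The toy data (points on a line), the initial weights, the learning rate and the epoch count are assumptions. Real autoencoders add non-linear layers, but the training loop has the same shape.

```python
def reconstruct(x, w_enc, w_dec):
    h = sum(we * xi for we, xi in zip(w_enc, x))  # encoder: 2-D -> 1-D code
    return [h * wd for wd in w_dec], h           # decoder: 1-D code -> 2-D


def mse(data, w_enc, w_dec):
    """Mean squared reconstruction error over the dataset."""
    total = 0.0
    for x in data:
        x_hat, _ = reconstruct(x, w_enc, w_dec)
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total / len(data)


def train_autoencoder(data, w_enc, w_dec, lr=0.05, epochs=2000):
    w_enc, w_dec = list(w_enc), list(w_dec)
    for _ in range(epochs):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            x_hat, h = reconstruct(x, w_enc, w_dec)
            r = [a - b for a, b in zip(x_hat, x)]  # reconstruction error
            # Gradients of ||r||^2: d/dw_dec = 2 r h, d/dw_enc = 2 (w_dec . r) x
            back = sum(wd * ri for wd, ri in zip(w_dec, r))
            for k in range(2):
                g_dec[k] += 2 * r[k] * h / len(data)
                g_enc[k] += 2 * back * x[k] / len(data)
        w_enc = [w - lr * g for w, g in zip(w_enc, g_enc)]
        w_dec = [w - lr * g for w, g in zip(w_dec, g_dec)]
    return w_enc, w_dec


data = [(0.6 * t, 0.8 * t) for t in (-1.0, -0.5, 0.5, 1.0)]
init_enc, init_dec = [0.5, 0.1], [0.1, 0.5]
before = mse(data, init_enc, init_dec)
w_enc, w_dec = train_autoencoder(data, init_enc, init_dec)
after = mse(data, w_enc, w_dec)
print(before, after)
```

All the toy points lie on one direction, so a 1-D bottleneck is enough to reconstruct them almost perfectly. The linear autoencoder effectively learns the same subspace that PCA would.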