Introduction to Deep Learning: Error Backpropagation

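Earlier we computed the gradients of a neural network's weight parameters by numerical differentiation. That approach is simple, but it is computationally slow. This article introduces a method that computes those gradients efficiently: error backpropagation.

Forward propagation passes computed results forward (from left to right), which is the ordinary way we carry out calculations. Backpropagation passes local derivatives in the opposite direction (from right to left).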

1. Computational Graphs

A computational graph represents a computation process as a graph.

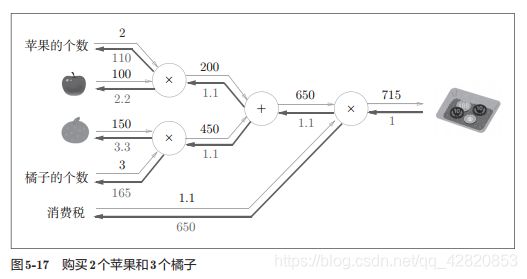

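For example: Taro bought 2 apples and 3 oranges at a supermarket. Apples cost 100 yen each and oranges cost 150 yen each. The consumption tax is 10%. Compute the amount to pay.

The solution process can be expressed as a computational graph.

The defining feature of a computational graph is that the final result is obtained by propagating "local computations". A local computation means that, whatever happens in the rest of the graph, a node can produce its output using only the information directly related to itself.

Advantages of computational graphs:

(1) Local computation lets each node focus on a simple calculation, which simplifies the overall problem;

(2) A computational graph can store all intermediate results;

(3) Most importantly, derivatives can be computed efficiently through backpropagation.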
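As a small illustration of points (1) and (2), the shopping example can be evaluated one node at a time while keeping every intermediate result. This is only a sketch; the dictionary and variable names are chosen for illustration and do not come from the original text.

```python
# Evaluate the shopping example node by node, storing each intermediate value.
apple, apple_num = 100, 2
orange, orange_num = 150, 3
tax = 1.1  # 10% consumption tax expressed as a multiplier

intermediates = {}
# Multiplication node: unit price times quantity for apples
intermediates["apple_price"] = apple * apple_num
# Multiplication node: unit price times quantity for oranges
intermediates["orange_price"] = orange * orange_num
# Addition node: subtotal before tax
intermediates["all_price"] = (
    intermediates["apple_price"] + intermediates["orange_price"]
)
# Multiplication node: apply the tax multiplier
intermediates["price"] = intermediates["all_price"] * tax

for name, value in intermediates.items():
    print(name, value)
```

Each line uses only the values that flow into its own node, which is what "local computation" refers to.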

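2. The Chain Rule

A composite function is a function built from several functions. The chain rule is a property of the derivative of a composite function: if a function is expressed as a composite function, its derivative can be written as the product of the derivatives of the functions that make it up.

For example, if $z = t^2$ and $t = x + y$, then $\frac{\partial z}{\partial x} = \frac{\partial z}{\partial t} \cdot \frac{\partial t}{\partial x} = 2t \cdot 1 = 2(x+y)$.

Backpropagation propagates signals from right to left. At each node, the incoming signal (the derivative arriving from the right) is multiplied by the node's local derivative (a partial derivative), and the product is passed on to the next node.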
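The chain-rule example above can be checked numerically. The following is a minimal sketch, assuming a central-difference approximation with a hypothetical step size `h`; the function names are illustrative only.

```python
def z(x, y):
    # Composite function: t = x + y, z = t ** 2
    t = x + y
    return t ** 2

def dz_dx_chain(x, y):
    # Chain rule: dz/dx = dz/dt * dt/dx = 2t * 1
    t = x + y
    dz_dt = 2 * t
    dt_dx = 1
    return dz_dt * dt_dx

def dz_dx_numeric(x, y, h=1e-4):
    # Central-difference approximation of dz/dx
    return (z(x + h, y) - z(x - h, y)) / (2 * h)

x0, y0 = 3.0, 4.0
analytic = dz_dx_chain(x0, y0)
numeric = dz_dx_numeric(x0, y0)
print(analytic, numeric)
```

The two values should agree up to floating-point rounding, since both estimate $2(x+y)$ at the same point.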

3. Backpropagation

Backpropagation through an addition node

$z = x + y,\quad \frac{\partial z}{\partial x} = 1,\quad \frac{\partial z}{\partial y} = 1$

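    # Implementation of the addition layer
    class AddLayer:
        def __init__(self):
            pass

        def forward(self, x, y):
            out = x + y
            return out

        def backward(self, dout):
            # The local derivative is 1 for both inputs, so the upstream
            # gradient is passed through unchanged.
            dx = dout * 1
            dy = dout * 1
            return dx, dy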

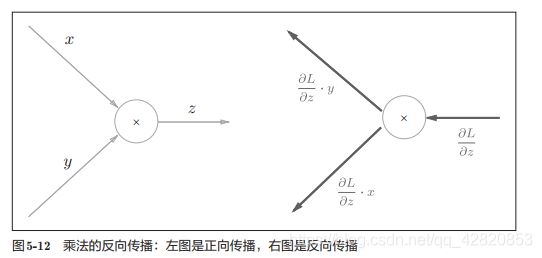

Backpropagation through a multiplication node

$z = x \cdot y,\quad \frac{\partial z}{\partial x} = y,\quad \frac{\partial z}{\partial y} = x$

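    # Implementation of the multiplication layer
    class MulLayer:
        def __init__(self):
            # The forward inputs are stored because backward needs them.
            self.x = None
            self.y = None

        def forward(self, x, y):
            self.x = x
            self.y = y
            out = x * y
            return out

        def backward(self, dout):
            # Multiply the upstream gradient by the "other" input.
            dx = dout * self.y
            dy = dout * self.x
            return dx, dy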

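Next, we use backpropagation on the computational graph to compute the derivatives of the payment amount with respect to the apple price, the number of apples, the orange price, the number of oranges, and the consumption tax.

The input at the far right is the derivative of the payment amount with respect to itself, which is 1. Propagating this value leftward through each node, applying the backward rules for addition and multiplication nodes, yields the derivatives we want.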

    # Example: buying 2 apples and 3 oranges
    if __name__ == "__main__":
        apple = 100
        apple_num = 2
        orange = 150
        orange_num = 3
        tax = 1.1

        mul_apple_layer = MulLayer()
        mul_orange_layer = MulLayer()
        add_apple_orange_layer = AddLayer()
        mul_tax_layer = MulLayer()

        # Forward pass
        apple_price = mul_apple_layer.forward(apple, apple_num)
        orange_price = mul_orange_layer.forward(orange, orange_num)
        all_price = add_apple_orange_layer.forward(apple_price, orange_price)
        price = mul_tax_layer.forward(all_price, tax)
        print(price)  # approximately 715

        # Backward pass (layers are visited in the reverse order of the forward pass)
        dprice = 1
        dall_price, dtax = mul_tax_layer.backward(dprice)
        dapple_price, dorange_price = add_apple_orange_layer.backward(dall_price)
        dapple, dapple_num = mul_apple_layer.backward(dapple_price)
        dorange, dorange_num = mul_orange_layer.backward(dorange_price)
        # approximately 2.2 110 3.3 165 650
        print(dapple, dapple_num, dorange, dorange_num, dtax)
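The derivatives obtained by backpropagation can be cross-checked against finite differences. The sketch below is an assumption-laden illustration: `payment` and `numeric_partials` are hypothetical helper names, and the step size `h` is an arbitrary choice.

```python
def payment(apple, apple_num, orange, orange_num, tax):
    # Same computation as the graph: (apples + oranges) * tax
    return (apple * apple_num + orange * orange_num) * tax

def numeric_partials(f, args, h=1e-4):
    # Central-difference estimate of the partial derivative for each argument
    grads = []
    for i in range(len(args)):
        plus = list(args)
        plus[i] += h
        minus = list(args)
        minus[i] -= h
        grads.append((f(*plus) - f(*minus)) / (2 * h))
    return grads

args = [100.0, 2.0, 150.0, 3.0, 1.1]
grads = numeric_partials(payment, args)
# Order: apple price, apple count, orange price, orange count, tax
print(grads)
```

The printed values should be close to the backpropagation results, but each one costs two extra evaluations of the whole computation, whereas a single backward pass produces all five at once.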

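4. Implementing the Activation Function Layers

    # ReLU layer
    class Relu:
        def __init__(self):
            self.mask = None

        def forward(self, x):
            # Boolean mask marking the elements where x <= 0
            self.mask = (x <= 0)
            out = x.copy()
            out[self.mask] = 0
            return out

        def backward(self, dout):
            # Block the gradient wherever the forward input was <= 0.
            # Note: this modifies dout in place.
            dout[self.mask] = 0
            dx = dout
            return dx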
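For reference, the ReLU function implemented by this layer, and the derivative that `backward` applies through the mask, can be written as follows. This is the standard definition, stated here to match the `x <= 0` mask used in the code.

```latex
y = \begin{cases} x & (x > 0) \\ 0 & (x \le 0) \end{cases}
\qquad
\frac{\partial y}{\partial x} = \begin{cases} 1 & (x > 0) \\ 0 & (x \le 0) \end{cases}
```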

Sigmoid layer

$y = \frac{1}{1 + e^{-x}}$

    import numpy as np

    # Sigmoid layer
    class Sigmoid:
        def __init__(self):
            # The forward output is stored because backward is expressed in terms of it.
            self.out = None

        def forward(self, x):
            out = 1 / (1 + np.exp(-x))
            self.out = out
            return out

        def backward(self, dout):
            # dy/dx = y * (1 - y)
            dx = dout * (1.0 - self.out) * self.out
            return dx
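The `backward` method relies on the identity $\frac{\partial y}{\partial x} = y(1 - y)$ for the sigmoid. A quick numerical check, assuming a central-difference step `h` chosen for illustration and a standalone `sigmoid` helper that is not part of the original code, might look like this:

```python
import numpy as np

def sigmoid(x):
    # Same formula as Sigmoid.forward
    return 1 / (1 + np.exp(-x))

x = np.array([-2.0, 0.0, 3.0])
y = sigmoid(x)

# Derivative expressed through the forward output, as in Sigmoid.backward
analytic = y * (1.0 - y)

# Central-difference estimate for comparison
h = 1e-4
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)

print(analytic)
print(numeric)
```

Because the derivative depends only on the output `y`, the layer needs to store just `self.out` during the forward pass.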

5. Implementing the Affine and Softmax Layers

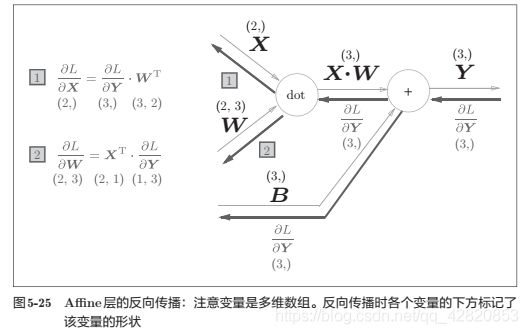

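Affine layer

In the forward pass of a neural network, a matrix product is used to compute the weighted sum of the signals. Backpropagation over matrices, when carried out element by element, follows the same steps as the computational graph for scalars.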

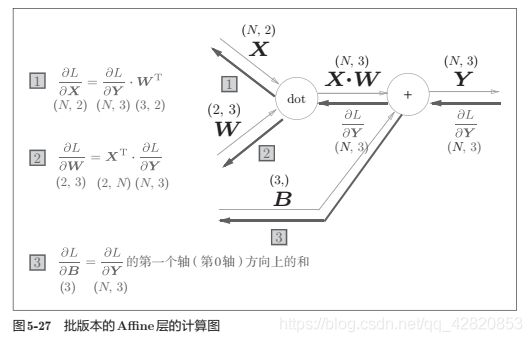

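The figure above shows the Affine layer when the input X is a single data item. Now consider the case where N data items are forward propagated together.

In the forward pass, the bias is added to every data item. In the backward pass, therefore, the gradients coming from the individual data items must be accumulated into the elements of the bias, so we sum each column of $\frac{\partial L}{\partial Y}$ (that is, we sum along axis 0, across the N data items).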

    # Affine layer
    class Affine:
        def __init__(self, w, b):
            self.w = w
            self.b = b
            self.x = None
            # Gradients of the weights and bias, filled in by backward
            self.dw = None
            self.db = None

        def forward(self, x):
            self.x = x
            out = np.dot(x, self.w) + self.b
            return out

        def backward(self, dout):
            dx = np.dot(dout, self.w.T)
            self.dw = np.dot(self.x.T, dout)
            # Sum over the batch axis so that db has the same shape as b
            self.db = np.sum(dout, axis=0)
            return dx
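The shapes involved in the batched Affine layer, and the column sum used for the bias gradient, can be illustrated with a tiny hand-made batch. All numbers below are hypothetical values chosen only to make the arithmetic easy to follow.

```python
import numpy as np

# Hypothetical small batch: N = 2 samples, 3 inputs, 2 outputs
x = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])
w = np.array([[0.1, 0.2],
              [0.3, 0.4],
              [0.5, 0.6]])
b = np.array([10.0, 20.0])

# Forward: b has shape (2,) and is broadcast onto every row of x @ w
y = np.dot(x, w) + b

# Pretend this upstream gradient dL/dY was handed to the layer
dout = np.array([[1.0, 2.0],
                 [3.0, 4.0]])

dx = np.dot(dout, w.T)     # shape (2, 3), same as x
dw = np.dot(x.T, dout)     # shape (3, 2), same as w
db = np.sum(dout, axis=0)  # shape (2,), same as b

print(dx.shape, dw.shape, db)
```

Each gradient ends up with the same shape as the quantity it belongs to, and `db` collects one contribution per sample because the same bias was added to every row.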

Softmax-with-Loss layer

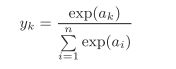

The softmax layer normalizes its input values before outputting them, using the softmax function $y_k = \frac{e^{a_k}}{\sum_i e^{a_i}}$.

Cross-entropy error: $L = -\sum_k t_k \log y_k$

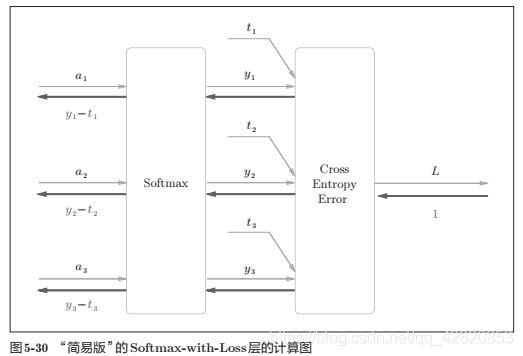

Next, we compute the partial derivative of the loss function $L$ with respect to the input $a_k$.
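One way to carry out this computation, assuming the softmax $y_k = e^{a_k} / \sum_i e^{a_i}$ and a one-hot label (so that $\sum_j t_j = 1$), is sketched below; $\delta_{jk}$ denotes the Kronecker delta.

```latex
\begin{aligned}
\log y_j &= a_j - \log \sum_i e^{a_i}, \\
\frac{\partial \log y_j}{\partial a_k} &= \delta_{jk} - y_k, \\
\frac{\partial L}{\partial a_k}
  &= -\sum_j t_j \left( \delta_{jk} - y_k \right)
   = -t_k + y_k \sum_j t_j
   = y_k - t_k .
\end{aligned}
```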

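Therefore, the backward pass of the Softmax-with-Loss layer is as shown in the figure below.

The goal of neural network training is to adjust the weight parameters so that the network's output approaches the supervised labels. The error between the network's output and the labels must therefore be propagated efficiently to the earlier layers. The backward pass of the Softmax layer yields exactly $y_k - t_k$, which directly expresses the difference between the network's current output and the label.

When cross-entropy error is used as the loss function for softmax, backpropagation gives the "clean" result $y_k - t_k$. This is not a coincidence: the cross-entropy error function was designed so that this result would come out. For the same reason, regression problems use the identity function in the output layer and the sum-of-squares error as the loss function.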

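    # Softmax-with-Loss layer
    # Note: softmax and cross_entropy_error are not defined in this article
    # and are assumed to be provided elsewhere.
    class SoftmaxWithLoss:
        def __init__(self):
            self.loss = None  # loss value
            self.y = None     # output of softmax
            self.t = None     # supervised labels (one-hot)

        def forward(self, x, t):
            self.t = t
            self.y = softmax(x)
            self.loss = cross_entropy_error(self.y, self.t)
            return self.loss

        def backward(self, dout=1):
            batch_size = self.t.shape[0]
            dx = (self.y - self.t) / batch_size
            return dx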

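Note that in the backward pass the value being propagated is divided by the batch size (batch_size), so what is passed to the preceding layer is the error per data item.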
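The layer above calls `softmax` and `cross_entropy_error` without defining them. The following is a minimal sketch of what such helpers might look like, assuming one-hot labels and 2-D batched input; these are illustrative stand-ins, not the original implementations. The sketch also compares `(y - t) / batch_size` against a central-difference estimate.

```python
import numpy as np

def softmax(x):
    # Assumed helper: subtract the row-wise max for numerical stability
    x = x - np.max(x, axis=-1, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=-1, keepdims=True)

def cross_entropy_error(y, t):
    # Assumed helper: y and t have shape (batch, classes); t is one-hot.
    # The small constant avoids log(0).
    batch_size = y.shape[0]
    return -np.sum(t * np.log(y + 1e-7)) / batch_size

x = np.array([[0.3, 2.9, 4.0],
              [1.0, 0.5, -1.0]])
t = np.array([[0.0, 0.0, 1.0],
              [1.0, 0.0, 0.0]])

y = softmax(x)
analytic = (y - t) / x.shape[0]  # same expression as SoftmaxWithLoss.backward

# Central-difference estimate of dL/dx, one element at a time
h = 1e-4
numeric = np.zeros_like(x)
for idx in np.ndindex(*x.shape):
    xp = x.copy()
    xp[idx] += h
    xm = x.copy()
    xm[idx] -= h
    numeric[idx] = (cross_entropy_error(softmax(xp), t)
                    - cross_entropy_error(softmax(xm), t)) / (2 * h)

print(np.max(np.abs(analytic - numeric)))
```

The division by the batch size appears in both places: the loss is averaged over the batch, so its gradient carries the same factor.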

6. Implementing Error Backpropagation

    import numpy as np
    from collections import OrderedDict

    # Note: numerical_gradient (used in num_gradient below) is not defined in
    # this article and is assumed to be provided elsewhere.

    class TwoLayerNet:
        def __init__(self, input_size, hidden_size, output_size, weight_init_std=0.01):
            # Initialize the weights
            self.params = {}
            self.params['W1'] = weight_init_std * np.random.randn(input_size, hidden_size)
            self.params['b1'] = np.zeros(hidden_size)
            self.params['W2'] = weight_init_std * np.random.randn(hidden_size, output_size)
            self.params['b2'] = np.zeros(output_size)

            # Build the layers
            self.layers = OrderedDict()  # ordered dictionary: keeps insertion order
            self.layers['Affine1'] = Affine(self.params['W1'], self.params['b1'])
            self.layers['Relu1'] = Relu()
            self.layers['Affine2'] = Affine(self.params['W2'], self.params['b2'])
            self.lastLayer = SoftmaxWithLoss()

        def predict(self, x):
            for layer in self.layers.values():
                x = layer.forward(x)
            return x

        def loss(self, x, t):
            y = self.predict(x)
            return self.lastLayer.forward(y, t)

        def accuracy(self, x, t):
            y = self.predict(x)
            y = np.argmax(y, axis=1)
            # Convert one-hot labels to class indices
            if t.ndim != 1:
                t = np.argmax(t, axis=1)
            accuracy = np.sum(y == t) / float(x.shape[0])
            return accuracy

        # Compute the gradients by numerical differentiation
        def num_gradient(self, x, t):
            loss_W = lambda W: self.loss(x, t)
            grads = {}
            grads['W1'] = numerical_gradient(loss_W, self.params['W1'])
            grads['b1'] = numerical_gradient(loss_W, self.params['b1'])
            grads['W2'] = numerical_gradient(loss_W, self.params['W2'])
            grads['b2'] = numerical_gradient(loss_W, self.params['b2'])
            return grads

        # Compute the gradients by error backpropagation
        def gradient(self, x, t):
            # forward
            self.loss(x, t)

            # backward
            dout = 1
            dout = self.lastLayer.backward(dout)
            layers = list(self.layers.values())
            layers.reverse()
            for layer in layers:
                dout = layer.backward(dout)

            # Collect the gradients stored in the Affine layers
            grads = {}
            grads['W1'] = self.layers['Affine1'].dw
            grads['b1'] = self.layers['Affine1'].db
            grads['W2'] = self.layers['Affine2'].dw
            grads['b2'] = self.layers['Affine2'].db
            return grads

    # Check that the gradients from numerical differentiation agree with
    # those from error backpropagation
    if __name__ == "__main__":
        from dataset.mnist import load_mnist

        (x_train, t_train), (x_test, t_test) = load_mnist(normalize=True, one_hot_label=True)
        network = TwoLayerNet(input_size=784, hidden_size=50, output_size=10)

        x_batch = x_train[:3]
        t_batch = t_train[:3]

        grad_numerical = network.num_gradient(x_batch, t_batch)
        grad_back = network.gradient(x_batch, t_batch)

        # Mean absolute difference between the two gradients for each parameter
        for key in grad_numerical.keys():
            diff = np.average(np.abs(grad_back[key] - grad_numerical[key]))
            print(key + ":" + str(diff))
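The gradient check calls `numerical_gradient`, which this article does not define. A minimal sketch of such a helper is shown below, assuming a central difference with a hypothetical step `h`, and assuming the array is perturbed in place so that a loss function reading the same array through another reference (as `loss_W` does) sees the change. It is an illustrative stand-in, not the original implementation.

```python
import numpy as np

def numerical_gradient(f, x, h=1e-4):
    # Central-difference gradient of scalar function f with respect to array x.
    # x is modified in place during the computation and restored afterwards.
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        original = x[idx]
        x[idx] = original + h
        f_plus = f(x)
        x[idx] = original - h
        f_minus = f(x)
        x[idx] = original  # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad

# Usage on a function with a known gradient: d/dx sum(x ** 2) = 2x
x = np.array([[3.0, 4.0], [-1.0, 0.5]])
g = numerical_gradient(lambda v: np.sum(v ** 2), x)
print(g)
```

Each element requires two evaluations of `f`, which is why this approach is practical for verifying a backpropagation implementation on a tiny batch but too slow for training.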

    if __name__ == "__main__":
        # Mini-batch training on the MNIST dataset using error backpropagation
        from dataset.mnist import load_mnist

        (x_train, t_train), (x_test, t_test) = load_mnist(normalize=True, one_hot_label=True)

        train_loss_list = []
        train_acc_list = []
        test_acc_list = []

        # Hyperparameters
        iters_num = 10000
        train_size = x_train.shape[0]
        batch_size = 100
        learning_rate = 0.1

        network = TwoLayerNet(input_size=784, hidden_size=50, output_size=10)

        # Number of iterations per epoch (integer, so the modulo test below is exact)
        iter_per_epoch = max(train_size // batch_size, 1)

        for i in range(iters_num):
            # Draw a mini-batch
            batch_mask = np.random.choice(train_size, batch_size)
            x_batch = x_train[batch_mask]
            t_batch = t_train[batch_mask]

            # Compute the gradients
            grad = network.gradient(x_batch, t_batch)

            # Update the parameters
            for key in ('W1', 'b1', 'W2', 'b2'):
                network.params[key] -= learning_rate * grad[key]

            # Record the training progress
            loss = network.loss(x_batch, t_batch)
            train_loss_list.append(loss)

            # Once per epoch, evaluate accuracy on the full training and test sets
            if i % iter_per_epoch == 0:
                train_acc = network.accuracy(x_train, t_train)
                test_acc = network.accuracy(x_test, t_test)
                train_acc_list.append(train_acc)
                test_acc_list.append(test_acc)
                print(train_acc, test_acc)