Prometheus监控系统详解

一、监控原理简介

监控系统在这里特指对数据中心的监控,主要针对数据中心内的硬件和软件进行监控和告警。

从监控对象的角度来看,可以将监控分为网络监控、存储监控、服务器监控和应用监控等。

从程序设计的角度来看,可以将监控分为基础资源监控、中间件监控、应用程序监控和日志监控。

1、基础资源监控

从监控对象的角度来看,可以将基础资源监控分为网络监控、存储监控和服务器监控。

1)网络监控

这里讲解的网络监控主要包括:

- 对数据中心内网络流量的监控;

- 对网络拓扑发现及网络设备的监控;

- 对网络性能的监控及对网络攻击的探测等。

网络监控主要有以下几个方向:

(1)网络性能监控(Network Performance Monitor,NPM):主要涉及网络监测、网络实时流量监控(网络延迟、访问量、成功率等)和历史数据统计、汇总和历史数据分析等功能。

(2)网络攻击检查:主要针对内网或者外网的网络攻击如DDos攻击等,通过分析异常流量来确定网络攻击行为。

(3)设备监控:主要针对数据中心内的多种网络设备进行监控,包括路由器、防火墙和交换机等硬件设备,可以通过SNMP等协议收集数据。

2)存储监控

存储主要分为云存储和分布式存储两部分:

- 云存储主要通过存储设备构建存储资源池,并对操作系统提供统一的存储接口,例如块存储的 SCSI和文件存储 NFS等。它们的特点是存储接口统一,并不识别存储数据的格式和内容,例如块存储只负责保存二进制数据块,这些二进制数据可能来自图片或视频,对于块存储来说都是一样的。

- 分布式存储主要构建在操作系统之上,提供分布式集群存储服务,主要是针对特定数据结构的数据存储。例如HDFS的大文件存储、Dynamo的键值数据存储、Elasticsearch的日志文档存储等。

我们可以将云存储监控分为存储性能监控、存储系统监控、存储设备监控:

- 在存储性能监控方面,块存储通常监控块的读写速率、IOPS、读写延迟、磁盘用量等;文件存储通常监控文件系统Inode、读写速度、目录权限等。

- 在存储系统监控方面,不同的存储系统有不同的指标。例如,对于Ceph存储,需要监控OSD、MON的运行状态,各种状态PG的数量,以及集群IOPS等信息。

- 在存储设备监控方面,对于构建在x86服务器上的存储设备,设备监控通过每个存储节点上的采集器统一收集磁盘、SSD、网卡等设备信息;存储厂商以黑盒方式提供的商业存储设备通常自带监控功能,可监控设备的运行状态、性能和容量等。

3)服务器监控

服务器监控包括物理服务器监控,虚拟机监控和容器监控。

- 对服务器硬件的兼容。数据中心内的服务器通常来自多个厂商如Dell、华为或者联想等,服务器监控需要获取不同厂商的服务器硬件信息。

- 对操作系统的兼容。为了适应不同软件的需求,在服务器上会安装不同的操作系统如 Windows、Linux,采集软件需要做到跨平台运行以获取对应的指标。

- 对虚拟化环境的兼容。当前,虚拟化已经成为数据中心的标配,可以更加高效便捷地获取计算和存储服务。服务器监控需要兼容各种虚拟化环境如虚拟机(KVM、VMware、Xen)及容器(Docker、rkt)。

采集方式通常分为两种:一种是内置客户端,即在每台机器上都安装采集客户端;另一种是在外部采集,例如在虚化环境中可以通过Xen API、VMware Vcenter API或者Libvirt的接口分别获取监控数据。

从操作系统层级来看,采集直指标通常如下:CPU、内存、网络IO、磁盘IO

服务器监控还包括针对物理硬件的监控,可以通过IPMI(Intelligent Platform Management Interface,智能平台管理接口)实现。IPMI是一种标准开放的硬件管理接口,不依赖于操作系统,可以提供服务器的风扇、温度、电压等信息。

4)中间件监控

常用的中间件主要有以下几类:

- 消息中间件,例如RabbitMQ、Kafka。

- Web服务中间件,例如Tomcat、Jetty。

- 缓存中间件,例如Redis、Memcached。

- 数据库中间件,例如MySQL、PostgreSQL。

5)应用程序监控(APM)

6)日志监控

目前业内比较流行的监控组合:

- Fluentd----Kafka----logstash----Elasticsearch----Kibana

- Fluentd:主要负责日志采集,其他开源组件还有Filebeta、Flume、Fluent、Bit等,也有以西而应用集成log4g等日志组件直接输出日志。

- Kafka:主要负责数据整流合并,避免突发日志流量直接冲击Logstash,业内也有用Redis替换Kafka的方案。

- Logstash:负责日志整理,可以完成日志过滤、日志修改等功能。

- Elasticserach:负责日志存储和日志检索,自带分布式存储,可以将采集的日志进行分片存储。

- Kibana:是一个日志查询组件,负责日志展现,主要通过Elasticsearch的HTTP接口展现日志。

2、监控系统实现

1)指标采集

指标采集包括数据采集、数据传输和过滤、以及数据存储

2)数据处理

数据处理分为:数据查询、数据分析和基于规则告警等。

二、Prometheus简介

prometheus受启发于Google的Brogmon监控系统(相似kubernetes是从Brog系统演变而来), 从2012年开始由google工程师Soundcloud以开源形式进行研发,并且与2015年早起对外发布早期版本。 2016年5月继kubernetes之后成为第二个加入CNCF基金会的项目,童年6月正式发布1.0版本。2017年底发布基于全兴存储层的2.0版本,能更好地与容器平台、云平台配合。

官方网站:https://prometheus.io

项目托管:https://github.com/prometheus

1)prometheus的优势

prometheus是基于一个开源的完整监控方案,其对传统监控系统的测试和告警模型进行了彻底的颠覆,形成了基于中央化的规则计算、统一分析和告警的新模型。 相对传统的监控系统有如下几个优点。

- 易于管理: 部署使用的是go编译的二进制文件,不存在任何第三方依赖问题,可以使用服务发现动态管理监控目标。

- 监控服务内部运行状态: 我们可以使用prometheus提供的常用开发语言提供的client库完成应用层面暴露数据, 采集应用内部运行信息。

- 强大的查询语言promQL: prometheus内置一个强大的数据查询语言PromQL,通过PromQL可以实现对监控数据的查询、聚合。同时PromQL也被应用于数据可视化(如grafana)以及告警中的。

- 高效: 对于监控系统而言,大量的监控任务必然导致有大量的数据产生。 而Prometheus可以高效地处理这些数据。

- 可扩展: prometheus配置比较简单, 可以在每个数据中心运行独立的prometheus server, 也可以使用联邦集群,让多个prometheus实例产生一个逻辑集群,还可以在单个prometheus server处理的任务量过大的时候,通过使用功能分区和联邦集群对其扩展。

- 易于集成: 目前官方提供多种语言的客户端sdk,基于这些sdk可以快速让应用程序纳入到监控系统中,同时还可以支持与其他的监控系统集成。

- 可视化: prometheus server自带一个ui, 通过这个ui可以方便对数据进行查询和图形化展示,可以对接grafana可视化工具展示精美监控指标。

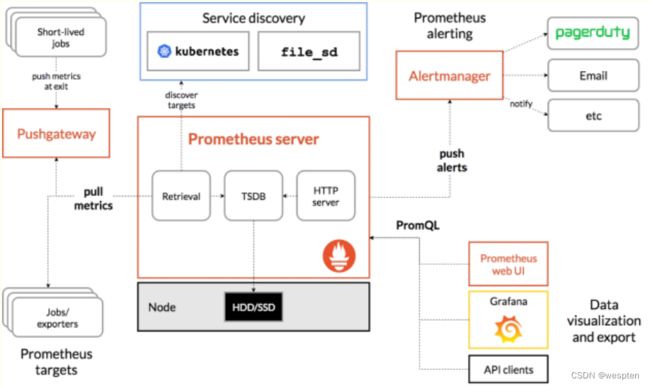

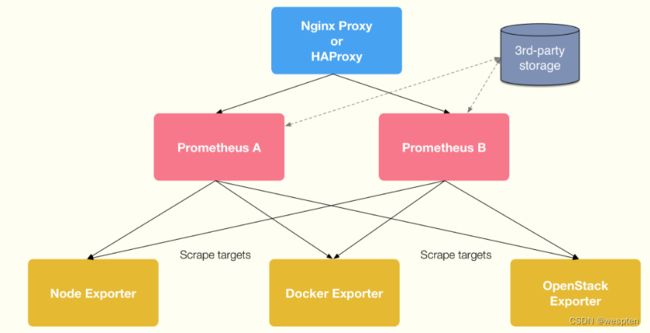

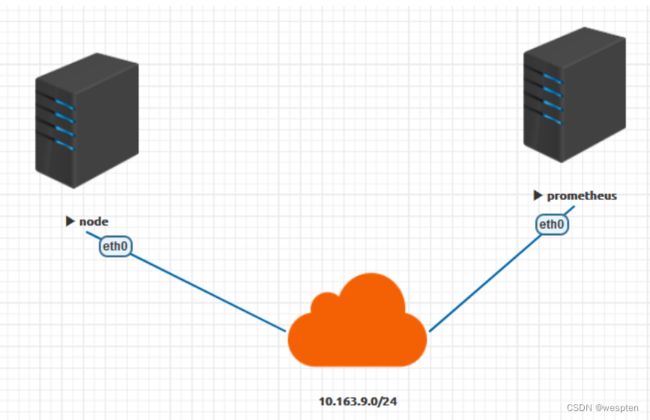

2)Prometheus基础架构

上面的架构图已经画的足够详细了。 这里在简单说下, prometheus负责从pushgateway和job中采集数据, 存储到后端Storatge中,可以通过PromQL进行查询, 推送alerts信息到AlertManager。 AlertManager根据不同的路由规则进行报警通知。

3)核心组件

(1)Prometheus

prometheus server是Prometheus组件中的核心部分,负责实现对监控数据的获取,存储以及查询。

(2)exporters

exporter简单说是采集端,通过http服务的形式保留一个url地址,prometheus server 通过访问该exporter提供的endpoint端点,即可获取到需要采集的监控数据。exporter分为2大类。

直接采集:这一类exporter直接内置了对Prometheus监控的支持,比如cAdvisor,Kubernetes等。

间接采集: 原有监控目标不支持prometheus,需要通过prometheus提供的客户端库编写监控采集程序,例如Mysql Exporter, JMX Exporter等。

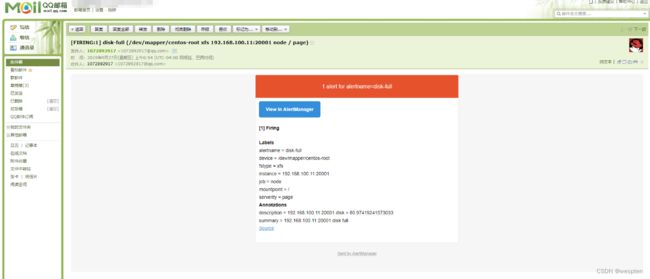

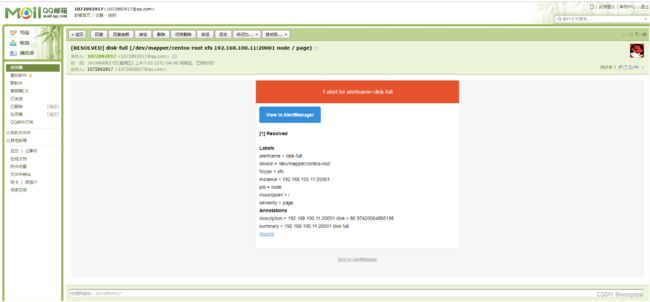

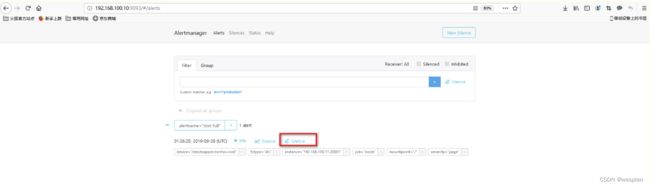

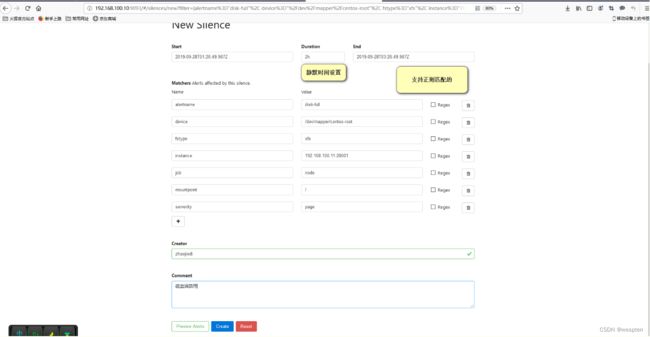

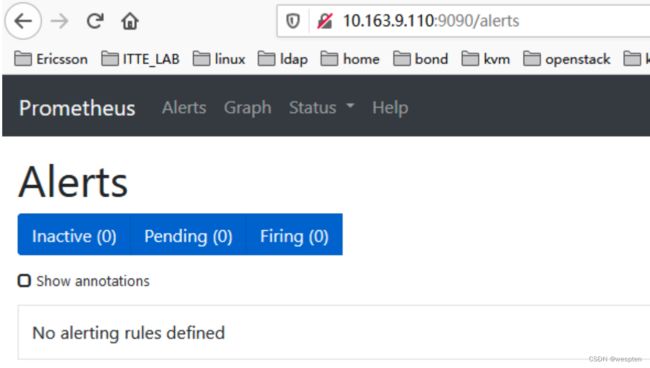

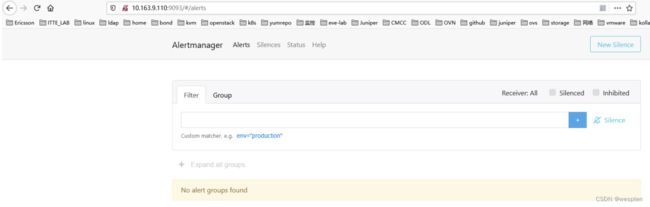

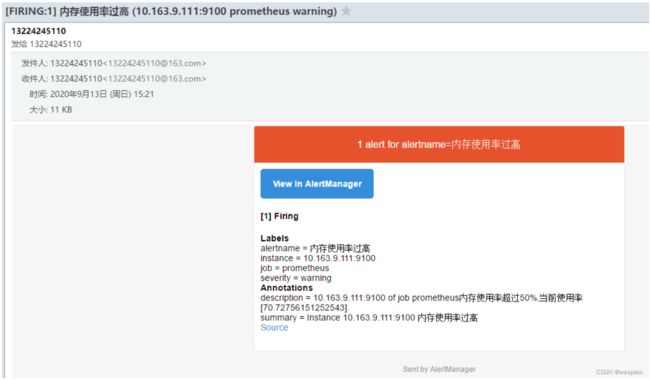

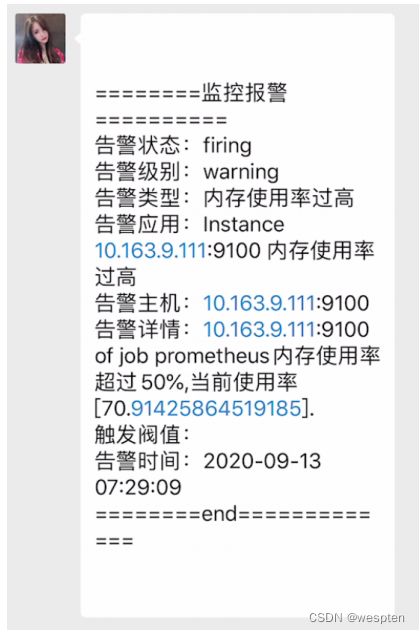

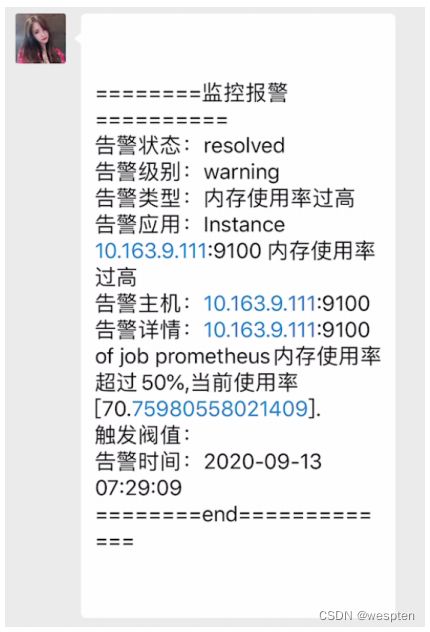

(3)AlertManager

在prometheus中,支持基于PromQL创建告警规则,如果满足定义的规则,则会产生一条告警信息,进入AlertManager进行处理。可以集成邮件,Slack或者通过webhook自定义报警。

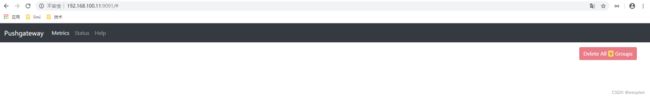

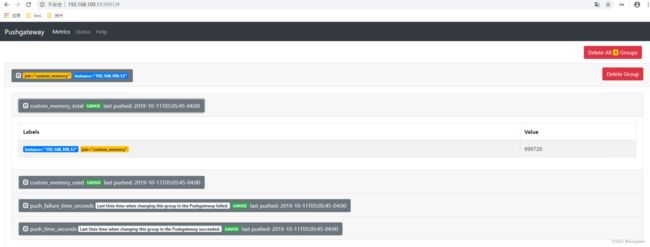

(4)PushGateway

由于Prometheus数据采集采用pull方式进行设置的, 内置必须保证prometheus server 和对应的exporter必须通信,当网络情况无法直接满足时,可以使用pushgateway来进行中转,可以通过pushgateway将内部网络数据主动push到gateway里面去,而prometheus采用pull方式拉取pushgateway中数据。

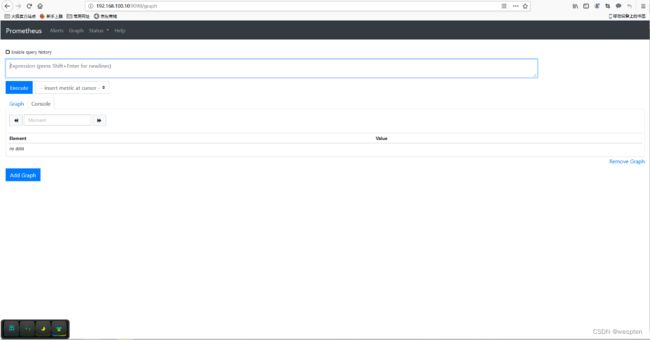

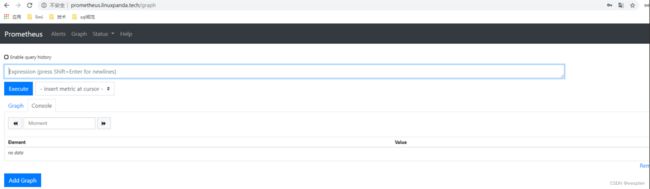

(5)Web UI

Prometheus内置一个简单的Web控制台,可以查询指标,查看配置信息或者Service Discovery等,实际工作中,查看指标或者创建仪表盘通常使用Grafana,Prometheus作为Grafana的数据源。

4)应用场景

适合场景:

普罗米修斯可以很好地记录任何纯数字时间序列。它既适合以机器为中心的监视,也适合高度动态的面向服务的体系结构的监视。在微服务的世界中,它对多维数据收集和查询的支持是一个特别的优势。普罗米修斯是为可靠性而设计的,它是您在停机期间使用的系统,允许您快速诊断问题。每台普罗米修斯服务器都是独立的,不依赖于网络存储或其他远程服务。当您的基础设施的其他部分被破坏时,您可以依赖它,并且您不需要设置广泛的基础设施来使用它。

不适合场景:

普罗米修斯值的可靠性。您总是可以查看有关系统的统计信息,即使在出现故障的情况下也是如此。如果您需要100%的准确性,例如按请求计费,普罗米修斯不是一个好的选择,因为收集的数据可能不够详细和完整。在这种情况下,最好使用其他系统来收集和分析用于计费的数据,并使用Prometheus来完成剩下的监视工作。

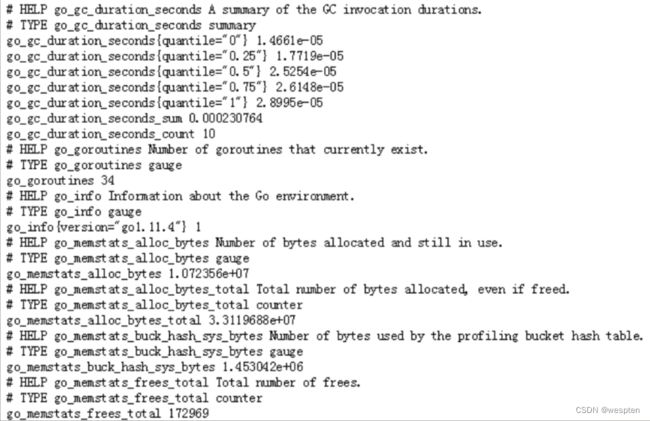

5)Prometheus数据模型

Prometheus将所有数据存储为时间序列;具有相同度量名称以及标签属于同一个指标。

每个时间序列都由度量标准名称和一组键值对(也成为标签)唯一标识。

时间序列格式:

{ 示例:

api_http_requests_total{method="POST", handler="/messages"}

度量名称{标签名=值}值。

HELP:说明指标是干什么的;

TYPE:指标类型,这个数据的指标类型;

注:度量名通常是一英文命名清晰。标签名英文、值推荐英文。

6)Prometheus指标类型

- Counter:递增的计数器

适合:API 接口请求次数,重试次数。 - Gauge:可以任意变化的数值

适合:cpu变化,类似波浪线不均匀。 - Histogram:对一段时间范围内数据进行采样,并对所有数值求和与统计数量、柱状图

适合:将web 一段时间进行分组,根据标签度量名称,统计这段时间这个度量名称有多少条。

适合:某个时间对某个度量值,分组,一段时间http相应大小,请求耗时的时间。 - Summary:与Histogram类似

三、Prometheus安装部署

1、下载

在prometheus的官网的download页面,可以找到prometheus的下载二进制包。

[root@node00 src]# cd /usr/src/

[root@node00 src]# wget https://github.com/prometheus/prometheus/releases/download/v2.12.0/prometheus-2.12.0.linux-amd64.tar.gz

[root@node00 src]# mkdir /usr/local/prometheus/

[root@node00 src]# tar xf prometheus-2.12.0.linux-amd64.tar.gz -C /usr/local/prometheus/

[root@node00 src]# cd /usr/local/prometheus/

[root@node00 prometheus]# ln -s prometheus-2.12.0.linux-amd64 prometheus

[root@node00 prometheus]# ll

total 0

lrwxrwxrwx 1 root root 29 Sep 20 05:06 prometheus -> prometheus-2.12.0.linux-amd64

drwxr-xr-x 4 3434 3434 132 Aug 18 11:40 prometheus-2.12.0.linux-amd64

[root@node00 prometheus]# cd prometheus

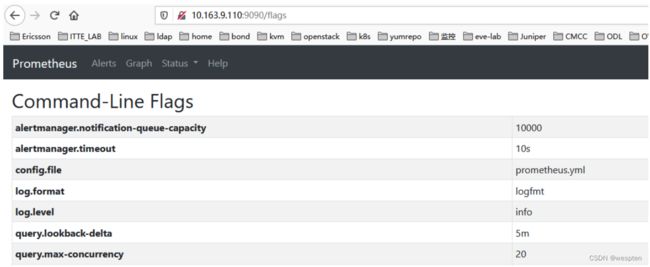

获取配置帮助:

[root@node00 prometheus]# ./prometheus --help2、启动服务

# 启动

[root@node00 prometheus]# ./prometheus

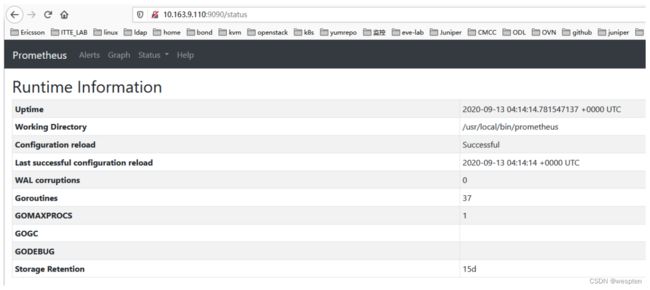

level=info ts=2019-09-20T09:45:35.470Z caller=main.go:293 msg="no time or size retention was set so using the default time retention" duration=15d

level=info ts=2019-09-20T09:45:35.470Z caller=main.go:329 msg="Starting Prometheus" version="(version=2.12.0, branch=HEAD, revision=43acd0e2e93f9f70c49b2267efa0124f1e759e86)"

level=info ts=2019-09-20T09:45:35.470Z caller=main.go:330 build_context="(go=go1.12.8, user=root@7a9dbdbe0cc7, date=20190818-13:53:16)"

level=info ts=2019-09-20T09:45:35.470Z caller=main.go:331 host_details="(Linux 3.10.0-693.el7.x86_64 #1 SMP Tue Aug 22 21:09:27 UTC 2017 x86_64 node00 (none))"

level=info ts=2019-09-20T09:45:35.470Z caller=main.go:332 fd_limits="(soft=1024, hard=4096)"

level=info ts=2019-09-20T09:45:35.470Z caller=main.go:333 vm_limits="(soft=unlimited, hard=unlimited)"

level=info ts=2019-09-20T09:45:35.473Z caller=main.go:654 msg="Starting TSDB ..."

level=info ts=2019-09-20T09:45:35.473Z caller=web.go:448 component=web msg="Start listening for connections" address=0.0.0.0:9090

level=info ts=2019-09-20T09:45:35.519Z caller=head.go:509 component=tsdb msg="replaying WAL, this may take awhile"

level=info ts=2019-09-20T09:45:35.520Z caller=head.go:557 component=tsdb msg="WAL segment loaded" segment=0 maxSegment=0

level=info ts=2019-09-20T09:45:35.520Z caller=main.go:669 fs_type=XFS_SUPER_MAGIC

level=info ts=2019-09-20T09:45:35.520Z caller=main.go:670 msg="TSDB started"

level=info ts=2019-09-20T09:45:35.520Z caller=main.go:740 msg="Loading configuration file" filename=prometheus.yml

level=info ts=2019-09-20T09:45:35.568Z caller=main.go:768 msg="Completed loading of configuration file" filename=prometheus.yml

level=info ts=2019-09-20T09:45:35.568Z caller=main.go:623 msg="Server is ready to receive web requests."

启动参数:

# 指定配置文件

--config.file="prometheus.yml"

# 指定监听地址端口

--web.listen-address="0.0.0.0:9090"

# 最大连接数

--web.max-connections=512

# tsdb数据存储的目录,默认当前data/

--storage.tsdb.path="data/"

# premetheus 存储数据的时间,默认保存15天

--storage.tsdb.retention=15d

3、测试访问

测试访问:http://localhost:9090

查看暴露指标:http://localhost.com:9090/metrics

4、配置开机自启

# 进入systemd文件目录

[root@node00 system]# cd /usr/lib/systemd/system

# 编写prometheus systemd文件

[root@node00 system]# cat prometheus.service

[Unit]

Description=prometheus

After=network.target

[Service]

User=prometheus

Group=prometheus

WorkingDirectory=/usr/local/prometheus/prometheus

ExecStart=/usr/local/prometheus/prometheus/prometheus

[Install]

WantedBy=multi-user.target

# 启动

[root@node00 system]# systemctl restart prometheus

# 查看状态

[root@node00 system]# systemctl status prometheus

● prometheus.service - prometheus

Loaded: loaded (/usr/lib/systemd/system/prometheus.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2019-09-20 06:11:21 EDT; 4s ago

Main PID: 32871 (prometheus)

CGroup: /system.slice/prometheus.service

└─32871 /usr/local/prometheus/prometheus/prometheus

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.634Z caller=head.go:509 component=tsdb msg="replaying WAL, this may take awhile"

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.640Z caller=head.go:557 component=tsdb msg="WAL segment loaded" segment=0 maxSegment=3

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.640Z caller=head.go:557 component=tsdb msg="WAL segment loaded" segment=1 maxSegment=3

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.641Z caller=head.go:557 component=tsdb msg="WAL segment loaded" segment=2 maxSegment=3

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.641Z caller=head.go:557 component=tsdb msg="WAL segment loaded" segment=3 maxSegment=3

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.642Z caller=main.go:669 fs_type=XFS_SUPER_MAGIC

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.642Z caller=main.go:670 msg="TSDB started"

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.642Z caller=main.go:740 msg="Loading configuration file" filename=prometheus.yml

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.686Z caller=main.go:768 msg="Completed loading of configuration file" filename=prometheus.yml

Sep 20 06:11:21 node00 prometheus[32871]: level=info ts=2019-09-20T10:11:21.686Z caller=main.go:623 msg="Server is ready to receive web requests."

# 开机自启配置

[root@node00 system]# systemctl enable prometheus

Created symlink from /etc/systemd/system/multi-user.target.wants/prometheus.service to /usr/lib/systemd/system/prometheus.service.

5、后端存储配置

默认情况下prometheus会将采集的数据防止到本机的data目录的, 存储数据的大小受限和扩展不便,这是使用influxdb作为后端的数据库来存储数据。

influxdb的官方文档地址为: Downloading InfluxDB OSS | InfluxDB OSS 1.7 Documentation 根据不同系统进行下载,这里使用官方提供的rpm进行安装。

# 下载rpm

wget https://dl.influxdata.com/influxdb/releases/influxdb-1.7.8.x86_64.rpm

# 本地安装rpm

sudo yum localinstall influxdb-1.7.8.x86_64.rpm

# 查看安装的文件

[root@node00 influxdb]# rpm -ql influxdb

/etc/influxdb/influxdb.conf

/etc/logrotate.d/influxdb

/usr/bin/influx

/usr/bin/influx_inspect

/usr/bin/influx_stress

/usr/bin/influx_tsm

/usr/bin/influxd

/usr/lib/influxdb/scripts/influxdb.service

/usr/lib/influxdb/scripts/init.sh

/usr/share/man/man1/influx.1.gz

/usr/share/man/man1/influx_inspect.1.gz

/usr/share/man/man1/influx_stress.1.gz

/usr/share/man/man1/influx_tsm.1.gz

/usr/share/man/man1/influxd-backup.1.gz

/usr/share/man/man1/influxd-config.1.gz

/usr/share/man/man1/influxd-restore.1.gz

/usr/share/man/man1/influxd-run.1.gz

/usr/share/man/man1/influxd-version.1.gz

/usr/share/man/man1/influxd.1.gz

/var/lib/influxdb

/var/log/influxdb

# 备份默认的默认的配置文件,这里可以对influxdb的数据存放位置做些设置

[root@node00 influxdb]# cp /etc/influxdb/influxdb.conf /etc/influxdb/influxdb.conf.default

# 启动

[root@node00 influxdb]# systemctl restart influxdb

# 查看状态

[root@node00 influxdb]# systemctl status influxdb

# 客户端登陆测试, 创建一个prometheus的database供后续的prometheus使用。

[root@node00 influxdb]# influx

Connected to http://localhost:8086 version 1.7.8

InfluxDB shell version: 1.7.8

> show databases;

name: databases

name

----

_internal

> create database prometheus;

> show databases;

name: databases

name

----

_internal

prometheus

> exit

配置prometheus集成infludb:

官方的帮助文档在这里:

Prometheus endpoints support in InfluxDB | InfluxDB OSS 1.7 Documentation

[root@node00 prometheus]# pwd

/usr/local/prometheus/prometheus

cp prometheus.yml prometheus.yml.default

vim prometheus.yml

# 添加如下几行

remote_write:

- url: "http://localhost:8086/api/v1/prom/write?db=prometheus"

remote_read:

- url: "http://localhost:8086/api/v1/prom/read?db=prometheus"

systemctl restart prometheus

systemctl status prometheus

注意: 如果influxdb配置有密码, 请参考上面的官方文档地址进行配置。

6、测试数据是否存储到influxdb中

[root@node00 prometheus]# influx

Connected to http://localhost:8086 version 1.7.8

InfluxDB shell version: 1.7.8

> show databases;

name: databases

name

----

_internal

prometheus

> use prometheus

Using database prometheus

> show measures;

ERR: error parsing query: found measures, expected CONTINUOUS, DATABASES, DIAGNOSTICS, FIELD, GRANTS, MEASUREMENT, MEASUREMENTS, QUERIES, RETENTION, SERIES, SHARD, SHARDS, STATS, SUBSCRIPTIONS, TAG, USERS at line 1, char 6

> show MEASUREMENTS;

name: measurements

name

----

go_gc_duration_seconds

go_gc_duration_seconds_count

go_gc_duration_seconds_sum

go_goroutines

go_info

go_memstats_alloc_bytes

# 后面还是有很多,这里不粘贴了。

# 做个简单查询

> select * from prometheus_http_requests_total limit 10 ;

name: prometheus_http_requests_total

time __name__ code handler instance job value

---- -------- ---- ------- -------- --- -----

1568975686217000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 1

1568975701216000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 2

1568975716218000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 3

1568975731217000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 4

1568975746216000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 5

1568975761217000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 6

1568975776217000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 7

1568975791217000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 8

1568975806217000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 9

1568975821216000000 prometheus_http_requests_total 200 /metrics localhost:9090 prometheus 10

四、Prometheus数据存储

Prometheus提供了两种存储方式,分别为本地存储和与远端存储。为了兼容本地存储和远程存储,Prometheus提供了fanout接口。

1、本地存储

Prometheus的本地存储为Promethazine TSDB。TSDB的设计有两个核心:block和WAL,而block有包含chunk、index、meta.json、tombstones。

- chunks:用于保存压缩后的时序数据。每个chunk的大小为512MB。

- index:是为了对监控数据进行快速检索和查询而设计的,主要用来记录chunk中时序的偏移位置。

- tombstone:用于数据进行软删除。

- meta.json:记录block的元数据信息,主要记录一个数据块记录样本的 起始时间(mintime)、截止时间(maxtime)、样本数、时序数和数据源等信息。

WAL(Writer-ahead logging,预写日志)是关系型数据库中利用日志来实现食物性和持久性的一种技术,既在进行某个操作之前先将这个事情记录下来,以便之后对数据进行回滚、重试等操作并保证数据可靠性。

2、远端存储

面对更多历史数据的持久化,Prometheus单纯依靠本地存储远不足以应对,围殴此引入远端存储。为了适应不同远端存储,Prometheus并没有选择对接各种存储,二十定义一套读写存储接口,并引入Adapter适配器。

目前已经实现Adapter的远程存储主要包括:InfluxDB、OpenTSDB 、CreateDB、TiKV、Cortex、M3DB。

五、Prometheus exporter

1、Node_exporter安装部署

[root@node00 ~]# cd /usr/src/

[root@node00 src]# wget https://github.com/prometheus/node_exporter/releases/download/v0.18.1/node_exporter-0.18.1.linux-amd64.tar.gz

[root@node00 src]# mkdir /usr/local/exporter -pv

mkdir: created directory ‘/usr/local/exporter’

[root@node00 src]# tar xf node_exporter-0.18.1.linux-amd64.tar.gz -C /usr/local/exporter/

[root@node00 src]# cd /usr/local/exporter/

[root@node00 exporter]# ls

node_exporter-0.18.1.linux-amd64

[root@node00 exporter]# ln -s node_exporter-0.18.1.linux-amd64/ node_exporter

node_exporter启动:

[root@node00 node_exporter]# ./node_exporter

INFO[0000] Starting node_exporter (version=0.18.1, branch=HEAD, revision=3db77732e925c08f675d7404a8c46466b2ece83e) source="node_exporter.go:156"

INFO[0000] Build context (go=go1.12.5, user=root@b50852a1acba, date=20190604-16:41:18) source="node_exporter.go:157"

INFO[0000] Enabled collectors: source="node_exporter.go:97"

# 中间输出省略

INFO[0000] Listening on :9100 source="node_exporter.go:170"

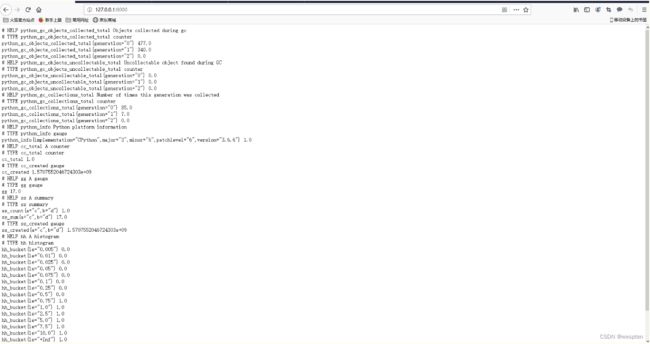

测试node_exporter:

[root@node00 ~]# curl 127.0.0.1:9100/metrics

# 这里可以看到node_exporter暴露出来的数据。

配置node_exporter开机自启:

[root@node00 system]# cd /usr/lib/systemd/system

# 准备systemd文件

[root@node00 systemd]# cat node_exporter.service

[Unit]

Description=node_exporter

After=network.target

[Service]

User=prometheus

Group=prometheus

ExecStart=/usr/local/exporter/node_exporter/node_exporter\

--web.listen-address=:20001\

--collector.systemd\

--collector.systemd.unit-whitelist=(sshd|nginx).service\

--collector.processes\

--collector.tcpstat\

--collector.supervisord

[Install]

WantedBy=multi-user.target

# 启动

[root@node00 exporter]# systemctl restart node_exporter

# 查看状态

[root@node00 exporter]# systemctl status node_exporter

● node_exporter.service - node_exporter

Loaded: loaded (/usr/lib/systemd/system/node_exporter.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2019-09-20 22:43:09 EDT; 5s ago

Main PID: 88262 (node_exporter)

CGroup: /system.slice/node_exporter.service

└─88262 /usr/local/exporter/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(sshd|nginx).service

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - stat" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - systemd" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - textfile" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - time" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - timex" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - uname" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - vmstat" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - xfs" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg=" - zfs" source="node_exporter.go:104"

Sep 20 22:43:09 node00 node_exporter[88262]: time="2019-09-20T22:43:09-04:00" level=info msg="Listening on :9100" source="node_exporter.go:170"

# 开机自启

[root@node00 exporter]# systemctl enable node_exporter

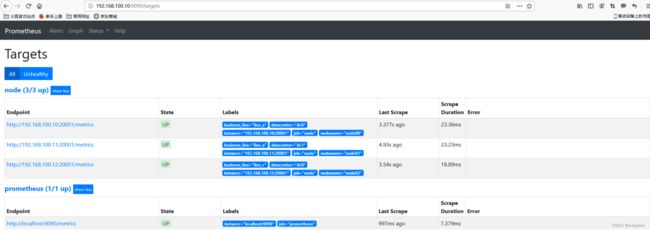

2、配置prometheus采集node信息

修改配置文件:

[root@node00 prometheus]# cd /usr/local/prometheus/prometheus

[root@node00 prometheus]# vim prometheus.yml

# 在scrape_configs中加入job node ,最终scrape_configs如下配置

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

static_configs:

- targets:

- "192.168.100.10:20001"

[root@node00 prometheus]# systemctl restart prometheus

[root@node00 prometheus]# systemctl status prometheus

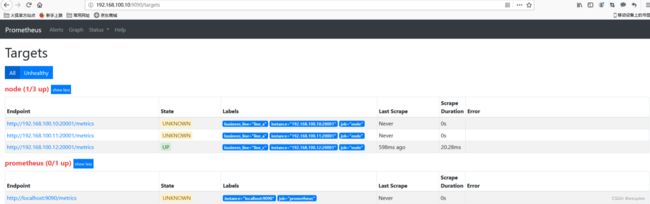

查看集成:

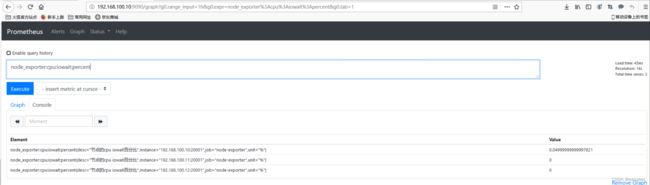

样例查询:

3、exporter详细配置

我们在主机上面安装了node_exporter程序,该程序对外暴露一个用于获取当前监控样本数据的http的访问地址, 这个的一个程序成为exporter,Exporter的实例称为一个target, prometheus通过轮训的方式定时从这些target中获取监控数据。

广义上向prometheus提供监控数据的程序都可以成为一个exporter的,一个exporter的实例称为target, exporter来源主要2个方面,一个是社区提供的,一种是用户自定义的。

1)常用exporter

官方和一些社区提供好多exproter, 我们可以直接拿过来采集我们的数据。

官方的exporter地址: Exporters and integrations | Prometheus

2)Blackbox Exporter

bloackbox exporter是prometheus社区提供的黑盒监控解决方案,运行用户通过HTTP、HTTPS、DNS、TCP以及ICMP的方式对网络进行探测。这里通过blackbox对我们的站点信息进行采集。

blackbox的安装:

# 进入下载目录

[root@node00 ~]# cd /usr/src/

# 下载

[root@node00 src]# wget https://github.com/prometheus/blackbox_exporter/releases/download/v0.15.1/blackbox_exporter-0.15.1.linux-amd64.tar.gz

# 解压

[root@node00 src]# tar xf blackbox_exporter-0.15.1.linux-amd64.tar.gz

# 部署到特定位置

[root@node00 src]# mv blackbox_exporter-0.15.1.linux-amd64 /usr/local/exporter/

# 进入目录

[root@node00 src]# cd /usr/local/exporter/

# 软连接

[root@node00 exporter]# ln -s blackbox_exporter-0.15.1.linux-amd64 blackbox_exporter

# 进入自启目录

[root@node00 exporter]# cd /usr/lib/systemd/system

# 配置blackbox的开机自启文件

[root@node00 system]# cat blackbox_exporter.service

[Unit]

Description=blackbox_exporter

After=network.target

[Service]

User=prometheus

Group=prometheus

WorkingDirectory=/usr/local/exporter/blackbox_exporter

ExecStart=/usr/local/exporter/blackbox_exporter/blackbox_exporter

[Install]

WantedBy=multi-user.target

# 启动

[root@node00 system]# systemctl restart blackbox_exporter

# 查看状态

[root@node00 system]# systemctl status blackbox_exporter

# 开机自启

[root@node00 system]# systemctl enable blackbox_exporter

配置prometheus采集数据:

- job_name: "blackbox"

metrics_path: /probe

params:

module: [http_2xx] # Look for a HTTP 200 response.

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/blackbox*.yml"

relabel_configs:

- source_labels: [__address__]

target_label: __param_target

- source_labels: [__param_target]

target_label: instance

- target_label: __address__

replacement: 192.168.100.10:9115

[root@node00 prometheus]# cat conf/blackbox-dis.yml

- targets:

- https://www.alibaba.com

- https://www.tencent.com

- https://www.baidu.com

grafana展示blackbox采集数据:

重启prometheus查看数据, 可以在grafana导入dashboard id 9965 可以看到如下数据。

3)influxdb_export

influxdb_export 是用来采集influxdb数据的指标的,但是influxdb提供一个专门的一个产品来暴露metrics数据, 也就是说infludb_exporter这个第三方的产品将来会被淘汰了。

不过还是可以使用的,可以参考: https://github.com/prometheus/influxdb_exporter

infludb官方的工具来获取metrics数据是telegraf, 这个工具相当的强大,内部使用prometheus client插件来暴露数据给prometheus采集, 当然这个工具内部集成了几十种插件用户暴露数据给其他的监控系统。

详细的可以参考官方地址: Telegraf output plugins | InfluxData Documentation Archive

这里我们使用的监控系统是prometheus, 只需要关注如下配置即可:https://github.com/influxdata/telegraf/tree/release-1.7/plugins/outputs/prometheus_client

telegraf的安装配置:

wget https://dl.influxdata.com/telegraf/releases/telegraf-1.12.2-1.x86_64.rpm

sudo yum localinstall telegraf-1.12.2-1.x86_64.rpm

rpm -ql |grep telegraf

cp /etc/telegraf/telegraf.conf /etc/telegraf/telegraf.conf.default

# 修改如下部分

[[outputs.prometheus_client]]

## Address to listen on

listen = ":9273"

systemctl restart telegraf

systemctl status telegraf

systemctl enabletelegraf

集成prometheus:

# prometheus加入如下采集

- job_name: "influxdb-exporter"

static_configs:

- targets: [ "192.168.100.10:9273" ]

查看数据:

六、Prometheus配置详解

在prometheus监控系统,prometheus的职责是采集,查询和存储和推送报警到alertmanager。

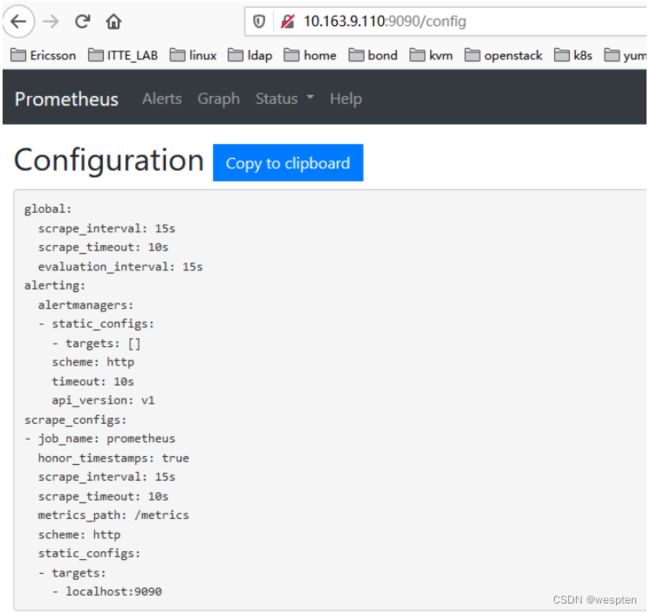

1、全局配置文件

默认配置文件:

[root@node00 prometheus]# cat prometheus.yml.default

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s).

# Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- global: 此片段指定的是prometheus的全局配置, 比如采集间隔,抓取超时时间等。

- rule_files: 此片段指定报警规则文件, prometheus根据这些规则信息,会推送报警信息到alertmanager中。

- scrape_configs: 此片段指定抓取配置,prometheus的数据采集通过此片段配置。

- alerting: 此片段指定报警配置, 这里主要是指定prometheus将报警规则推送到指定的alertmanager实例地址。

- remote_write: 指定后端的存储的写入api地址。

- remote_read: 指定后端的存储的读取api地址。

global片段主要参数:

# How frequently to scrape targets by default.

[ scrape_interval: | default = 1m ] # 抓取间隔

# How long until a scrape request times out.

[ scrape_timeout: | default = 10s ] # 抓取超时时间

# How frequently to evaluate rules.

[ evaluation_interval: | default = 1m ] # 评估规则间隔

# The labels to add to any time series or alerts when communicating with

# external systems (federation, remote storage, Alertmanager).

external_labels: # 外部一些标签设置

[ : ... ]

scrapy_config片段主要参数:

一个scrape_config 片段指定一组目标和参数, 目标就是实例,指定采集的端点, 参数描述如何采集这些实例, 主要参数如下。

- scrape_interval: 抓取间隔,默认继承global值。

- scrape_timeout: 抓取超时时间,默认继承global值。

- metric_path: 抓取路径, 默认是/metrics

- scheme: 指定采集使用的协议,http或者https。

- params: 指定url参数。

- basic_auth: 指定认证信息。

- *_sd_configs: 指定服务发现配置

- static_configs: 静态指定服务job。

- relabel_config: relabel设置。

static_configs样例:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

static_configs:

- targets:

- "192.168.100.10:20001"

- "192.168.100.11:20001

- "192.168.100.12:20001"

file_sd_configs:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

# 独立文件配置如下

cat conf/node-dis.conf

- targets:

- "192.168.100.10:20001"

- "192.168.100.11:20001"

- "192.168.100.12:20001"

或者可以这样配置

[root@node00 conf]# cat node-dis.yml

- targets:

- "192.168.100.10:20001"

labels:

hostname: node00

- targets:

- "192.168.100.11:20001"

labels:

hostname: node01

- targets:

- "192.168.100.12:20001"

labels:

hostname: node02

通过file_fd_files 配置后我们可以在不重启prometheus的前提下, 修改对应的采集文件(node_dis.yml), 在特定的时间内(refresh_interval),prometheus会完成配置信息的载入工作。

consul_sd_file样例:

由于consul的配置需要有consul的服务提供, 这里简单部署下consul的服务。

# 进入下载目录

[root@node00 prometheus]# cd /usr/src/

# 下载

[root@node00 src]# wget https://releases.hashicorp.com/consul/1.6.1/consul_1.6.1_linux_amd64.zip

# 解压

[root@node00 src]# unzip consul_1.6.1_linux_amd64.zip

Archive: consul_1.6.1_linux_amd64.zip

inflating: consul

# 查看

[root@node00 src]# ls

consul consul_1.6.1_linux_amd64.zip debug kernels node_exporter-0.18.1.linux-amd64.tar.gz prometheus-2.12.0.linux-amd64.tar.gz

# 查看文件类型

[root@node00 src]# file consul

consul: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), statically linked, not stripped

# 防止到系统bin目录

[root@node00 src]# mv consul /usr/local/bin/

# 确保环境变量包含

[root@node00 src]# echo $PATH

/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

# 运行测试

[root@node00 consul.d]# consul agent -dev

# 测试获取成员

[root@node00 ~]# consul members

# 创建配置目录

[root@node00 ~]#mkdir /etc/consul.d

[root@node00 consul.d]# cat prometheus-node.json

{

"addresses": {

"http": "0.0.0.0",

"https": "0.0.0.0"

},

"services": [{

"name": "prometheus-node",

"tags": ["prometheus","node"],

"port": 20001

}]

}

# 指定配置文件运行

consul agent -dev -config-dir=/etc/consul.d

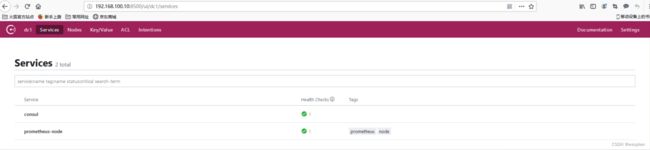

打开web管理界面 192.169.100.10:8500,查看相应的服务信息。

上面我们可以看到有2个service , 其中prometheus-node是我们定义的service。

和prometheus集成样例:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

consul_sd_configs:

- server: localhost:8500

services:

- prometheus-node

# tags:

# - prometheus

# - node

#- refresh_interval: 1m

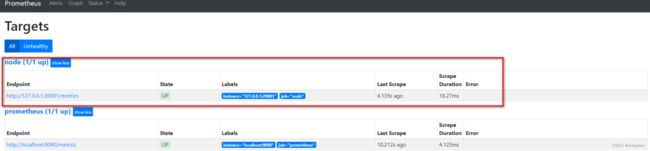

在prometheus的target界面上我们看到服务注册发现的结果。

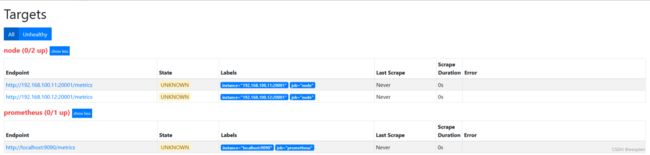

我们通过api接口给该service添加一个节点, 看看是否可以同步过来。

[root@node00 ~]# curl -XPUT [email protected] 127.0.0.1:8500/v1/catalog/register

true

[root@node00 ~]# cat node01.json

{

"id":"0cc931ea-9a3a-a6ff-3ef5-e0c99371d77d",

"Node": "node01",

"Address": "192.168.100.11",

"Service":

{

"Port": 20001,

"ID": "prometheus-node",

"Service": "prometheus-node"

}

}

在consul和prometheus中查看:

2、Prometheus relabel配置

relabel_config:

重新标记是一个功能强大的工具,可以在目标的标签集被抓取之前重写它,每个采集配置可以配置多个重写标签设置,并按照配置的顺序来应用于每个目标的标签集。

目标重新标签之后,以__开头的标签将从标签集中删除的。

如果使用只需要临时的存储临时标签值的,可以使用_tmp作为前缀标识。

relabel的action类型:

- replace: 对标签和标签值进行替换。

- keep: 满足特定条件的实例进行采集,其他的不采集。

- drop: 满足特定条件的实例不采集,其他的采集。

- hashmod: 这个我也没看懂啥意思,囧。

- labelmap: 这个我也没看懂啥意思,囧。

- labeldrop: 对抓取的实例特定标签进行删除。

- labelkeep: 对抓取的实例特定标签进行保留,其他标签删除。

常用action的测试:

在测试前,同步下配置文件如下。

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

[root@node00 prometheus]# cat conf/node-dis.yml

- targets:

- "192.168.100.10:20001"

labels:

__hostname__: node00

__businees_line__: "line_a"

__region_id__: "cn-beijing"

__availability_zone__: "a"

- targets:

- "192.168.100.11:20001"

labels:

__hostname__: node01

__businees_line__: "line_a"

__region_id__: "cn-beijing"

__availability_zone__: "a"

- targets:

- "192.168.100.12:20001"

labels:

__hostname__: node02

__businees_line__: "line_c"

__region_id__: "cn-beijing"

__availability_zone__: "b"

此时如果查看target信息,如下图。

因为我们的label都是以__开头的,目标重新标签之后,以__开头的标签将从标签集中删除的。

一个简单的relabel设置:

将labels中的__hostname__替换为node_name。

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

relabel_configs:

- source_labels:

- "__hostname__"

regex: "(.*)"

target_label: "nodename"

action: replace

replacement: "$1"

重启服务查看target信息如下图:

说下上面的配置: source_labels指定我们我们需要处理的源标签, target_labels指定了我们要replace后的标签名字, action指定relabel动作,这里使用replace替换动作。 regex去匹配源标签(hostname)的值,"(.*)"代表__hostname__这个标签是什么值都匹配的,然后replacement指定的替换后的标签(target_label)对应的数值。采用正则引用方式获取的。

这里修改下上面的正则表达式为 ‘’regex: "(node00)"'的时候可以看到如下图。

keep:

修改配置文件:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

target如下图:

修改配置文件如下:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

relabel_configs:

- source_labels:

- "__hostname__"

regex: "node00"

action: keep

target如下图:

action为keep,只要source_labels的值匹配regex(node00)的实例才能会被采集。 其他的实例不会被采集。

drop:

在上面的基础上,修改action为drop。

target如下图:

action为drop,其实和keep是相似的, 不过是相反的, 只要source_labels的值匹配regex(node00)的实例不会被采集。 其他的实例会被采集。

replace:

我们的基础信息里面有__region_id__和__availability_zone__,但是我想融合2个字段在一起,可以通过replace来实现。

修改配置如下:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

relabel_configs:

- source_labels:

- "__region_id__"

- "__availability_zone__"

separator: "-"

regex: "(.*)"

target_label: "region_zone"

action: replace

replacement: "$1"

target如下图:

abelkeep:

配置文件如下:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

relabel_configs:

- source_labels:

- "__hostname__"

regex: "(.*)"

target_label: "nodename"

action: replace

replacement: "$1"

- source_labels:

- "__businees_line__"

regex: "(.*)"

target_label: "businees_line"

action: replace

replacement: "$1"

- source_labels:

- "__datacenter__"

regex: "(.*)"

target_label: "datacenter"

action: replace

replacement: "$1"

target如下图:

修改配置文件如下:

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

relabel_configs:

- source_labels:

- "__hostname__"

regex: "(.*)"

target_label: "nodename"

action: replace

replacement: "$1"

- source_labels:

- "__businees_line__"

regex: "(.*)"

target_label: "businees_line"

action: replace

replacement: "$1"

- source_labels:

- "__datacenter__"

regex: "(.*)"

target_label: "datacenter"

action: replace

replacement: "$1"

- regex: "(nodename|datacenter)"

action: labeldrop

target如下图:

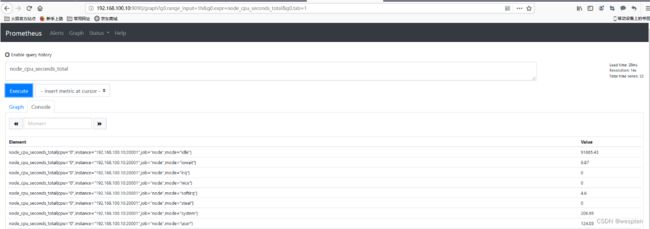

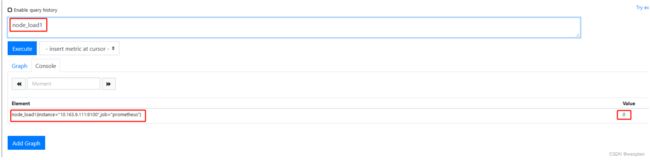

七、PromQL查询语句

Prometheus提供了一种名为PromQL (Prometheus查询语言)的函数式查询语言,允许用户实时选择和聚合时间序列数据。表达式的结果既可以显示为图形,也可以在Prometheus的表达式浏览器中作为表格数据查看,或者通过HTTP API由外部系统使用。

1、准备工作

在进行查询,这里提供下我的配置文件如下

[root@node00 prometheus]# cat prometheus.yml

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s).

# Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:9090']

- job_name: "node"

file_sd_configs:

- refresh_interval: 1m

files:

- "/usr/local/prometheus/prometheus/conf/node*.yml"

remote_write:

- url: "http://localhost:8086/api/v1/prom/write?db=prometheus"

remote_read:

- url: "http://localhost:8086/api/v1/prom/read?db=prometheus"

[root@node00 prometheus]# cat conf/node-dis.yml

- targets:

- "192.168.100.10:20001"

labels:

__datacenter__: dc0

__hostname__: node00

__businees_line__: "line_a"

__region_id__: "cn-beijing"

__availability_zone__: "a"

- targets:

- "192.168.100.11:20001"

labels:

__datacenter__: dc1

__hostname__: node01

__businees_line__: "line_a"

__region_id__: "cn-beijing"

__availability_zone__: "a"

- targets:

- "192.168.100.12:20001"

labels:

__datacenter__: dc0

__hostname__: node02

__businees_line__: "line_c"

__region_id__: "cn-beijing"

__availability_zone__: "b"

2、简单时序查询

1)直接查询特定metric_name

节点的forks的总次数:

node_forks_total

结果如下:

| Element | Value |

|---|---|

| node_forks_total{instance="192.168.100.10:20001",job="node"} | 201518 |

| node_forks_total{instance="192.168.100.11:20001",job="node"} | 23951 |

| node_forks_total{instance="192.168.100.12:20001",job="node"} | 24127 |

2)带标签的查询

node_forks_total{instance="192.168.100.10:20001"}

结果如下:

| Element | Value |

|---|---|

| node_forks_total{instance="192.168.100.10:20001",job="node"} | 201816 |

3)多标签查询

node_forks_total{instance="192.168.100.10:20001",job="node"}

结果如下:

Element Value

node_forks_total{instance="192.168.100.10:20001",job="node"} 201932

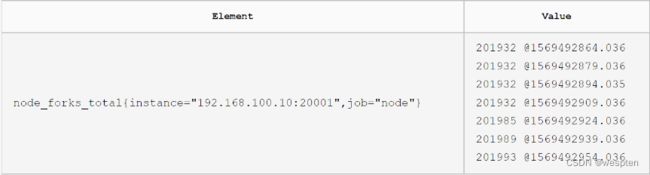

4)查询2分钟的时序数值

node_forks_total{instance="192.168.100.10:20001",job="node"}[2m]

5)正则匹配

node_forks_total{instance=~"192.168.*:20001",job="node"}

| Element | Value |

|---|---|

| node_forks_total{instance="192.168.100.10:20001",job="node"} | 202107 |

| node_forks_total{instance="192.168.100.11:20001",job="node"} | 24014 |

| node_forks_total{instance="192.168.100.12:20001",job="node"} | 24186 |

3、常用函数查询

官方提供的函数比较多, 具体可以参考地址如下: Query functions | Prometheus

这里主要就常用函数进行演示。

1)irate

irate用于计算速率。

通过标签查询,特定实例特定job,特定cpu 在idle状态下的cpu次数速率:

irate(node_cpu_seconds_total{cpu="0",instance="192.168.100.10:20001",job="node",mode="idle"}[1m])| Element | Value |

|---|---|

| {cpu="0",instance="192.168.100.10:20001",job="node",mode="idle"} | 0.9833988932595507 |

2)count_over_time

计算特定的时序数据中的个数。

这个数值个数和采集频率有关, 我们的采集间隔是15s,在一分钟会有4个点位数据:

count_over_time(node_boot_time_seconds[1m])| Element | Value |

|---|---|

| {instance="192.168.100.10:20001",job="node"} | 4 |

| {instance="192.168.100.11:20001",job="node"} | 4 |

| {instance="192.168.100.12:20001",job="node"} | 4 |

3)子查询

过去的10分钟内, 每分钟计算下过去5分钟的一个速率值。 一个采集10m/1m一共10个值。

rate(node_cpu_seconds_total{cpu="0",instance="192.168.100.10:20001",job="node",mode="idle"}[5m])[10m:1m]4、复杂查询

1)计算内存使用百分比

node_memory_MemFree_bytes / node_memory_MemTotal_bytes * 100| Element | Value |

|---|---|

| {instance="192.168.100.10:20001",job="node"} | 9.927579722322251 |

| {instance="192.168.100.11:20001",job="node"} | 59.740727403673034 |

| {instance="192.168.100.12:20001",job="node"} | 63.2080982675149 |

2)获取所有实例的内存使用百分比前2个

topk(2,node_memory_MemFree_bytes / node_memory_MemTotal_bytes * 100 )| Element | Value |

|---|---|

| {instance="192.168.100.12:20001",job="node"} | 63.20129636298163 |

| {instance="192.168.100.11:20001",job="node"} | 59.50586164125955 |

5、实用查询样例

1)获取cpu核心个数

# 计算所有的实例cpu核心数

count by (instance) ( count by (instance,cpu) (node_cpu_seconds_total{mode="system"}) )

# 计算单个实例的

count by (instance) ( count by (instance,cpu) (node_cpu_seconds_total{mode="system",instance="192.168.100.11:20001"})

2)计算内存使用率

(1 - (node_memory_MemAvailable_bytes{instance=~"192.168.100.10:20001"} / (node_memory_MemTotal_bytes{instance=~"192.168.100.10:20001"})))* 100| Element | Value |

|---|---|

| {instance="192.168.100.10:20001",job="node"} | 87.09358620413717 |

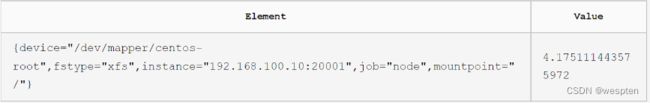

3)计算根分区使用率

100 - ((node_filesystem_avail_bytes{instance="192.168.100.10:20001",mountpoint="/",fstype=~"ext4|xfs"} * 100) / node_filesystem_size_bytes {instance=~"192.168.100.10:20001",mountpoint="/",fstype=~"ext4|xfs"})

4)预测磁盘空间

# 整体分为 2个部分, 中间用and分割, 前面部分计算根分区使用率大于85的, 后面计算根据近6小时的数据预测接下来24小时的磁盘可用空间是否小于0 。

(1- node_filesystem_avail_bytes{fstype=~"ext4|xfs",mountpoint="/"}

/ node_filesystem_size_bytes{fstype=~"ext4|xfs",mountpoint="/"}) * 100 >= 85 and (predict_linear(node_filesystem_avail_bytes[6h],3600 * 24) < 0)

八、Prometheus Grafana 展示平台

在prometheus中,我们可以使用web页面进行数据的查询和展示, 不过展示效果不太理想,这里使用一款专业的展示平台进行展示。

1、grafana安装

# 下载

wget https://dl.grafana.com/oss/release/grafana-6.3.6-1.x86_64.rpm

# 安装

sudo yum localinstall grafana-6.3.6-1.x86_64.rpm

# 查看配置文件

[root@node00 ~]# rpm -ql grafana |grep etc

/etc/grafana

/etc/init.d/grafana-server

/etc/sysconfig/grafana-server

# 查看开机自启文件

[root@node00 ~]# rpm -ql grafana |grep systemd

/usr/lib/systemd/system/grafana-server.service

# 启动服务

[root@node00 grafana]# systemctl restart grafana-server

# 查看状态图

[root@node00 grafana]# systemctl status grafana-server

# 开机自启

[root@node00 grafana]# systemctl enable grafana-server

# 查看监听端口

[root@node00 grafana]# lsof -i :3000

2、WEB页面配置

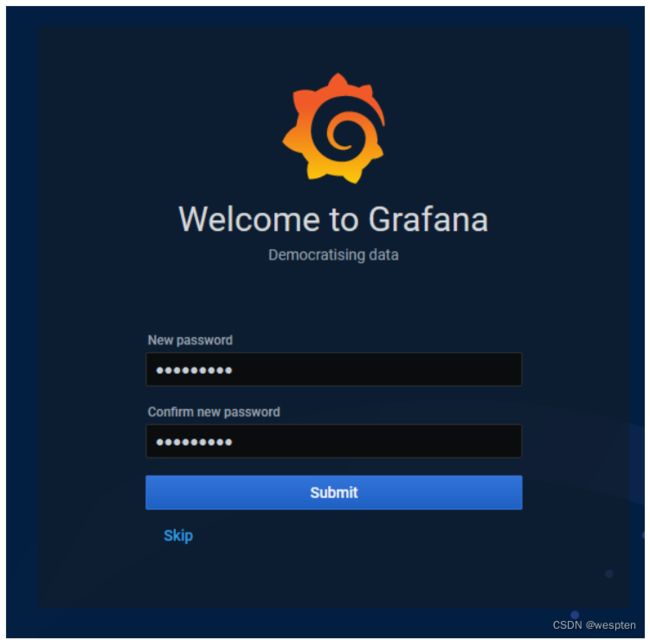

1)首次登陆设置

访问web页面地址192.168.100.10:3000端口,会弹出基础的登陆窗口,默认的用户名和密码为: admin/admin。 首次登录会引导你修改密码的。

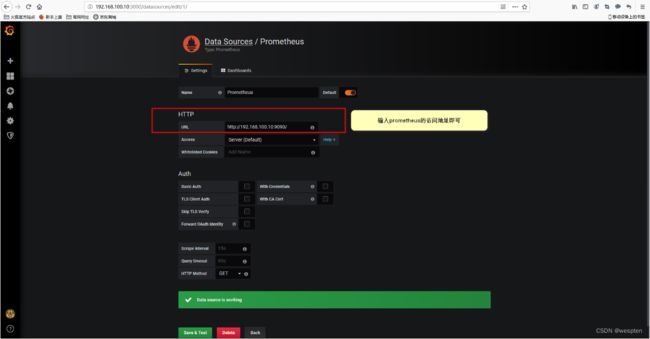

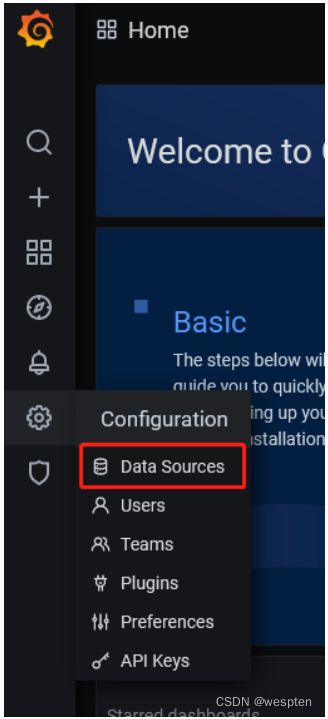

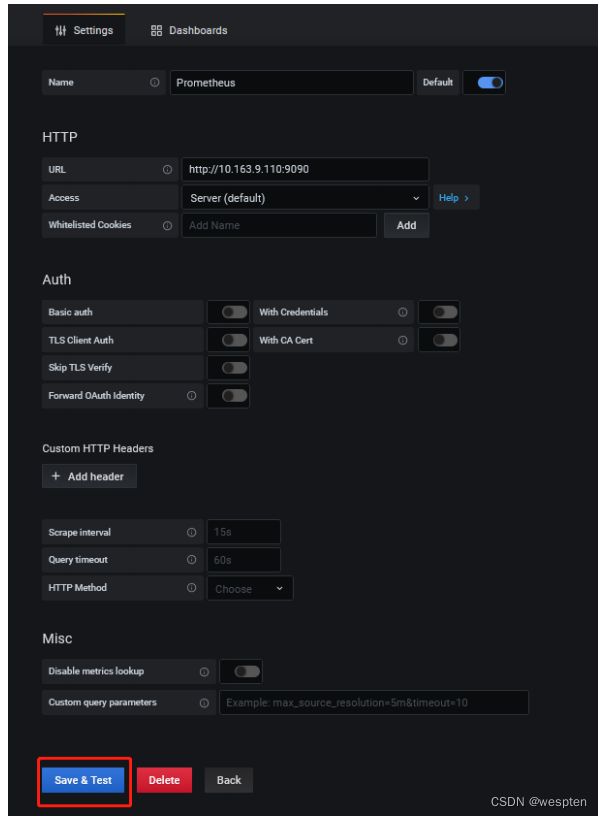

2)添加数据源

访问web页面地址192.168.100.10:3000/datasources, 点击 "Add data source" 按钮 ,选择Prometheus作为数据源类型。进入如下界面。

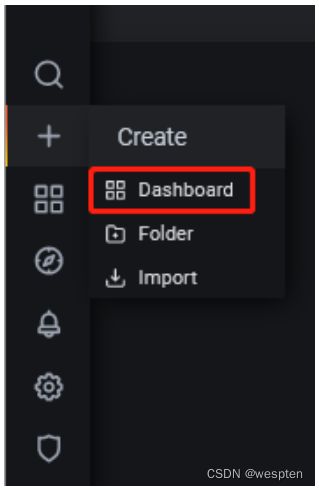

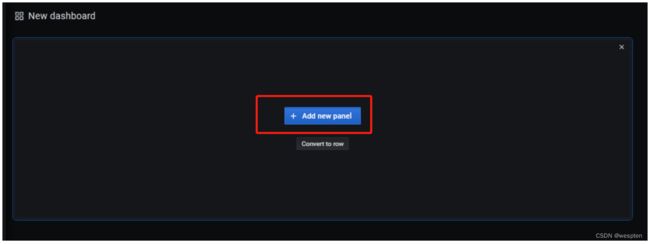

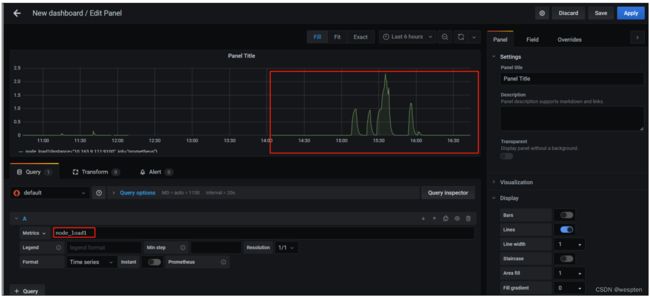

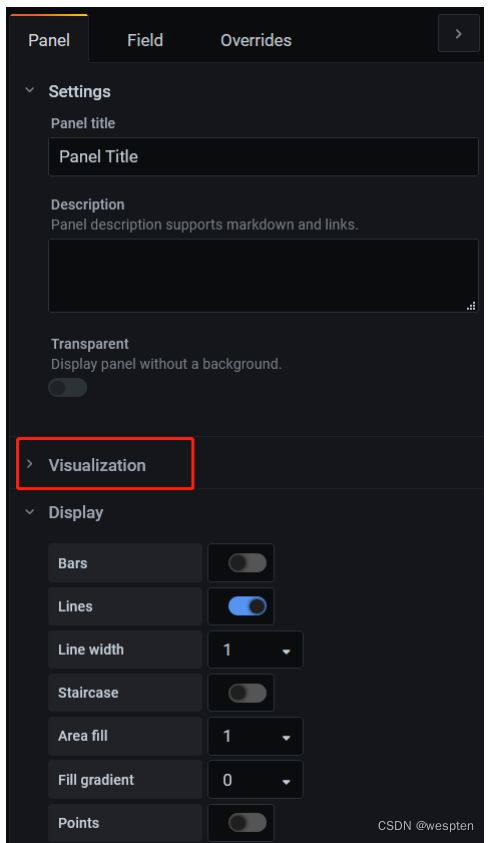

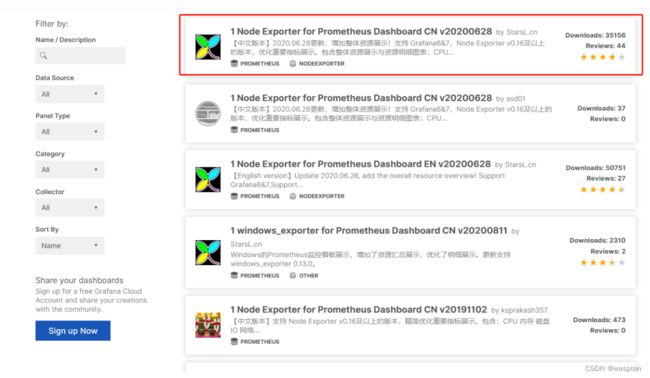

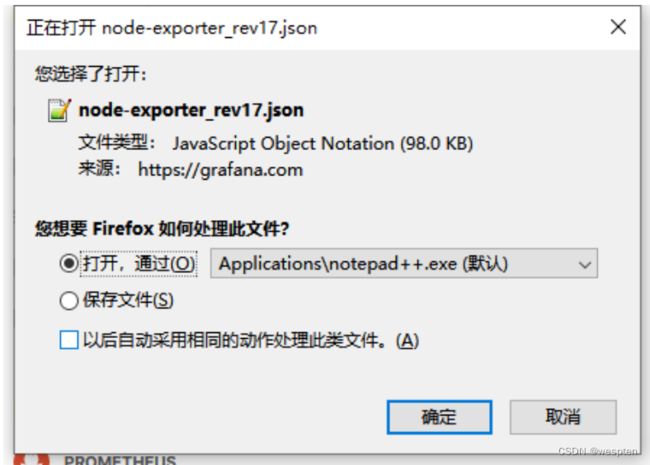

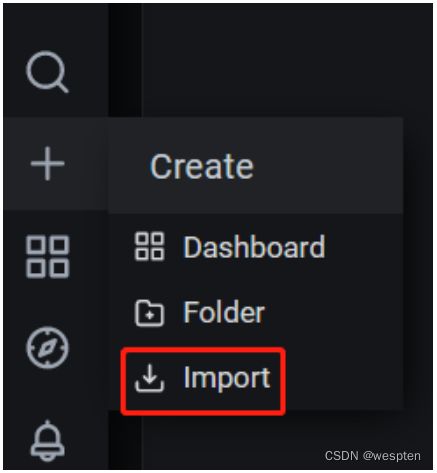

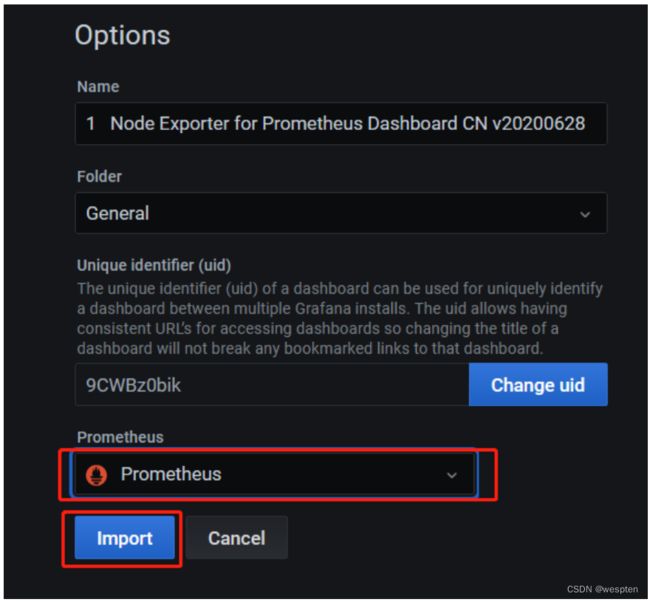

3)添加dashboard

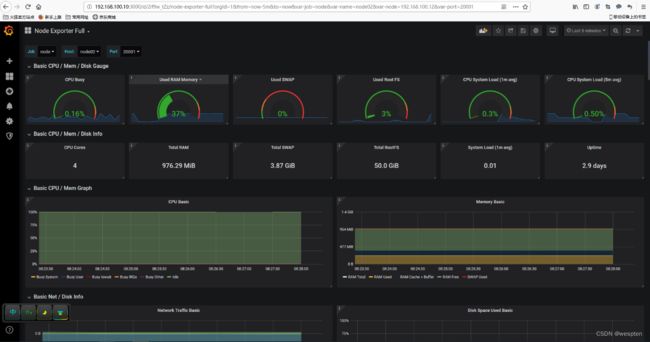

点击页面左上角的"+"号,然后选择import导入方式,输入1860,可以看到如下图:

注意: 由于我是在虚拟机上面运行的node节点,运行采集时间较短,所以为了展示效果设置的展示时间为最近5分钟。

4)添加精简导出器

我这边根据网络上面的dashardboard进行修改,使用在工作中的dashboard如下图。

对应的json文件如下,可以通过import方式导入的:

{

"annotations": {

"list": [

{

"builtIn": 1,

"datasource": "-- Grafana --",

"enable": true,

"hide": true,

"iconColor": "rgba(0, 211, 255, 1)",

"name": "Annotations & Alerts",

"type": "dashboard"

}

]

},

"description": "node-exporter",

"editable": true,

"gnetId": 8919,

"graphTooltip": 1,

"id": 131,

"iteration": 1569546050404,

"links": [],

"panels": [

{

"collapsed": false,

"gridPos": {

"h": 1,

"w": 24,

"x": 0,

"y": 0

},

"id": 180,

"panels": [],

"repeat": null,

"title": "基础信息",

"type": "row"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorPostfix": false,

"colorPrefix": false,

"colorValue": true,

"colors": [

"rgba(245, 54, 54, 0.9)",

"rgba(237, 129, 40, 0.89)",

"rgba(50, 172, 45, 0.97)"

],

"datasource": "Prometheus",

"decimals": 1,

"description": "",

"format": "s",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": false,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 5,

"w": 2,

"x": 0,

"y": 1

},

"hideTimeOverride": true,

"id": 15,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": false

},

"tableColumn": "",

"targets": [

{

"expr": "time() - node_boot_time_seconds{instance=~\"$instance\"}",

"format": "time_series",

"hide": false,

"instant": true,

"intervalFactor": 2,

"legendFormat": "",

"refId": "A",

"step": 40

}

],

"thresholds": "1,2",

"title": "系统运行时间",

"transparent": true,

"type": "singlestat",

"valueFontSize": "100%",

"valueMaps": [

{

"op": "=",

"text": "N/A",

"value": "null"

}

],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorPostfix": false,

"colorValue": true,

"colors": [

"rgba(245, 54, 54, 0.9)",

"rgba(237, 129, 40, 0.89)",

"rgba(50, 172, 45, 0.97)"

],

"datasource": "Prometheus",

"description": "",

"format": "short",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": false,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 2,

"w": 2,

"x": 2,

"y": 1

},

"id": 14,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 4,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": false

},

"tableColumn": "",

"targets": [

{

"expr": "count(count(node_cpu_seconds_total{instance=~\"$instance\", mode='system',job=\"$job\"}) by (cpu))",

"format": "time_series",

"instant": true,

"intervalFactor": 1,

"legendFormat": "",

"refId": "A",

"step": 20

}

],

"thresholds": "1,2",

"title": "CPU 核数",

"transparent": true,

"type": "singlestat",

"valueFontSize": "100%",

"valueMaps": [

{

"op": "=",

"text": "N/A",

"value": "null"

}

],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorValue": true,

"colors": [

"rgba(50, 172, 45, 0.97)",

"rgba(237, 129, 40, 0.89)",

"rgba(245, 54, 54, 0.9)"

],

"datasource": "Prometheus",

"decimals": 2,

"description": "",

"format": "percent",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": true,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 5,

"w": 3,

"x": 4,

"y": 1

},

"id": 167,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 2,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": true

},

"tableColumn": "",

"targets": [

{

"expr": "100 - (avg(irate(node_cpu_seconds_total{instance=~\"$instance\",mode=\"idle\",job=\"$job\"}[1m])) * 100)",

"format": "time_series",

"hide": false,

"interval": "",

"intervalFactor": 1,

"legendFormat": "",

"refId": "A",

"step": 20

}

],

"thresholds": "50,80",

"title": "CPU使用率(1m)",

"transparent": true,

"type": "singlestat",

"valueFontSize": "80%",

"valueMaps": [

{

"op": "=",

"text": "N/A",

"value": "null"

}

],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorValue": true,

"colors": [

"rgba(50, 172, 45, 0.97)",

"rgba(237, 129, 40, 0.89)",

"rgba(245, 54, 54, 0.9)"

],

"datasource": "Prometheus",

"decimals": 2,

"description": "",

"format": "percent",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": true,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 5,

"w": 3,

"x": 7,

"y": 1

},

"id": 20,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 2,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": true

},

"tableColumn": "",

"targets": [

{

"expr": "avg(irate(node_cpu_seconds_total{instance=~\"$instance\",mode=\"iowait\",job=\"$job\"}[1m])) * 100",

"format": "time_series",

"hide": false,

"interval": "",

"intervalFactor": 1,

"legendFormat": "",

"refId": "A",

"step": 20

}

],

"thresholds": "10,20",

"title": "CPU iowait(1m)",

"transparent": true,

"type": "singlestat",

"valueFontSize": "80%",

"valueMaps": [

{

"op": "=",

"text": "N/A",

"value": "null"

}

],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorValue": true,

"colors": [

"rgba(50, 172, 45, 0.97)",

"rgba(237, 129, 40, 0.89)",

"rgba(245, 54, 54, 0.9)"

],

"datasource": "Prometheus",

"decimals": 0,

"description": "",

"format": "percent",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": true,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 5,

"w": 3,

"x": 10,

"y": 1

},

"hideTimeOverride": false,

"id": 172,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 4,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": true

},

"tableColumn": "",

"targets": [

{

"expr": "(1 - (node_memory_MemAvailable_bytes{instance=~\"$instance\",job=\"$job\"} / (node_memory_MemTotal_bytes{instance=~\"$instance\",job=\"$job\"})))* 100",

"format": "time_series",

"hide": false,

"interval": "10s",

"intervalFactor": 1,

"refId": "A",

"step": 20

}

],

"thresholds": "80,90",

"title": "内存使用率",

"transparent": true,

"type": "singlestat",

"valueFontSize": "80%",

"valueMaps": [],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorPostfix": false,

"colorPrefix": false,

"colorValue": true,

"colors": [

"rgba(50, 172, 45, 0.97)",

"rgba(237, 129, 40, 0.89)",

"rgba(245, 54, 54, 0.9)"

],

"datasource": "Prometheus",

"decimals": 2,

"description": "",

"format": "short",

"gauge": {

"maxValue": 10000,

"minValue": null,

"show": true,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 5,

"w": 3,

"x": 13,

"y": 1

},

"hideTimeOverride": false,

"id": 16,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 4,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": true

},

"tableColumn": "",

"targets": [

{

"expr": "node_filefd_allocated{instance=~\"$instance\",job=\"$job\"}",

"format": "time_series",

"instant": false,

"interval": "10s",

"intervalFactor": 1,

"refId": "B"

}

],

"thresholds": "7000,9000",

"title": "当前打开的文件描述符",

"transparent": true,

"type": "singlestat",

"valueFontSize": "70%",

"valueMaps": [],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorValue": true,

"colors": [

"rgba(50, 172, 45, 0.97)",

"rgba(237, 129, 40, 0.89)",

"rgba(245, 54, 54, 0.9)"

],

"datasource": "Prometheus",

"decimals": null,

"description": "",

"format": "percent",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": true,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 5,

"w": 4,

"x": 16,

"y": 1

},

"id": 166,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 4,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"repeatDirection": "h",

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": true

},

"tableColumn": "",

"targets": [

{

"expr": "100 - ((node_filesystem_avail_bytes{instance=~\"$instance\",mountpoint=\"/\",fstype=~\"ext4|xfs\",job=\"$job\"} * 100) / node_filesystem_size_bytes {instance=~\"$instance\",mountpoint=\"/\",fstype=~\"ext4|xfs\",job=\"$job\"})",

"format": "time_series",

"interval": "10s",

"intervalFactor": 1,

"refId": "A",

"step": 20

}

],

"thresholds": "70,90",

"title": "根分区使用率",

"transparent": true,

"type": "singlestat",

"valueFontSize": "80%",

"valueMaps": [

{

"op": "=",

"text": "N/A",

"value": "null"

}

],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorValue": true,

"colors": [

"rgba(50, 172, 45, 0.97)",

"rgba(237, 129, 40, 0.89)",

"rgba(245, 54, 54, 0.9)"

],

"datasource": "Prometheus",

"decimals": null,

"description": "通过变量maxmount获取最大的分区。",

"format": "percent",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": true,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 5,

"w": 4,

"x": 20,

"y": 1

},

"id": 154,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 4,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "50%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"repeat": null,

"repeatDirection": "h",

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": true

},

"tableColumn": "",

"targets": [

{

"expr": "100 - ((node_filesystem_avail_bytes{instance=~\"$instance\",mountpoint=\"$maxmount\",fstype=~\"ext4|xfs\",job=\"$job\"} * 100) / node_filesystem_size_bytes {instance=~\"$instance\",mountpoint=\"$maxmount\",fstype=~\"ext4|xfs\",job=\"$job\"})",

"format": "time_series",

"interval": "10s",

"intervalFactor": 1,

"refId": "A",

"step": 20

}

],

"thresholds": "70,90",

"title": "最大分区($maxmount)使用率",

"transparent": true,

"type": "singlestat",

"valueFontSize": "80%",

"valueMaps": [

{

"op": "=",

"text": "N/A",

"value": "null"

}

],

"valueName": "current"

},

{

"cacheTimeout": null,

"colorBackground": false,

"colorValue": true,

"colors": [

"rgba(245, 54, 54, 0.9)",

"rgba(237, 129, 40, 0.89)",

"rgba(50, 172, 45, 0.97)"

],

"datasource": "Prometheus",

"decimals": null,

"description": "",

"format": "bytes",

"gauge": {

"maxValue": 100,

"minValue": 0,

"show": false,

"thresholdLabels": false,

"thresholdMarkers": true

},

"gridPos": {

"h": 3,

"w": 2,

"x": 2,

"y": 3

},

"id": 75,

"interval": null,

"links": [],

"mappingType": 1,

"mappingTypes": [

{

"name": "value to text",

"value": 1

},

{

"name": "range to text",

"value": 2

}

],

"maxDataPoints": 100,

"minSpan": 4,

"nullPointMode": "null",

"nullText": null,

"postfix": "",

"postfixFontSize": "70%",

"prefix": "",

"prefixFontSize": "50%",

"rangeMaps": [

{

"from": "null",

"text": "N/A",

"to": "null"

}

],

"sparkline": {

"fillColor": "rgba(31, 118, 189, 0.18)",

"full": false,

"lineColor": "rgb(31, 120, 193)",

"show": false

},

"tableColumn": "",

"targets": [

{

"expr": "node_memory_MemTotal_bytes{instance=~\"$instance\",job=\"$job\"}",

"format": "time_series",

"instant": true,

"intervalFactor": 1,

"legendFormat": "{{instance}}",

"refId": "A",

"step": 20

}

],

"thresholds": "2,3",

"title": "内存总量",

"transparent": true,

"type": "singlestat",

"valueFontSize": "80%",

"valueMaps": [

{

"op": "=",

"text": "N/A",

"value": "null"

}

],

"valueName": "current"

},

{

"gridPos": {

"h": 1,

"w": 24,

"x": 0,

"y": 6

},

"id": 178,

"title": "Memory && CPU",

"type": "row"

},

{

"aliasColors": {

"内存_Avaliable": "#6ED0E0",

"内存_Cached": "#EF843C",

"内存_Free": "#629E51",

"内存_Total": "#6d1f62",

"内存_Used": "#eab839",

"可用": "#9ac48a",

"总内存": "#bf1b00"

},

"bars": false,

"dashLength": 10,

"dashes": false,

"datasource": "Prometheus",

"decimals": 2,

"fill": 1,

"gridPos": {

"h": 7,

"w": 6,

"x": 0,

"y": 7

},

"height": "300",

"id": 156,

"legend": {

"alignAsTable": false,

"avg": false,

"current": false,

"max": false,

"min": false,

"rightSide": false,

"show": true,

"sort": "current",

"sortDesc": true,

"total": false,

"values": false

},

"lines": true,

"linewidth": 1,

"links": [],

"nullPointMode": "null",

"percentage": false,

"pointradius": 5,

"points": false,

"renderer": "flot",

"seriesOverrides": [],

"spaceLength": 10,

"stack": false,

"steppedLine": false,

"targets": [

{

"expr": "node_memory_MemTotal_bytes{instance=~\"$instance\",job=\"$job\"}",

"format": "time_series",

"hide": false,

"instant": false,

"intervalFactor": 2,

"legendFormat": "总内存",

"refId": "A",

"step": 4

},

{

"expr": "node_memory_MemTotal_bytes{instance=~\"$instance\",job=\"$job\"} - node_memory_MemAvailable_bytes{instance=~\"$instance\",job=\"$job\"}",

"format": "time_series",

"hide": false,

"instant": false,

"intervalFactor": 2,

"legendFormat": "已用内存",

"refId": "B",

"step": 4

}

],

"thresholds": [],

"timeFrom": null,

"timeRegions": [],

"timeShift": null,

"title": "内存信息",

"tooltip": {

"shared": true,

"sort": 1,

"value_type": "individual"

},

"transparent": true,

"type": "graph",

"xaxis": {

"buckets": null,

"mode": "time",

"name": null,

"show": true,

"values": []

},

"yaxes": [

{

"format": "bytes",

"label": null,

"logBase": 1,

"max": null,

"min": "0",

"show": true

},

{

"format": "short",

"label": null,

"logBase": 1,

"max": null,

"min": null,

"show": true

}

],

"yaxis": {

"align": false,

"alignLevel": null

}

},

{

"aliasColors": {

"15分钟": "#6ED0E0",

"1分钟": "#BF1B00",

"5分钟": "#CCA300"

},

"bars": false,

"dashLength": 10,

"dashes": false,

"datasource": "Prometheus",

"editable": true,

"error": false,

"fill": 1,

"grid": {},

"gridPos": {

"h": 7,

"w": 6,

"x": 6,

"y": 7

},

"height": "300",

"id": 13,

"legend": {

"alignAsTable": false,

"avg": false,

"current": false,

"max": false,

"min": false,

"rightSide": false,

"show": true,

"total": false,

"values": false

},

"lines": true,

"linewidth": 2,

"links": [],

"minSpan": 4,

"nullPointMode": "null as zero",

"percentage": false,

"pointradius": 5,

"points": false,

"renderer": "flot",

"repeat": null,

"seriesOverrides": [],

"spaceLength": 10,

"stack": false,

"steppedLine": false,

"targets": [

{

"expr": "node_load1{instance=~\"$instance\",job=\"$job\"}",

"format": "time_series",

"instant": false,

"interval": "10s",

"intervalFactor": 2,

"legendFormat": "load_1m",

"metric": "",

"refId": "A",

"step": 20,

"target": ""

},

{

"expr": "node_load5{instance=~\"$instance\",job=\"$job\"}",

"format": "time_series",

"instant": false,

"interval": "10s",

"intervalFactor": 2,

"legendFormat": "load_5m",

"refId": "B",

"step": 20

},

{

"expr": "node_load15{instance=~\"$instance\",job=\"$job\"}",

"format": "time_series",

"instant": false,

"interval": "10s",

"intervalFactor": 2,

"legendFormat": "load_15m",

"refId": "C",

"step": 20

}

],

"thresholds": [],

"timeFrom": null,

"timeRegions": [],

"timeShift": null,

"title": "系统平均负载",

"tooltip": {

"msResolution": false,

"shared": true,

"sort": 0,

"value_type": "cumulative"

},

"transparent": true,

"type": "graph",

"xaxis": {

"buckets": null,

"mode": "time",

"name": null,

"show": true,

"values": []

},

"yaxes": [

{

"format": "short",

"logBase": 1,

"max": null,

"min": null,

"show": true

},

{

"format": "short",

"logBase": 1,

"max": null,

"min": null,

"show": true

}

],

"yaxis": {

"align": false,

"alignLevel": null

}

},

{

"aliasColors": {

"Idle - Waiting for something to happen": "#052B51",

"guest": "#9AC48A",

"idle": "#052B51",

"iowait": "#EAB839",

"irq": "#BF1B00",

"nice": "#C15C17",

"sdb_每秒I/O操作%": "#d683ce",

"softirq": "#E24D42",

"steal": "#FCE2DE",

"system": "#508642",

"user": "#5195CE",

"磁盘花费在I/O操作占比": "#ba43a9"

},

"bars": false,

"dashLength": 10,

"dashes": false,

"datasource": "Prometheus",

"decimals": 2,

"description": "",

"fill": 1,

"gridPos": {

"h": 7,

"w": 6,

"x": 12,

"y": 7

},

"id": 7,

"legend": {

"alignAsTable": false,

"avg": false,

"current": false,

"hideEmpty": true,

"hideZero": true,

"max": false,

"min": false,

"rightSide": false,

"show": true,

"sideWidth": null,

"sort": null,

"sortDesc": null,

"total": false,

"values": false

},

"lines": true,

"linewidth": 1,

"links": [],

"minSpan": 4,

"nullPointMode": "null",

"percentage": false,

"pointradius": 5,

"points": false,

"renderer": "flot",

"repeat": null,

"seriesOverrides": [],

"spaceLength": 10,

"stack": false,

"steppedLine": false,

"targets": [

{

"expr": "(1 - avg by (environment,instance) (irate(node_cpu_seconds_total{instance=~\"$instance\",mode=\"idle\",job=\"$job\"}[1m])))",

"format": "time_series",

"hide": false,

"instant": false,

"interval": "",

"intervalFactor": 2,

"legendFormat": "CPU_Total",

"refId": "A",

"step": 20

},

{

"expr": "avg(irate(node_cpu_seconds_total{instance=~\"$instance\",mode=\"user\",job=\"$job\"}[1m])) by (instance)",

"format": "time_series",

"hide": false,

"intervalFactor": 2,

"legendFormat": "CPU_User",

"refId": "B",

"step": 240

},

{

"expr": "avg(irate(node_cpu_seconds_total{instance=~\"$instance\",mode=\"iowait\",job=\"$job\"}[1m])) by (instance)",

"format": "time_series",

"hide": false,

"intervalFactor": 2,

"legendFormat": "CPU_Iowait",

"refId": "D",

"step": 240

}

],

"thresholds": [],

"timeFrom": null,

"timeRegions": [],

"timeShift": null,

"title": "CPU使用率",

"tooltip": {

"shared": true,

"sort": 0,

"value_type": "individual"

},

"transparent": true,

"type": "graph",

"xaxis": {

"buckets": null,

"mode": "time",

"name": null,

"show": true,

"values": []

},

"yaxes": [

{

"decimals": null,

"format": "percentunit",

"label": "",

"logBase": 1,

"max": "1",

"min": null,

"show": true

},

{

"format": "short",

"label": null,

"logBase": 1,

"max": null,

"min": null,

"show": false

}

],

"yaxis": {

"align": false,

"alignLevel": null

}

},

{

"aliasColors": {},

"breakPoint": "25%",

"cacheTimeout": null,

"combine": {

"label": "Others",

"threshold": 0

},

"datasource": "Prometheus",

"decimals": null,

"fontSize": "80%",

"format": "short",

"gridPos": {

"h": 7,

"w": 6,

"x": 18,

"y": 7

},

"id": 182,

"interval": null,

"legend": {

"header": "",

"percentage": false,

"show": true,

"sideWidth": null,

"values": false

},

"legendType": "On graph",

"links": [],

"maxDataPoints": 3,

"nullPointMode": "connected",

"pieType": "pie",

"strokeWidth": 1,

"targets": [

{

"application": {

"filter": ""

},

"expr": "sum by (instance,cpu) ( node_cpu_seconds_total{instance=~\"$instance\" , mode!=\"idle\"})",

"format": "time_series",

"functions": [],

"group": {

"filter": ""

},

"host": {

"filter": ""

},

"instant": true,

"intervalFactor": 1,

"item": {

"filter": ""

},

"legendFormat": "cpu-{{cpu}}",

"mode": 0,

"options": {

"showDisabledItems": false,

"skipEmptyValues": false

},

"refId": "A",

"resultFormat": "time_series",

"table": {

"skipEmptyValues": false

},

"triggers": {

"acknowledged": 2,

"count": true,

"minSeverity": 3

}

}

],

"title": "本机多颗CPU使用占比",

"transparent": true,

"type": "grafana-piechart-panel",

"valueName": "avg"

},

{

"collapsed": false,

"gridPos": {

"h": 1,

"w": 24,

"x": 0,

"y": 14

},

"id": 176,

"panels": [],

"repeat": null,

"title": "Disk",

"type": "row"

},

{

"aliasColors": {

"vda_write": "#6ED0E0"

},

"bars": true,

"dashLength": 10,

"dashes": false,

"datasource": "Prometheus",

"description": "Reads completed: 每个磁盘分区每秒读完成次数\n\nWrites completed: 每个磁盘分区每秒写完成次数\n\nIO now 每个磁盘分区每秒正在处理的输入/输出请求数",

"fill": 2,

"gridPos": {

"h": 8,

"w": 6,

"x": 0,

"y": 15

},

"height": "300",

"id": 161,

"legend": {

"alignAsTable": true,

"avg": false,

"current": true,

"hideEmpty": true,

"hideZero": true,

"max": true,

"min": false,

"show": true,

"sort": "current",

"sortDesc": true,

"total": false,

"values": true

},

"lines": false,

"linewidth": 1,

"links": [],

"nullPointMode": "null",

"percentage": false,

"pointradius": 5,

"points": false,

"renderer": "flot",

"seriesOverrides": [

{

"alias": "/.*_读取$/",

"transform": "negative-Y"

}

],

"spaceLength": 10,

"stack": false,

"steppedLine": false,

"targets": [

{

"expr": "irate(node_disk_reads_completed_total{instance=~\"$instance\",job=\"$job\"}[1m])",

"format": "time_series",

"hide": false,

"interval": "",

"intervalFactor": 2,

"legendFormat": "{{device}}_读取",

"refId": "A",

"step": 10

},

{

"expr": "irate(node_disk_writes_completed_total{instance=~\"$instance\",job=\"$job\"}[1m])",

"format": "time_series",

"hide": false,

"intervalFactor": 2,

"legendFormat": "{{device}}_写入",

"refId": "B",

"step": 10

}

],

"thresholds": [],

"timeFrom": null,

"timeRegions": [],

"timeShift": null,

"title": "磁盘读写速率(IOPS)",

"tooltip": {

"shared": true,

"sort": 0,

"value_type": "individual"

},

"type": "graph",

"xaxis": {

"buckets": null,

"mode": "time",

"name": null,

"show": true,

"values": []

},

"yaxes": [

{

"decimals": null,

"format": "iops",

"label": "读取(-)/写入(+)I/O ops/sec",

"logBase": 1,

"max": null,

"min": null,

"show": true

},

{

"format": "short",

"label": null,

"logBase": 1,

"max": null,

"min": null,

"show": true

}

],

"yaxis": {

"align": false,

"alignLevel": null

}

},

{

"aliasColors": {

"vda_write": "#6ED0E0"

},

"bars": true,

"dashLength": 10,

"dashes": false,

"datasource": "Prometheus",

"description": "Read bytes 每个磁盘分区每秒读取的比特数\nWritten bytes 每个磁盘分区每秒写入的比特数",

"fill": 2,

"gridPos": {

"h": 8,

"w": 6,

"x": 6,

"y": 15

},

"height": "300",

"id": 168,

"legend": {

"alignAsTable": true,

"avg": false,

"current": true,

"hideEmpty": true,

"hideZero": true,

"max": true,

"min": false,

"show": true,

"total": false,

"values": true

},

"lines": false,

"linewidth": 1,

"links": [],

"nullPointMode": "null",

"percentage": false,

"pointradius": 5,

"points": false,

"renderer": "flot",

"seriesOverrides": [

{

"alias": "/.*_读取$/",

"transform": "negative-Y"

}

],

"spaceLength": 10,

"stack": false,

"steppedLine": false,

"targets": [

{

"expr": "irate(node_disk_read_bytes_total{instance=~\"$instance\",job=\"$job\"}[1m])",

"format": "time_series",

"interval": "",

"intervalFactor": 2,

"legendFormat": "{{device}}_读取",

"refId": "A",

"step": 10

},

{

"expr": "irate(node_disk_written_bytes_total{instance=~\"$instance\",job=\"$job\"}[1m])",

"format": "time_series",

"hide": false,

"intervalFactor": 2,

"legendFormat": "{{device}}_写入",

"refId": "B",

"step": 10

}

],

"thresholds": [],

"timeFrom": null,

"timeRegions": [],

"timeShift": null,

"title": "磁盘读写容量大小",

"tooltip": {

"shared": true,

"sort": 0,

"value_type": "individual"

},

"type": "graph",