huggingface bert模型文本分类预处理模型从0到应用——踩坑记录

huggingface bert模型文本分类预处理模型应用踩坑记录

- 基本信息

- 流程记录(一步一坑)

-

- 导入模型

- 模型预测

-

- 模型使用调研

- 模型踩坑记录

基本信息

模型:BERT-Tiny fine-tuned on on sms_spam dataset for spam detection

模型链接

任务目标:文本分类,筛选出文本中的垃圾文本。

数据集说明:任务数据集为非标注数据…

任务思路:使用预训练好的BERT分类模型对任务数据集(无标注)进行预测,然后人工抽检正样本,查看预测结果准确率,判断模型是否可行。

流程记录(一步一坑)

导入模型

有两种方案:

- 通过官网在线导入

- 下载至本地导入

坑1:我连接的服务器限制外网,因此无法通过官网导入

解决方案:将模型文件下载至本地并通过filezila上传至服务器

两种导入方法参见hugging face 模型库的使用及加载 Bert 预训练模型

官网导入代码如下

from transformers import BertTokenizer,BertModel

model_name = 'hfl/chinese-roberta-wwm-ext'

config = BertConfig.from_pretrained(model_name) # 这个方法会自动从官方的s3数据库下载模型配置、参数等信息(代码中已配置好位置)

tokenizer = BertTokenizer.from_pretrained(model_name) # 这个方法会自动从官方的s3数据库读取文件下的vocab.txt文件

model = BertModel.from_pretrained(model_name) # 这个方法会自动从官方的s3数据库下载模型信息

本地导入代码如下

from transformers import BertConfig,BertTokenizer

model_name = 'E:\Programming\PyCharm\NLP\preTrainBert\hfl\chinese-roberta-wwm-ext'

tokenizer = BertTokenizer.from_pretrained(model_name) # 通过词典导入分词器

model_config = BertConfig.from_pretrained(model_name) # 导入配置文件

model = BertModel.from_pretrained(model_name, config=model_config)

模型预测

模型使用调研

国内网上常见的bert模型应用流程为加载预训练模型后,使用自己的数据集(已标注)进行基于特征的方式建模或者利用BERT基于微调的方式进行建模,参见文章:基于Transformers库的BERT模型:一个文本情感分类的实例解析

本文由于任务数据集为无标注数据集,所以目的是直接使用预训练模型进行预测。

做了三种尝试:

- 使用pipeline调用Bert模型(AutoModel函数载入模型)

- 使用Bert模型+公开数据集(已标注)训练模型,再预测任务数据集

- 使用pipeline调用Bert模型(BertForSequenceClassification函数载入模型)

模型踩坑记录

pipelines使用参考一下两个文档。

pipelines使用分享中文博客

pipelines官方文档

- 使用pipeline调用Bert模型(AutoModel函数载入模型)

from transformers import AutoModel

from transformers import AutoTokenizer

model_path = "*****" # 写自己模型所在的文件夹

tokenizer = AutoTokenizer.from_pretrained(model_path)

model = AutoModel.from_pretrained(model_path)

classifier = pipeline("text-classification",model=model,tokenizer=tokenizer)

## warning和报错如下

ome weights of the model checkpoint at /home/zhangguofeng/model-pytorch/bert-tiny-finetuned-sms-spam-detection were not used when initializing BertModel: ['classifier.weight', 'classifier.bias']

- This IS expected if you are initializing BertModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing BertModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

PipelineException: The model 'BertModel' is not supported for text-classification. Supported models are ['RoFormerForSequenceClassification', 'BigBirdPegasusForSequenceClassification', 'BigBirdForSequenceClassification', 'ConvBertForSequenceClassification', 'LEDForSequenceClassification', 'DistilBertForSequenceClassification', 'AlbertForSequenceClassification', 'CamembertForSequenceClassification', 'XLMRobertaForSequenceClassification', 'MBartForSequenceClassification', 'BartForSequenceClassification', 'LongformerForSequenceClassification', 'RobertaForSequenceClassification', 'SqueezeBertForSequenceClassification', 'LayoutLMForSequenceClassification', 'BertForSequenceClassification', 'XLNetForSequenceClassification', 'MegatronBertForSequenceClassification', 'MobileBertForSequenceClassification', 'FlaubertForSequenceClassification', 'XLMForSequenceClassification', 'ElectraForSequenceClassification', 'FunnelForSequenceClassification', 'DebertaForSequenceClassification', 'DebertaV2ForSequenceClassification', 'GPT2ForSequenceClassification', 'GPTNeoForSequenceClassification', 'OpenAIGPTForSequenceClassification', 'ReformerForSequenceClassification', 'CTRLForSequenceClassification', 'TransfoXLForSequenceClassification', 'MPNetForSequenceClassification', 'TapasForSequenceClassification', 'IBertForSequenceClassification']

之前的错误经验让我以为warning并不影响使用,所以直接奔着报错PipelineException。

懂了,"BertModel"不支持”text-classification“分类。想到两种方法,一种是自己使用Bert获取特征,训练模型;另一种是找到一个支持分类的模型。

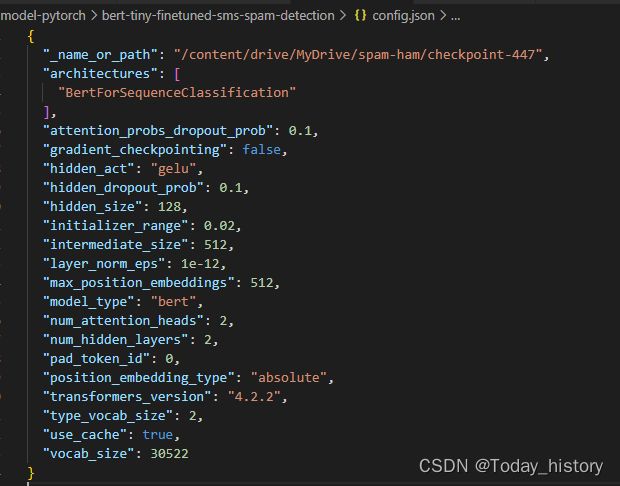

看一下下载模型的config.json。model_type写的是”bert“,验证了猜想:下载的模型是”BertModel“,而不是支持分类的类型。

但其实这里不知道一点,”architectures“才是真正的模型类型,下载的模型是"BertForSequenceClassification",是支持”text-classificaiton“分类的!只要换个载入模型的函数——

BertForSequenceClassification.pre-trained()就行了。

忽略了关键性的warning和json信息,于是悲催的去测试了第二种方案。

- 使用Bert模型+公开数据集(已标注)训练模型,再预测任务数据集

训练过程参考文章:基于Transformers库的BERT模型:一个文本情感分类的实例解析

结果在处理自己数据时报错:

重跑数据时卡死,查找方法时偶然看了下载入模型时的warning…于是转去测试第三种。

- 使用pipeline调用Bert模型(BertForSequenceClassification函数载入模型)

将方法一中的代码改两行

from transformers import BertForSequenceClassification

model = BertForSequenceClassification.from_pretrained(model_path, config=model_config)

# 和上面一样

from transformers import pipeline

classifier = pipeline("text-classification",model=model,tokenizer=tokenizer)

classifier("Free entry in 2 a wkly comp to win FA Cup final tkts 21st May 2005. Text FA to 87121 to receive entry question(std txt rate)T&C's apply 08452810075over18's")

# output: [{'label': 'LABEL_1', 'score': 0.9051627516746521}]

接口调用成功!后面再测试下用这个函数处理自己数据集。