(刘二大人) PyTorch Deep Learning Practice: Multi-class Classification (MNIST)

1. First, fix slow or failed dataset downloads

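Download the four dataset files from the official MNIST site and put them in your project directory, preferably in a MNIST/raw folder. Do not decompress them. My path here is E:\learn_pytorch\LE\MNIST\raw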
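Before going further, it can help to confirm that all four archives really are in the raw folder. Below is a minimal sketch using only the standard library. The helper name `missing_mnist_files` is my own and not part of torchvision; the four file names are the standard names of the MNIST archives.

```python
from pathlib import Path

# The four archive names distributed for MNIST (gzip-compressed IDX files).
MNIST_FILES = (
    "train-images-idx3-ubyte.gz",
    "train-labels-idx1-ubyte.gz",
    "t10k-images-idx3-ubyte.gz",
    "t10k-labels-idx1-ubyte.gz",
)


def missing_mnist_files(raw_dir):
    """Return the names of the expected archives that are not in raw_dir."""
    raw_dir = Path(raw_dir)
    return [name for name in MNIST_FILES if not (raw_dir / name).is_file()]
```

Calling `missing_mnist_files(r"E:\learn_pytorch\LE\MNIST\raw")` should return an empty list once all four files are in place; any name it returns still needs to be downloaded.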

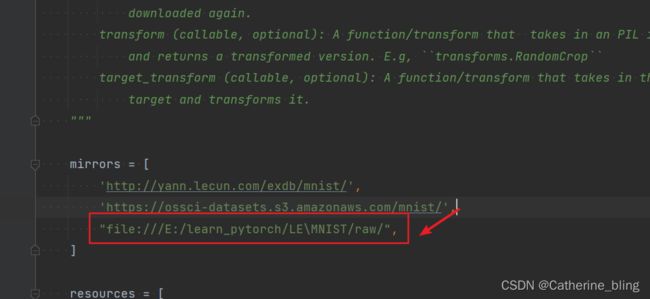

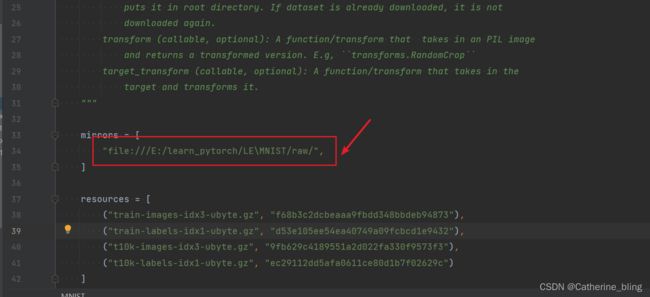

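Next, go to the lib directory of your PyTorch environment, find the torchvision package under site-packages, and change the file download path in mnist.py. I am using an Anaconda virtual environment here.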

Put the path to your local files there directly, and remove the entry above it.

Run the dataset download again and it now succeeds.
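If loading still fails at this point, one possible cause is a truncated or corrupted archive. The sketch below reads only the header of a gzipped IDX file, using the standard library, so you can check a file without torchvision. The function name `read_idx_header` is hypothetical. For reference, the IDX format stores a big-endian magic number (2051 for image files, 2049 for label files) followed by one 32-bit size per dimension.

```python
import gzip
import struct


def read_idx_header(path):
    """Read the magic number and the dimension sizes from a gzipped IDX file."""
    with gzip.open(path, "rb") as f:
        # The magic number is 4 bytes; its lowest byte is the number of dimensions.
        (magic,) = struct.unpack(">I", f.read(4))
        ndim = magic & 0xFF
        # Each dimension size is a big-endian unsigned 32-bit integer.
        dims = struct.unpack(">" + "I" * ndim, f.read(4 * ndim))
    return magic, dims
```

For an intact train-images-idx3-ubyte.gz, this should give a magic number of 2051 and the dimensions (60000, 28, 28). An exception from `gzip` or `struct` suggests the file needs to be downloaded again.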

2. Complete code

import torch
from torchvision import datasets
from torchvision import transforms
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

# Prepare the dataset
# Normalize takes the mean first, then the standard deviation (0.1307 and 0.3081 for MNIST)
trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
# Raw strings keep the backslashes in the Windows path from being read as escape sequences
train_datasets = datasets.MNIST(root=r'E:\learn_pytorch\LE', train=True, transform=trans, download=True)
test_datasets = datasets.MNIST(root=r'E:\learn_pytorch\LE', train=False, transform=trans, download=True)

# Load the dataset in mini-batches
batch_size = 64
train_loader = DataLoader(dataset=train_datasets, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(dataset=test_datasets, batch_size=batch_size, shuffle=False)

# Build the model
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(784, 512)
        self.linear2 = torch.nn.Linear(512, 256)
        self.linear3 = torch.nn.Linear(256, 128)
        self.linear4 = torch.nn.Linear(128, 64)
        self.linear5 = torch.nn.Linear(64, 10)

    def forward(self, x):
        # Flatten each 1x28x28 image into a 784-element vector; -1 lets view infer the batch size
        x = x.view(-1, 784)
        x = F.relu(self.linear1(x))
        x = F.relu(self.linear2(x))
        x = F.relu(self.linear3(x))
        x = F.relu(self.linear4(x))
        # No activation on the last layer: CrossEntropyLoss expects raw scores (logits)
        return self.linear5(x)

# Instantiate the model
huihui = Model()

# Define the loss function and the optimizer
loss_fn = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(huihui.parameters(), lr=0.01, momentum=0.5)

# Wrap one training epoch in its own function
def train(epoch):
    running_loss = 0.0
    for batch_id, data in enumerate(train_loader):
        inputs, targets = data
        optimizer.zero_grad()

        # Forward pass, backward pass, parameter update
        outputs = huihui(inputs)
        loss = loss_fn(outputs, targets)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        # batch_id starts at 0, so this prints the average loss after every 300 batches
        if batch_id % 300 == 299:
            print('[%d,%5d] loss:%.3f' % (epoch + 1, batch_id + 1, running_loss / 300))
            running_loss = 0.0

# Evaluate on the test set
def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = huihui(images)
            # torch.max returns the maximum values and their indices along dim=1;
            # the index is the predicted class, so it only needs to be compared with the label
            _, predict = torch.max(outputs, dim=1)
            total += labels.size(0)  # number of samples in this batch
            correct += (predict == labels).sum().item()  # count the matches and extract the scalar
    # %d truncates the percentage to an integer
    print('Accuracy on test set: %d %%' % (100 * correct / total))

# Train and test
if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
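A note on why the model returns the output of linear5 with no activation: torch.nn.CrossEntropyLoss applies log-softmax to the raw scores itself and then takes the negative log-likelihood of the target class. Here is a rough pure-Python sketch of that computation for a single sample. The function name `cross_entropy_from_logits` is my own and the sketch ignores batching (by default the PyTorch loss averages over the batch).

```python
import math


def cross_entropy_from_logits(logits, target):
    """Negative log of the softmax probability assigned to the target class."""
    m = max(logits)  # subtract the maximum before exponentiating, for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # -log(softmax(logits)[target]) = log_sum_exp - logits[target]
    return log_sum_exp - logits[target]
```

With two equal scores the loss is log(2), and it shrinks as the score of the correct class grows relative to the others. Adding a softmax or ReLU after linear5 would distort the scores this loss works on.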

3. Results (because flattening the image discards much of its spatial structure, this approach tops out at about 97% accuracy)
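To make the "lost spatial structure" point concrete, here is a small sketch of where a pixel ends up after the row-major flatten done by x.view(-1, 784). The helper `flat_index` is only for illustration.

```python
WIDTH = 28  # MNIST images are 28x28 pixels


def flat_index(row, col, width=WIDTH):
    """Position of pixel (row, col) in the 784-element vector after a row-major flatten."""
    return row * width + col
```

Two horizontally adjacent pixels stay next to each other, but two vertically adjacent pixels land 28 positions apart, for example `flat_index(0, 0)` is 0 and `flat_index(1, 0)` is 28. The fully connected layers also have no notion of which inputs were neighbours, so the 2D layout has to be relearned from the data.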

D:\Anaconda3\envs\pytorch\python.exe E:/learn_pytorch/LE/Minist.py

[1, 300] loss:2.259

[1, 600] loss:1.288

[1, 900] loss:0.505

Accuracy on test set: 88 %

[2, 300] loss:0.359

[2, 600] loss:0.312

[2, 900] loss:0.266

Accuracy on test set: 92 %

[3, 300] loss:0.216

[3, 600] loss:0.193

[3, 900] loss:0.173

Accuracy on test set: 95 %

[4, 300] loss:0.153

[4, 600] loss:0.136

[4, 900] loss:0.128

Accuracy on test set: 96 %

[5, 300] loss:0.114

[5, 600] loss:0.106

[5, 900] loss:0.099

Accuracy on test set: 96 %

[6, 300] loss:0.089

[6, 600] loss:0.086

[6, 900] loss:0.078

Accuracy on test set: 97 %

[7, 300] loss:0.070

[7, 600] loss:0.069

[7, 900] loss:0.069

Accuracy on test set: 97 %

[8, 300] loss:0.055

[8, 600] loss:0.056

[8, 900] loss:0.055

Accuracy on test set: 97 %

[9, 300] loss:0.044

[9, 600] loss:0.046

[9, 900] loss:0.046

Accuracy on test set: 97 %

[10, 300] loss:0.040

[10, 600] loss:0.040

[10, 900] loss:0.036

Accuracy on test set: 97 %

Process finished with exit code 0
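The log shows exactly three loss lines per epoch (300, 600, 900). That follows from the batch count: the MNIST training set has 60000 images, and with batch_size = 64 that makes 938 batches. A small sketch of the arithmetic, where `report_points` is a hypothetical helper mirroring the `batch_id % 300 == 299` check in train():

```python
import math


def report_points(num_samples, batch_size, every=300):
    """Return the number of batches per epoch and the batch counts at which the loss is printed."""
    # DataLoader keeps the last partial batch by default, hence the ceiling.
    num_batches = math.ceil(num_samples / batch_size)
    points = [b + 1 for b in range(num_batches) if b % every == every - 1]
    return num_batches, points
```

`report_points(60000, 64)` gives 938 batches with reports at 300, 600 and 900, so the loss of the last 38 batches of each epoch is accumulated but never printed.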