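Microservice design guide: using cloud-native microservices to solve the inter-system "cascading avalanche" and the efficiency problems caused by traditional high-volume batch jobs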

Problem description

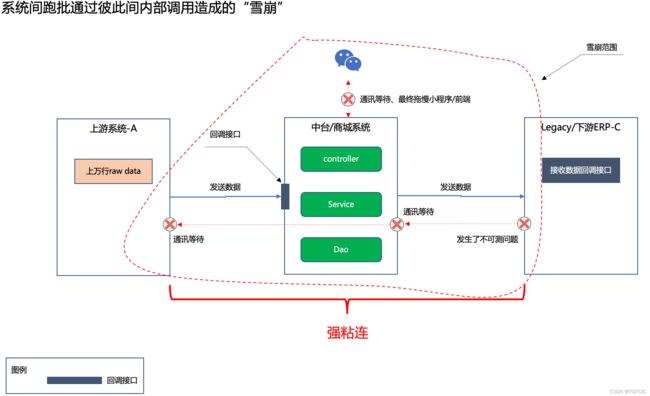

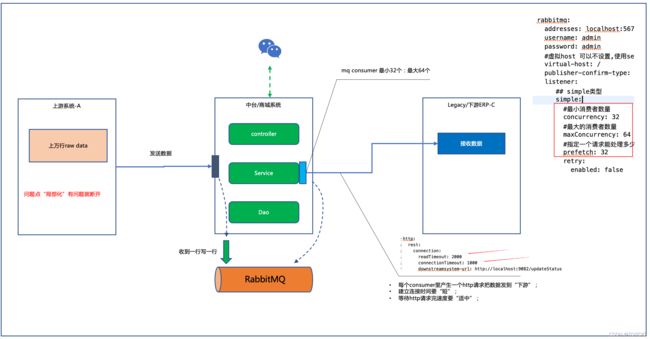

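The figures above come from a real production incident.

This kind of "avalanche" happens between a company's own internal systems.

We all know that for external HTTP calls (any RESTful, SOAP, or other HTTP-based call) along a chain a->b->c->d, with system b as the "middle segment": if any point fails, and your HTTP timeout is very long, no connect timeout is set at all, or there is no circuit breaker, the failure travels back along the chain and ends up stalling system b as well. This is called an avalanche (a cascading failure).

Yet this "inter-system avalanche" effect also exists between the systems inside an enterprise, especially in batch scenarios. For example:

- The checkout counters send transaction records (cashier, scan-and-go and face-payment transactions) to the middle platform;

- The transaction middle platform then relays the data, as a middleman, to a third-party analytics engine;

- As soon as the third-party analytics engine fails, you get exactly the result described above: "analytics engine hangs -> transaction middle platform hangs -> checkout counters hang";

Then comes the chaos: add memory, upgrade the CPU, restart again and again, and nothing fixes it. Finally the business owner can't take it any more and demands a rework, while a room full of people can't even find the problem. So you get:

- Hey, send less data!

- Hey, I just send it; if you can't take the data, how is that my problem?

- Hey, we're fine, you're the one failing and dragging us down, so you fix it!

What a game of blame ping-pong! What "excitement"! What a panic!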

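Theoretical basis of the solution

We know about cascading avalanches between external systems. Systems inside an enterprise, even on the same intranet, avalanche too, and even more easily.

As the figure above shows, when one system sits between two legacy systems, it is in a very "uncomfortable" position, because a legacy system is usually either an ERP or a traditional C/S (client/server) architecture.

Enterprise legacy systems emphasize business idempotency, so their transactions rely on database pessimistic locks. For systems like these, 150 concurrent requests is already a myth. Now launch a batch job: one big for loop. A medium or large enterprise has at least 150 to 300 batch jobs of this kind, each with a decent 10,000 to 30,000 transaction records per day, and banks and financial firms talk in 100,000s. Multiply 100,000 records by the number of channels and by the number of account types, and the Cartesian product you loop over easily reaches millions.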
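To make the size of that Cartesian product concrete, assume (purely for illustration; these counts are not taken from the incident) 100,000 records, 5 channels and 4 account types:

$$
100{,}000 \times 5 \times 4 = 2{,}000{,}000 \ \text{loop iterations}
$$

If each iteration turns into one downstream call, a single job of this kind issues two million requests.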

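Put millions of records into one for loop and push them to a downstream legacy system. For a more "modern" transaction system sitting in the middle, multithreading is no problem: spin up a few hundred threads in one go, each sending thousands of records.

Oops... within a minute the downstream system is overwhelmed.

Once the downstream system is overwhelmed, it drags down the transaction system in the middle, and that is this kind of "avalanche". The symptom: the front-end APP or mini-program suddenly becomes very, very slow.

And then the blame game above begins.

So solving this class of problem calls for the following design principles. They are also what leading big tech companies hope to hear from candidates interviewing at P6/P7 level or above:

- Threads must not contaminate one another (no shared mutable state across threads);

- Support reruns, retries, and recording of serious errors;

- Keep the thread count constant and controllable, and accept a somewhat longer batch time (it's a batch job, a few dozen extra seconds don't matter; in practice, well-designed multithreading performs far better, even a thousand times better, than an application that blindly opens hundreds or thousands of threads);

- When tasks can't keep up, solve it with distributed computing by scaling the application out horizontally, not by piling on more threads;

- Use as few resources as possible (for the whole job run: memory utilization < 10%, CPU utilization < 10%, open TCP file descriptors kept within 10% or even 5% of the total file-handle limit);

- Make full use of CPU time-slice scheduling, parallel computing, caching, memory, and asynchrony;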
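A minimal sketch of "constant, controllable thread count" using only `java.util.concurrent`, assuming the batch work can be wrapped in `Runnable` tasks. `BoundedBatchPool` and the sizes in the usage note are illustrative, not taken from the original POC. Core and maximum pool size are equal so the thread count never grows, the queue is bounded so pending work cannot pile up without limit, and `CallerRunsPolicy` pushes back on the producer when the queue is full instead of spawning more threads or dropping work.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical helper: a sending pool whose size never changes. */
final class BoundedBatchPool {

    private BoundedBatchPool() {
    }

    static ThreadPoolExecutor create(int threads, int queueCapacity) {
        AtomicInteger seq = new AtomicInteger();
        ThreadFactory named = r -> {
            Thread t = new Thread(r, "batch-sender-" + seq.incrementAndGet());
            t.setDaemon(true); // do not keep the JVM alive on shutdown
            return t;
        };
        return new ThreadPoolExecutor(
                threads, threads,                           // core == max: the thread count is constant
                0L, TimeUnit.MILLISECONDS,                  // no idle-thread expiry needed at a fixed size
                new ArrayBlockingQueue<>(queueCapacity),    // bounded queue: pending work cannot grow without limit
                named,
                new ThreadPoolExecutor.CallerRunsPolicy()); // queue full: the submitter runs the task itself and slows down
    }
}
```

For example, `BoundedBatchPool.create(4, 8)` never holds more than 4 pool threads. Because `CallerRunsPolicy` can make the submitting thread run a task too, peak concurrency is at most threads + 1.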

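So "batch job" sounds simple, but it means everything: it is design and build work that draws heavily on computer science.

Let me take the chance to fill in what "CRUD-only bootcamps", with their get-rich-quick approach, cannot teach: the real computer-science knowledge a programmer needs. Around 90% of developers on the market lack these fundamentals, which is why the term "code farmer" exists. Missing them is also the root cause why systems that should have been built around these points (any programmer ought to manage this) weren't, why most of our systems have features but no performance, fall over as soon as the number of stores or customers grows a little, and don't last even half a year (outside the big tech companies of Beijing, Shanghai and Guangzhou, most systems in China genuinely have no performance, and that is no exaggeration):

- HTTP = TCP = OS file handles (taking Unix/Linux as the example): every HTTP connection is a TCP connection, and every TCP connection uses a file descriptor counted against ulimit, commonly set to 65,535. Remember that the OS has other applications that also need file handles; a web application can typically be given about half, roughly 32,000, which is the number of HTTP connections it can have open concurrently. A big for loop or an uncontrolled number of threads can easily hit that threshold within a minute, and once it is hit, every application on that OS "hangs";

- When CPU utilization exceeds 80%, every application on that OS is effectively "frozen". Try it yourself with jmeter: 10,000 concurrent threads for one minute will bring your own computer down;

- When memory utilization exceeds 90%, the effect is the same as CPU above 80%;

- Especially when looping over tens of thousands, hundreds of thousands or millions of records, if you never sleep or wait, the CPU is like someone who runs 100 meters in 11 seconds trying to hold that pace for 45 kilometers: the "lungs" give out very soon. You have to let it "catch its breath", and that is what CPU time-slice rotation is for. Many people never think of sleeping in this kind of batch processing;

- Front-end APPs and mini-programs often freeze during batch runs because people think front end and back end are separated. But the front end's APIs land on the back-end Spring Boot application. If the back end and the batch job happen to live in the same application and the batch is eating up your HTTP threads and connections, front-end access is bound to suffer: when the To-B batch has used up every available HTTP connection, how are front-end users supposed to get in? Everyone thinks front end and back end are separated, but your front end makes API requests. Only a purely static front end with no API requests at all would be unaffected even if the back end shut down. This is a point that the IT departments, even the CTOs, of many traditional non-tech enterprises never think of; without exaggeration, about 90% of in-house enterprise IT across the country thinks this way;

- Turn strong coupling between systems into weak coupling and confine the failure point and its scope to the smallest possible area. That means using "asynchronous" architecture more, so that no avalanche forms: if something collapses, it collapses alone. A collapse then exposes exactly the problem in your upstream or downstream system. If you collapse along with them, the situation turns into a muddy mess: of three systems, only two were actually at fault, and troubleshooting could have been pinned to the exact system. Now you have "mixed everything together"... "So you insist on going down with them? Are you really that close?";

- Reduce the use of traditional OLTP databases, or avoid them altogether where you can: a single row-level lock can hang your systems all the way down the chain. Use NoSQL such as MongoDB and Redis where appropriate; you will find that for the same data volume, NoSQL can be thousands of times faster than a traditional database for this kind of workload;

- Looping over 1,000 individual updates/inserts versus assembling the 1,000 update statements and submitting them in one go: the performance gap is dozens of times and sometimes thousands of times, because databases much prefer a "batch, one-shot commit" to committing row by row. Network transfer is the same: upload 30,000 files of 20 KB each to Baidu Netdisk and it may take hours; pack the 30,000 files into one ZIP and upload it in one go and it takes no more than 15 minutes. Frequent small network I/O is extremely inefficient;

- No HTTP or other connection may be left without a timeout, and timeouts should be as short as possible. Every connection, HTTP included, has two timeouts: createConnectionTimeout and readTimeout. createConnectionTimeout is the time allowed to establish the connection channel between two systems. On an enterprise intranet, anything over 2 seconds, or 4 seconds at the very most, is already extreme and means one of the two systems has already "died". readTimeout is how long you wait for data once the connection is open, which in practice bounds the HTTP request->response time, i.e. the time of one "transaction". In To-C internet microservices we even require readTimeout to be 100 ms or less; imagine how little time that leaves for one transaction. Why so short? Sure, you could make it longer, say 10-odd seconds per transaction. Fine... then the avalanche runs through several modules, components, even whole systems, then the whole landscape, and the mini-program/APP shows a white screen and hangs. What fun! So we keep readTimeout at 4 seconds. The two must satisfy readTimeout > createConnectionTimeout, and in most well-designed systems createConnectionTimeout is set to no more than 2 seconds;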
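A minimal sketch of two of the points above, batching and "letting the CPU catch its breath": the list is handed over in fixed-size chunks and the loop sleeps briefly between chunks. `PacedBatchSender`, `batchSize` and `pauseMillis` are hypothetical names; the right pause depends on the downstream and should be measured rather than copied.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical helper: sends a large list in chunks, pausing between chunks. */
final class PacedBatchSender {

    private PacedBatchSender() {
    }

    /** Returns the number of batches handed to sender. */
    static <T> int send(List<T> items, int batchSize, long pauseMillis, Consumer<List<T>> sender) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be > 0");
        }
        int batches = 0;
        for (int from = 0; from < items.size(); from += batchSize) {
            int to = Math.min(from + batchSize, items.size());
            sender.accept(new ArrayList<>(items.subList(from, to))); // copy, so the sender may keep the batch
            batches++;
            if (to < items.size() && pauseMillis > 0) {
                try {
                    Thread.sleep(pauseMillis); // give the CPU and the downstream a breather
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // keep the interrupt flag and stop early
                    break;
                }
            }
        }
        return batches;
    }
}
```

With 2,500 records and a batch size of 1,000, the sender is called three times (1,000, 1,000 and 500 records) instead of 2,500 times.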

好了,大的原则不过如上9点,那么根据上述9点,我们把我们最新的设计展现出来,如何做到“可控恒定线程数、又能做到业务幂等、又能做到效率不差(事实上只会更高)而系统资源开销更少”的来做到以上这件事呢?

提出解决方案

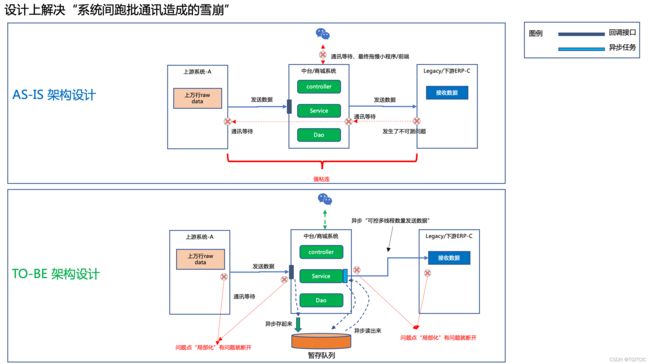

AS-IS & TO-B,是TOGAF企业架构常用的一种架构设计手法。

- AS-IS代表:己有/现有设计;

- TO-B代表:未来、目标、我们要设计成什么样;

在TO-B架构设计里我们可以看到设计的目标如下几点:

- 把A-B-C三个系统间的跑批,从强粘连引起的大范围雪崩约束到小范围雪崩;

- 我们为了保TO C端即小程序/APP不被卡死,那就算雪崩我们也把这个雪崩控制在后台,不会“反射/反弹”到前端,因此我们利用异步;

- 如果要异步就必须要使用到暂存队列,这个暂存队列可以使用mq也可以自己使用“流水表+带状态字段”来制作异步job,具体设计我们分解到后面详细设计中去解说;

- 上游端把数据一条条发过来,交易系统把它吃下来落流水表后直接返回响应,这种请求即readTimeout不会超过1秒;

- 存下来后利用一个异步的job把流水读出来然后以可控线程数的异步机制发送往下游系统“扔”过去;

这就是我们的设计思路,彻底解耦。
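A minimal in-memory sketch of the "journal table + status field" variant, assuming one job run at a time (the second approach in the detailed design uses a Redisson lock for that). `PaymentJournal`, its statuses and `maxAttempts` are hypothetical; a real implementation would persist entries in MySQL or MongoDB rather than a map. `accept()` is the fast front door that only stores and returns; `drain()` is one run of the async job: it picks pending entries, sends them as one batch, and marks them SENT, FAILED (retried on the next run) or ERROR (kept for manual handling).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Predicate;

/** Hypothetical in-memory stand-in for a journal table with a status column. */
final class PaymentJournal {

    enum Status { NEW, SENT, FAILED, ERROR }

    private static final class Entry {
        final int payId;
        Status status = Status.NEW;
        int attempts;

        Entry(int payId) {
            this.payId = payId;
        }
    }

    private final Map<Integer, Entry> entries = new ConcurrentHashMap<>();
    private final int maxAttempts;

    PaymentJournal(int maxAttempts) {
        this.maxAttempts = maxAttempts;
    }

    /** Front door: store the record and return at once; nothing is sent downstream here. */
    void accept(int payId) {
        entries.putIfAbsent(payId, new Entry(payId)); // a duplicate payId is stored only once
    }

    /** One job run: sends up to batchSize NEW/FAILED entries as one batch; returns how many were sent. */
    synchronized int drain(int batchSize, Predicate<List<Integer>> sender) {
        List<Entry> batch = new ArrayList<>();
        for (Entry e : entries.values()) {
            if (batch.size() == batchSize) {
                break;
            }
            if (e.status == Status.NEW || e.status == Status.FAILED) {
                batch.add(e);
            }
        }
        if (batch.isEmpty()) {
            return 0;
        }
        List<Integer> ids = new ArrayList<>();
        for (Entry e : batch) {
            ids.add(e.payId);
        }
        boolean ok;
        try {
            ok = sender.test(ids); // e.g. one call to the downstream batch endpoint
        } catch (RuntimeException ex) {
            ok = false;
        }
        for (Entry e : batch) {
            e.attempts++;
            if (ok) {
                e.status = Status.SENT;
            } else {
                // retried on a later run until maxAttempts, then parked as ERROR for manual handling
                e.status = e.attempts >= maxAttempts ? Status.ERROR : Status.FAILED;
            }
        }
        return ok ? batch.size() : 0;
    }

    synchronized long count(Status status) {
        return entries.values().stream().filter(e -> e.status == status).count();
    }
}
```

A scheduler (Quartz in the tech stack below) would call `drain()` periodically; since nothing downstream is touched in `accept()`, the upstream's response time no longer depends on the downstream's health.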

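Two whys explained

Once you understand these two whys, you understand the core idea of the whole design. They are very important, so here is an in-depth explanation.

Why must the sending threads be controlled and constant?

This design runs in one JVM with a constant, controllable number of sending threads, which generally should not exceed 50. As I keep saying, the upstream or downstream systems are usually legacy. Don't be fooled by how "expensive" they are: they truly cannot handle even 150 concurrent requests, because they are "strongly transactional" (really just a consequence of being too old, built on tech stacks from about 20 years ago on average) and designed around database pessimistic locks. So we have a "responsibility" to protect them.

For these systems, always keep the number of requests you send them under control so you don't kill them. For the enterprise's IT as a whole, only "win-win" counts as winning; one department winning is not winning. That is why this design matters: don't think only about your own system's comfort.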
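A minimal sketch of protecting a legacy downstream with a hard concurrency cap, using `java.util.concurrent.Semaphore`. `DownstreamThrottle` is a hypothetical name; the permit count would be set to what the downstream is known to tolerate (the text suggests staying well below 150, e.g. at most 50 sending threads). Unlike sizing a pool, the semaphore caps concurrent calls no matter how many threads try to call.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

/** Hypothetical guard: at most maxConcurrent calls reach the downstream at the same time. */
final class DownstreamThrottle {

    private final Semaphore permits;

    DownstreamThrottle(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent, true); // fair: waiting callers are served in FIFO order
    }

    /** Blocks until a permit is free, runs the call, and always releases the permit. */
    <T> T call(Supplier<T> call) throws InterruptedException {
        permits.acquire();
        try {
            return call.get();
        } finally {
            permits.release();
        }
    }
}
```

Wrapping every `restTemplate.postForObject(...)` in `throttle.call(() -> ...)` would keep the downstream's concurrency fixed even if more sender threads are added later.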

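Why must we use asynchronous staging?

We can control how much we send so we don't kill others, but how do you make sure what others send doesn't kill you? As explained above, these legacy systems are legacy because their design is ancient. They may not be able to use multithreading at all; many banks and financial firms still run on microcontroller-era hardware and C. Where would multithreading, microservices or distributed computing come from? Granted, in their day these systems were advanced, but they did not keep up and have been left behind by the times.

So the data they send you is very likely to arrive as "uncontrolled threads, a raw flood of requests" into your system. Your system must therefore be able to "block" it, and, having blocked it, "digest" this internal legacy traffic a little at a time.

This is where asynchrony, staging and queues come in.

Detailed design

For the core principle "the front can withstand the load, what it withstands it can digest, and the output is controlled", we can implement the design in two ways:

- MQ + a controlled number of MQ consumers + RestTemplate;

- MQ + MongoDB as the journal table + Spring Boot thread pool + Redisson self-renewing lock + RestTemplate;

Both approaches involve the following technical details.

I particularly like to get the whole tech stack ready before turning a design into code; it's a good habit. I wonder whether you have ever been to Tianjin and tried the snacks there?

In Tianjin, very early in the morning, when the sky is just getting light, you walk into one of the little hutongs and find a stall whose owner has, by 4 or 5 a.m., already boiled the water and prepared all the toppings: a small dish of salt, a small dish of garlic, a small dish of peanuts, a small dish of sesame, scallions, sauce, dried shrimp, laid out across the table.

A customer arrives: "Boss, a bowl of laomiancha (Tianjin millet-flour tea)."

The owner's hands fly between the dishes, picking up this and that, quickly sprinkling the prepared toppings into a large bowl; then he takes a big ladle, scoops from the big pot that has long been hot, pours it over the bowl full of toppings, and calls out "Here you go, enjoy!"

And the customer? One hand holds a guozi (fried dough), one bite and a mouthful of oil; the other holds a spoon for a sip of laomiancha. Delicious.

So let's get all our "toppings" ready in advance.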

| No. | Tech stack |
|---|---|
| 1 | spring boot 2.4.2 |
| 2 | webflux for our controllers |
| 3 | spring boot thread pool |
| 4 | spring boot quartz |
| 5 | spring boot redisson self-renewing lock |
| 6 | spring boot RabbitTemplate |
| 7 | spring boot mongoTemplate |
| 8 | mysql 5.7 |
| 9 | MongoDb 3.2.x |
| 10 | RabbitMq 3.8.x |
| 11 | Redis 6.x |
| 12 | apache jmeter 5.3 |

这些技术分解成两个spring boot工程:

- SkyPayment,用于模拟我们的中台/电商核心交易平台,它会提供一个回调接口,该接口接受一条http请求,这个http请求会达到几万的量,用于模拟上游端往SkyPayment工程里喂入海量的数据,此处的海量数据我们会使用jmeter的Arrival Threads来模拟出这个高并发的量来;

- SkyDownStreamSystem,用于模拟下游系统。即当上游(jmeter)往SkyPayment系统喂入海量的高并发数据时,SkyPayment把这个数据以“二传手”的身份传递给到SkyDownStreamSystem,SkyDownStreamSystem接到SkyPayment转过来的数据对数据库里的payment表的status做状态修改以模拟“入库”、“记帐”的动作;

系统设计要求:

- 这两个工程在运行时,面对几万级别的瞬时流量压入时必须从开始到结束,压入多少数据最终处理完多少数据;

- 有任何数据处理出错系统可以重复、补偿处理;

- 这两个系统中的http连接数必须控制在很小的并发数内,以便于任何系统不会被打爆;

- 在满足了以上三个点的同时还需要做到当海量数据全部发完后,系统可以在SkyPayment端传输完成后的1分钟内把所有的几万条数据处理完毕;

- 全过程每个项目的内存使用不超过2G、CPU不超过10%;

下面开始动手了。

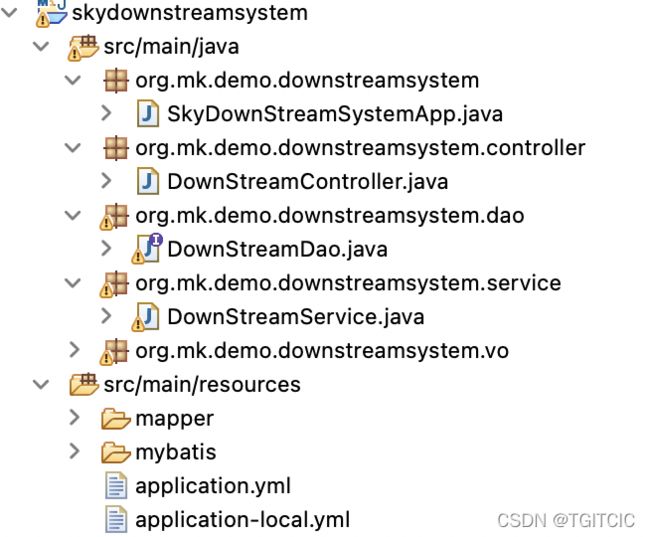

详细设计-用于模拟下游系统的SkyDownStream项目结构

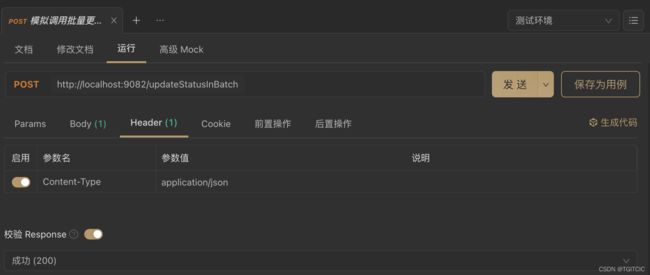

用于入库的controller

```java
@PostMapping(value = "/updateStatusInBatch", produces = "application/json")
@ResponseBody
public Mono<ResponseBean> updateStatusInBatch(@RequestBody List<PaymentBean> paymentList) {
    ResponseBean resp;
    try {
        downStreamService.updatePaymentStatusByList(paymentList);
        resp = new ResponseBean(ResponseCodeEnum.SUCCESS.getCode(), "success");
    } catch (Exception e) {
        resp = new ResponseBean(ResponseCodeEnum.FAIL.getCode(), "system error");
        logger.error(">>>>>>updateStatusInBatch error: " + e.getMessage(), e);
    }
    return Mono.just(resp);
}
```

DownStreamService.java

```java
@TimeLogger
@Transactional(rollbackFor = Exception.class)
public void updatePaymentStatusByList(List<PaymentBean> paymentList) throws Exception {
    downStreamDao.updatePaymentStatusByList(paymentList);
}
```

DownStreamDao.java

```java
public void updatePaymentStatusByList(List<PaymentBean> paymentList);
```

Mapper SQL (MyBatis):

```sql
-- Row-by-row version: one statement per pay_id
update sky_payment set status=#{status} where
pay_id=#{payId}

-- Batch version: the statement for each element (payment) of paymentList
update sky_payment set status=#{payment.status} where pay_id=#{payment.payId}
```

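As you can see, there are two DAO statements here: one updates the database row by row by pay_id, the other uses a batch update (batchUpdate).

Why do I stress batchUpdate here? Recall the point among the 9 fundamentals above: writing to the database one row at a time in a 1,000-iteration loop versus committing 1,000 rows at once can differ by dozens or even thousands of times. So in the POC code I did not only implement both approaches (RabbitMQ, and NoSQL for controllable async multithreaded distributed computing); within each approach I also compared "row-by-row insert/update" against "batch update", to verify just how dramatic the efficiency difference is.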
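For comparison with the MyBatis batch statement above, a minimal plain-JDBC sketch of the same idea: one `PreparedStatement`, each row added with `addBatch()`, and the batch flushed every `batchSize` rows with `executeBatch()`. `PaymentBatchUpdater` and `Row` are hypothetical; transaction handling and the caller that prepares the statement (`connection.prepareStatement(PaymentBatchUpdater.SQL)`) are left out.

```java
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.List;

/** Hypothetical JDBC batch updater for sky_payment.status. */
final class PaymentBatchUpdater {

    static final String SQL = "update sky_payment set status=? where pay_id=?";

    /** Minimal row holder used only in this sketch. */
    static final class Row {
        final int payId;
        final int status;

        Row(int payId, int status) {
            this.payId = payId;
            this.status = status;
        }
    }

    private PaymentBatchUpdater() {
    }

    /** Binds every row to the same statement and executes it in chunks; returns the number of batches run. */
    static int updateInBatches(PreparedStatement ps, List<Row> rows, int batchSize) throws SQLException {
        int pending = 0;
        int batches = 0;
        for (Row r : rows) {
            ps.setInt(1, r.status);
            ps.setInt(2, r.payId);
            ps.addBatch(); // queued on the client, not yet sent
            if (++pending == batchSize) {
                ps.executeBatch(); // one batch instead of batchSize separate executions
                pending = 0;
                batches++;
            }
        }
        if (pending > 0) {
            ps.executeBatch(); // flush the remainder
            batches++;
        }
        return batches;
    }
}
```

With MySQL Connector/J, batched statements are generally only collapsed into fewer network round trips when `rewriteBatchedStatements=true` is set in the JDBC URL; check this against the driver version in use.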

PaymentBean.java

```java
/**
 * Project: org.mk.demo.skypayment.vo PaymentBean.java
 *
 * Feb 1, 2022-11:12:17 PM Copyright 2022 XX Company. All rights reserved.
 */
package org.mk.demo.downstreamsystem.vo;

import java.io.Serializable;

/**
 * PaymentBean
 *
 * Feb 1, 2022 11:12:17 PM
 *
 * @version 1.0.0
 */
public class PaymentBean implements Serializable {

    private static final long serialVersionUID = 1L;

    private int payId;
    private int status;
    private double transfAmount = 0;

    public int getPayId() {
        return payId;
    }

    public void setPayId(int payId) {
        this.payId = payId;
    }

    public int getStatus() {
        return status;
    }

    public void setStatus(int status) {
        this.status = status;
    }

    public double getTransfAmount() {
        return transfAmount;
    }

    public void setTransfAmount(double transfAmount) {
        this.transfAmount = transfAmount;
    }
}
```

application.yml configuration of the SkyDownStream system

```yaml
mysql:
  datasource:
    db:
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      minIdle: 50
      initialSize: 50
      maxActive: 300
      maxWait: 1000
      testOnBorrow: false
      testOnReturn: true
      testWhileIdle: true
      validationQuery: select 1
      validationQueryTimeout: 1
      timeBetweenEvictionRunsMillis: 5000
      ConnectionErrorRetryAttempts: 3
      NotFullTimeoutRetryCount: 3
      numTestsPerEvictionRun: 10
      minEvictableIdleTimeMillis: 480000
      maxEvictableIdleTimeMillis: 480000
      keepAliveBetweenTimeMillis: 480000
      keepalive: true
      poolPreparedStatements: true
      maxPoolPreparedStatementPerConnectionSize: 512
      maxOpenPreparedStatements: 512
    master: #master db
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      url: jdbc:mysql://localhost:3306/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true
      username: root
      password: 111111
    slaver: #slaver db
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      url: jdbc:mysql://localhost:3307/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true
      username: root
      password: 111111
server:
  port: 9082
  tomcat:
    max-http-post-size: -1
    accept-count: 1000
    max-threads: 1000
    min-spare-threads: 150
    max-connections: 10000
    max-http-header-size: 10240000
spring:
  application:
    name: skydownstreamsystem
  servlet:
    multipart:
      max-file-size: 10MB
      max-request-size: 10MB
    context-path: /skydownstreamsystem
```

pom.xml of SkyDownStream (the parent pom.xml is shared with the SkyPayment project)

4.0.0

org.mk.demo

springboot-demo

0.0.1

skydownstreamsystem

org.springframework.boot

spring-boot-starter-web

${spring-boot.version}

org.slf4j

slf4j-log4j12

org.springframework.boot

spring-boot-starter-logging

org.springframework.boot

spring-boot-starter-amqp

org.springframework.boot

spring-boot-starter-logging

org.springframework.boot

spring-boot-starter-jdbc

org.springframework.boot

spring-boot-starter-logging

mysql

mysql-connector-java

com.alibaba

druid

org.springframework.boot

spring-boot-starter-data-redis

org.springframework.boot

spring-boot-starter-logging

org.slf4j

slf4j-log4j12

org.mybatis.spring.boot

mybatis-spring-boot-starter

redis.clients

jedis

org.redisson

redisson-spring-boot-starter

3.13.6

org.redisson

redisson-spring-data-23

org.apache.commons

commons-lang3

org.redisson

redisson-spring-data-21

3.13.1

org.springframework.boot

spring-boot-starter-test

test

org.springframework.boot

spring-boot-starter-logging

org.slf4j

slf4j-log4j12

org.springframework.boot

spring-boot-starter-log4j2

org.apache.logging.log4j

log4j-core

org.apache.logging.log4j

log4j-api

org.apache.logging.log4j

log4j-api

${log4j2.version}

org.apache.logging.log4j

log4j-core

${log4j2.version}

org.aspectj

aspectjweaver

com.lmax

disruptor

com.alibaba

fastjson

com.fasterxml.jackson.core

jackson-databind

com.google.guava

guava

com.alibaba

fastjson

com.fasterxml.jackson.core

jackson-databind

org.mk.demo

common-util

${common-util.version}

org.mk.demo

db-common

${db-common.version}

skyDownStream: how to use the downstream system once it is running

Sample request body

```json
[
  { "payId": "1", "status": "6" },
  { "payId": "2", "status": "6" },
  { "payId": "3", "status": "6" },
  { "payId": "4", "status": "6" },
  { "payId": "5", "status": "6" },
  { "payId": "10", "status": "6" }
]
```

Table structure of sky_payment

```sql
CREATE TABLE `sky_payment` (
  `pay_id` int(11) NOT NULL AUTO_INCREMENT,
  `status` tinyint(3) DEFAULT NULL,
  `transf_amount` varchar(45) DEFAULT NULL,
  `created_date` timestamp NULL DEFAULT CURRENT_TIMESTAMP,
  `updated_date` timestamp NULL DEFAULT NULL ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`pay_id`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4;
```

I wrote a stored procedure that fed more than 8 million rows into this table. Beyond roughly 5 million rows, badly designed batch operations easily trigger database deadlocks, master-slave replication lag, overly long transactions and similar trouble, so this fully simulates the production environment.

In fact, the single table involved in the problematic batch job in our production environment holds far less data than my simulation.

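Detailed design: the RabbitMQ solution

That's all for the SkyDownStream downstream system; the focus is SkyPayment, which simulates our middle platform / mall transaction system.

We start with the solution based on RabbitMQ + a controlled number of consumers + a controlled number of threads.

First, the parent pom shared by SkyPayment and SkyDownStream.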

parent pom.xml

4.0.0

org.mk.demo

springboot-demo

0.0.1

pom

1.8

0.8.3

0.0.1

2.4.2

3.4.13

2020.0.1

2.7.3

4.0.1

2.8.0

1.2.6

27.0.1-jre

1.2.59

2.7.3

1.1.4

5.1.46

3.4.2

1.8.13

1.8.14-RELEASE

1.0.0

4.1.42.Final

0.1.4

1.16.22

3.1.0

2.1.0

1.2.3

1.3.10.RELEASE

1.0.2

4.0.0

2.4.6

2.9.2

1.9.6

1.5.23

1.5.22

1.5.22

1.9.5

0.0.1

3.1.6

2.11.1

2.8.6

2.5.8

0.1.4

1.7.25

2.0-M2-groovy-2.5

2.2.0

3.10.0

2.6

5.0.0

${java.version}

${java.version}

3.8.1

3.2.3

3.1.1

2.2.3

1.4.197

3.4.14

4.4.10

4.5.6

3.0.0

UTF-8

UTF-8

2.0.22-RELEASE

4.1.0

4.1.0

4.1.0

1.6.1

3.1.0

3.10.0

2.6

5.0.0

2.2.5.RELEASE

2.2.1.RELEASE

3.16.1

2.17.1

0.0.1

0.0.1

0.0.1

0.0.1

org.redisson

redisson-spring-boot-starter

${redission.version}

org.redisson

redisson-spring-data-21

${redission.version}

com.alibaba.cloud

spring-cloud-alibaba-dependencies

${spring-cloud-alibaba.version}

pom

import

com.alibaba.cloud

spring-cloud-starter-alibaba-nacos-discovery

${nacos-discovery.version}

com.aldi.jdbc

sharding

${aldi-sharding.version}

com.auth0

java-jwt

${java-jwt.version}

cn.hutool

hutool-crypto

${hutool-crypto.version}

org.apache.poi

poi

${poi.version}

org.apache.poi

poi-ooxml

${poi-ooxml.version}

org.apache.poi

poi-ooxml

${poi-ooxml.version}

org.apache.poi

poi-ooxml-schemas

${poi-ooxml-schemas.version}

dom4j

dom4j

${dom4j.version}

org.apache.xmlbeans

xmlbeans

${xmlbeans.version}

com.odianyun.architecture

oseq-aldi

${oseq-aldi.version}

org.apache.httpcomponents

httpcore

${httpcore.version}

org.apache.httpcomponents

httpclient

${httpclient.version}

org.apache.zookeeper

zookeeper

${zkclient.version}

log4j

log4j

org.slf4j

slf4j-log4j12

org.quartz-scheduler

quartz

${quartz.version}

org.quartz-scheduler

quartz-jobs

${quartz.version}

org.springframework.cloud

spring-cloud-dependencies

Hoxton.SR7

pom

import

org.mockito

mockito-core

${mockito-core.version}

test

com.auth0

java-jwt

${java-jwt.version}

cn.hutool

hutool-crypto

${hutool-crypto.version}

org.springframework.boot

spring-boot-starter-actuator

${spring-boot.version}

org.logback-extensions

logback-ext-spring

${logback-ext-spring.version}

org.slf4j

jcl-over-slf4j

${jcl-over-slf4j.version}

com.h2database

h2

${h2.version}

org.apache.zookeeper

zookeeper

${zookeeper.version}

org.slf4j

slf4j-log4j12

log4j

log4j

com.xuxueli

xxl-job-core

${xxljob.version}

org.springframework.boot

spring-boot-starter-test

${spring-boot.version}

test

org.springframework.boot

spring-boot-starter-logging

org.slf4j

slf4j-log4j12

org.spockframework

spock-core

1.3-groovy-2.4

test

org.spockframework

spock-spring

1.3-RC1-groovy-2.4

test

org.codehaus.groovy

groovy-all

2.4.6

com.google.code.gson

gson

${gson.version}

com.fasterxml.jackson.core

jackson-databind

${jackson-databind.version}

org.springframework.boot

spring-boot-starter-web-services

${spring-boot.version}

org.apache.cxf

cxf-rt-frontend-jaxws

${cxf.version}

org.apache.cxf

cxf-rt-transports-http

${cxf.version}

org.springframework.boot

spring-boot-starter-security

${spring-boot.version}

io.github.swagger2markup

swagger2markup

1.3.1

io.springfox

springfox-swagger2

${swagger.version}

io.springfox

springfox-swagger-ui

${swagger.version}

com.github.xiaoymin

swagger-bootstrap-ui

${swagger-bootstrap-ui.version}

io.swagger

swagger-annotations

${swagger-annotations.version}

io.swagger

swagger-models

${swagger-models.version}

org.sky

sky-sharding-jdbc

${sky-sharding-jdbc.version}

com.googlecode.xmemcached

xmemcached

${xmemcached.version}

org.apache.shardingsphere

sharding-jdbc-core

${shardingsphere.jdbc.version}

org.springframework.kafka

spring-kafka

1.3.10.RELEASE

org.mybatis.spring.boot

mybatis-spring-boot-starter

${mybatis.version}

com.github.pagehelper

pagehelper-spring-boot-starter

${pagehelper-mybatis.version}

org.springframework.boot

spring-boot-starter-web

${spring-boot.version}

org.slf4j

slf4j-log4j12

org.springframework.boot

spring-boot-starter-logging

org.springframework.boot

spring-boot-dependencies

${spring-boot.version}

pom

import

org.apache.dubbo

dubbo-spring-boot-starter

${dubbo.version}

org.slf4j

slf4j-log4j12

org.springframework.boot

spring-boot-starter-logging

org.apache.dubbo

dubbo

${dubbo.version}

javax.servlet

servlet-api

org.apache.curator

curator-framework

${curator-framework.version}

org.apache.curator

curator-recipes

${curator-recipes.version}

mysql

mysql-connector-java

${mysql-connector-java.version}

com.alibaba

druid

${druid.version}

com.alibaba

druid-spring-boot-starter

${druid.version}

com.lmax

disruptor

${disruptor.version}

com.google.guava

guava

${guava.version}

com.alibaba

fastjson

${fastjson.version}

org.apache.dubbo

dubbo-registry-nacos

${dubbo-registry-nacos.version}

com.alibaba.nacos

nacos-client

${nacos-client.version}

org.aspectj

aspectjweaver

${aspectj.version}

org.springframework.boot

spring-boot-starter-data-redis

${spring-boot.version}

io.seata

seata-all

${seata.version}

io.netty

netty-all

${netty.version}

org.projectlombok

lombok

${lombok.version}

com.alibaba.boot

nacos-config-spring-boot-starter

${nacos.spring.version}

nacos-client

com.alibaba.nacos

net.sourceforge.groboutils

groboutils-core

5

commons-lang

commons-lang

${commons-lang.version}

redis-common

common-util

db-common

skypayment

skydownstreamsystem

httptools-common

pom.xml of SkyPayment

4.0.0

org.mk.demo

springboot-demo

0.0.1

skypayment

io.netty

netty-all

org.springframework.boot

spring-boot-starter-web

${spring-boot.version}

org.slf4j

slf4j-log4j12

org.springframework.boot

spring-boot-starter-logging

org.springframework.boot

spring-boot-starter-quartz

org.springframework.boot

spring-boot-starter-logging

org.quartz-scheduler

quartz

org.quartz-scheduler

quartz-jobs

org.springframework.boot

spring-boot-starter-amqp

org.springframework.boot

spring-boot-starter-logging

org.springframework.boot

spring-boot-starter-jdbc

org.springframework.boot

spring-boot-starter-logging

mysql

mysql-connector-java

com.alibaba

druid

org.springframework.boot

spring-boot-starter-data-redis

org.springframework.boot

spring-boot-starter-logging

org.slf4j

slf4j-log4j12

org.mybatis.spring.boot

mybatis-spring-boot-starter

redis.clients

jedis

org.redisson

redisson-spring-boot-starter

${redission.version}

org.redisson

redisson-spring-data-21

${redission.version}

org.apache.commons

commons-lang3

org.springframework.boot

spring-boot-starter-test

test

org.springframework.boot

spring-boot-starter-logging

org.slf4j

slf4j-log4j12

org.springframework.boot

spring-boot-starter-log4j2

org.apache.logging.log4j

log4j-core

org.apache.logging.log4j

log4j-api

org.apache.logging.log4j

log4j-api

${log4j2.version}

org.apache.logging.log4j

log4j-core

${log4j2.version}

org.aspectj

aspectjweaver

com.lmax

disruptor

com.alibaba

fastjson

com.fasterxml.jackson.core

jackson-databind

com.google.guava

guava

com.alibaba

fastjson

com.fasterxml.jackson.core

jackson-databind

org.springframework.boot

spring-boot-starter-data-mongodb

org.mk.demo

redis-common

${redis-common.version}

org.mk.demo

common-util

${common-util.version}

org.mk.demo

db-common

${db-common.version}

org.mk.demo

httptools-common

${httptools-common.version}

PaymentController.java

This is the "callback endpoint" that receives the massive, highly concurrent data pushed in by jmeter.

```java
/**
 * Project: org.mk.demo.skypayment.controller PaymentController.java
 *
 * Feb 3, 2022-12:08:59 AM Copyright 2022 XX Company. All rights reserved.
 */
package org.mk.demo.skypayment.controller;

import javax.annotation.Resource;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;
import org.mk.demo.util.response.ResponseBean;
import org.mk.demo.util.response.ResponseCodeEnum;
import org.mk.demo.skypayment.service.Publisher;
import org.mk.demo.skypayment.vo.PaymentBean;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.ResponseBody;
import org.springframework.web.bind.annotation.RestController;

import reactor.core.publisher.Mono;

/**
 * PaymentController
 *
 * Feb 3, 2022 12:08:59 AM
 *
 * @version 1.0.0
 */
@RestController
public class PaymentController {

    private Logger logger = LogManager.getLogger(this.getClass());

    @Resource
    private Publisher publisher;

    /** Callback endpoint: only enqueues the record and returns, so the caller is answered quickly. */
    @PostMapping(value = "/updateStatus", produces = "application/json")
    @ResponseBody
    public Mono<ResponseBean> updateStatus(@RequestBody PaymentBean payment) {
        ResponseBean resp;
        try {
            publisher.publishPaymentStatusChange(payment);
            resp = new ResponseBean(ResponseCodeEnum.SUCCESS.getCode(), "success");
        } catch (Exception e) {
            resp = new ResponseBean(ResponseCodeEnum.FAIL.getCode(), "system error");
            logger.error(">>>>>>updateStatus error: " + e.getMessage(), e);
        }
        return Mono.just(resp);
    }
}
```

This is how the endpoint takes in the data that jmeter "floods" it with.

Publisher.java

As the controller shows, every incoming record is written to the MQ as one message.

```java
/**
 * Project: org.mk.demo.rabbitmqdemo.service Publisher.java
 *
 * Nov 19, 2021-11:38:39 AM Copyright 2021 XX Company. All rights reserved.
 */
package org.mk.demo.skypayment.service;

import org.mk.demo.skypayment.config.mq.RabbitMqConfig;
import org.mk.demo.skypayment.vo.PaymentBean;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.amqp.AmqpException;
import org.springframework.amqp.rabbit.core.RabbitTemplate;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

/**
 * Publisher
 *
 * Nov 19, 2021 11:38:39 AM
 *
 * @version 1.0.0
 */
@Component
public class Publisher {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    // The JSON message converter is already set once on this bean in RabbitMqConfig; it is not
    // reset here on every call, because the template is a singleton shared by all request threads.
    @Autowired
    private RabbitTemplate rabbitTemplate;

    public void publishPaymentStatusChange(PaymentBean payment) {
        try {
            rabbitTemplate.convertAndSend(RabbitMqConfig.PAYMENT_EXCHANGE, RabbitMqConfig.PAYMENT_QUEUE, payment);
        } catch (AmqpException ex) {
            logger.error(">>>>>>publish exception: " + ex.getMessage(), ex);
            // Rethrow so the controller answers "system error" instead of "success"
            // and the caller knows this record was not queued.
            throw ex;
        }
    }
}
```

The corresponding RabbitTemplate configuration

RabbitMqConfig.java

```java
/**
 * Project: org.mk.demo.rabbitmqdemo.config RabbitMqConfig.java
 *
 * Nov 19, 2021-11:30:43 AM Copyright 2021 XX Company. All rights reserved.
 */
package org.mk.demo.skypayment.config.mq;

import org.springframework.amqp.core.Binding;
import org.springframework.amqp.core.BindingBuilder;
import org.springframework.amqp.core.ExchangeBuilder;
import org.springframework.amqp.core.FanoutExchange;
import org.springframework.amqp.core.Queue;
import org.springframework.amqp.core.QueueBuilder;
import org.springframework.amqp.core.TopicExchange;
import org.springframework.amqp.rabbit.connection.ConnectionFactory;
import org.springframework.amqp.rabbit.core.RabbitTemplate;
import org.springframework.amqp.support.converter.Jackson2JsonMessageConverter;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * RabbitMqConfig
 *
 * Nov 19, 2021 11:30:43 AM
 *
 * @version 1.0.0
 */
// @Configuration (rather than @Component) so that calls such as paymentQueue() inside
// bindBuilders() return the singleton beans instead of creating new instances.
@Configuration
public class RabbitMqConfig {

    /**
     * Mainly exercises a dead-letter queue for delayed consumption: a message is first sent to the
     * normal queue, which has a TTL; when the TTL expires the message is moved to the dead-letter
     * queue automatically, and a consumer then consumes it from the dead-letter queue.
     */
    public static final String PAYMENT_EXCHANGE = "payment.exchange";
    public static final String PAYMENT_DL_EXCHANGE = "payment.dl.exchange";
    public static final String PAYMENT_QUEUE = "payment.queue";
    public static final String PAYMENT_DEAD_QUEUE = "payment.queue.dead";
    public static final String PAYMENT_FANOUT_EXCHANGE = "paymentFanoutExchange";

    /** Message TTL of the normal queue (x-message-ttl), in milliseconds. */
    @Value("${queue.expire:5000}")
    private long queueExpire;

    /** Normal exchange. */
    @Bean
    public TopicExchange paymentExchange() {
        return (TopicExchange) ExchangeBuilder.topicExchange(PAYMENT_EXCHANGE).durable(true).build();
    }

    /** Dead-letter exchange. */
    @Bean
    public TopicExchange paymentExchangeDl() {
        return (TopicExchange) ExchangeBuilder.topicExchange(PAYMENT_DL_EXCHANGE).durable(true).build();
    }

    /**
     * Normal queue. Messages still waiting here after queueExpire ms are dead-lettered to
     * PAYMENT_DEAD_QUEUE, so they leave this queue without being consumed from it.
     */
    @Bean
    public Queue paymentQueue() {
        return QueueBuilder.durable(PAYMENT_QUEUE)
                .withArgument("x-dead-letter-exchange", PAYMENT_DL_EXCHANGE)   // dead-letter exchange
                .withArgument("x-message-ttl", queueExpire)
                .withArgument("x-dead-letter-routing-key", PAYMENT_DEAD_QUEUE) // dead-letter routing key
                .build();
    }

    /** Dead-letter queue. */
    @Bean
    public Queue paymentDelayQueue() {
        return QueueBuilder.durable(PAYMENT_DEAD_QUEUE).build();
    }

    /** Binds the dead-letter queue to the dead-letter exchange. */
    @Bean
    public Binding bindDeadBuilders() {
        return BindingBuilder.bind(paymentDelayQueue()).to(paymentExchangeDl()).with(PAYMENT_DEAD_QUEUE);
    }

    /** Binds the normal queue to the normal exchange. */
    @Bean
    public Binding bindBuilders() {
        return BindingBuilder.bind(paymentQueue()).to(paymentExchange()).with(PAYMENT_QUEUE);
    }

    /** Fanout (broadcast) exchange. */
    @Bean
    public FanoutExchange fanoutExchange() {
        return new FanoutExchange(PAYMENT_FANOUT_EXCHANGE);
    }

    @Bean
    public RabbitTemplate rabbitTemplate(final ConnectionFactory connectionFactory) {
        final RabbitTemplate rabbitTemplate = new RabbitTemplate(connectionFactory);
        rabbitTemplate.setMessageConverter(producerJackson2MessageConverter());
        return rabbitTemplate;
    }

    @Bean
    public Jackson2JsonMessageConverter producerJackson2MessageConverter() {
        return new Jackson2JsonMessageConverter();
    }
}
```

RabbitMqListenerConfig.java

```java
/**
 * Project: org.mk.demo.skypayment.config.mq RabbitMqListenerConfig.java
 *
 * Feb 3, 2022-12:35:21 AM Copyright 2022 XX Company. All rights reserved.
 */
package org.mk.demo.skypayment.config.mq;

import org.springframework.amqp.rabbit.annotation.RabbitListenerConfigurer;
import org.springframework.amqp.rabbit.listener.RabbitListenerEndpointRegistrar;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.messaging.converter.MappingJackson2MessageConverter;
import org.springframework.messaging.handler.annotation.support.DefaultMessageHandlerMethodFactory;
import org.springframework.messaging.handler.annotation.support.MessageHandlerMethodFactory;

/**
 * RabbitMqListenerConfig: lets @RabbitListener methods receive JSON payloads converted
 * into method parameters such as PaymentBean.
 *
 * Feb 3, 2022 12:35:21 AM
 *
 * @version 1.0.0
 */
@Configuration
public class RabbitMqListenerConfig implements RabbitListenerConfigurer {

    @Override
    public void configureRabbitListeners(RabbitListenerEndpointRegistrar registrar) {
        registrar.setMessageHandlerMethodFactory(messageHandlerMethodFactory());
    }

    @Bean
    MessageHandlerMethodFactory messageHandlerMethodFactory() {
        DefaultMessageHandlerMethodFactory messageHandlerMethodFactory = new DefaultMessageHandlerMethodFactory();
        messageHandlerMethodFactory.setMessageConverter(consumerJackson2MessageConverter());
        return messageHandlerMethodFactory;
    }

    @Bean
    public MappingJackson2MessageConverter consumerJackson2MessageConverter() {
        return new MappingJackson2MessageConverter();
    }
}
```

RabbitMQ consumer: Subscriber.java

```java
/**
 * Project: org.mk.demo.rabbitmqdemo.service Subscriber.java
 *
 * Nov 19, 2021-11:47:02 AM Copyright 2021 XX Company. All rights reserved.
 */
package org.mk.demo.skypayment.service;

import javax.annotation.Resource;

import org.mk.demo.skypayment.config.mq.RabbitMqConfig;
import org.mk.demo.skypayment.vo.PaymentBean;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.amqp.rabbit.annotation.RabbitListener;
import org.springframework.stereotype.Component;

/**
 * Subscriber
 *
 * Nov 19, 2021 11:47:02 AM
 *
 * @version 1.0.0
 */
@Component
public class Subscriber {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    @Resource
    private PaymentService paymentService;

    @RabbitListener(queues = RabbitMqConfig.PAYMENT_QUEUE)
    public void receiveDL(PaymentBean payment) {
        try {
            if (payment != null) {
                paymentService.UpdateRemotePaymentStatusById(payment, false);
            }
        } catch (Exception ex) {
            // The exception is swallowed, so the listener returns normally and the message is
            // acknowledged; it will not be redelivered and must be retried by other means.
            logger.error(">>>>>>Subscriber on payment queue exception: " + ex.getMessage(), ex);
        }
    }
}
```

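The core configuration for this consumer lives in application.yml:

```yaml
rabbitmq:
  publisher-confirm-type: CORRELATED
  listener:
    ## "simple" container type
    simple:
      # minimum number of consumers
      concurrency: 32
      # maximum number of consumers
      maxConcurrency: 64
      # how many unacknowledged messages each consumer may prefetch; with transactions it must be >= the transaction size
      prefetch: 32
```

So this configuration starts 32 consumers and lets the listener container grow to at most 64 concurrent consumers. It caps concurrency; it is not a per-second rate.

Each consumer in turn issues its request to SkyDownStream's /updateStatusInBatch, which means that at any moment one SkyPayment instance never has more than 64 concurrent RestTemplate requests in flight to the downstream.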

RestTemplate configuration

HttpConfig.java

```java
package org.mk.demo.config.http;

import java.util.ArrayList;
import java.util.List;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.http.client.BufferingClientHttpRequestFactory;
import org.springframework.http.client.ClientHttpRequestFactory;
import org.springframework.http.client.ClientHttpRequestInterceptor;
import org.springframework.http.client.SimpleClientHttpRequestFactory;
import org.springframework.web.client.RestTemplate;

@Configuration
@ConfigurationProperties(prefix = "http.rest.connection")
public class HttpConfig {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    private int connectionTimeout; // ms, bound from http.rest.connection.connectionTimeout
    private int readTimeout;       // ms, bound from http.rest.connection.readTimeout

    public int getConnectionTimeout() {
        return connectionTimeout;
    }

    public void setConnectionTimeout(int connectionTimeout) {
        this.connectionTimeout = connectionTimeout;
    }

    public int getReadTimeout() {
        return readTimeout;
    }

    public void setReadTimeout(int readTimeout) {
        this.readTimeout = readTimeout;
    }

    @Bean
    public RestTemplate restTemplate(ClientHttpRequestFactory factory) {
        // Buffering lets the logging interceptor read the response body without consuming it.
        RestTemplate restTemplate = new RestTemplate(new BufferingClientHttpRequestFactory(factory));
        List<ClientHttpRequestInterceptor> interceptors = new ArrayList<>();
        interceptors.add(new LoggingRequestInterceptor());
        restTemplate.setInterceptors(interceptors);
        return restTemplate;
    }

    @Bean
    public ClientHttpRequestFactory simpleClientHttpRequestFactory() {
        // Uses the JDK's HttpURLConnection by default; if needed it can be replaced (e.g. via
        // RestTemplate#setRequestFactory) with Apache HttpComponents, Netty, OkHttp or another HTTP library.
        SimpleClientHttpRequestFactory factory = new SimpleClientHttpRequestFactory();
        logger.info(">>>>>>http.rest.connection.readTimeout set to->{}", readTimeout);
        logger.info(">>>>>>http.rest.connection.connectionTimeout set to->{}", connectionTimeout);
        factory.setReadTimeout(readTimeout);          // ms
        factory.setConnectTimeout(connectionTimeout); // ms
        return factory;
    }
}
```

LoggingRequestInterceptor.java

package org.mk.demo.config.http;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.http.HttpRequest;

import org.springframework.http.client.ClientHttpRequestExecution;

import org.springframework.http.client.ClientHttpRequestInterceptor;

import org.springframework.http.client.ClientHttpResponse;

public class LoggingRequestInterceptor implements ClientHttpRequestInterceptor {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Override

public ClientHttpResponse intercept(HttpRequest request, byte[] body, ClientHttpRequestExecution execution)

throws IOException {

traceRequest(request, body);

ClientHttpResponse response = execution.execute(request, body);

traceResponse(response);

return response;

}

private void traceRequest(HttpRequest request, byte[] body) throws IOException {

logger.debug("===========================request begin================================================");

logger.debug("URI : {}", request.getURI());

logger.debug("Method : {}", request.getMethod());

logger.debug("Headers : {}", request.getHeaders());

logger.debug("Request body: {}", new String(body, "UTF-8"));

logger.debug("==========================request end================================================");

}

private void traceResponse(ClientHttpResponse response) throws IOException {

StringBuilder inputStringBuilder = new StringBuilder();

BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(response.getBody(), "UTF-8"));

String line = bufferedReader.readLine();

while (line != null) {

inputStringBuilder.append(line);

inputStringBuilder.append('\n');

line = bufferedReader.readLine();

}

logger.debug("============================response begin==========================================");

logger.debug("Status code : {}", response.getStatusCode());

logger.debug("Status text : {}", response.getStatusText());

logger.debug("Headers : {}", response.getHeaders());

logger.debug("Response body: {}", inputStringBuilder.toString());

logger.debug("=======================response end=================================================");

}

}
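traceResponse reads response.getBody(), and RestTemplate can still deserialize the body afterwards. That works only because restTemplate() wraps the factory in BufferingClientHttpRequestFactory, which keeps the body in memory. Without it, the network stream could be consumed only once, and the logging interceptor would leave an empty body for the message converter. The sketch below shows the idea with plain JDK streams. BufferedBody is a hypothetical class for illustration, not part of Spring.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical illustration of response buffering: the one-shot network stream
 * is drained into memory once, and every getBody() call gets a fresh stream.
 */
final class BufferedBody {
    private final byte[] bytes;

    BufferedBody(InputStream oneShotStream) throws IOException {
        try (InputStream in = oneShotStream) {
            this.bytes = in.readAllBytes(); // Java 9+; reads to the end of the stream
        }
    }

    /** Independent stream over the buffered bytes; safe to call repeatedly. */
    InputStream getBody() {
        return new ByteArrayInputStream(bytes);
    }

    /** What a logging interceptor would print. */
    String asUtf8() {
        return new String(bytes, StandardCharsets.UTF_8);
    }
}
```

The cost of this design is that the whole body sits in memory. That is acceptable for small JSON responses like the downstream system's status replies, but not for large downloads.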

Core RestTemplate configuration

http:

rest:

connection:

readTimeout: 4000

connectionTimeout: 2000

downstreamsystem-url: http://localhost:9082/updateStatus

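downstreamsystem-batch-url: http://localhost:9082/updateStatusInBatch

As the configuration above shows, readTimeout is 4 seconds and connectionTimeout is 2 seconds. Even when the downstream system has a problem, the call is therefore cut off promptly.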

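In most troubled projects, programmers respond by blindly lengthening timeouts, which is burying one's head in the sand. Microservices follow a one-second rule: any request that takes 1 second or longer is a slow request, whether external traffic reaches 100,000 or 1,000,000 requests per second. Instead of hiding the problem, we should focus on squeezing transaction response times down as far as possible. That is what being a programmer means.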
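The sketch below expresses the same fail-fast budget (2 s to connect, 4 s to wait for the response) with the JDK 11+ java.net.http client. This is a swapped-in analogue, not what SkyPayment uses. HttpRequest.timeout bounds the wait for the whole response, which is close to, but not identical to, the socket read timeout set by SimpleClientHttpRequestFactory. FailFastHttp is a hypothetical helper name.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.time.Duration;

/** Hypothetical helper mirroring http.rest.connection: 2000 ms connect, 4000 ms read. */
final class FailFastHttp {
    static final Duration CONNECT_TIMEOUT = Duration.ofMillis(2000);
    static final Duration RESPONSE_TIMEOUT = Duration.ofMillis(4000);

    private FailFastHttp() {
    }

    /** Client whose connection attempt is abandoned after 2 s. */
    static HttpClient client() {
        return HttpClient.newBuilder()
                .connectTimeout(CONNECT_TIMEOUT)
                .build();
    }

    /** Batch POST that fails with HttpTimeoutException if no response arrives within 4 s. */
    static HttpRequest batchRequest(String url, String jsonBody) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(RESPONSE_TIMEOUT)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }
}
```

With this setup, a call such as `FailFastHttp.client().send(FailFastHttp.batchRequest(url, json), HttpResponse.BodyHandlers.ofString())` fails with HttpConnectTimeoutException or HttpTimeoutException instead of hanging. That failure is what keeps a slow downstream system from tying up the caller's threads.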

Full application.yml of the SkyPayment project

mysql:

datasource:

db:

type: com.alibaba.druid.pool.DruidDataSource

driverClassName: com.mysql.jdbc.Driver

minIdle: 50

initialSize: 50

maxActive: 300

maxWait: 1000

testOnBorrow: false

testOnReturn: true

testWhileIdle: true

validationQuery: select 1

validationQueryTimeout: 1

timeBetweenEvictionRunsMillis: 5000

ConnectionErrorRetryAttempts: 3

NotFullTimeoutRetryCount: 3

numTestsPerEvictionRun: 10

minEvictableIdleTimeMillis: 480000

maxEvictableIdleTimeMillis: 480000

keepAliveBetweenTimeMillis: 480000

keepalive: true

poolPreparedStatements: true

maxPoolPreparedStatementPerConnectionSize: 512

maxOpenPreparedStatements: 512

master: #master db

type: com.alibaba.druid.pool.DruidDataSource

driverClassName: com.mysql.jdbc.Driver

url: jdbc:mysql://localhost:3306/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true

username: root

password: 111111

slaver: #slaver db

type: com.alibaba.druid.pool.DruidDataSource

driverClassName: com.mysql.jdbc.Driver

url: jdbc:mysql://localhost:3307/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true

username: root

password: 111111

server:

port: 9080

tomcat:

max-http-post-size: -1

max-http-header-size: 10240000

spring:

application:

name: skypayment

servlet:

multipart:

max-file-size: 10MB

max-request-size: 10MB

context-path: /skypayment

data:

mongodb:

uri: mongodb://skypayment:111111@localhost:27017,localhost:27018/skypayment

quartz:

properties:

org:

quartz:

scheduler:

instanceName: DefaultQuartzScheduler

instanceId: AUTO

makeSchedulerThreadDaemon: true

threadPool:

class: org.quartz.simpl.SimpleThreadPool

makeThreadsDaemons: true

threadCount: 20

threadPriority: 5

jobStore:

class: org.quartz.simpl.RAMJobStore

# driverDelegateClass: org.quartz.impl.jdbcjobstore.StdJDBCDelegate

# tablePrefix: qrtz_

# isClustered: false

misfireThreshold: 60000

#job-store-type: jdbc

#jdbc:

# initialize-schema: never

auto-startup: true

overwrite-existing-jobs: true

startup-delay: 10000

wait-for-jobs-to-complete-on-shutdown: true

redis:

password: 111111

#nodes: localhost:7001

redisson:

nodes: redis://127.0.0.1:27001,redis://127.0.0.1:27002,redis://127.0.0.1:27003

sentinel:

nodes: localhost:27001,localhost:27002,localhost:27003

master: master1

database: 0

switchFlag: 1

lettuce:

pool:

max-active: 100

max-wait: 10000

max-idle: 25

min-idle: 2

shutdown-timeout: 2000

timeBetweenEvictionRunsMillis: 5000

timeout: 5000

# RabbitMQ server configuration

rabbitmq:

addresses: localhost:5672

username: admin

password: admin

# virtual host; optional, the server's default vhost is used if it is not set

virtual-host: /

publisher-confirm-type: CORRELATED

listener:

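## simple container type

simple:

# minimum number of consumers

concurrency: 32

# maximum number of consumers

maxConcurrency: 64

# how many messages a consumer may fetch per request (prefetch); with transactions it must be >= the transaction size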

prefetch: 32

retry:

enabled: false

# RabbitMQ timeout, used as the queue expiry

queue:

expire: 1000

http:

rest:

connection:

readTimeout: 4000

connectionTimeout: 2000

downstreamsystem-url: http://localhost:9082/updateStatus

downstreamsystem-batch-url: http://localhost:9082/updateStatusInBatch

job:

corn:

updatePaymentStatus: 0/1 * * * * ?

threadpool:

corePoolSize: 25

maxPoolSize: 50

queueCapacity: 5000

keepAliveSeconds: 100

threadNamePrefix: syntask-thread

waitForTasksToCompleteOnShutdown: true

awaitTerminationSeconds: 100

Demo run: batch processing with a controlled number of RabbitMQ consumers

Start SkyDownStream and SkyPayment, attaching a Glowroot agent to each project before launching it.

- SkyPayment's Glowroot monitor runs at http://localhost:9000;

- SkyDownStream's Glowroot monitor runs at http://localhost:9001;

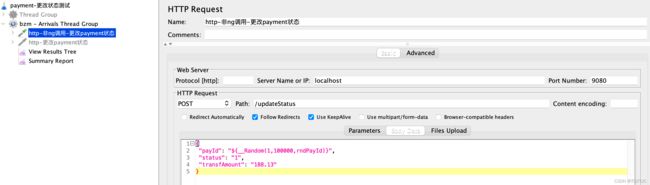

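Then we use JMeter's Arrival Thread Groups to push the following volume of requests into SkyPayment.

Threads start one at a time. After 15 seconds, once all threads are running, the load is held for 5 minutes.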

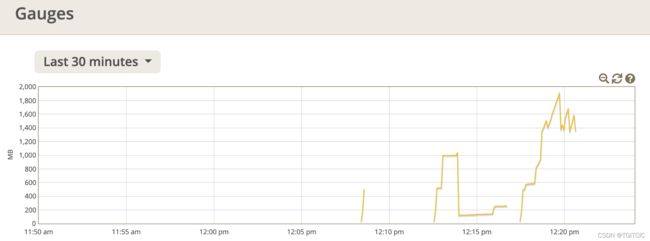

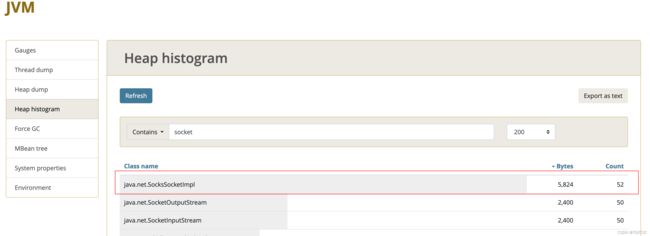

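Now we watch the Glowroot monitors of SkyPayment and SkyDownStream. Concurrency never exceeded 64 on either side: not in SkyPayment (number of httpclient objects in the JVM), and not in SkyDownStream (number of socket objects).

Looking further at the JVMs, neither project used more than 2 GB of memory.

JMeter sent all 24,720 requests. After that, the SkyPayment console kept printing output for a while, and in under 40 seconds every status in the skypayment table of the database had been updated.

This run shows that the system meets its design targets.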
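The ceiling is 64 for a specific reason. Spring AMQP's listener container starts `concurrency` consumers (32 here) and can add consumers up to `maxConcurrency` (64) under load, and each consumer processes one message at a time. It is therefore the number of consumers, not the arrival rate, that bounds concurrency toward the downstream system. The JDK-only simulation below shows the same property. A fixed thread pool stands in for the listener container and its internal queue for the RabbitMQ queue; both are assumptions, and BoundedConsumers is a hypothetical name.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical simulation: a fixed set of consumers draining a burst of messages. */
final class BoundedConsumers {
    /** Messages handled and the highest number of simultaneous downstream calls. */
    static final class Stats {
        final int processed;
        final int peakInFlight;

        Stats(int processed, int peakInFlight) {
            this.processed = processed;
            this.peakInFlight = peakInFlight;
        }
    }

    private BoundedConsumers() {
    }

    static Stats run(int messages, int consumers) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(consumers); // the consumers
        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        AtomicInteger processed = new AtomicInteger();
        for (int i = 0; i < messages; i++) { // the burst arriving on the queue
            pool.execute(() -> {
                int now = inFlight.incrementAndGet();
                peak.accumulateAndGet(now, Math::max); // remember the highest concurrency seen
                try {
                    Thread.sleep(1); // stands in for the HTTP call to the downstream system
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    inFlight.decrementAndGet();
                    processed.incrementAndGet();
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(1, TimeUnit.MINUTES);
        return new Stats(processed.get(), peak.get());
    }
}
```

However large the burst, `peakInFlight` never exceeds `consumers`. Extra messages simply wait in the queue, which is the behavior the Glowroot numbers above reflect.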

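Don't stop here. Next we look at another batch design, which uses NoSQL (MongoDB) as a staging queue and a thread pool for sending. You will find that its performance and scalability are several times better than those of the approach based on a controlled number of RabbitMQ consumers.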

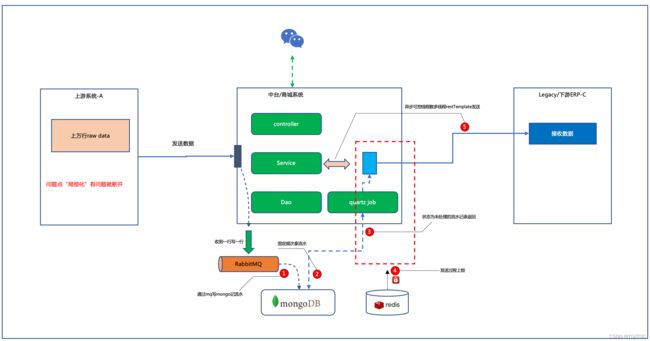

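Detailed design: a distributed batch solution using NoSQL as a buffer queue plus a thread pool

In this design the system works as follows:

- RabbitMQ is still used: a publisher puts each incoming front-end request onto an MQ queue;

- The number of consumers on the queue is controlled. Each consumer performs one MongoDB write, inserting the data as a flow record into a collection named "paymentBean", and sets that record's flag field to -1, meaning "unprocessed";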

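- The front-end part of the flow ends at step 2. The system then runs a built-in Quartz job once per second. Each run fetches a batch of flag=-1 records and sends them as a List to the downstream system, SkyDownStream;

- For sending we use Spring Boot's built-in thread pool, capped at 50 concurrent threads, and post to the downstream system with RestTemplate. After each batch of 50 records has been sent, the flag field in the staging flow collection (the paymentBean collection in MongoDB) is changed to 1;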

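- In step 4, the process of "sending the requests and flipping the flag of the sent records" is guarded by a Redisson self-renewing lock. This prevents a new Quartz run from starting before the previous one has finished, which would cause resource contention and data corruption. More importantly, this design is cloud native: the system is not tied to a single machine and can run as multiple replicas. Once several replicas are running, jobs on different replicas compete for the same resources and eventually cause row-level deadlocks in the database. That is why we use a Redisson self-renewing lock to prevent these scenarios;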
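The steps above can be checked with a small in-memory model:

- records are inserted with flag=-1;
- each tick picks up at most one batch;
- the flag is flipped to 1 only after a successful send;
- a tick that finds the lock taken skips instead of waiting.

This is a single-JVM sketch under stated assumptions, not the project's code. A LinkedHashMap stands in for the paymentBean collection, ReentrantLock.tryLock() for Redisson's tryLock(0, TimeUnit.SECONDS), and a Predicate for the RestTemplate call. StagingQueueJob is a hypothetical name. Across replicas, only a distributed lock provides the same guarantee.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.Predicate;

/**
 * Hypothetical in-memory model of the paymentBean staging collection and the
 * once-per-second job. flag -1 = unprocessed, flag 1 = sent downstream.
 */
final class StagingQueueJob {
    static final int UNHANDLED = -1;
    static final int HANDLED = 1;

    private final Map<Integer, Integer> flagByFlowId = new LinkedHashMap<>();
    // Single-JVM stand-in for the Redisson lock; replicas would need a distributed lock.
    private final ReentrantLock jobLock = new ReentrantLock();
    private int nextFlowId = 1;

    /** What the MQ consumer does: store a new flow record with flag = -1. */
    synchronized int insert() {
        int flowId = nextFlowId++;
        flagByFlowId.put(flowId, UNHANDLED);
        return flowId;
    }

    /**
     * One scheduler tick. Returns -1 if the previous tick still holds the lock,
     * otherwise the number of records sent and marked as handled.
     */
    int runOnce(int batchSize, Predicate<List<Integer>> sendDownstream) {
        if (!jobLock.tryLock()) { // no waiting, like tryLock(0, TimeUnit.SECONDS)
            return -1;
        }
        try {
            List<Integer> batch = fetchUnhandled(batchSize);
            if (batch.isEmpty() || !sendDownstream.test(batch)) {
                return 0; // nothing to do, or the send failed: records stay at -1 for the next tick
            }
            markHandled(batch);
            return batch.size();
        } finally {
            jobLock.unlock(); // only reached after a successful tryLock
        }
    }

    synchronized List<Integer> fetchUnhandled(int limit) {
        List<Integer> ids = new ArrayList<>();
        for (Map.Entry<Integer, Integer> entry : flagByFlowId.entrySet()) {
            if (ids.size() == limit) {
                break;
            }
            if (entry.getValue() == UNHANDLED) {
                ids.add(entry.getKey());
            }
        }
        return ids;
    }

    synchronized void markHandled(List<Integer> flowIds) {
        for (Integer flowId : flowIds) {
            flagByFlowId.put(flowId, HANDLED);
        }
    }

    synchronized long countUnhandled() {
        return flagByFlowId.values().stream().filter(flag -> flag == UNHANDLED).count();
    }
}
```

A failed send leaves the records at -1, so the next tick simply retries them. This gives the rerun/retry property from the design principles without any extra bookkeeping.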

Implementing the code

PaymentBean, which maps MongoDB's paymentBean collection

/**

* Project: org.mk.demo.skypayment.vo PaymentBean.java

*

* Feb 1, 2022-11:12:17 PM Copyright 2022 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.vo;

import java.io.Serializable;

import org.mk.demo.skypayment.config.mongo.AutoIncKey;

import org.springframework.data.annotation.Id;

import org.springframework.data.mongodb.core.mapping.Field;

/**

*

* PaymentBean

*

*

* Feb 1, 2022 11:12:17 PM

*

* @version 1.0.0

*

*/

public class PaymentBean implements Serializable {

private static final long serialVersionUID = 1L;

@Id

@AutoIncKey

@Field("flowId")

private int flowId;

public int getFlowId() {

return flowId;

}

public void setFlowId(int flowId) {

this.flowId = flowId;

}

private int flag;

public int getFlag() {

return flag;

}

public void setFlag(int flag) {

this.flag = flag;

}

private int payId;

public int getPayId() {

return payId;

}

public void setPayId(int payId) {

this.payId = payId;

}

public int getStatus() {

return status;

}

public void setStatus(int status) {

this.status = status;

}

public double getTransfAmount() {

return transfAmount;

}

public void setTransfAmount(double transfAmount) {

this.transfAmount = transfAmount;

}

private int status;

private double transfAmount = 0;

}

A custom AutoIncKey annotation for auto-incrementing primary keys with MongoTemplate

/**

* Project: org.mk.demo.skypayment.config.mongo AutoIncKey.java

*

* Feb 18, 2022-2:41:07 PM Copyright 2022 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.config.mongo;

import java.lang.annotation.ElementType;

import java.lang.annotation.Retention;

import java.lang.annotation.RetentionPolicy;

import java.lang.annotation.Target;

/**

*

* AutoIncKey

*

*

* Feb 18, 2022 2:41:07 PM

*

* @version 1.0.0

*

*/

@Target(ElementType.FIELD)

@Retention(RetentionPolicy.RUNTIME)

public @interface AutoIncKey {}

IncInfo.java, the auto-increment key counter stored in MongoDB

/**

* Project: org.mk.demo.skypayment.config.mongo IncInfo.java

*

* Feb 18, 2022-2:41:37 PM Copyright 2022 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.config.mongo;

import org.springframework.data.annotation.Id;

import org.springframework.data.mongodb.core.mapping.Document;

import org.springframework.data.mongodb.core.mapping.Field;

/**

*

* IncInfo

*

*

* Feb 18, 2022 2:41:37 PM

*

* @version 1.0.0

*

*/

@Document(collection = "inc")

public class IncInfo {

@Id

private String id;// primary key

@Field

private String collName;// name of the collection that needs an auto-increment id (set to MyDomain here)

public String getId() {

return id;

}

public void setId(String id) {

this.id = id;

}

public String getCollName() {

return collName;

}

public void setCollName(String collName) {

this.collName = collName;

}

public Integer getIncId() {

return incId;

}

public void setIncId(Integer incId) {

this.incId = incId;

}

@Field

private Integer incId;// current auto-increment value

}
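MongoDB does not provide auto-increment keys natively, so AutoIncKey and IncInfo together emulate one. The inc collection keeps one counter document per collection name, and the counter is incremented atomically. The new value is then written into the field marked with the annotation before the entity is saved. The JDK-only sketch below shows that mechanism, using an in-memory map of AtomicInteger in place of an atomic findAndModify with $inc. AutoIncField, InMemoryAutoInc and DemoPayment are hypothetical names. Unlike a Spring ReflectionUtils scan, this sketch only looks at fields declared directly on the class.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical marker playing the role of AutoIncKey. */
@Target(ElementType.FIELD)
@Retention(RetentionPolicy.RUNTIME)
@interface AutoIncField {
}

/** In-memory stand-in for the "inc" collection: one atomic counter per collection name. */
final class InMemoryAutoInc {
    private final Map<String, AtomicInteger> counters = new ConcurrentHashMap<>();

    /** Plays the role of an atomic "$inc incId by 1, upsert, return the new value". */
    int nextId(String collName) {
        return counters.computeIfAbsent(collName, name -> new AtomicInteger()).incrementAndGet();
    }

    /** Before saving: fill each @AutoIncField int field that is still 0. */
    void assignIds(Object entity, String collName) {
        for (Field field : entity.getClass().getDeclaredFields()) {
            if (!field.isAnnotationPresent(AutoIncField.class)) {
                continue;
            }
            try {
                field.setAccessible(true);
                if (field.getInt(entity) == 0) {
                    field.setInt(entity, nextId(collName));
                }
            } catch (IllegalAccessException e) {
                throw new IllegalStateException("cannot set " + field.getName(), e);
            }
        }
    }
}

/** Hypothetical cut-down PaymentBean. */
final class DemoPayment {
    @AutoIncField
    private int flowId;

    int getFlowId() {
        return flowId;
    }
}
```

Skipping fields that are already non-zero is a choice made for this sketch, so that saving the same object twice does not consume a second id.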

SaveEventListener.java, which assigns the auto-incremented id whenever a new record is inserted into MongoDB

/**

* Project: org.mk.demo.skypayment.config.mongo SaveEventListener.java

*

* Feb 18, 2022-2:29:43 PM Copyright 2022 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.config.mongo;

import org.mk.demo.skypayment.vo.PaymentBean;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.mongodb.core.FindAndModifyOptions;

import org.springframework.data.mongodb.core.MongoTemplate;

import org.springframework.data.mongodb.core.mapping.event.AbstractMongoEventListener;

import org.springframework.data.mongodb.core.mapping.event.BeforeConvertEvent;

import org.springframework.data.mongodb.core.query.Criteria;

import org.springframework.data.mongodb.core.query.Query;

import org.springframework.data.mongodb.core.query.Update;

import org.springframework.stereotype.Component;

import org.springframework.util.ReflectionUtils;

import java.lang.reflect.Field;

/**

*

* SaveEventListener

*

*

* Feb 18, 2022 2:29:43 PM

*

* @version 1.0.0

*

*/

@Component

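public class SaveEventListener extends AbstractMongoEventListener

With these utilities in place, we add a consumer to the existing SkyPayment project. Unlike the subscriber in the RabbitMQ example, this subscriber inserts a new record into MongoDB's paymentBean collection for every request it receives and sets that record's flag field to -1, meaning "unprocessed".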

SubscriberAndRunJob.java

/**

* Project: org.mk.demo.skypayment.service SubscriberToJob.java

*

* Feb 16, 2022-1:46:59 PM Copyright 2022 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.service;

import javax.annotation.Resource;

import org.mk.demo.skypayment.config.mq.RabbitMqConfig;

import org.mk.demo.skypayment.vo.PaymentBean;

import org.mk.demo.util.CloneUtil;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.amqp.rabbit.annotation.RabbitListener;

import org.springframework.stereotype.Component;

/**

*

* SubscriberToJob

*

*

* Feb 16, 2022 1:46:59 PM

*

* @version 1.0.0

*

*/

@Component

public class SubscriberAndRunJob {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Resource

private PaymentService paymentService;

@RabbitListener(queues = RabbitMqConfig.PAYMENT_QUEUE)

public void receiveDL(PaymentBean payment) throws Exception {

try {

if (payment != null) {

PaymentBean paymentFlow = CloneUtil.clone(payment);

paymentFlow.setFlag(-1);

paymentService.mongoAddPaymentFlow(paymentFlow);

}

} catch (Exception e) {

logger.error(e.getMessage(), e);

}

}

}

Quartz configuration for the job that runs once per second

quartz:

properties:

org:

quartz:

scheduler:

instanceName: DefaultQuartzScheduler

instanceId: AUTO

makeSchedulerThreadDaemon: true

threadPool:

class: org.quartz.simpl.SimpleThreadPool

makeThreadsDaemons: true

threadCount: 20

threadPriority: 5

jobStore:

class: org.quartz.simpl.RAMJobStore

# driverDelegateClass: org.quartz.impl.jdbcjobstore.StdJDBCDelegate

# tablePrefix: qrtz_

# isClustered: false

misfireThreshold: 60000

#job-store-type: jdbc

#jdbc:

# initialize-schema: never

auto-startup: true

overwrite-existing-jobs: true

startup-delay: 10000

wait-for-jobs-to-complete-on-shutdown: true

The corresponding Quartz auto-configuration

JobCronProperties.java

package org.mk.demo.skypayment.config.quartz;

import org.springframework.boot.context.properties.ConfigurationProperties;

@ConfigurationProperties("job.corn")

public class JobCronProperties {

private String updatePaymentStatus;

public String getUpdatePaymentStatus() {

return updatePaymentStatus;

}

public void setUpdatePaymentStatus(String updatePaymentStatus) {

this.updatePaymentStatus = updatePaymentStatus;

}

}

JobConfig.java

/**

* Project: org.mk.demo.skypayment.config.quartz JobConfig.java

*

* Feb 16, 2022-2:25:15 PM Copyright 2022 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.config.quartz;

import javax.annotation.Resource;

import org.mk.demo.skypayment.service.PaymentJob;

import org.quartz.CronScheduleBuilder;

import org.quartz.JobBuilder;

import org.quartz.JobDetail;

import org.quartz.Trigger;

import org.quartz.TriggerBuilder;

import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

/**

*

* JobConfig

*

*

* Feb 16, 2022 2:25:15 PM

*

* @version 1.0.0

*

*/

@Configuration

@EnableConfigurationProperties({JobCronProperties.class})

public class JobConfig {

@Resource

private JobCronProperties jobCronProperties;

@Bean

public JobDetail autoCancelJob() {

return JobBuilder.newJob(PaymentJob.class).withIdentity(PaymentJob.class.getName(), "updatePaymentStatus")

.requestRecovery().storeDurably().build();

}

@Bean

public Trigger autoCancelTrigger(JobDetail autoCancelJob) {

return TriggerBuilder.newTrigger().forJob(autoCancelJob)

.withSchedule(CronScheduleBuilder.cronSchedule(jobCronProperties.getUpdatePaymentStatus()))

.withIdentity(PaymentJob.class.getName(), "updatePaymentStatus").build();

}

}

PaymentJob.java

/**

* Project: org.mk.demo.skypayment.service PaymentJob.java

*

* Feb 16, 2022-4:00:14 PM Copyright 2022 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.service;

import java.util.List;

import javax.annotation.Resource;

import org.mk.demo.skypayment.vo.PaymentBean;

import org.quartz.JobExecutionContext;

import org.quartz.JobExecutionException;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.scheduling.quartz.QuartzJobBean;

/**

*

* PaymentJob

*

*

* Feb 16, 2022 4:00:14 PM

*

* @version 1.0.0

*

*/

public class PaymentJob extends QuartzJobBean {

private final Logger logger = LoggerFactory.getLogger(getClass());

/* (non-Javadoc)

* @see org.springframework.scheduling.quartz.QuartzJobBean#executeInternal(org.quartz.JobExecutionContext)

*/

@Resource

private PaymentService paymentService;

@Override

protected void executeInternal(JobExecutionContext context) throws JobExecutionException {

logger.info(">>>>>>Start updatePaymentStatus job");

try {

List<PaymentBean> unhandledPaymentFlowList = paymentService.mongoGetUnhandledPaymentFlow();

if (unhandledPaymentFlowList != null && unhandledPaymentFlowList.size() > 0) {

// switched to the Mongo-based method for updating the flow table

paymentService.mongoRemotePaymentStatusByList(unhandledPaymentFlowList, true);

Thread.sleep(50);

}

} catch (Exception e) {

logger.error(">>>>>>execute batch update paymentstatus error: " + e.getMessage(), e);

}

}

}

This job runs once per second. On each run it fetches the flag=-1 flow records through the paymentService.mongoGetUnhandledPaymentFlow method.

The mongoGetUnhandledPaymentFlow method in PaymentService

public List<PaymentBean> mongoGetUnhandledPaymentFlow() {

List<PaymentBean> paymentFlowList = new ArrayList<>();

try {

Query query = new Query(Criteria.where("flag").is(-1));

paymentFlowList = mongoTemplate.find(query, PaymentBean.class);

} catch (Exception e) {

logger.error(">>>>>>getUnhandledPaymentFlow from mongo error: " + e.getMessage(), e);

}

return paymentFlowList;

}

Once the records are found, PaymentService's mongoRemotePaymentStatusByList method sends them to the downstream system SkyDownStream.

The PaymentService.mongoRemotePaymentStatusByList method

@Async("callRemoteUpdatePaymentStatusInBatch")

public ResponseBean mongoRemotePaymentStatusByList(List<PaymentBean> paymentList, boolean batchFlag) {

ResponseBean resp = null;

if (paymentList == null || paymentList.size() < 1) {// only unprocessed records (flag=-1) enter the sending process

return new ResponseBean(ResponseCodeEnum.SUCCESS.getCode(), "No data to process");

}

RLock lock = redissonSentinel.getLock(ConstantsVal.UPDATE_PAYMENT_LOCK);

try {

boolean islock = lock.tryLock(0, TimeUnit.SECONDS);

if (islock) {

ResponseEntity<ResponseBean> responseEntity =

restTemplate.postForEntity(downStreamSystemBatchUrl, paymentList, ResponseBean.class);

// JSON response body mapped to ResponseBean

resp = responseEntity.getBody();

if (batchFlag) {

if (resp != null && resp.getCode() == 0) {

// switched to a Mongo update

// paymentDao.updatePaymentFlowDoneByList(paymentList);

for (PaymentBean paymentFlow : paymentList) {

Query query = Query.query(Criteria.where("_id").is(paymentFlow.getFlowId()));

Update update = Update.update("flag", 1);

mongoTemplate.upsert(query, update, "paymentBean");

}

}

}

} else {

resp = new ResponseBean(ResponseCodeEnum.WAITING.getCode(), "Please wait");

logger.info(">>>>>>A previous run is still in progress, please wait!");

}

} catch (Exception e) {

logger.error(">>>>>>remote remoteUpdatePaymentStatusById error: " + e.getMessage(), e);

resp = new ResponseBean(ResponseCodeEnum.FAIL.getCode(), "system error");

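} finally {

// release only if this thread actually acquired the lock; unlocking a lock that is not held throws IllegalMonitorStateException

if (lock.isHeldByCurrentThread()) {

lock.unlock();

}

}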

return resp;

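}

Three points in this method deserve attention:

- The @Async("callRemoteUpdatePaymentStatusInBatch") annotation on the method means that it automatically runs on the Spring Boot thread pool of that name;

- A Redisson self-renewing lock prevents the resource contention that would otherwise cause "deadlocks". tryLock(0, TimeUnit.SECONDS) is called without a lease time, so Redisson's watchdog keeps renewing the lock while the batch is in flight, and a run that cannot acquire the lock immediately just returns "please wait";

- The flag of the flow records in MongoDB is changed to 1 only after the batch has been delivered to the downstream system via RestTemplate;

Spring Boot thread pool settings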

ThreadPoolConfig.java

package org.mk.demo.skypayment.config.thread;

import java.util.concurrent.Executor;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.scheduling.annotation.EnableAsync;

import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

@EnableAsync

@Configuration

@ConfigurationProperties(prefix = "threadpool")

public class ThreadPoolConfig {

@Bean("callRemoteUpdatePaymentStatusInBatch")

public Executor syncTaskBatchExecutor() {

// ThreadPoolTaskExecutor taskExecutor = new ThreadPoolTaskExecutor();

ThreadPoolTaskExecutor taskExecutor = new VisiableThreadPoolTaskExecutor();

taskExecutor.setCorePoolSize(corePoolSize);

taskExecutor.setMaxPoolSize(maxPoolSize);

taskExecutor.setQueueCapacity(queueCapacity);

taskExecutor.setKeepAliveSeconds(keepAliveSeconds);

taskExecutor.setThreadNamePrefix(threadNamePrefix);

taskExecutor.setWaitForTasksToCompleteOnShutdown(waitForTasksToCompleteOnShutdown);

taskExecutor.setAwaitTerminationSeconds(awaitTerminationSeconds);

return taskExecutor;

}

@Value("${threadpool.corePoolSize}")

private int corePoolSize;

@Value("${threadpool.maxPoolSize}")

private int maxPoolSize;

@Value("${threadpool.queueCapacity}")

private int queueCapacity;

@Value("${threadpool.keepAliveSeconds}")

private int keepAliveSeconds;

@Value("${threadpool.threadNamePrefix}")

private String threadNamePrefix;

@Value("${threadpool.waitForTasksToCompleteOnShutdown}")

private boolean waitForTasksToCompleteOnShutdown;

@Value("${threadpool.awaitTerminationSeconds}")

private int awaitTerminationSeconds;

}
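One property of these settings is easy to miss. When queueCapacity is positive, ThreadPoolTaskExecutor is backed by a java.util.concurrent.ThreadPoolExecutor with a LinkedBlockingQueue, and such a pool only starts threads beyond corePoolSize once the queue is full. The JDK-only sketch below reproduces the threadpool.* values (core 25, max 50, queue 5000, keep-alive 100 s) to demonstrate this. BatchPoolSketch is a hypothetical name.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Hypothetical JDK equivalent of threadpool.*: core 25, max 50, queue 5000, keep-alive 100 s. */
final class BatchPoolSketch {
    private BatchPoolSketch() {
    }

    static ThreadPoolExecutor newPool() {
        return new ThreadPoolExecutor(25, 50, 100, TimeUnit.SECONDS, new LinkedBlockingQueue<>(5000));
    }

    /** Submits n tasks that block until release opens, then reports the current thread count. */
    static int poolSizeAfter(ThreadPoolExecutor pool, int n, CountDownLatch release) {
        for (int i = 0; i < n; i++) {
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        return pool.getPoolSize();
    }
}
```

Filling the 25 core threads and then queueing 5,000 more tasks still leaves the pool at 25 threads; only the next task makes it start thread 26. With these numbers, the job normally runs on 25 threads. The 50-thread ceiling only comes into play after 5,000 calls are already waiting in the queue.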

VisiableThreadPoolTaskExecutor.java

/**

* Project: org.mk.demo.odychannelweb.config VisiableThreadPoolTaskExecutor.java

*

* Jan 31, 2021-4:21:14 AM Copyright 2021 XX Company. All rights reserved.

*

*/

package org.mk.demo.skypayment.config.thread;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

import org.springframework.util.concurrent.ListenableFuture;

import java.util.concurrent.Callable;

import java.util.concurrent.Future;

import java.util.concurrent.ThreadPoolExecutor;

/**

*

* VisiableThreadPoolTaskExecutor

*

*

* Jan 31, 2021 4:21:14 AM

*

* @version 1.0.0

*

*/

public class VisiableThreadPoolTaskExecutor extends ThreadPoolTaskExecutor {

protected final Logger logger = LoggerFactory.getLogger(this.getClass());

private void showThreadPoolInfo(String prefix) {

ThreadPoolExecutor threadPoolExecutor = getThreadPoolExecutor();

if (null == threadPoolExecutor) {

return;

}

logger.info("{}, {},taskCount [{}], completedTaskCount [{}], activeCount [{}], queueSize [{}]",

this.getThreadNamePrefix(), prefix, threadPoolExecutor.getTaskCount(),

threadPoolExecutor.getCompletedTaskCount(), threadPoolExecutor.getActiveCount(),

threadPoolExecutor.getQueue().size());

}

@Override

public void execute(Runnable task) {

showThreadPoolInfo("1. do execute");

super.execute(task);

}

@Override

public void execute(Runnable task, long startTimeout) {

showThreadPoolInfo("2. do execute");

super.execute(task, startTimeout);

}

@Override

public Future<?> submit(Runnable task) {

showThreadPoolInfo("1. do submit");

return super.submit(task);

}

@Override

public <T> Future<T> submit(Callable<T> task) {

showThreadPoolInfo("2. do submit");

return super.submit(task);

}

@Override

public ListenableFuture<?> submitListenable(Runnable task) {

showThreadPoolInfo("1. do submitListenable");

return super.submitListenable(task);

}

@Override

public <T> ListenableFuture<T> submitListenable(Callable<T> task) {

showThreadPoolInfo("2. do submitListenable");

return super.submitListenable(task);

}

}
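This class overrides every execute/submit variant separately, and there is presumably a reason. ThreadPoolTaskExecutor's submit methods hand the task straight to the underlying ThreadPoolExecutor instead of calling this.execute, so logging only in execute would miss them. On a plain JDK ThreadPoolExecutor the situation is simpler. AbstractExecutorService.submit wraps the task and calls execute, so a single override sees every submission. Below is a sketch of that JDK variant. VisibleThreadPool is a hypothetical name, and java.util.logging replaces SLF4J to keep the sketch dependency-free.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.logging.Logger;

/** Hypothetical JDK-only counterpart of VisiableThreadPoolTaskExecutor. */
final class VisibleThreadPool extends ThreadPoolExecutor {
    private static final Logger LOG = Logger.getLogger(VisibleThreadPool.class.getName());

    private final String name;
    volatile String lastSnapshot = "";

    VisibleThreadPool(String name, int core, int max, int queueCapacity) {
        super(core, max, 100, TimeUnit.SECONDS, new LinkedBlockingQueue<>(queueCapacity));
        this.name = name;
    }

    /** submit(...) inherited from AbstractExecutorService also lands here, so one hook sees every task. */
    @Override
    public void execute(Runnable task) {
        lastSnapshot = String.format(
                "%s taskCount [%d], completedTaskCount [%d], activeCount [%d], queueSize [%d]",
                name, getTaskCount(), getCompletedTaskCount(), getActiveCount(), getQueue().size());
        LOG.info(lastSnapshot);
        super.execute(task);
    }
}
```

The snapshot is taken before the task is handed over, so the very first submission on an idle pool logs all counters as 0.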

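Results of the NoSQL staging-queue batch example with a controlled thread count

We start the SkyPayment project and feed it the same volume of sample data with JMeter.

Then we watch the system. Once JMeter reaches its peak, the 5-minute sustained-load phase begins. Under this heavy concurrency, we first look at how many sockets are open in the downstream system SkyDownStream, i.e. how many concurrent calls the downstream system receives per second.

This value never changed throughout the run, which shows that concurrency toward the downstream system is well controlled.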

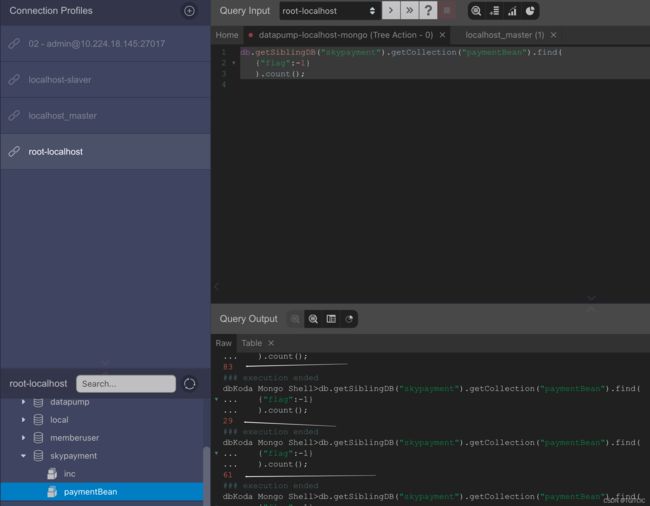

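We query MongoDB for records with flag=-1.

The count keeps moving: 83 one moment, 29 the next, then 61. This shows that even under such heavy concurrent inserts, the flow records are being consumed at a healthy rate.

Keep in mind that roughly 24,000 requests are flooding into the flow table. If the job could not keep up, the number of flag=-1 records would climb rapidly and steadily. What we see instead is that records come in and are consumed almost immediately.

So inflow and consumption are roughly in balance. During the run:

- SkyPayment stayed under 2 GB of memory;

- SkyDownStream stayed under 1 GB of memory;

When the JMeter load ended, the job in SkyPayment finished at almost the same moment.

Overall, the system performs far better than the batch design based on a controlled number of MQ consumers.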

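Summary

The large gap comes down to the following details:

- Prefer NoSQL, and ideally avoid the relational DB;

- If you need a lock, use a distributed lock such as a Redis lock rather than a lock like synchronized, which only works within a single JVM;

- Submit in batches rather than one request at a time;

- Keep threads under control: we cap concurrency at 50 threads, and in practice this outperforms poor designs that casually open 1,000 threads;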

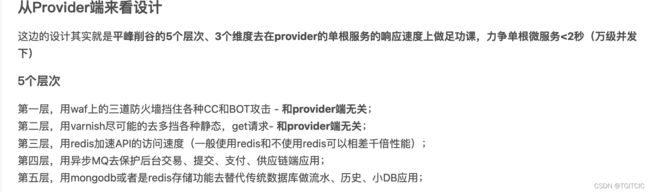

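So combine the 4 points above with the 5 layers described in an earlier post of mine, "Microservice Design Guide: How the Layers of a Microservice Should Call Each Other".

Now you can see how important a broad command of IT knowledge is for microservice design! You could call it technology, yet it is not purely technology; to me it is more like a "way of thinking", an "art".

That concludes this post.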