【ELM】Prediction with a Dynamic, Adaptive, Length-Changeable Weighted Extreme Learning Machine (ELM) (Matlab Implementation)

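Welcome to this blog ❤️❤️

About this blog: the posts strive for rigorous thinking and clear logic, to make things easy for readers.

⛳️ Motto: On a hundred-li journey, ninety li is only the halfway point.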

Contents

1 Overview

2 Results

3 References

4 Matlab Implementation

1 Overview

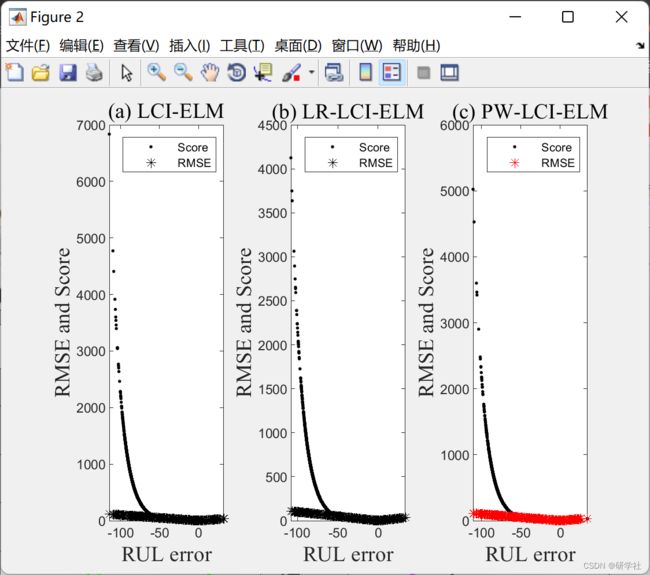

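This work proposes a new length-changeable extreme learning machine. It aims to improve the learning performance of a single-hidden-layer feedforward neural network (SLFN) on abundant, dynamic, imbalanced data. Particle swarm optimization (PSO) tunes and updates the hyperparameters (in the code below, the regularization constant C and the weighting parameters) during incremental learning. The algorithm is evaluated on a subset of the C-MAPSS (Commercial Modular Aero-Propulsion System Simulation) gas turbine engine dataset and compared with its variants. The results show that the new algorithm achieves better learning performance.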
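The post does not show the helpers `activeadd` and `R`, so the sketch below only illustrates what one training step plausibly computes. It assumes `activeadd` is a sigmoid hidden layer, `1./(1+exp(-(X*W+b)))`, and that `R` solves a weighted, regularized least-squares problem for the output weights, as in weighted ELM: beta = (H'*D*H + I/C) \ (H'*D*y), where D is a diagonal matrix of per-sample weights. The synthetic sine data, the sizes, and the uniform weight vector `sw` are hypothetical choices for illustration, not the author's setup.

```matlab
% Sketch of one weighted, regularized ELM fit (assumed forms of activeadd and R).
rng(0);                            % reproducible random weights
N = 200;                           % number of training samples
X = linspace(-3, 3, N)';           % 1-D synthetic input (illustrative)
y = sin(X);                        % synthetic target (illustrative)
L = 30;                            % number of hidden neurons
C = 1e3;                           % regularization constant
sw = ones(N, 1);                   % hypothetical per-sample weights

% Random input weights and biases in [-1, 1], drawn as in OP_W_LCI_ELM
W = rand(size(X, 2), L)*2 - 1;
b = rand(1, L)*2 - 1;

% Assumed sigmoid hidden layer (stand-in for activeadd)
H = 1 ./ (1 + exp(-bsxfun(@plus, X*W, b)));

% Assumed output-weight solver (stand-in for R):
% beta = (H'*D*H + I/C) \ (H'*D*y), with D = diag(sw)
D = diag(sw);
beta = (H'*D*H + eye(L)/C) \ (H'*D*y);

rmse = sqrt(mean((y - H*beta).^2));   % training RMSE
fprintf('training RMSE = %.4f\n', rmse);
```

Under these assumptions, raising an entry of `sw` pulls the fit toward that sample, which is how a weighted ELM compensates for imbalanced data; the PSO step in the code below searches over C and the weighting parameters instead of fixing them by hand.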

Source:

2 Results

Partial code:

```matlab
function [net] = OP_W_LCI_ELM(xtr,ytr,xts,yts,sample,Options)
% Length-changeable incremental ELM (LCI-ELM) with PSO-tuned
% regularization and weighting.
% Helpers called here but not shown in this post:
% activeadd, activerbf, PSO, R, ELMtest, scaledata, Score.

% Hyperparameters
k = Options.k;                                 % scale of the node-growth schedule
lambda = Options.lambda;                       % decay rate of the node-growth schedule
MaxHiddenNeurons = Options.MaxHiddenNeurons;   % limit on the number of hidden nodes
epsilon = Options.epsilon;                     % stopping tolerance on the RMSE change
ActivationFunType = Options.ActivationFunType; % 'sig' or 'radbas'
C = Options.C;                                 % initial regularization constant
Weighted = Options.Weighted;                   % initial weighting parameters
maxite = Options.maxite;                       % PSO settings (not used directly below;
epsilonPSO = Options.epsilonPSO;               % PSO receives the whole Options struct)

[~, InputDimension] = size(xtr);   % input dimension
start_time_training = cputime;     % start timing

W = [];                  % input weights
b = [];                  % hidden-layer biases
TrainingAccuracy = [];   % training RMSE of the current network
OutputWeights = [];      % output weights (beta)
num = 0;                 % total number of hidden nodes
count = 0;               % iteration counter
node = 0;                % hidden nodes used up to the previous step
nodes = [];              % cumulative hidden nodes after each step
E = [];                  % training RMSE per iteration
Ets = [];                % testing RMSE per iteration
Tol = [];                % tolerance per iteration (defined even for short runs)
Reg = [];                % best PSO solution per iteration
Tolerance = 1;           % initial tolerance

% Training phase
while num < MaxHiddenNeurons && Tolerance > epsilon
    % Number of hidden nodes added in this iteration (decays with count)
    len = floor(k*exp(-lambda*count)) + 1;
    wi = rand(InputDimension, len)*2 - 1;   % new input weights in [-1, 1]
    W = [W wi];
    switch ActivationFunType
        case 'sig'
            bi = rand(1, len)*2 - 1;        % new biases in [-1, 1]
            b = [b bi];
            H = activeadd(xtr, W, b);
        case 'radbas'
            bi = rand(1, len)*0.5;          % new biases in [0, 0.5]
            b = [b bi];
            H = activerbf(xtr, W, b);
        otherwise
            error('Unsupported ActivationFunType: %s', ActivationFunType);
    end

    % PSO search for the best regularization constant and weights,
    % starting from the initial population [C Weighted]
    population = [C Weighted];
    [~, population, ~, fit_behavior] = PSO(population, H, ytr, Options);
    Reg(count+1, :) = population;           % save the best solution

    % Output weights and training output
    beta = R(H, ytr, population);
    f = H*beta;
    OutputWeights = beta;
    TrainingAccuracy = sqrt(mean((ytr(:) - f(:)).^2));   % training RMSE
    E = [E TrainingAccuracy];

    % Tolerance: change in training RMSE between the newest and previous iteration
    if numel(E) > 1
        Tolerance = abs(E(end) - E(end-1));
        Tol(end+1, 1) = Tolerance;
    end
    count = count + 1;

    % Test the current network
    [O, TestingAccuracy, TestingTime] = ELMtest(xts, yts, ActivationFunType, W, b, OutputWeights);
    Ets = [Ets TestingAccuracy];            % testing RMSE

    num = num + len;                        % total hidden nodes
    nodes(count) = node + len;              % hidden nodes used after this step
    node = nodes(count);
end

end_time_training = cputime;
TrainingTime = end_time_training - start_time_training;
clear wi bi H beta;

% Prediction for the extra input set `sample`
switch ActivationFunType
    case 'sig'
        y_sample = activeadd(sample, W, b)*OutputWeights;
    case 'radbas'
        y_sample = activerbf(sample, W, b)*OutputWeights;
end
y_sample = scaledata(y_sample, 7, 125);   % rescale predictions to [7, 125]
num = num - 1;

% Performance on the test set
[SCORE, S, d, er] = Score(O, yts);

% Store results
net.E = E;
net.Ets = Ets;
net.num = num;
net.nodes = nodes;
net.Tol = Tol;
net.Tr_time = TrainingTime;
net.Ts_time = TestingTime;
net.Tr_acc = TrainingAccuracy;
net.Ts_acc = TestingAccuracy;
net.Opt_solution = population;
net.Score = SCORE;
net.S = S;
net.d = d;
net.er = er;
net.reg = Reg;
net.y_sample = y_sample;
net.fit_behavior = fit_behavior;
end
```
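In the training loop, the number of nodes added per iteration is len = floor(k*exp(-lambda*count)) + 1, so early iterations add many nodes and later ones add a single node. The short script below replays only that schedule, with no training and no Tolerance check, for the illustrative values k = 10, lambda = 0.5 and MaxHiddenNeurons = 30; these values are assumptions, not settings from the post.

```matlab
% Replay of the hidden-node growth schedule only (no training, no tolerance check).
k = 10;                   % illustrative value of Options.k
lambda = 0.5;             % illustrative value of Options.lambda
MaxHiddenNeurons = 30;    % illustrative value of Options.MaxHiddenNeurons

num = 0; count = 0; lens = []; nodes = [];
while num < MaxHiddenNeurons
    len = floor(k * exp(-lambda * count)) + 1;  % nodes added this iteration
    num = num + len;
    count = count + 1;
    lens(end+1) = len;      % nodes added per step
    nodes(end+1) = num;     % cumulative hidden nodes
end
disp(lens);    % 11 7 4 3 2 1 1 1
disp(nodes);   % 11 18 22 25 27 28 29 30
```

Because `num < MaxHiddenNeurons` is checked before a whole block of nodes is added, the final total can overshoot the limit: with MaxHiddenNeurons = 15, the second iteration already brings it to 18. In the full function the loop can also stop earlier, once the change in training RMSE falls to `epsilon` or below.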

3 References

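Some of the theory comes from online sources; if anything here infringes your rights, please contact us and it will be removed.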

[1] Y. X. Wu, D. Liu, and H. Jiang, “Length-Changeable Incremental Extreme Learning Machine,” J. Comput. Sci. Technol., vol. 32, no. 3, pp. 630–643, 2017.

[2] A. Saxena, K. Goebel, D. Simon, and N. Eklund, “Damage Propagation Modeling for Aircraft Engine Prognostics,” 2008.

[3] M. N. Alam, “Codes in MATLAB for Particle Swarm Optimization,” March 2016.

[4] J. Cao, K. Zhang, M. Luo, C. Yin, and X. Lai, “Extreme learning machine and adaptive sparse representation for image classification,” Neural Networks, vol. 81, pp. 91–102, 2016.