[PyTorch] CNN Image Classification Model

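This post builds a LeNet-5-style convolutional neural network in PyTorch to classify images from the Fashion-MNIST dataset. It covers four steps: preparing the data, defining the model, training on the training set, and evaluating on the test set with visualizations.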

import numpy as np

import pandas as pd

from sklearn.metrics import accuracy_score,confusion_matrix,classification_report

import matplotlib.pyplot as plt

import seaborn as sns

import copy

import time

import torch

import torch.nn as nn

from torch.optim import Adam

import torch.utils.data as Data

from torchvision import transforms

from torchvision.datasets import FashionMNIST

# Load the training data

train_data=FashionMNIST(

root="./data/FashionMNIST",

train=True,

transform=transforms.ToTensor(),

download=False

)

# Define the data loader

train_loader=Data.DataLoader(

dataset=train_data,

batch_size=64,

shuffle=False,

num_workers=0,

)

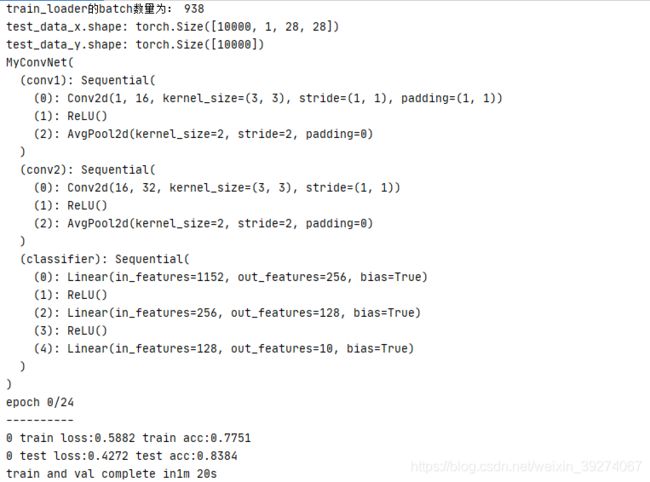

print("Number of batches in train_loader:",len(train_loader))

# Visualize one batch of images

# Fetch a single batch of data

for step,(b_x,b_y) in enumerate(train_loader):
    if step>0:
        break

# Plot the images in that batch

batch_x=b_x.squeeze().numpy()

batch_y=b_y.numpy()

class_label=train_data.classes

class_label[0]="T-shirt"

plt.figure(figsize=(12,5))

for ii in np.arange(len(batch_y)):
    plt.subplot(4,16,ii+1)  # 4 rows x 16 columns
    plt.imshow(batch_x[ii,:,:],cmap=plt.cm.gray)
    plt.title(class_label[batch_y[ii]],size=9)
    plt.axis("off")

plt.subplots_adjust(wspace=0.05)

# plt.show()

# Prepare the test set

test_data=FashionMNIST(

root="./data/FashionMNIST",

train=False,

# transform=transforms.ToTensor(),

download=False

)

test_data_x=test_data.data.type(torch.FloatTensor)/255.0  # scale pixel values to [0, 1]

test_data_x=torch.unsqueeze(test_data_x,dim=1)  # add a channel dimension: (N, 1, 28, 28)

test_data_y=test_data.targets

print("test_data_x.shape:",test_data_x.shape)

print("test_data_y.shape:",test_data_y.shape)
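The manual test-set preprocessing above (divide by 255, then add a channel axis) is meant to produce the same layout that `transforms.ToTensor()` gives the training images. The following is a minimal sketch of that idea using NumPy as a stand-in for the tensor operations; the tiny 2x2 "image" and the variable names are made up for illustration.

```python
import numpy as np

# One hypothetical 2x2 grayscale image stored as uint8, shape (N, H, W) = (1, 2, 2)
raw = np.array([[[0, 128], [255, 64]]], dtype=np.uint8)

# Step 1: convert to float and scale pixel values into [0, 1]
scaled = raw.astype(np.float32) / 255.0

# Step 2: insert a channel axis so the layout becomes (N, C, H, W)
batch = np.expand_dims(scaled, axis=1)

print(batch.shape)  # (1, 1, 2, 2)
```

Applied to the real test set, the same two steps turn the raw `(10000, 28, 28)` image tensor into the `(10000, 1, 28, 28)` input the network expects.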

class MyConvNet(nn.Module):
    def __init__(self):
        super(MyConvNet,self).__init__()
        # First convolutional block
        self.conv1=nn.Sequential(
            nn.Conv2d(
                in_channels=1,
                out_channels=16,
                kernel_size=3,
                stride=1,
                padding=1,
            ),  # 1*28*28 --> 16*28*28; a 3x3 kernel alone would give 26*26, but padding=1 keeps 28*28
            nn.ReLU(),
            nn.AvgPool2d(
                kernel_size=2,
                stride=2,
            ),  # 16*28*28 --> 16*14*14
        )
        # Second convolutional block
        self.conv2=nn.Sequential(
            nn.Conv2d(16,32,3,1,0),  # 16*14*14 --> 32*12*12
            nn.ReLU(),
            nn.AvgPool2d(2,2)  # 32*12*12 --> 32*6*6
        )
        # Classifier
        self.classifier=nn.Sequential(
            nn.Linear(32*6*6,256),  # input is the flattened 32*6*6 feature map
            nn.ReLU(),
            nn.Linear(256,128),
            nn.ReLU(),
            nn.Linear(128,10)
        )

    def forward(self,x):
        x=self.conv1(x)
        x=self.conv2(x)
        x=x.view(x.size(0),-1)  # flatten to (batch, 32*6*6)
        output=self.classifier(x)
        return output

myConvnet=MyConvNet()

print(myConvnet)
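The shape comments in the model (28 to 28 to 14 to 12 to 6) follow from the standard output-size formula for convolution and pooling layers without dilation, `out = floor((in + 2*padding - kernel) / stride) + 1`. The sketch below checks that arithmetic in plain Python; the helper name `conv2d_out` is hypothetical and not part of PyTorch.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Side length after a square conv/pool layer (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1

side = 28                                               # input images are 28x28
side = conv2d_out(side, kernel=3, stride=1, padding=1)  # conv1: stays 28
side = conv2d_out(side, kernel=2, stride=2)             # pool1: 14
side = conv2d_out(side, kernel=3, stride=1, padding=0)  # conv2: 12
side = conv2d_out(side, kernel=2, stride=2)             # pool2: 6

flat_features = 32 * side * side  # 32 output channels from conv2
print(side, flat_features)  # 6 1152
```

The result, 1152, matches the `32*6*6` input size of the first `nn.Linear` layer.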

def train_model(model,traindataloader,train_rate,criterion,optimizer,num_epochs=25):
    '''
    :param model: the network to train
    :param traindataloader: loader over the training data, which is split into training and validation batches
    :param train_rate: fraction of batches used for training; the remaining batches are used for validation
    :param criterion: loss function
    :param optimizer: optimizer
    :param num_epochs: number of training epochs
    :return: the trained model and a record of the training process
    '''
    batch_num=len(traindataloader)
    train_batch_num=round(batch_num*train_rate)  # round() rounds to the nearest integer
    best_model_wts=copy.deepcopy(model.state_dict())  # copy of the model parameters
    best_acc=0.0
    train_loss_all=[]
    train_acc_all=[]
    val_loss_all=[]
    val_acc_all=[]
    since=time.time()
    for epoch in range(num_epochs):
        print('epoch {}/{}'.format(epoch,num_epochs-1))
        print('-'*10)
        train_loss=0.0
        train_corrects=0
        train_num=0
        val_loss=0.0
        val_corrects=0
        val_num=0
        for step,(b_x,b_y) in enumerate(traindataloader):
            if step<train_batch_num:
                # Training batches: forward pass, backpropagation, parameter update
                model.train()
                output=model(b_x)
                pre_lab=torch.argmax(output,1)  # index of the largest logit along dim 1
                loss=criterion(output,b_y)
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                train_loss+=loss.item()*b_x.size(0)
                train_corrects+=torch.sum(pre_lab==b_y.data)
                train_num+=b_x.size(0)
            else:
                # Validation batches: forward pass only
                model.eval()
                with torch.no_grad():  # gradients are not needed for validation
                    output=model(b_x)
                    pre_lab=torch.argmax(output,1)  # index of the largest logit along dim 1
                    loss=criterion(output,b_y)
                val_loss+=loss.item()*b_x.size(0)
                val_corrects+=torch.sum(pre_lab==b_y.data)
                val_num+=b_x.size(0)
        # Per-epoch average loss and accuracy
        train_loss_all.append(train_loss/train_num)
        train_acc_all.append(train_corrects.double().item()/train_num)
        val_loss_all.append(val_loss/val_num)
        val_acc_all.append(val_corrects.double().item()/val_num)

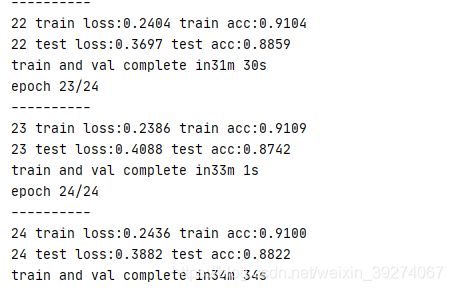

        print('{} train loss:{:.4f} train acc:{:.4f}'.format(epoch,train_loss_all[-1],train_acc_all[-1]))
        print('{} val loss:{:.4f} val acc:{:.4f}'.format(epoch,val_loss_all[-1],val_acc_all[-1]))
        # Keep the parameters with the best validation accuracy so far
        if val_acc_all[-1]>best_acc:
            best_acc=val_acc_all[-1]
            best_model_wts=copy.deepcopy(model.state_dict())
        time_user=time.time()-since
        print("train and val complete in {:.0f}m {:.0f}s".format(time_user//60,time_user%60))
    # Restore the best parameters before returning
    model.load_state_dict(best_model_wts)
    train_process=pd.DataFrame(
        data={
            "epoch":range(num_epochs),
            "train_loss_all":train_loss_all,
            "val_loss_all":val_loss_all,
            "train_acc_all":train_acc_all,
            "val_acc_all":val_acc_all
        }
    )
    return model,train_process

# Train the model

optimizer=torch.optim.Adam(myConvnet.parameters(),lr=0.01)

criterion=nn.CrossEntropyLoss()

myConvnet,train_process=train_model(myConvnet,train_loader,0.8,criterion,optimizer,num_epochs=25)
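Because `train_model` splits the data by batch index rather than by sample, it can help to work out what `train_rate=0.8` means for this loader. The sketch below redoes that arithmetic in plain Python, assuming the standard 60,000-image Fashion-MNIST training set and the `batch_size=64` used above.

```python
import math

num_samples = 60000   # size of the Fashion-MNIST training set
batch_size = 64       # same value as the DataLoader above
train_rate = 0.8      # same value passed to train_model

# The loader keeps the final partial batch, so the batch count is rounded up
batch_num = math.ceil(num_samples / batch_size)

# Same expression as in train_model
train_batch_num = round(batch_num * train_rate)

train_samples = train_batch_num * batch_size
val_samples = num_samples - train_samples
print(batch_num, train_batch_num, train_samples, val_samples)  # 938 750 48000 12000
```

With `shuffle=False` in the loader, the first 750 batches are the same in every epoch, so the training and validation subsets stay disjoint across epochs.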

# Visualize the training process

plt.figure(figsize=(12,4))

plt.subplot(1,2,1)

plt.plot(train_process.epoch,train_process.train_loss_all,"ro-",label="train loss")

plt.plot(train_process.epoch,train_process.val_loss_all,"bs-",label="val loss")

plt.legend()

plt.xlabel("epoch")

plt.ylabel("loss")

plt.subplot(1,2,2)

plt.plot(train_process.epoch,train_process.train_acc_all,"ro-",label="train acc")

plt.plot(train_process.epoch,train_process.val_acc_all,"bs-",label="val acc")

plt.legend()

plt.xlabel("epoch")

plt.ylabel("acc")

plt.show()

# Predict on the test set

myConvnet.eval()

output=myConvnet(test_data_x)

pre_lab=torch.argmax(output,1)

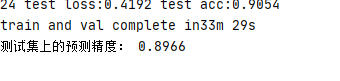

acc=accuracy_score(test_data_y,pre_lab)

print("Accuracy on the test set:",acc)

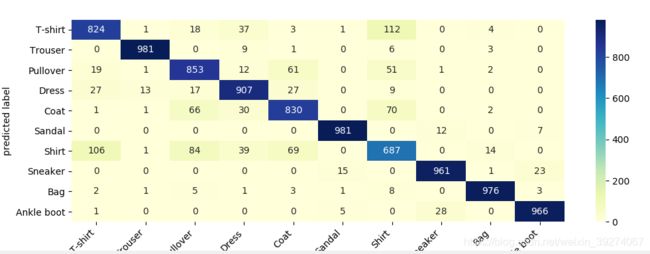

# Confusion matrix heatmap

conf_mat=confusion_matrix(test_data_y,pre_lab)

df_cm=pd.DataFrame(conf_mat,index=class_label,columns=class_label)

heatmap=sns.heatmap(df_cm,annot=True,fmt="d",cmap="YlGnBu")

heatmap.yaxis.set_ticklabels(heatmap.yaxis.get_ticklabels(),rotation=0,ha="right")

heatmap.xaxis.set_ticklabels(heatmap.xaxis.get_ticklabels(),rotation=45,ha="right")

plt.xlabel("predicted label")

plt.ylabel("true label")

plt.show()
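When reading the heatmap, recall that `confusion_matrix(y_true, y_pred)` puts true labels on the rows and predicted labels on the columns. The sketch below reproduces that convention with a small hand-written counter so the orientation is easy to verify; the function `confusion_counts` and the toy labels are hypothetical and only for illustration.

```python
def confusion_counts(y_true, y_pred, num_classes):
    """Count matrix with rows = true class, columns = predicted class."""
    mat = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        mat[t][p] += 1
    return mat

y_true = [0, 0, 1, 1, 2]
y_pred = [0, 1, 1, 1, 0]

mat = confusion_counts(y_true, y_pred, num_classes=3)
for row in mat:
    print(row)
# [1, 1, 0]   true class 0: one correct, one predicted as class 1
# [0, 2, 0]   true class 1: both correct
# [1, 0, 0]   true class 2: predicted as class 0

# Accuracy is the diagonal sum divided by the total count
accuracy = sum(mat[i][i] for i in range(3)) / len(y_true)
print(accuracy)  # 0.6
```

In the heatmap above, each row therefore shows how the images of one true class were distributed over the predicted classes.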