AutoGluon Usage Examples (Tabular, Image, and Multimodal)

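Preface

- A few days ago, while watching Mu Li's course, I came across an AutoML package: AutoGluon. Mu Li said that this package reached the top 10% on Kaggle's Titanic survival prediction and took first place in house price prediction (using a tabular + text multimodal model)

- Here I use Employee Satisfaction Prediction (Table), Children vs Adults Classification (Image), and stray cat adoption prediction (Multimodal); the data can be downloaded via the links on the dataset names

- Below I demonstrate, in turn, prediction on tabular data, classification of image data, and multimodal prediction (tabular, text, image)

- Install AutoGluon (in Jupyter Notebook): ! pip install autogluon

- For more of the AutoGluon API, see the official website and the GitHub repository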

Tabular Data Prediction

Importing Packages

- Import the required packages

# Load packages

import numpy as np

import pandas as pd

from plotnine import *

import seaborn as sns

from scipy import stats

import matplotlib as mpl

import matplotlib.pyplot as plt

# Make Chinese characters render correctly in plots

plt.rcParams['font.sans-serif']=['SimHei']

plt.rcParams['axes.unicode_minus'] = False

# Embed figures in the notebook

%matplotlib inline

# Increase figure resolution

%config InlineBackend.figure_format='retina'

# Train/test splitting

from sklearn.model_selection import train_test_split

# Evaluation metric

from sklearn.metrics import mean_squared_error

# Suppress warnings

import warnings

warnings.filterwarnings('ignore')

Data Import and Exploration

- Load the training and test data

df_train = pd.read_csv("../input/employee-satisfaction/训练集.csv", encoding="gbk",index_col = 'id')

df_test = pd.read_csv("../input/employee-satisfaction/测试集.csv", encoding="gbk",index_col = 'id')

df_train.head()

- Convert the string columns to numeric values

# Map the string columns to numeric codes

df_train.replace({'package':{'a':0,'b':1,'c':2,'d':3,'e':4},

'salary':{'low':0,'medium':1,'high':2}},

inplace=True)

df_test.replace({'package':{'a':0,'b':1,'c':2,'d':3,'e':4},

'salary':{'low':0,'medium':1,'high':2}},

inplace=True)

df_train.head()

- One-hot encode the division column

df_train = pd.get_dummies(df_train,columns=['division'])

df_test = pd.get_dummies(df_test,columns=['division'])

df_train.head()
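One thing to watch when encoding the training and test frames separately: if a category is missing from one of them, the two frames end up with different columns. The sketch below uses made-up toy frames (not the employee data) to show the mismatch and one possible fix with `reindex`; whether the real dataset has this problem is not something the post states.

```python
import pandas as pd

# Toy frames standing in for df_train / df_test (values are made up).
train = pd.DataFrame({"division": ["sales", "IT", "hr"], "salary": [0, 1, 2]})
test = pd.DataFrame({"division": ["sales", "IT"], "salary": [1, 0]})

train_enc = pd.get_dummies(train, columns=["division"])
test_enc = pd.get_dummies(test, columns=["division"])

# Encoding separately can give different column sets:
# "division_hr" never appears in the toy test frame.
missing = sorted(set(train_enc.columns) - set(test_enc.columns))

# Align the test frame to the training columns, filling absent dummies with 0.
test_aligned = test_enc.reindex(columns=train_enc.columns, fill_value=0)

print(missing)
print(test_aligned.columns.tolist() == train_enc.columns.tolist())
```

If the training frame also carries the target column, drop it from the column list before reindexing the test frame.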

- Check for outliers

# Draw box plots to check for outliers

i = 0

frows = 2

fcols = 2

plt.figure(dpi = 600,figsize=(12, 8))

for lab in df_train.columns[[0,2,8]]:

    i += 1

    plt.subplot(frows,fcols,i)

    plt.boxplot(x=df_train[lab].values,labels=[lab])

# plt.savefig('1.png')
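The points a box plot draws beyond its whiskers are, with matplotlib's default settings, values more than 1.5 times the interquartile range away from the quartiles. A minimal numeric version of the same check, on a made-up sample rather than the employee data, might look like this:

```python
import numpy as np

# Made-up sample with one obviously extreme value.
values = np.array([2.0, 3.0, 3.0, 4.0, 4.0, 5.0, 5.0, 6.0, 30.0])

q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1

# Same 1.5 * IQR fences that the default box plot whiskers use.
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = values[(values < lower) | (values > upper)]

print(lower, upper, outliers)
```

Having the fences as numbers makes it easy to count or filter outliers instead of only eyeballing the plot.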

- Normalize the data

# Min-max normalization

from sklearn import preprocessing

# The feature columns to normalize exclude the target column

features_columns = [col for col in df_train.columns if col not in ['satisfaction_level']]

min_max_scaler = preprocessing.MinMaxScaler()

min_max_scaler = min_max_scaler.fit(df_train[features_columns])

train_data_scaler = min_max_scaler.transform(df_train[features_columns])

test_data_scaler = min_max_scaler.transform(df_test[features_columns])

train_data_scaler = pd.DataFrame(train_data_scaler)

train_data_scaler.columns = features_columns

test_data_scaler = pd.DataFrame(test_data_scaler)

test_data_scaler.columns = features_columns

train_data_scaler['satisfaction_level'] = df_train['satisfaction_level'].values

df_train = train_data_scaler

df_test = test_data_scaler
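To make the normalization step concrete, here is a small sketch of what min-max scaling computes when the minimum and maximum are learned from the training data only and then reused for the test data, as in the code above. The numbers are invented for illustration.

```python
import numpy as np

train_col = np.array([10.0, 20.0, 30.0, 50.0])  # made-up training values
test_col = np.array([10.0, 40.0, 60.0])         # made-up test values

# Statistics come from the training column only.
col_min, col_max = train_col.min(), train_col.max()

def scale(x):
    """Map x with the training min/max: (x - min) / (max - min)."""
    return (x - col_min) / (col_max - col_min)

train_scaled = scale(train_col)
test_scaled = scale(test_col)

print(train_scaled)
print(test_scaled)
```

Note that a test value larger than the training maximum lands above 1, so scaled test features are not guaranteed to stay inside [0, 1].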

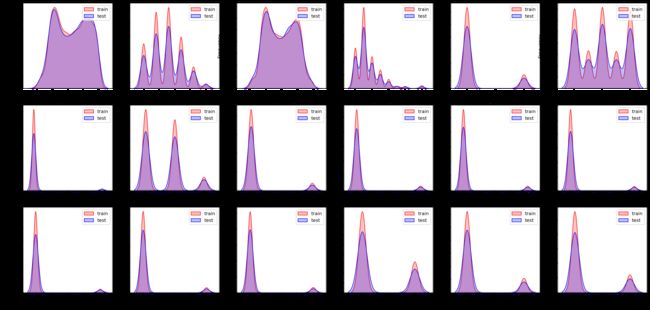

- Check whether the training and test distributions differ

# Compare the training and test distributions

dist_cols = 6

dist_rows = len(df_test.columns)

plt.figure(figsize=(4*dist_cols,4*dist_rows))

for i, col in enumerate(df_test.columns):

    ax=plt.subplot(dist_rows,dist_cols,i+1)

    ax = sns.kdeplot(df_train[col], color="Red", fill=True)

    ax = sns.kdeplot(df_test[col], color="Blue", fill=True)

    ax.set_xlabel(col)

    ax.set_ylabel("Frequency")

    ax = ax.legend(["train","test"])

plt.show()

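- Plot a heatmap of the correlations between features

# Plot the correlation heatmap

plt.figure(dpi = 300,figsize=(20, 16))

# Get the column labels

column = df_train.columns.tolist()

mcorr = df_train[column].corr(method="spearman")

# Create a boolean mask with the same shape as the correlation matrix

mask = np.zeros_like(mcorr, dtype=bool)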

mask[np.triu_indices_from(mask)] = True

cmap = sns.diverging_palette(220, 10, as_cmap=True)

g = sns.heatmap(mcorr, mask=mask, cmap=cmap, square=True, annot=True, fmt='0.2f')

# plt.savefig('2.png')

plt.show()
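The mask is what keeps the heatmap from showing every correlation twice: cells where the mask is True are hidden. A tiny sketch with a made-up 3x3 correlation matrix shows which cells survive:

```python
import numpy as np

# Made-up symmetric correlation matrix for three features.
corr = np.array([
    [1.0, 0.2, -0.5],
    [0.2, 1.0, 0.3],
    [-0.5, 0.3, 1.0],
])

# True on the diagonal and above it; those cells are hidden by the heatmap.
mask = np.zeros_like(corr, dtype=bool)
mask[np.triu_indices_from(mask)] = True

# Only the strictly lower triangle remains visible.
visible = corr[~mask]
print(mask)
print(visible)
```

For n features, n * (n - 1) / 2 cells remain, one per distinct feature pair.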

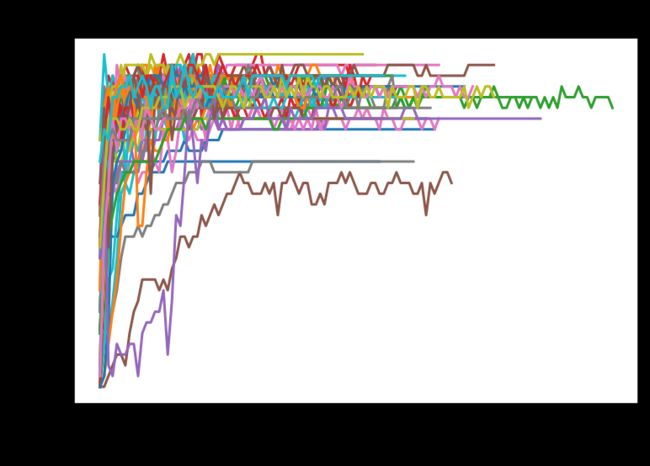

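Auto ML

- Convert the data into the format AutoGluon expects and define the prediction label; pursue the best model regardless of time cost, with 5-fold cross-validation (bagging) and model ensembling

- Training on CPU took about 20 minutes; the best model turned out to be WeightedEnsemble_L3

from autogluon.tabular import TabularDataset, TabularPredictor

train_data = TabularDataset(df_train)

# Prediction label

label = 'satisfaction_level'

# Directory where the models are saved

save_path = 'agModels-predictClass'

# Build the predictor; verbosity ranges from 0 to 4, and the default of 2 is usually fine

predictor = TabularPredictor(label=label,path=save_path,verbosity=0)

# presets='best_quality' ignores time cost and aims for the best model

predictor.fit(train_data,presets='best_quality',num_bag_folds=5,num_bag_sets=1,num_stack_levels=1)

# Show model performance

predictor.leaderboard(silent=True)

Output: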

model score_val pred_time_val fit_time pred_time_val_marginal fit_time_marginal stack_level can_infer fit_order

0 WeightedEnsemble_L3 -0.172081 7.343329 333.876595 0.001310 0.623922 3 True 22

1 ExtraTreesMSE_BAG_L2 -0.172605 5.116233 172.232698 1.107705 7.378392 2 True 17

2 CatBoost_BAG_L2 -0.173452 4.080730 179.991769 0.072202 15.137464 2 True 16

3 LightGBMXT_BAG_L2 -0.173462 4.172402 174.590106 0.163874 9.735801 2 True 13

4 RandomForestMSE_BAG_L2 -0.173785 4.957497 191.662132 0.948968 26.807827 2 True 15

5 LightGBM_BAG_L2 -0.173939 4.105616 175.162125 0.097088 10.307820 2 True 14

6 WeightedEnsemble_L2 -0.174116 2.045973 34.980294 0.001211 0.699372 2 True 12

7 XGBoost_BAG_L2 -0.174219 4.094137 180.652536 0.085609 15.798230 2 True 19

8 LightGBMLarge_BAG_L2 -0.174762 4.210132 182.043600 0.201604 17.189295 2 True 21

9 NeuralNetFastAI_BAG_L2 -0.175477 4.541154 209.018030 0.532626 44.163725 2 True 18

10 RandomForestMSE_BAG_L1 -0.175685 0.904012 7.887363 0.904012 7.887363 1 True 5

11 ExtraTreesMSE_BAG_L1 -0.177117 0.847087 4.248557 0.847087 4.248557 1 True 7

12 NeuralNetTorch_BAG_L2 -0.177767 4.301633 212.179403 0.293104 47.325097 2 True 20

13 LightGBMLarge_BAG_L1 -0.179927 0.274584 11.694356 0.274584 11.694356 1 True 11

14 XGBoost_BAG_L1 -0.180700 0.115822 7.230349 0.115822 7.230349 1 True 9

15 CatBoost_BAG_L1 -0.180793 0.053771 14.898683 0.053771 14.898683 1 True 6

16 LightGBM_BAG_L1 -0.181259 0.313841 8.541626 0.313841 8.541626 1 True 4

17 LightGBMXT_BAG_L1 -0.183204 0.583974 9.379441 0.583974 9.379441 1 True 3

18 NeuralNetFastAI_BAG_L1 -0.188396 0.415200 45.523810 0.415200 45.523810 1 True 8

19 NeuralNetTorch_BAG_L1 -0.192048 0.241119 54.613551 0.241119 54.613551 1 True 10

20 KNeighborsDist_BAG_L1 -0.195021 0.124069 0.015969 0.124069 0.015969 1 True 2

21 KNeighborsUnif_BAG_L1 -0.196171 0.135048 0.820599 0.135048 0.820599 1 True 1
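The negative values in score_val can look odd at first. AutoGluon reports scores so that higher is always better, which means error metrics are shown with their sign flipped. Assuming the default regression metric (root mean squared error) was used here, which the post does not state explicitly, a score of -0.172 would correspond to an RMSE of about 0.172. A minimal sketch with made-up numbers:

```python
import math

# Made-up targets and predictions, not taken from the employee data.
y_true = [0.50, 0.80, 0.30, 0.90]
y_pred = [0.60, 0.70, 0.50, 0.90]

# Root mean squared error: lower is better.
rmse = math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Sign-flipped form, so that "higher is better" holds for every metric.
score_val = -rmse

print(round(rmse, 4), round(score_val, 4))
```

Read this way, the leaderboard is sorted from the smallest validation error at the top to the largest at the bottom.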

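- Output the importance of each feature

# Delete the other models (reduces memory overhead); dry_run=False actually performs the deletion

predictor.delete_models(models_to_keep='best', dry_run=False)

# Show the best model

predictor.get_model_best()

# Show feature importance

predictor.feature_importance(train_data)

- Output: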

importance stddev p_value n p99_high p99_low

number_project 0.140325 0.001946 4.437726e-09 5 0.144332 0.136318

average_monthly_hours 0.120057 0.001612 3.897295e-09 5 0.123376 0.116739

time_spend_company 0.113286 0.002141 1.531254e-08 5 0.117695 0.108877

last_evaluation 0.108663 0.000795 3.442178e-10 5 0.110301 0.107026

package 0.071335 0.000921 3.339475e-09 5 0.073232 0.069438

salary 0.034672 0.001662 6.312180e-07 5 0.038093 0.031250

Work_accident 0.016181 0.000579 1.958612e-07 5 0.017373 0.014990

division_sales 0.016054 0.000679 3.826687e-07 5 0.017451 0.014656

division_technical 0.015590 0.000977 1.843122e-06 5 0.017603 0.013578

division_support 0.012123 0.000587 6.598496e-07 5 0.013332 0.010913

division_IT 0.007362 0.000297 3.168499e-07 5 0.007973 0.006750

division_product_mng 0.006758 0.000402 1.501938e-06 5 0.007587 0.005930

division_marketing 0.006032 0.000617 1.297950e-05 5 0.007302 0.004761

division_accounting 0.005793 0.000534 8.553800e-06 5 0.006892 0.004694

division_RandD 0.005265 0.000286 1.042488e-06 5 0.005854 0.004676

division_hr 0.004269 0.000494 2.108536e-05 5 0.005286 0.003253

division_management 0.003864 0.000752 1.638736e-04 5 0.005413 0.002316

promotion_last_5years 0.002596 0.000383 5.523791e-05 5 0.003385 0.001807
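AutoGluon's feature importance is based on permutation: shuffle one column, measure how much the score gets worse, and repeat a few times (the n column above is the number of shuffles). The sketch below reproduces that idea with NumPy on synthetic data and a plain least-squares model standing in for the trained predictor; it is an illustration of the technique, not AutoGluon's actual implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
X = rng.normal(size=(n, 3))
# Column 0 matters a lot, column 1 a little, column 2 is pure noise.
y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=n)

# Stand-in model: ordinary least squares.
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

def rmse(features):
    return float(np.sqrt(np.mean((features @ coef - y) ** 2)))

baseline = rmse(X)
importance = []
for j in range(X.shape[1]):
    drops = []
    for _ in range(5):  # five shuffles per feature, then averaged
        X_perm = X.copy()
        X_perm[:, j] = rng.permutation(X_perm[:, j])
        drops.append(rmse(X_perm) - baseline)
    importance.append(float(np.mean(drops)))

print(importance)
```

Like the call in the post, this sketch scores the model on the data it was trained on; held-out data generally gives a more honest estimate.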

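Prediction

- Load the test dataset; AutoGluon automatically uses the best model for prediction

# Wrap the test data

test_data = TabularDataset(df_test)

# Load the saved model

predictor = TabularPredictor.load(save_path)

# Get the predictions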

y_pred = predictor.predict(test_data)

y_pred

Image Data Classification

Splitting the Dataset

import autogluon.core as ag

from autogluon.vision import ImagePredictor, ImageDataset

train_data, _, test_data = ImageDataset.from_folders('../input/children-vs-adults-images', train='train', test='test')

print('train #', len(train_data), 'test #', len(test_data))

Output:

train # 680 test # 120
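Loading from folders relies on the directory layout: each split folder contains one sub-folder per class, and the sub-folder name becomes the label. The sketch below builds a throwaway directory tree and lists it in that spirit using only the standard library. The class names `adults` and `children` and the helper `list_images` are assumptions for illustration, not AutoGluon code.

```python
import os
import tempfile

# Build a throwaway tree: <root>/<split>/<class>/<image>
root = tempfile.mkdtemp()
for split in ("train", "test"):
    for label in ("adults", "children"):  # hypothetical class folder names
        folder = os.path.join(root, split, label)
        os.makedirs(folder)
        for i in range(2):
            open(os.path.join(folder, f"{i}.jpg"), "w").close()

def list_images(split_dir):
    """Return (path, class_index) pairs, one class per sub-folder."""
    rows = []
    classes = sorted(os.listdir(split_dir))
    for idx, name in enumerate(classes):
        class_dir = os.path.join(split_dir, name)
        for fname in sorted(os.listdir(class_dir)):
            rows.append((os.path.join(class_dir, fname), idx))
    return rows

train_rows = list_images(os.path.join(root, "train"))
print(len(train_rows), [label for _, label in train_rows])
```

This also explains the integer labels 0 and 1 that appear in the prediction output later on: they are indices of the class folders.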

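Auto ML

- Ignore time cost and aim for the best model

- I trained this model on Kaggle with a GPU for nearly 12 hours! The model reaches 96.05% accuracy on the training set and 97.06% on the validation set, which is a very good result

predictor = ImagePredictor(verbosity=2)

predictor.fit(train_data,presets ='best_quality')

Output: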

Finished, total runtime is 42713.23 s

{ 'best_config': { 'augmentation': { 'auto_augment': None,

'color_jitter': 0.4,

'cutmix': 0.0,

'cutmix_minmax': None,

'drop': 0.0,

'drop_block': None,

'drop_path': None,

'hflip': 0.5,

'mixup': 0.0,

'mixup_mode': 'batch',

'mixup_off_epoch': 0,

'mixup_prob': 1.0,

'mixup_switch_prob': 0.5,

'no_aug': False,

'ratio': (0.75, 1.3333333333333333),

'scale': (0.08, 1.0),

'smoothing': 0.1,

'train_interpolation': 'random',

'vflip': 0.0},

'data': { 'crop_pct': 0.99,

'img_size': None,

'input_size': None,

'interpolation': '',

'mean': None,

'std': None,

'validation_batch_size_multiplier': 1},

'estimator': <class 'gluoncv.auto.estimators.torch_image_classification.torch_image_classification.TorchImageClassificationEstimator'>,

'gpus': [0],

'img_cls': { 'global_pool_type': None,

'model': 'swin_base_patch4_window7_224',

'pretrained': True},

'misc': { 'amp': False,

'apex_amp': False,

'eval_metric': 'top1',

'log_interval': 50,

'native_amp': False,

'num_workers': 2,

'pin_mem': False,

'prefetcher': False,

'save_images': False,

'seed': 467,

'torchscript': False,

'tta': 0,

'use_multi_epochs_loader': False},

'model_ema': { 'model_ema': True,

'model_ema_decay': 0.9998,

'model_ema_force_cpu': False},

'optimizer': { 'clip_grad': None,

'clip_mode': 'norm',

'momentum': 0.9,

'opt': 'sgd',

'opt_betas': None,

'opt_eps': None,

'weight_decay': 0.0001},

'train': { 'batch_size': 16,

'bn_eps': None,

'bn_momentum': None,

'cooldown_epochs': 10,

'decay_epochs': 30,

'decay_rate': 0.1,

'early_stop_baseline': -inf,

'early_stop_max_value': inf,

'early_stop_min_delta': 0.001,

'early_stop_patience': 50,

'epochs': 200,

'lr': 0.0005115112828551085,

'lr_cycle_limit': 1,

'lr_cycle_mul': 1.0,

'lr_noise': None,

'lr_noise_pct': 0.67,

'lr_noise_std': 1.0,

'min_lr': 1e-05,

'output_lr_mult': 0.1,

'patience_epochs': 10,

'sched': 'step',

'start_epoch': 0,

'sync_bn': False,

'transfer_lr_mult': 0.01,

'warmup_epochs': 3,

'warmup_lr': 0.0001}},

'total_time': 42712.701295375824,

'train_acc': 0.9605263157894737,

'valid_acc': 0.9705882352941176}

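Prediction

- The best model reaches 89.2% accuracy on the test set, which is also a very good result, 2 points better than my hand-tuned model!

test_acc = predictor.evaluate(test_data)

print('Top-1 test acc: %.3f' % test_acc['top1'])

Output: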

Top-1 test acc: 0.892
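Since the test set has 120 images, the printed accuracy can be turned back into a count of correct predictions. This is an inference from the two printed numbers, not something the post reports directly:

```python
n_test = 120  # test-set size printed when the data was loaded

# Every count of correct predictions whose accuracy would print as 0.892.
matches = [k for k in range(n_test + 1) if "%.3f" % (k / n_test) == "0.892"]

print(matches)
```

Only one count fits, so the model got 107 of the 120 test images right and 13 wrong.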

- Output the predicted labels for the test set

result = predictor.predict(test_data)

print(result)

Output:

0 0

1 0

2 0

3 0

4 0

..

115 1

116 1

117 1

118 1

119 1

Name: label, Length: 120, dtype: int64

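Multimodal Data Prediction

- Multimodal data here means tabular data + text data + image data; the prediction is based on an ensemble of multiple models.

Downloading the Dataset

download_dir = './ag_petfinder_tutorial'

zip_file = 'https://automl-mm-bench.s3.amazonaws.com/petfinder_kaggle.zip'

import os

from autogluon.core.utils.loaders import load_zip

load_zip.unzip(zip_file, unzip_dir=download_dir)

dataset_path = download_dir + '/petfinder_processed'

os.listdir(dataset_path)

# train_images: training-set images

# test_images: test-set images

# train.csv: training-set labels, features, and text

# test.csv: test-set labels, features, and text

# dev.csv: labeled development split (loaded below as test_data)

Loading the Data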

train_data = pd.read_csv(f'{dataset_path}/train.csv', index_col=0)

test_data = pd.read_csv(f'{dataset_path}/dev.csv', index_col=0)

train_data.info()

Output:

<class 'pandas.core.frame.DataFrame'>

Int64Index: 11994 entries, 10721 to 5640

Data columns (total 25 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Type 11994 non-null int64

1 Name 10988 non-null object

2 Age 11994 non-null int64

3 Breed1 11994 non-null int64

4 Breed2 11994 non-null int64

5 Gender 11994 non-null int64

6 Color1 11994 non-null int64

7 Color2 11994 non-null int64

8 Color3 11994 non-null int64

9 MaturitySize 11994 non-null int64

10 FurLength 11994 non-null int64

11 Vaccinated 11994 non-null int64

12 Dewormed 11994 non-null int64

13 Sterilized 11994 non-null int64

14 Health 11994 non-null int64

15 Quantity 11994 non-null int64

16 Fee 11994 non-null int64

17 State 11994 non-null int64

18 RescuerID 11994 non-null object

19 VideoAmt 11994 non-null int64

20 Description 11986 non-null object

21 PetID 11994 non-null object

22 PhotoAmt 11994 non-null float64

23 AdoptionSpeed 11994 non-null int64

24 Images 11994 non-null object

dtypes: float64(1), int64(19), object(5)

memory usage: 2.4+ MB

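- Tell AutoGluon which column is the prediction target and which holds the image paths

# Value to predict

label = 'AdoptionSpeed'

# Column containing the image paths

image_col = 'Images'

- Each animal has 2 to 3 different photos. Because AutoGluon can read only one image per row, we keep only the first path in the image path column

# Keep the first image of each row

train_data[image_col] = train_data[image_col].apply(lambda ele: ele.split(';')[0])

test_data[image_col] = test_data[image_col].apply(lambda ele: ele.split(';')[0])

train_data[image_col].iloc[0]

- Convert the image paths to absolute paths

# Expand the image paths from the CSV files into full paths

def path_expander(path, base_folder):

    path_l = path.split(';')

    return ';'.join([os.path.abspath(os.path.join(base_folder, path)) for path in path_l])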

train_data[image_col] = train_data[image_col].apply(lambda ele: path_expander(ele, base_folder=dataset_path))

test_data[image_col] = test_data[image_col].apply(lambda ele: path_expander(ele, base_folder=dataset_path))

train_data[image_col].iloc[0]
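The two path steps above are easier to follow on a single value. The sketch below applies the same logic to one hypothetical cell of the Images column; the file names are invented, and the helper names `first_image` and `expand` are only for this illustration.

```python
import os

def first_image(cell):
    """Keep only the first of several ';'-separated image paths."""
    return cell.split(";")[0]

def expand(cell, base_folder):
    """Turn each relative path in the cell into an absolute path."""
    return ";".join(
        os.path.abspath(os.path.join(base_folder, p)) for p in cell.split(";")
    )

# Hypothetical cell value with two photos of the same animal.
cell = "train_images/a-1.jpg;train_images/a-2.jpg"

first = first_image(cell)
full = expand(first, "./ag_petfinder_tutorial/petfinder_processed")

print(first)
print(full)
```

Absolute paths matter because the image models read the files from disk, wherever the notebook's working directory happens to be.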

- Display an example image

example_row = train_data.iloc[1]

example_image = example_row['Images']

from IPython.display import Image, display

pil_img = Image(filename=example_image)

display(pil_img)

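- Subsample the data to quickly find out which models are worth training

# Subsample the data (to learn which models are worth training)

train_data = train_data.sample(5000, random_state=0)

- Extract multimodal feature metadata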

from autogluon.tabular import FeatureMetadata

feature_metadata = FeatureMetadata.from_df(train_data)

print(feature_metadata)

Output:

('float', []) : 1 | ['PhotoAmt']

('int', []) : 19 | ['Type', 'Age', 'Breed1', 'Breed2', 'Gender', ...]

('object', []) : 4 | ['Name', 'RescuerID', 'PetID', 'Images']

('object', ['text']) : 1 | ['Description']

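- Mark the Images column as an image path column (so the trainer can recognize it)

# Mark the Images column as an image path column (so the trainer can recognize it)

feature_metadata = feature_metadata.add_special_types({image_col: ['image_path']})

print(feature_metadata)

Output: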

('float', []) : 1 | ['PhotoAmt']

('int', []) : 19 | ['Type', 'Age', 'Breed1', 'Breed2', 'Gender', ...]

('object', []) : 3 | ['Name', 'RescuerID', 'PetID']

('object', ['image_path']) : 1 | ['Images']

('object', ['text']) : 1 | ['Description']

Auto ML

from autogluon.tabular.configs.hyperparameter_configs import get_hyperparameter_config

# Multimodal training configuration

hyperparameters = get_hyperparameter_config('multimodal')

hyperparameters

- Limit training to at most 8 hours and use GPU acceleration

from autogluon.tabular import TabularPredictor

predictor = TabularPredictor(label=label).fit(

train_data=train_data,

hyperparameters=hyperparameters,

feature_metadata=feature_metadata,

presets = 'best_quality',

time_limit=8*3600,

ag_args_fit={'num_gpus': 1})

Output:

Fitting model: LightGBMLarge_BAG_L1 ... Training model for up to 17493.06s of the 27095.97s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.432 = Validation score (accuracy)

386.71s = Training runtime

1.49s = Validation runtime

Fitting model: TextPredictor_BAG_L1 ... Training model for up to 17096.1s of the 26699.01s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

E1019 05:14:49.161276010 2762 chttp2_transport.cc:1103] Received a GOAWAY with error code ENHANCE_YOUR_CALM and debug data equal to "too_many_pings"

0.3634 = Validation score (accuracy)

3734.32s = Training runtime

18.76s = Validation runtime

Fitting model: ImagePredictor_BAG_L1 ... Training model for up to 13344.84s of the 22947.75s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.3524 = Validation score (accuracy)

4517.97s = Training runtime

28.6s = Validation runtime

Completed 1/20 k-fold bagging repeats ...

Fitting model: WeightedEnsemble_L2 ... Training model for up to 1919.15s of the 18419.43s of remaining time.

0.4578 = Validation score (accuracy)

1.42s = Training runtime

0.0s = Validation runtime

Fitting 6 L2 models ...

Fitting model: LightGBM_BAG_L2 ... Training model for up to 18417.99s of the 18417.59s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4564 = Validation score (accuracy)

199.13s = Training runtime

1.04s = Validation runtime

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 18213.83s of the 18213.57s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4528 = Validation score (accuracy)

140.68s = Training runtime

0.68s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 18067.64s of the 18067.38s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.463 = Validation score (accuracy)

1012.6s = Training runtime

1.73s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 17049.43s of the 17049.16s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4614 = Validation score (accuracy)

355.78s = Training runtime

0.67s = Validation runtime

Fitting model: NeuralNetTorch_BAG_L2 ... Training model for up to 16687.78s of the 16687.5s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4528 = Validation score (accuracy)

62.11s = Training runtime

0.85s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 16621.21s of the 16620.96s of remaining time.

Fitting 8 child models (S1F1 - S1F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4588 = Validation score (accuracy)

698.95s = Training runtime

2.09s = Validation runtime

Repeating k-fold bagging: 2/20

Fitting model: LightGBM_BAG_L2 ... Training model for up to 15917.11s of the 15916.86s of remaining time.

Fitting 8 child models (S2F1 - S2F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4474 = Validation score (accuracy)

361.11s = Training runtime

1.7s = Validation runtime

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 15750.46s of the 15750.21s of remaining time.

Fitting 8 child models (S2F1 - S2F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4566 = Validation score (accuracy)

383.67s = Training runtime

2.51s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 15502.33s of the 15502.08s of remaining time.

Fitting 8 child models (S2F1 - S2F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4534 = Validation score (accuracy)

2029.12s = Training runtime

3.6s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 14480.59s of the 14480.34s of remaining time.

Fitting 8 child models (S2F1 - S2F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4574 = Validation score (accuracy)

713.87s = Training runtime

1.32s = Validation runtime

Fitting model: NeuralNetTorch_BAG_L2 ... Training model for up to 14118.13s of the 14117.89s of remaining time.

Fitting 8 child models (S2F1 - S2F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4494 = Validation score (accuracy)

123.94s = Training runtime

1.64s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 14051.54s of the 14051.05s of remaining time.

Fitting 8 child models (S2F1 - S2F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4516 = Validation score (accuracy)

1290.52s = Training runtime

3.15s = Validation runtime

Repeating k-fold bagging: 3/20

Fitting model: LightGBM_BAG_L2 ... Training model for up to 13454.83s of the 13454.58s of remaining time.

Fitting 8 child models (S3F1 - S3F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4534 = Validation score (accuracy)

565.3s = Training runtime

2.79s = Validation runtime

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 13245.22s of the 13244.89s of remaining time.

Fitting 8 child models (S3F1 - S3F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4552 = Validation score (accuracy)

562.97s = Training runtime

3.64s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 13061.41s of the 13061.16s of remaining time.

Fitting 8 child models (S3F1 - S3F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4538 = Validation score (accuracy)

2989.07s = Training runtime

5.31s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 12096.7s of the 12096.4s of remaining time.

Fitting 8 child models (S3F1 - S3F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4628 = Validation score (accuracy)

1065.02s = Training runtime

1.96s = Validation runtime

Fitting model: NeuralNetTorch_BAG_L2 ... Training model for up to 11741.2s of the 11740.95s of remaining time.

Fitting 8 child models (S3F1 - S3F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4536 = Validation score (accuracy)

191.06s = Training runtime

2.32s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 11669.58s of the 11669.33s of remaining time.

Fitting 8 child models (S3F1 - S3F8) | Fitting with ParallelLocalFoldFittingStrategy

0.455 = Validation score (accuracy)

1921.24s = Training runtime

4.67s = Validation runtime

Repeating k-fold bagging: 4/20

Fitting model: LightGBM_BAG_L2 ... Training model for up to 11032.26s of the 11032.01s of remaining time.

Fitting 8 child models (S4F1 - S4F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4564 = Validation score (accuracy)

779.74s = Training runtime

4.27s = Validation runtime

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 10813.47s of the 10813.22s of remaining time.

Fitting 8 child models (S4F1 - S4F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4564 = Validation score (accuracy)

724.24s = Training runtime

4.74s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 10647.91s of the 10647.64s of remaining time.

Fitting 8 child models (S4F1 - S4F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4544 = Validation score (accuracy)

4043.74s = Training runtime

7.46s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 9588.75s of the 9588.44s of remaining time.

Fitting 8 child models (S4F1 - S4F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4632 = Validation score (accuracy)

1380.01s = Training runtime

2.51s = Validation runtime

Fitting model: NeuralNetTorch_BAG_L2 ... Training model for up to 9269.72s of the 9269.47s of remaining time.

Fitting 8 child models (S4F1 - S4F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4528 = Validation score (accuracy)

249.06s = Training runtime

3.08s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 9207.7s of the 9207.42s of remaining time.

Fitting 8 child models (S4F1 - S4F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4544 = Validation score (accuracy)

2604.8s = Training runtime

6.68s = Validation runtime

Repeating k-fold bagging: 5/20

Fitting model: LightGBM_BAG_L2 ... Training model for up to 8518.63s of the 8518.39s of remaining time.

Fitting 8 child models (S5F1 - S5F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4568 = Validation score (accuracy)

969.05s = Training runtime

5.1s = Validation runtime

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 8324.31s of the 8324.06s of remaining time.

Fitting 8 child models (S5F1 - S5F8) | Fitting with ParallelLocalFoldFittingStrategy

0.46 = Validation score (accuracy)

954.32s = Training runtime

6.73s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 8089.46s of the 8089.21s of remaining time.

Fitting 8 child models (S5F1 - S5F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4526 = Validation score (accuracy)

4982.74s = Training runtime

9.3s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 7145.47s of the 7145.16s of remaining time.

Fitting 8 child models (S5F1 - S5F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4618 = Validation score (accuracy)

1687.99s = Training runtime

3.07s = Validation runtime

Fitting model: NeuralNetTorch_BAG_L2 ... Training model for up to 6833.41s of the 6833.16s of remaining time.

Fitting 8 child models (S5F1 - S5F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4534 = Validation score (accuracy)

311.52s = Training runtime

3.78s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 6766.63s of the 6766.35s of remaining time.

Fitting 8 child models (S5F1 - S5F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4552 = Validation score (accuracy)

3278.15s = Training runtime

8.55s = Validation runtime

Repeating k-fold bagging: 6/20

Fitting model: LightGBM_BAG_L2 ... Training model for up to 6087.9s of the 6087.65s of remaining time.

Fitting 8 child models (S6F1 - S6F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4586 = Validation score (accuracy)

1164.77s = Training runtime

6.08s = Validation runtime

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 5886.76s of the 5886.47s of remaining time.

Fitting 8 child models (S6F1 - S6F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4624 = Validation score (accuracy)

1100.85s = Training runtime

7.32s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 5735.64s of the 5735.38s of remaining time.

Fitting 8 child models (S6F1 - S6F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4518 = Validation score (accuracy)

5980.75s = Training runtime

11.3s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 4732.41s of the 4732.16s of remaining time.

Fitting 8 child models (S6F1 - S6F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4578 = Validation score (accuracy)

1990.26s = Training runtime

3.59s = Validation runtime

Fitting model: NeuralNetTorch_BAG_L2 ... Training model for up to 4425.39s of the 4425.12s of remaining time.

Fitting 8 child models (S6F1 - S6F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4546 = Validation score (accuracy)

377.89s = Training runtime

4.44s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 4354.59s of the 4354.33s of remaining time.

Fitting 8 child models (S6F1 - S6F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4524 = Validation score (accuracy)

3901.7s = Training runtime

10.22s = Validation runtime

Repeating k-fold bagging: 7/20

Fitting model: LightGBM_BAG_L2 ... Training model for up to 3725.6s of the 3725.34s of remaining time.

Fitting 8 child models (S7F1 - S7F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4596 = Validation score (accuracy)

1347.21s = Training runtime

6.95s = Validation runtime

Fitting model: LightGBMXT_BAG_L2 ... Training model for up to 3538.96s of the 3538.7s of remaining time.

Fitting 8 child models (S7F1 - S7F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4622 = Validation score (accuracy)

1247.92s = Training runtime

7.96s = Validation runtime

Fitting model: CatBoost_BAG_L2 ... Training model for up to 3387.65s of the 3387.4s of remaining time.

Fitting 8 child models (S7F1 - S7F8) | Fitting with ParallelLocalFoldFittingStrategy

0.452 = Validation score (accuracy)

6844.92s = Training runtime

13.13s = Validation runtime

Fitting model: XGBoost_BAG_L2 ... Training model for up to 2519.12s of the 2518.87s of remaining time.

Fitting 8 child models (S7F1 - S7F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4578 = Validation score (accuracy)

2340.68s = Training runtime

4.2s = Validation runtime

Fitting model: NeuralNetTorch_BAG_L2 ... Training model for up to 2164.52s of the 2164.27s of remaining time.

Fitting 8 child models (S7F1 - S7F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4576 = Validation score (accuracy)

441.27s = Training runtime

5.19s = Validation runtime

Fitting model: LightGBMLarge_BAG_L2 ... Training model for up to 2097.19s of the 2096.93s of remaining time.

Fitting 8 child models (S7F1 - S7F8) | Fitting with ParallelLocalFoldFittingStrategy

0.4582 = Validation score (accuracy)

4558.04s = Training runtime

11.89s = Validation runtime

Completed 7/20 k-fold bagging repeats ...

Fitting model: WeightedEnsemble_L3 ... Training model for up to 1841.8s of the 1436.21s of remaining time.

0.4694 = Validation score (accuracy)

0.93s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 27364.79s ... Best model: "WeightedEnsemble_L3"

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("AutogluonModels/ag-20221019_043937/")

Prediction

- Check how each model performs on the test set

leaderboard = predictor.leaderboard(test_data)

model score_test score_val pred_time_test pred_time_val fit_time pred_time_test_marginal pred_time_val_marginal fit_time_marginal stack_level can_infer fit_order

0 CatBoost_BAG_L2 0.440147 0.4520 311.027459 67.010538 17144.274338 3.961333 13.129473 6844.922442 2 True 12

1 NeuralNetTorch_BAG_L2 0.439146 0.4576 313.881686 59.070805 10740.619594 6.815560 5.189740 441.267699 2 True 14

2 WeightedEnsemble_L3 0.438146 0.4694 356.545686 84.355455 21175.077252 0.013631 0.000960 0.931303 3 True 16

3 LightGBMXT_BAG_L2 0.435145 0.4622 331.060749 61.838714 11547.272638 23.994623 7.957649 1247.920743 2 True 11

4 WeightedEnsemble_L2 0.434478 0.4578 301.874017 52.586521 10151.541209 0.006723 0.001295 1.415491 2 True 9

5 LightGBM_BAG_L2 0.431811 0.4596 328.935630 60.831540 11646.558565 21.869503 6.950476 1347.206670 2 True 10

6 LightGBMLarge_BAG_L2 0.431811 0.4582 345.884833 65.766117 14857.395032 38.818707 11.885052 4558.043137 2 True 15

7 XGBoost_BAG_L2 0.429810 0.4578 321.760539 58.077633 12640.035064 14.694413 4.196568 2340.683169 2 True 13

8 LightGBMLarge_BAG_L1 0.426475 0.4320 6.381093 1.489012 386.712939 6.381093 1.489012 386.712939 1 True 6

9 LightGBM_BAG_L1 0.423474 0.4302 2.985550 0.803576 132.148328 2.985550 0.803576 132.148328 1 True 1

10 XGBoost_BAG_L1 0.421140 0.4302 2.731979 0.632295 190.459603 2.731979 0.632295 190.459603 1 True 4

11 CatBoost_BAG_L1 0.420140 0.4452 0.933754 1.721086 1114.353101 0.933754 1.721086 1114.353101 1 True 3

12 LightGBMXT_BAG_L1 0.410470 0.4126 5.198832 1.295838 149.226177 5.198832 1.295838 149.226177 1 True 2

13 NeuralNetTorch_BAG_L1 0.397799 0.4078 0.728582 0.579873 74.164125 0.728582 0.579873 74.164125 1 True 5

14 TextPredictor_BAG_L1 0.370457 0.3634 96.319121 18.761692 3734.320718 96.319121 18.761692 3734.320718 1 True 7

15 ImagePredictor_BAG_L1 0.350784 0.3524 191.787215 28.597692 4517.966904 191.787215 28.597692 4517.966904 1 True 8
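One detail in this leaderboard is worth reading closely: the model with the best validation score is not the one with the best test score. The sketch below copies the top five rows from the table above and compares the two rankings.

```python
# model: (score_test, score_val), copied from the leaderboard above
scores = {
    "CatBoost_BAG_L2": (0.440147, 0.4520),
    "NeuralNetTorch_BAG_L2": (0.439146, 0.4576),
    "WeightedEnsemble_L3": (0.438146, 0.4694),
    "LightGBMXT_BAG_L2": (0.435145, 0.4622),
    "WeightedEnsemble_L2": (0.434478, 0.4578),
}

best_on_val = max(scores, key=lambda m: scores[m][1])
best_on_test = max(scores, key=lambda m: scores[m][0])

# Gap between validation and test accuracy for each model.
gap = {m: round(val - test, 4) for m, (test, val) in scores.items()}

print(best_on_val, best_on_test)
print(gap)
```

The differences on the test set are small (about 0.2 percentage points between first and third place), so the ensemble selected on validation accuracy is still a reasonable choice; the gap column mainly shows that validation scores are somewhat optimistic for every model.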

- Output the predictions for the test set

y_pred = predictor.predict(test_data)

y_pred

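Conclusion

AutoGluon is a very powerful AutoML package, but it correspondingly costs more than 10 times the compute of manual tuning. For tasks where you already have some experience, hand-tuning may be the better choice and allows fast deployment. The AutoGluon website has many more examples, such as text (NLP) prediction, object detection, and time series; for reasons of length I won't go into them here, and I will update this post when I have time.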