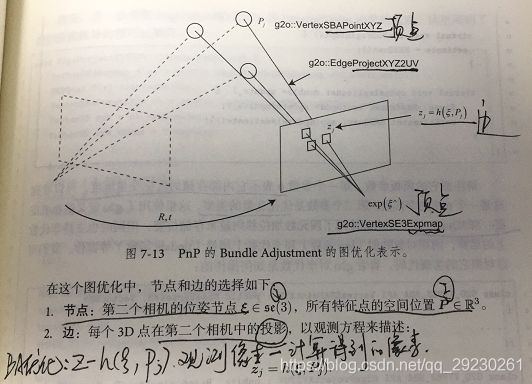

VSLAM Algorithms (2): Solving the 3D-2D Camera Pose with PnP, and BA Optimization

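The BA optimization model is as follows: the optimization variables (vertices) are the spatial positions P and the camera pose; the edges are the pixel coordinates of P projected onto the camera image plane.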

// Call OpenCV's PnP solver; EPNP, DLS, and other methods can be selected

solvePnP ( pts_3d, pts_2d, K, Mat(), r, t, false );

cv::Rodrigues ( r, R ); // r is a rotation vector; the Rodrigues formula converts it to a rotation matrix
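For readers who want to see what the conversion does, the following is a minimal sketch of the Rodrigues formula in plain C++, without OpenCV. The function name `rotationVectorToMatrix` and the `Vec3`/`Mat3` aliases are assumptions made for this illustration; in real code `cv::Rodrigues` should be used.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Convert a rotation vector r = theta * n (unit axis n, angle theta) to a matrix:
// R = cos(theta) * I + (1 - cos(theta)) * n * n^T + sin(theta) * [n]x
Mat3 rotationVectorToMatrix(const Vec3& r) {
    Mat3 R{};  // zero-initialized
    const double theta = std::sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    if (theta < 1e-12) {  // no rotation: return the identity
        R[0][0] = R[1][1] = R[2][2] = 1.0;
        return R;
    }
    const Vec3 n = {r[0] / theta, r[1] / theta, r[2] / theta};

    // Skew-symmetric matrix [n]x of the rotation axis.
    Mat3 skew{};
    skew[0][1] = -n[2]; skew[0][2] =  n[1];
    skew[1][0] =  n[2]; skew[1][2] = -n[0];
    skew[2][0] = -n[1]; skew[2][1] =  n[0];

    const double c = std::cos(theta);
    const double s = std::sin(theta);
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            R[i][j] = (i == j ? c : 0.0) + (1.0 - c) * n[i] * n[j] + s * skew[i][j];
        }
    }
    return R;
}
```

For example, the rotation vector (0, 0, π/2) yields a 90-degree rotation about the z axis, which maps the x axis onto the y axis.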

void bundleAdjustment (

const vector< Point3f > points_3d,

const vector< Point2f > points_2d,

const Mat& K,

Mat& R, Mat& t )

{

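// Initialize g2o

typedef g2o::BlockSolver< g2o::BlockSolverTraits<6,3> > Block; // pose dimension is 6, landmark dimension is 3

//Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // linear solver (raw-pointer form used by older g2o versions)

std::unique_ptr<Block::LinearSolverType> linearSolver ( new g2o::LinearSolverCSparse<Block::PoseMatrixType>() ); // linear solver

//Block* solver_ptr = new Block ( linearSolver ); // block solver (raw-pointer form used by older g2o versions)

//std::unique_ptr<Block> solver_ptr ( new Block ( linearSolver));

std::unique_ptr<Block> solver_ptr ( new Block ( std::move(linearSolver) ) ); // block solver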

//g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( solver_ptr );

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( std::move(solver_ptr));

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm ( solver );

// Declare the pose optimization model

// vertex

g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap(); // camera pose

Eigen::Matrix3d R_mat;

R_mat <<

R.at<double> ( 0,0 ), R.at<double> ( 0,1 ), R.at<double> ( 0,2 ),

R.at<double> ( 1,0 ), R.at<double> ( 1,1 ), R.at<double> ( 1,2 ),

R.at<double> ( 2,0 ), R.at<double> ( 2,1 ), R.at<double> ( 2,2 );

pose->setId ( 0 );

pose->setEstimate ( g2o::SE3Quat (

R_mat,

Eigen::Vector3d ( t.at<double> ( 0,0 ), t.at<double> ( 1,0 ), t.at<double> ( 2,0 ) )

) );

optimizer.addVertex ( pose );

// Add the 3D landmark points

int index = 1;

for ( const Point3f p:points_3d ) // landmarks

{

g2o::VertexSBAPointXYZ* point = new g2o::VertexSBAPointXYZ();

point->setId ( index++ );

point->setEstimate ( Eigen::Vector3d ( p.x, p.y, p.z ) );

point->setMarginalized ( true ); // g2o requires marg to be set here; see Lecture 10

optimizer.addVertex ( point );

}

// Add the camera parameters (camera intrinsics)

g2o::CameraParameters* camera = new g2o::CameraParameters (

K.at<double> ( 0,0 ), Eigen::Vector2d ( K.at<double> ( 0,2 ), K.at<double> ( 1,2 ) ), 0

);

camera->setId ( 0 );

optimizer.addParameter ( camera );

// Add the edges

index = 1;

for ( const Point2f p:points_2d )

{

g2o::EdgeProjectXYZ2UV* edge = new g2o::EdgeProjectXYZ2UV();

edge->setId ( index );

edge->setVertex ( 0, dynamic_cast<g2o::VertexSBAPointXYZ*> ( optimizer.vertex ( index ) ) );

edge->setVertex ( 1, pose );

edge->setMeasurement ( Eigen::Vector2d ( p.x, p.y ) );

edge->setParameterId ( 0,0 );

edge->setInformation ( Eigen::Matrix2d::Identity() );

optimizer.addEdge ( edge );

index++;

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.setVerbose ( true );

optimizer.initializeOptimization();

optimizer.optimize ( 100 );

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

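chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>> ( t2-t1 );

cout<<"optimization costs time: "<<time_used.count()<<" seconds."<<endl;

}

solvePnP function prototype:

bool solvePnP(InputArray objectPoints,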

InputArray imagePoints,

InputArray cameraMatrix,

InputArray distCoeffs,

OutputArray rvec,

OutputArray tvec,

bool useExtrinsicGuess=false,

int flags = CV_ITERATIVE

)

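Parameters:

objectPoints: 3D point coordinates in the world coordinate system, here in mm (tvec is returned in the same unit)

imagePoints: the corresponding point coordinates in the image coordinate system, in pixels

cameraMatrix: the camera intrinsic matrix

distCoeffs: the distortion coefficients

rvec: the output rotation, given as a rotation vector; convert it to a rotation matrix with Rodrigues

tvec: the output translation vector

useExtrinsicGuess: whether to use the values passed in rvec and tvec as the initial guess for the iterative method and refine them; defaults to false

flags: SOLVEPNP_ITERATIVE, SOLVEPNP_P3P, SOLVEPNP_EPNP, SOLVEPNP_DLS, SOLVEPNP_UPNP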

----------------------------------------------------------------------------------------

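objectPoints - coordinates of the control points in the world coordinate system; a vector<Point3f> can be used here

imagePoints - coordinates of the corresponding control points in the image coordinate system; a vector<Point2f> can be used here

cameraMatrix - the camera intrinsic matrix

distCoeffs - the camera distortion coefficients

These two parameters (cameraMatrix and distCoeffs) are obtained through camera calibration. For calibrating the camera intrinsics, see: http://www.cnblogs.com/star91/p/6012425.html

rvec - the output rotation vector. It rotates points from the world coordinate system into the camera coordinate system

tvec - the output translation vector. It translates points from the world coordinate system into the camera coordinate system

flags - defaults to the iterative method, CV_ITERATIVE (named SOLVEPNP_ITERATIVE in OpenCV 3 and later)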

Author: 喻茸sophie

Link: https://www.jianshu.com/p/b97406d8833c

// Look up the value of d1 at the pixel of the query keypoint of match m

ushort d = d1.ptr<unsigned short> ( int ( keypoints_1[m.queryIdx].pt.y ) ) [ int ( keypoints_1[m.queryIdx].pt.x ) ];

/*

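* m is of type DMatch

* DMatch is a struct that stores match information: query is the descriptor being matched, train is the descriptor it is matched against;

* When matching in OpenCV:

* void DescriptorMatcher::match( const Mat& queryDescriptors,

const Mat& trainDescriptors,

vector<DMatch>& matches,

const Mat& mask

) const

* the first descriptor argument of match is the query descriptor and the second is the train descriptor

* For example, with 20 query descriptors and 30 train descriptors, the vector<DMatch> filled by DescriptorMatcher::match has size 20

* If the two are swapped, the vector has size 30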

*/

struct CV_EXPORTS_W_SIMPLE DMatch

{

// Default constructor; FLT_MAX is the largest finite float value (not infinity)

//#define FLT_MAX 3.402823466e+38F /* max value */

CV_WRAP DMatch() : queryIdx(-1), trainIdx(-1), imgIdx(-1), distance(FLT_MAX) {}

// DMatch constructor

CV_WRAP DMatch( int _queryIdx, int _trainIdx, float _distance ) :

queryIdx(_queryIdx), trainIdx(_trainIdx), imgIdx(-1), distance(_distance) {}

// DMatch constructor (also sets the image index)

CV_WRAP DMatch( int _queryIdx, int _trainIdx, int _imgIdx, float _distance ) :

queryIdx(_queryIdx), trainIdx(_trainIdx), imgIdx(_imgIdx), distance(_distance) {}

// queryIdx is the index of the query descriptor, the first descriptor argument of match

CV_PROP_RW int queryIdx; // query descriptor index

// trainIdx is the index of the train descriptor, the second descriptor argument of match

CV_PROP_RW int trainIdx; // train descriptor index

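// imgIdx is the index of the image being matched against

// For example, when the SIFT descriptors of one image are matched against those of ten other images to find the most similar image, imgIdx becomes useful.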

CV_PROP_RW int imgIdx; // train image index

// distance is the distance between the two descriptors

CV_PROP_RW float distance;

// DMatch overloads operator< to compare distance: true if this distance is smaller, false otherwise

// less is better

bool operator<( const DMatch &m ) const

{

return distance < m.distance;

}

};
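Since operator< compares distance, a list of matches can be sorted directly so that the best matches come first. The sketch below shows one common way to use this: sort, then keep only matches within a threshold derived from the smallest distance. `Match` is a hypothetical stand-in for cv::DMatch so that the example compiles without OpenCV, and `filterMatches`, `ratio`, and `floorDist` are assumptions for this illustration, not OpenCV API.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical stand-in for cv::DMatch with the same comparison semantics.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
    bool operator<(const Match& m) const { return distance < m.distance; }
};

// Keep the matches whose distance is at most max(ratio * minDistance, floorDist).
// The floor guards against an overly strict threshold when minDistance is tiny.
std::vector<Match> filterMatches(std::vector<Match> matches, float ratio, float floorDist) {
    if (matches.empty()) {
        return matches;
    }
    std::sort(matches.begin(), matches.end());  // uses operator<: smallest distance first
    const float threshold = std::max(ratio * matches.front().distance, floorDist);

    std::vector<Match> good;
    for (const Match& m : matches) {
        if (m.distance <= threshold) {
            good.push_back(m);
        }
    }
    return good;
}
```

The values for `ratio` and `floorDist` are empirical and depend on the descriptor type, so they are left as parameters here.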

Original: https://blog.csdn.net/robinhjwy/article/details/77801950