Face Liveness Detection and Face Attribute Recognition with TensorFlow: Summary with Code (Continuously Updated)


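Face Detection with TensorFlow: Summary with Code (Continuously Updated)

Face Matching with TensorFlow: Summary with Code (Continuously Updated)

Face Alignment and Facial Landmark Detection with TensorFlow: Summary with Code (Continuously Updated)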

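I recently used the open face datasets WIDER FACE, 64_CASIA-FaceV5, CelebA, and 300W_LP, plus a private dataset of nearly 200 photos that I collected myself, to reproduce face detection, face matching, face alignment, facial landmark detection, liveness detection, and face attribute recognition, and integrated them into a WeChat mini program. All of this will be summarized in about five blog posts. Download links for all datasets are listed below, and the complete code is available via the GitHub link.

WIDER FACE contains 32,203 images and 393,703 labeled faces, with wide variation in scale, pose, occlusion, expression, makeup, and illumination.

LFW is a commonly used test set for face recognition. Its face images all come from natural, real-life scenes, which makes recognition harder: pose, illumination, expression, age, and occlusion can make photos of the same person look very different. Some photos contain more than one face; for these, only the face at the image center is treated as the target, and the other regions are treated as background interference. LFW has 13,233 face images, each labeled with the person's name, covering 5,749 people, most of whom have only one image. Every image is 250×250 pixels. Most are in color, but a few are black and white.

64_CASIA-FaceV5 contains 2,500 Asian face images from 500 people.

CelebA contains 202,599 face images of 10,177 celebrity identities. Every image is annotated with a face bounding box, the coordinates of 5 facial landmarks, and 40 attribute labels. It is widely used for face-related computer vision training tasks, such as face attribute recognition, face detection, and landmark localization.

300W_LP was built by the 3DDFA team from existing 2D face alignment datasets (AFW, IBUG, HELEN, LFPW). The 3DMM annotations were obtained by 3DMM fitting, and the images were further varied in pose, illumination, and color and flipped (mirrored), which yields a large-pose 3D face alignment dataset.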

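Contents

- 1. Liveness detection: business overview

- 2. Liveness detection methods

- 2.1 Traditional methods

- 2.2 Deep learning

- 3. Liveness detection challenges and solutions

- 4. Face attributes: business overview

- 5. Face attribute methods

- 6. Hands-on: face attribute recognition with a multi-task network

- 6.1 Building the face attribute recognition model

- 6.2 Training and testing the face attribute recognition model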

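1. Liveness detection: business overview

■What is liveness detection?

♦It prevents attackers from forging or stealing another person's biometric features and using them for identity authentication;

♦It determines whether the submitted biometric features come from a living individual;

■How is a face verified as live?

♦The user is asked to perform actions such as turning the face left or right, opening the mouth, or blinking;

2. Liveness detection methods

2.1 Traditional methods

■Methods based on visual features of the face:

♦Color and texture analysis: classified with an SVM;

♦Material (skin, paper, mirror surface): discriminates using the differences between a real face and a photo;

♦Frame-difference information: works on video sequences and uses the difference between frame N and frame N+1 for the decision;

♦Optical flow: used for motion detection, finding the direction and trajectory of moving objects;

♦Face shape change: combined with frame-difference information to judge differences in the face contour;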
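The frame-difference idea in the list above can be sketched in a few lines of NumPy. This is only an illustration, not the method used later in this post: the function names and the threshold value are assumptions, and a real system would work on aligned face crops rather than whole frames.

```python
import numpy as np


def frame_difference_score(prev_frame, next_frame):
    """Mean absolute pixel difference between two same-sized grayscale frames."""
    prev = np.asarray(prev_frame, dtype=np.float32)
    nxt = np.asarray(next_frame, dtype=np.float32)
    if prev.shape != nxt.shape:
        raise ValueError("frames must have the same shape")
    return float(np.mean(np.abs(nxt - prev)))


def has_motion(frames, threshold=2.0):
    """Return True if any pair of consecutive frames differs by more than threshold.

    threshold is a hypothetical value; it would need tuning on real data.
    """
    return any(
        frame_difference_score(a, b) > threshold
        for a, b in zip(frames[:-1], frames[1:])
    )


# A static "photo" sequence versus a sequence in which a region changes.
static = [np.full((4, 4), 100, dtype=np.uint8) for _ in range(3)]
moving = [f.copy() for f in static]
moving[1][:2, :] = 140  # the top half gets brighter in the middle frame

print(has_motion(static), has_motion(moving))
```

Converting to float before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would otherwise produce.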

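2.2 Deep learning

♦Multi-frame sequence analysis: CNN + LSTM;

♦Face depth map: analyzes the differences between real and spoofed faces;

♦3D landmarks: reconstruct 3D information about the face;

♦Multi-task network: face detection + face classification (real vs. fake face);

■Landmark-based face liveness detection:

Input consecutive face frames → landmark localization → action detection → detection complete

Checks performed in action detection:

1. Mouth-open detection

♦Distance between the upper-mouth and lower-mouth keypoints > threshold

2. Blink detection

♦Left eye: distance between the upper-eyelid and lower-eyelid keypoints compared against a threshold (above it the eye is open; a blink is a brief drop below it)

♦Right eye: distance between the upper-eyelid and lower-eyelid keypoints compared against a threshold in the same way

3. Head-shake / nod detection

♦Left (or top) face-boundary keypoint − right (or bottom) face-boundary keypoint > threshold

♦Right (or top) face-boundary keypoint − left (or bottom) face-boundary keypoint > threshold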
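The threshold checks above can be sketched as follows, assuming the landmarks arrive as a NumPy array of shape (68, 2) in the 68-point layout used by Dlib's shape predictor. The index constants, the thresholds, and the normalization by eye or mouth width (added so the value does not depend on face size) are assumptions of this sketch, not part of the original description.

```python
import numpy as np

# 0-based indices in the 68-point landmark layout.
RIGHT_EYE = [36, 37, 38, 39, 40, 41]
LEFT_EYE = [42, 43, 44, 45, 46, 47]
INNER_LIP_TOP, INNER_LIP_BOTTOM = 62, 66
MOUTH_LEFT, MOUTH_RIGHT = 48, 54


def eye_openness(landmarks, eye_idx):
    """Vertical eyelid gap divided by eye width (independent of face scale)."""
    p = landmarks[eye_idx]
    vertical = np.linalg.norm(p[1] - p[5]) + np.linalg.norm(p[2] - p[4])
    horizontal = np.linalg.norm(p[0] - p[3])
    return vertical / (2.0 * horizontal)


def mouth_openness(landmarks):
    """Inner-lip gap divided by mouth width."""
    gap = np.linalg.norm(landmarks[INNER_LIP_TOP] - landmarks[INNER_LIP_BOTTOM])
    width = np.linalg.norm(landmarks[MOUTH_LEFT] - landmarks[MOUTH_RIGHT])
    return gap / width


def detect_blink(openness_per_frame, threshold=0.2):
    """True if the eye goes open -> closed -> open across the frames.

    threshold is a hypothetical value that would need tuning.
    """
    was_open, closed_seen = False, False
    for value in openness_per_frame:
        if value > threshold:
            if was_open and closed_seen:
                return True
            was_open = True
        elif was_open:
            closed_seen = True
    return False
```

In use, `eye_openness` would be computed for every frame of the input sequence and the resulting list passed to `detect_blink`; mouth opening can be checked the same way with `mouth_openness`.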

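3. Liveness detection challenges and solutions

1. Spoofing (mirrors, videos): telling a live face from a photo

2. Liveness detection in constrained scenarios

■Mainstream techniques that address these problems:

♦1. 3D landmarks: landmark detection extracts feature points from the face; the Dlib library provides a 68-point face landmark model. Landmark points can be used to extract the eye and mouth regions for fatigue detection, and points such as the nose can be used for 3D pose estimation. See: "An analysis of the landmark algorithm in the Dlib library"

♦2. End-to-end liveness detection: these algorithms mainly analyze video content. By cutting down manual preprocessing and post-processing, the model maps the raw input to the final output as directly as possible, which gives it more room to adapt to the data and improves its overall fit. End-to-end learning aims for a global optimum; local optima in intermediate stages do not imply that the whole pipeline is optimal. See: "Understanding end2end in deep learning"

♦3. Tracking: add object tracking to the pipeline. For a list of tracking algorithms, see: "What are the classic object tracking algorithms in computer vision?"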

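4. Face attributes: business overview

■What are face attributes?

♦Face attribute recognition means inferring properties such as gender, age, and expression from a given face;

■What kind of model should be built: classification or regression?

♦It depends on whether the desired result is discrete or continuous. Questions such as "is the person young?" or "is the person wearing glasses?" call for a classification model, whereas predicting the exact age in years calls for a regression model.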

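5. Face attribute methods

Pipeline: image → CNN → [gender, eyeglasses, young, smiling]

Difficulties in face attribute recognition:

Differences in ethnicity, age, gender, and so on can cause the model to misclassify

6. Hands-on: face attribute recognition with a multi-task network

6.1 Building the face attribute recognition model

To predict several attributes at once, a multi-task classification model is used: each attribute has its own loss function, and the losses are back-propagated together. The idea is illustrated in the figure below:

The experiment uses the CelebA dataset. The original dataset has 40 attributes; four of them are selected here: [male, eyeglasses, young, smile].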
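As a rough illustration of "one loss per attribute", the NumPy sketch below computes a softmax cross-entropy for each of the four attribute heads and adds them up. The equal weighting and the helper names are assumptions made for this example; the TensorFlow code later in this post builds the same kind of sum with `tf.losses.sparse_softmax_cross_entropy`.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy for integer class labels; logits has shape (batch, classes)."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


def multi_task_loss(logits_per_task, labels_per_task):
    """Sum of the per-attribute losses; every task is weighted equally here."""
    return sum(
        softmax_cross_entropy(logits_per_task[name], labels_per_task[name])
        for name in logits_per_task
    )


tasks = ["Eyeglasses", "Male", "Young", "Smiling"]
# Uninformative logits (all zeros) for a batch of 2: each head then costs ln(2).
logits = {name: np.zeros((2, 2)) for name in tasks}
labels = {name: np.array([0, 1]) for name in tasks}
total = multi_task_loss(logits, labels)
print(total)
```

Because the heads share one backbone, the gradient of this sum updates the shared features with signals from all four attributes at once.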

With the overall multi-task model design settled, the next step is data preparation. The key code is as follows:

import cv2
import dlib
import tensorflow as tf

anno_file = "CelebA/Anno/list_attr_celeba.txt"

# Line 0 holds the image count, line 1 the 40 attribute names,
# and every following line describes one image.
with open(anno_file) as ff:
    anno_info = ff.readlines()

# split() without arguments collapses repeated spaces and drops the newline.
attribute_class = anno_info[1].split()
print(attribute_class)

# Print the 0-based position of the four attributes used here.
# In a data row, column 0 is the file name, so each attribute sits at position + 1:
# Eyeglasses -> 16, Male -> 21, Smiling -> 32, Young -> 40.
for idx, name in enumerate(attribute_class):
    if name in ("Eyeglasses", "Male", "Young", "Smiling"):
        print(name, idx)

writer_train = tf.python_io.TFRecordWriter("train.tfrecords")
writer_test = tf.python_io.TFRecordWriter("test.tfrecords")

detector = dlib.get_frontal_face_detector()


def int64_feature(value):
    """Wrap one attribute value (-1 or 1 in CelebA) as an int64 feature."""
    return tf.train.Feature(int64_list=tf.train.Int64List(value=[int(value)]))


for idx in range(2, len(anno_info)):
    attr_val = anno_info[idx].split()
    print(attr_val[0], attr_val[16], attr_val[21], attr_val[32], attr_val[40])

    im_data = cv2.imread("CelebA/Img/img_celeba.7z/img_celeba/" + attr_val[0])
    if im_data is None:  # missing or unreadable image
        continue

    rects = detector(im_data, 0)
    if len(rects) == 0:
        continue

    x1 = rects[0].left()
    y1 = rects[0].top()
    x2 = rects[0].right()
    y2 = rects[0].bottom()
    # Extend the box upward by 30% of its height to include the forehead.
    y1 = int(max(y1 - 0.3 * (y2 - y1), 0))

    # Sanity check on the face box, a simple form of data cleaning.
    if y2 - y1 < 50 or x2 - x1 < 50 or x1 < 0 or y1 < 0:
        continue

    im_data = im_data[y1:y2, x1:x2]
    im_data = cv2.resize(im_data, (128, 128))

    ex = tf.train.Example(features=tf.train.Features(feature={
        "image": tf.train.Feature(
            bytes_list=tf.train.BytesList(value=[im_data.tobytes()])),
        "Eyeglasses": int64_feature(attr_val[16]),
        "Male": int64_feature(attr_val[21]),
        # The original listing read Young from column 32 and Smiling from column 40,
        # which swaps the two labels; the file stores Smiling at 32 and Young at 40.
        "Young": int64_feature(attr_val[40]),
        "Smiling": int64_feature(attr_val[32]),
    }))

    # The last 5% of the annotation lines go to the test set.
    if idx > len(anno_info) * 0.95:
        writer_test.write(ex.SerializeToString())
    else:
        writer_train.write(ex.SerializeToString())

writer_train.close()
writer_test.close()
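The column numbers in the script above are hard-coded, which is easy to get wrong. A small sketch of looking the columns up by name instead is shown below. The helper names are hypothetical, and the header and row are made-up stand-ins that only mimic the layout of `list_attr_celeba.txt` (attribute names on one line, then a file name followed by -1/1 values).

```python
SELECTED = ["Eyeglasses", "Male", "Young", "Smiling"]


def parse_header(header_line):
    """Map attribute name -> column index in a data row (column 0 is the file name)."""
    names = header_line.split()
    return {name: i + 1 for i, name in enumerate(names)}


def parse_row(row_line, columns, selected=SELECTED):
    """Return (file_name, {attribute: 0 or 1}) for one annotation row."""
    fields = row_line.split()  # split() collapses runs of spaces and drops "\n"
    # CelebA stores -1 / 1; (v + 1) // 2 maps that to 0 / 1.
    labels = {name: (int(fields[columns[name]]) + 1) // 2 for name in selected}
    return fields[0], labels


# Tiny made-up header and row, not real CelebA data.
header = "Bald Eyeglasses Male Smiling Young\n"
row = "000001.jpg -1  1 -1  1  1\n"
columns = parse_header(header)
name, labels = parse_row(row, columns)
print(name, labels)
```

The same `(v + 1) // 2` mapping appears later in the input pipeline, where it is applied to the stored labels when a batch is read.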

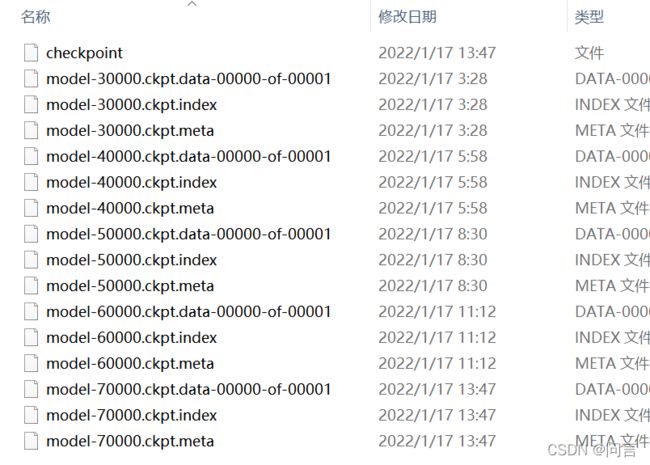

After the code runs successfully, two .tfrecords files are saved, as shown in the figure:

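6.2 Training and testing the face attribute recognition model

With the data packed, the next step is to build the CNN model for training. The key code is as follows: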

import tensorflow as tf
import tensorflow.contrib.slim as slim
# Assumption: the original excerpt does not show where inception_v3_base comes from;
# the implementation bundled with tf.contrib.slim is used here.
from tensorflow.contrib.slim.python.slim.nets.inception_v3 import inception_v3_base


def inception_v3(images, drop_out, is_training=True):
    batch_norm_params = {
        "is_training": is_training,
        "trainable": True,
        "decay": 0.9997,
        "epsilon": 0.00001,
        "variables_collections": {
            "beta": None,
            "gamma": None,
            "moving_mean": ["moving_vars"],
            "moving_variance": ["moving_vars"],
        },
    }
    weights_regularizer = tf.contrib.layers.l2_regularizer(0.00004)

    with slim.arg_scope(
            [slim.conv2d, slim.fully_connected],
            weights_regularizer=weights_regularizer,
            trainable=True):
        with slim.arg_scope(
                [slim.conv2d],
                weights_initializer=tf.truncated_normal_initializer(stddev=0.1),
                activation_fn=tf.nn.relu,
                normalizer_fn=slim.batch_norm,
                normalizer_params=batch_norm_params):
            nets, endpoints = inception_v3_base(images)
            print(nets)
            print(endpoints)
            # Global average pooling over height and width (NHWC layout).
            net = tf.reduce_mean(nets, axis=[1, 2])
            # In TF 1.x the second argument of tf.nn.dropout is the keep probability.
            net = tf.nn.dropout(net, drop_out, name="droplast")
            net = slim.flatten(net, scope="flatten")
            # One 2-way classification head per attribute.
            net_eyeglasses = slim.fully_connected(net, 2, activation_fn=None)
            net_male = slim.fully_connected(net, 2, activation_fn=None)
            net_young = slim.fully_connected(net, 2, activation_fn=None)
            net_smiling = slim.fully_connected(net, 2, activation_fn=None)
    return net_eyeglasses, net_male, net_young, net_smiling


# Inputs and one loss per attribute
input_x = tf.placeholder(tf.float32, shape=[None, 128, 128, 3])
label_eyeglasses = tf.placeholder(tf.int64, shape=[None, 1])
label_young = tf.placeholder(tf.int64, shape=[None, 1])
label_male = tf.placeholder(tf.int64, shape=[None, 1])
label_smiling = tf.placeholder(tf.int64, shape=[None, 1])

# Unpack in the order returned by inception_v3 (eyeglasses, male, young, smiling);
# the original listing swapped the male and young heads here.
logits_eyeglasses, logits_male, logits_young, logits_smiling = inception_v3(input_x, 0.5, True)

loss_eyeglasses = tf.losses.sparse_softmax_cross_entropy(labels=label_eyeglasses,
                                                         logits=logits_eyeglasses)
loss_young = tf.losses.sparse_softmax_cross_entropy(labels=label_young,
                                                    logits=logits_young)
loss_male = tf.losses.sparse_softmax_cross_entropy(labels=label_male,
                                                   logits=logits_male)
loss_smiling = tf.losses.sparse_softmax_cross_entropy(labels=label_smiling,
                                                      logits=logits_smiling)

# Turn the logits into class probabilities for prediction.
logits_eyeglasses = tf.nn.softmax(logits_eyeglasses)
logits_young = tf.nn.softmax(logits_young)
logits_male = tf.nn.softmax(logits_male)
logits_smiling = tf.nn.softmax(logits_smiling)

loss = loss_eyeglasses + loss_male + loss_young + loss_smiling
reg_set = tf.get_collection(tf.GraphKeys.REGULARIZATION_LOSSES)
l2_loss = tf.add_n(reg_set)

# Learning rate and optimizer. The step counter must not be trainable.
global_step = tf.Variable(0, trainable=False)
lr = tf.train.exponential_decay(0.0001, global_step,
                                decay_steps=1000,
                                decay_rate=0.98,
                                staircase=False)

# Run the batch-norm moving-average updates together with each training step.
update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
with tf.control_dependencies(update_ops):
    train_op = tf.train.AdamOptimizer(lr).minimize(loss + l2_loss, global_step)


def get_one_batch(batch_size, type):
    # type == 0 reads the training set, anything else the test set.
    if type == 0:
        file_list = tf.gfile.Glob("train.tfrecords")
    else:
        file_list = tf.gfile.Glob("test.tfrecords")
    reader = tf.TFRecordReader()
    file_queue = tf.train.string_input_producer(
        file_list, num_epochs=None, shuffle=True
    )
    _, se = reader.read(file_queue)
    if type == 0:
        batch = tf.train.shuffle_batch([se], batch_size, capacity=batch_size,
                                       min_after_dequeue=batch_size // 2)
    else:
        batch = tf.train.batch([se], batch_size, capacity=batch_size)

    features = tf.parse_example(batch, features={
        "image": tf.FixedLenFeature([], tf.string),
        "Eyeglasses": tf.FixedLenFeature([1], tf.int64),
        "Male": tf.FixedLenFeature([1], tf.int64),
        "Young": tf.FixedLenFeature([1], tf.int64),
        "Smiling": tf.FixedLenFeature([1], tf.int64),
    })
    batch_im = features["image"]
    # CelebA labels are -1 / 1; map them to 0 / 1.
    batch_eye = (features["Eyeglasses"] + 1) // 2
    batch_male = (features["Male"] + 1) // 2
    batch_young = (features["Young"] + 1) // 2
    batch_smiling = (features["Smiling"] + 1) // 2
    batch_im = tf.decode_raw(batch_im, tf.uint8)
    batch_im = tf.cast(tf.reshape(batch_im, (batch_size, 128, 128, 3)), tf.float32)
    return batch_im, batch_eye, batch_male, batch_young, batch_smiling


tr_im_batch, tr_label_eye_batch, tr_label_male, \
    tr_label_young, tr_label_smiling = get_one_batch(64, 0)
te_im_batch, te_label_eye_batch, te_label_male, \
    te_label_young, te_label_smiling = get_one_batch(64, 1)

saver = tf.train.Saver(tf.global_variables())
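To get a feel for the learning-rate schedule configured above (initial rate 0.0001, decay rate 0.98 every 1000 steps, no staircase), the formula behind `tf.train.exponential_decay` can be written in plain Python. The function below is a stand-alone re-implementation for illustration only, not the TensorFlow op itself.

```python
def exponential_decay(initial_lr, global_step, decay_steps, decay_rate, staircase=False):
    """lr = initial_lr * decay_rate ** (global_step / decay_steps)."""
    exponent = global_step / decay_steps
    if staircase:
        exponent = global_step // decay_steps  # decay in discrete jumps
    return initial_lr * decay_rate ** exponent


for step in (0, 1000, 10000, 100000):
    print(step, exponential_decay(1e-4, step, 1000, 0.98))
```

With `staircase=False` the rate shrinks smoothly at every step; with `staircase=True` it would stay constant within each block of 1000 steps.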

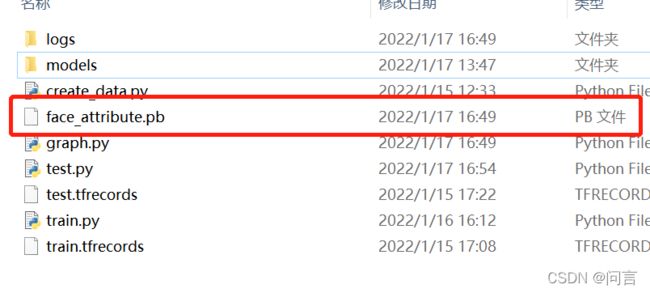

After training, the model is frozen and saved. The key code is as follows:

tr_im_batch, tr_label_eye_batch, tr_label_male, \
    tr_label_young, tr_label_smiling = get_one_batch(64, 0)
te_im_batch, te_label_eye_batch, te_label_male, \
    te_label_young, te_label_smiling = get_one_batch(64, 1)

saver = tf.train.Saver(tf.global_variables())

with tf.Session() as session:
    coord = tf.train.Coordinator()
    tf.train.start_queue_runners(sess=session, coord=coord)
    init_ops = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())
    session.run(init_ops)
    summary_writer = tf.summary.FileWriter("logs", session.graph)

    # Restore the latest checkpoint from the "models" directory, if one exists.
    ckpt = tf.train.get_checkpoint_state("models")
    if ckpt and tf.train.checkpoint_exists(ckpt.model_checkpoint_path):
        print(ckpt.model_checkpoint_path)
        saver.restore(session, ckpt.model_checkpoint_path)

    # Freeze the graph: convert variables to constants and keep only what the
    # four output nodes need. The ArgMax nodes are the per-attribute predictions;
    # the ops that create them are not shown in this excerpt.
    output_graph_def = tf.graph_util.convert_variables_to_constants(
        session,
        session.graph.as_graph_def(),
        ["ArgMax", "ArgMax_1", "ArgMax_2", "ArgMax_3"])

    # The with block closes the file, so no explicit f.close() is needed.
    with tf.gfile.GFile("face_attribute.pb", "wb") as f:
        f.write(output_graph_def.SerializeToString())

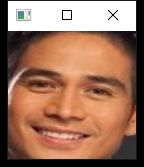

Once the model is frozen, it can be tested. The complete test code is as follows:

import glob

import cv2
import dlib
import numpy as np
import tensorflow as tf

pb_path = "face_attribute.pb"
detector = dlib.get_frontal_face_detector()

sess = tf.Session()
with sess.as_default():
    # Load the frozen graph into the default graph used by the session.
    with tf.gfile.GFile(pb_path, "rb") as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())
        tf.import_graph_def(graph_def, name="")

    pred_eyeglasses = sess.graph.get_tensor_by_name("ArgMax:0")
    pred_male = sess.graph.get_tensor_by_name("ArgMax_1:0")
    pred_young = sess.graph.get_tensor_by_name("ArgMax_2:0")
    pred_smiling = sess.graph.get_tensor_by_name("ArgMax_3:0")

    im_list = glob.glob("CelebA/Img/img_celeba.7z/img_celeba/*")
    for im_path in im_list:
        im_data = cv2.imread(im_path)
        if im_data is None:  # skip files that are not readable images
            continue
        rects = detector(im_data, 0)
        if len(rects) == 0:
            continue

        # Same crop as in data preparation: extend the box upward by 30%.
        x1 = max(rects[0].left(), 0)  # the detector can return negative coordinates
        y1 = rects[0].top()
        x2 = rects[0].right()
        y2 = rects[0].bottom()
        y1 = int(max(y1 - 0.3 * (y2 - y1), 0))

        im_data = im_data[y1:y2, x1:x2]
        im_data = cv2.resize(im_data, (128, 128))
        print(im_data.shape)

        # "Placeholder:0" is the image input of the frozen graph.
        [eyeglasses, male, young, smiling] = sess.run(
            [pred_eyeglasses, pred_male, pred_young, pred_smiling],
            {"Placeholder:0": np.expand_dims(im_data, 0)})
        print("eyeglasses, male, young, smiling", eyeglasses, male, young, smiling)

        cv2.imshow("1", im_data)
        cv2.waitKey(0)

Use tf.gfile.GFile.

(128, 128, 3)

2022-02-11 13:16:07.931039: W tensorflow/core/common_runtime/bfc_allocator.cc:237] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.98GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2022-02-11 13:16:08.003675: W tensorflow/core/common_runtime/bfc_allocator.cc:237] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.69GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2022-02-11 13:16:08.017530: W tensorflow/core/common_runtime/bfc_allocator.cc:237] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.41GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

eyeglasses, male, young, smiling [0] [1] [0] [1]

(128, 128, 3)

eyeglasses, male, young, smiling [0] [1] [1] [1]
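Both the data-preparation script and the test script extend the detected face box upward by 30% of its height before cropping. A possible way to keep that step identical in both places is a small shared helper, sketched below. The function name, the clamping of the right and bottom edges, and the `min_size` parameter (taken from the 50-pixel check used during data preparation) are assumptions of this sketch.

```python
def expand_face_box(left, top, right, bottom, img_w, img_h, top_ratio=0.3, min_size=50):
    """Extend the box upward by top_ratio of its height and clamp it to the image.

    Returns (x1, y1, x2, y2), or None when the clamped box is smaller than min_size.
    """
    height = bottom - top
    y1 = int(max(top - top_ratio * height, 0))
    x1 = max(left, 0)
    x2 = min(right, img_w)
    y2 = min(bottom, img_h)
    if x2 - x1 < min_size or y2 - y1 < min_size:
        return None
    return x1, y1, x2, y2


print(expand_face_box(10, 100, 110, 200, 300, 300))
print(expand_face_box(0, 0, 20, 20, 300, 300))
```

A caller would pass `rects[0].left()`, `rects[0].top()`, and so on, together with the image width and height, and skip the image when the helper returns `None`.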