MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation

Asymmetric Non-local Neural Networks for Semantic Segmentation

Zhen Zhu , Mengde Xu , Song Bai , Tengteng Huang , Xiang Bai

Huazhong University of Science and Technology, University of Oxford

[GitHub]: https://github.com/MendelXu/ANN

[paper]: https://arxiv.org/pdf/1908.07678.pdf

This paper is very well written.

[Non-Local Attention Series]

Non-local neural networks

GCNet: Non-local Networks Meet Squeeze-Excitation Networks and Beyond [my CSDN]

Asymmetric Non-local Neural Networks for Semantic Segmentation [my CSDN]

Efficient Attention: Attention with Linear Complexities [my CSDN]

CCNet: Criss-Cross Attention for Semantic Segmentation [my CSDN]

Non-locally Enhanced Encoder-Decoder Network for Single Image De-raining [my CSDN]

Image Restoration via Residual Non-local Attention Networks [my CSDN]

Table of Contents

Asymmetric Non-local Neural Networks for Semantic Segmentation

Abstract

Introduction

Related Work

Asymmetric Non-local Neural Network

Revisiting Non-local Block

Asymmetric Pyramid Non-local Block

Asymmetric Fusion Non-local Block

Network Architecture

Experiments

Datasets and Evaluation Metrics

Implementation Details

Comparisons with Other Methods

Conclusion

Abstract

The non-local module works as a particularly useful technique for semantic segmentation while criticized for its prohibitive computation and GPU memory occupation.

[The motivation of the paper, i.e. the problem it sets out to solve]

In this paper, we present Asymmetric Non-local Neural Network to semantic segmentation, which has two prominent components: Asymmetric Pyramid Non-local Block (APNB) and Asymmetric Fusion Non-local Block (AFNB). APNB leverages a pyramid sampling module into the non-local block to largely reduce the computation and memory consumption without sacrificing the performance. AFNB is adapted from APNB to fuse the features of different levels under a sufficient consideration of long range dependencies and thus considerably improves the performance.

[Introduction of the network structure, concise and clear]

Extensive experiments on semantic segmentation benchmarks demonstrate the effectiveness and efficiency of our work. In particular, we report the state-of-the-art performance of 81.3 mIoU on the Cityscapes test set. For a 256 × 128 input, APNB is around 6 times faster than a non-local block on GPU while 28 times smaller in GPU running memory occupation.

[Finally, the experimental results should echo the motivation, i.e. show that the proposed method really does solve the problem raised in the motivation]

Introduction

Some recent studies [20, 33, 47] indicate that the performance could be improved by making sufficient use of long range dependencies. However, models that solely rely on convolutions exhibit limited ability in capturing these long range dependencies. A possible reason is that the receptive field of a single convolutional layer is inadequate to cover correlated areas. Choosing a big kernel or composing a very deep network is able to enlarge the receptive field. However, such strategies require extensive computation and parameters, thus being very inefficient [44]. Consequently, several works [33, 47] resort to using global operations like non-local means [2] and spatial pyramid pooling [12, 16].

Conventional CNNs cannot capture long range dependencies efficiently. Non-local operations and spatial pyramid pooling can effectively address this problem.

In [33], Wang et al. combined CNNs and traditional non-local means [2] to compose a network module named non-local block in order to leverage features from all locations in an image. This module improves the performance of existing methods [33]. However, the prohibitive computational cost and vast GPU memory occupation hinder its usage in many real applications. The architecture of a common non-local block [33] is depicted in Fig. 1(a). The block first calculates the similarities of all locations between each other, requiring a matrix multiplication of computational complexity $O(CH^2W^2)$, given an input feature map with size $C \times H \times W$. Then it requires another matrix multiplication of computational complexity $O(CH^2W^2)$ to gather the influence of all locations to themselves. Concerning the high complexity brought by the matrix multiplications, we are interested in this work if there are efficient ways to solve this without sacrificing the performance.
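To get a feel for how quickly these two matrix multiplications grow, the multiply-accumulate counts can be tallied directly. The sketch below is only an illustration: the function name is made up for this note, the 96 × 96 feature map is the training-time size quoted later in the paper, and the 256 embedding channels are an assumed value.

```python
def nonlocal_matmul_macs(channels: int, height: int, width: int) -> int:
    """Multiply-accumulates of the two large matrix products in a non-local block."""
    n = height * width                 # number of spatial locations, N = H * W
    similarity = n * channels * n      # (N x C) @ (C x N) -> N x N similarity matrix
    aggregation = n * n * channels     # (N x N) @ (N x C) -> N x C attention output
    return similarity + aggregation


# Assumed sizes: 256 embedding channels (hypothetical), 96 x 96 feature map.
macs = nonlocal_matmul_macs(256, 96, 96)
print(f"{macs:,} multiply-accumulates")

# Doubling both H and W multiplies the cost by 16, since the cost is quadratic in N.
ratio = nonlocal_matmul_macs(256, 192, 192) // macs
print(ratio)
```

The quadratic dependence on N = H·W is what makes the block expensive at segmentation resolutions.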

Describes the structure and complexity of the non-local block.

These two paragraphs pose the problem.

Figure 1: Architecture of a standard non-local block (a) and the asymmetric non-local block (b). $N = H \cdot W$ while $S \ll N$.

We notice that as long as the outputs of the key branch and value branch hold the same size, the output size of the non-local block remains unchanged. Considering this, if we could sample only a few representative points from key branch and value branch, it is possible that the time complexity is significantly decreased without sacrificing the performance. This motivation is demonstrated in Fig. 1 when changing a large value N in the key branch and value branch to a much smaller value S (From (a) to (b)).

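The paper's observation and motivation. Non-local computes the correlation between every point and all other points (i.e. every pixel has its own full attention map). This paper argues that a full attention map per pixel is unnecessary, and instead computes the attention between each pixel and a few local regions.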

In this paper, we propose a simple yet effective non-local module called Asymmetric Pyramid Non-local Block (APNB) to decrease the computation and GPU memory consumption of the standard non-local module [33] with applications to semantic segmentation. Motivated by the spatial pyramid pooling [12, 16, 47] strategy, we propose to embed a pyramid sampling module into non-local blocks, which could largely reduce the computation overhead of matrix multiplications yet provide substantial semantic feature statistics. This spirit is also related to the sub-sampling tricks [33] (e.g., max pooling). Our experiments suggest that APNB yields much better performance than those sub-sampling tricks with a decent decrease of computations. To better illustrate the boosted efficiency, we compare the GPU times of APNB and a standard non-local block in Fig. 2, averaging the running time of 10 different runs with the same configuration. Our APNB largely reduces the time cost on matrix multiplications, thus being nearly 6 times faster than a non-local block.

Describes how APNB is formed (Non-Local + Spatial Pyramid Pooling); it offers low complexity together with high performance.

Besides, we also adapt APNB to fuse the features of different stages of a deep network, which brings a considerable improvement over the baseline model. We call the adapted block as Asymmetric Fusion Non-local Block (AFNB). AFNB calculates the correlations between every pixel of the low-level and high-level feature maps, yielding a fused feature with long range interactions. Our network is built based on a standard ResNet-FCN model by integrating APNB and AFNB together.

Describes AFNB: it fuses low-level and high-level features and produces fused features with long range interactions.

Related Work

Recent advances focus on exploring the context information and can be roughly categorized into five directions:

To exploit context information, existing methods fall roughly into five research directions:

Encoder-Decoder: U-Net

Conditional Random Field: Deeplab

Different Convolutions: Dilated Conv.

Spatial Pyramid Pooling: PSPNet, Atrous Spatial Pyramid Pooling layer (ASPP)

Non-local Network: GCNet(19CVPR), NLNet

Different from these works, our network uniquely incorporates pyramid sampling strategies with non-local blocks to capture the semantic statistics of different scales with only a minor budget of computation, while maintaining the excellent performance as the original non-local modules.

Asymmetric Non-local Neural Network

While APNB aims to decrease the computational overhead of non-local blocks, AFNB improves the learning capacity of non-local blocks thereby improving the segmentation performance.

In short: APNB cuts the computational cost of non-local blocks, while AFNB increases their learning capacity and thereby improves segmentation accuracy.

Revisiting Non-local Block

I will not go over the principle of Non-local in detail; only the formulas needed later are listed here. The normalizing function $f$ can take the form from softmax, rescaling, and none. Choosing softmax gives the Embedded Gaussian version.
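As a reminder of what the standard block computes, here is a minimal NumPy sketch of the Embedded Gaussian (softmax) variant on a flattened feature map. It is a sketch under assumptions, not the authors' code: the function and variable names are made up for this note, the 1×1 convolutions are written as plain matrix products, and the residual addition follows the original non-local formulation.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def nonlocal_attention(x, w_phi, w_theta, w_gamma, w_out):
    """x: (C, N) input; w_phi / w_theta / w_gamma: (C_hat, C); w_out: (C, C_hat).

    A 1x1 convolution on a flattened map is just a matrix product.
    """
    phi = w_phi @ x                # query, (C_hat, N)
    theta = w_theta @ x            # key,   (C_hat, N)
    gamma = w_gamma @ x            # value, (C_hat, N)
    v = phi.T @ theta              # similarity matrix, (N, N)
    v_norm = softmax(v, axis=1)    # normalizing function f = softmax, rows sum to 1
    o = v_norm @ gamma.T           # attention output, (N, C_hat)
    return w_out @ o.T + x         # back to C channels, plus residual -> (C, N)


rng = np.random.default_rng(0)
C, C_hat, N = 8, 4, 36
x = rng.standard_normal((C, N))
w_phi, w_theta, w_gamma = (rng.standard_normal((C_hat, C)) for _ in range(3))
w_out = rng.standard_normal((C, C_hat))
y = nonlocal_attention(x, w_phi, w_theta, w_gamma, w_out)
print(y.shape)  # (8, 36)
```

The two products `phi.T @ theta` and `v_norm @ gamma.T` are the N × N operations that dominate the cost.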

Asymmetric Pyramid Non-local Block

- Motivation and Analysis

By inspecting the general computing flow of a non-local block, one could clearly find that Eq. (2) and Eq. (4) dominate the computation. The time complexities of the two matrix multiplications are both $O(\hat{C}N^2)$. In semantic segmentation, the output of the network usually has a large resolution to retain detailed semantic features [6, 47]. That means N is large (for example in our training phase, N = 96 × 96 = 9216). Hence, the large matrix multiplication is the main cause of the inefficiency of a non-local block (see our statistic in Fig. 2). A more straightforward pipeline is given as

$$\mathbb{R}^{N \times \hat{C}} \times \mathbb{R}^{\hat{C} \times N} \xrightarrow{\text{Eq. (2)}} \mathbb{R}^{N \times N} \times \mathbb{R}^{N \times \hat{C}} \xrightarrow{\text{Eq. (4)}} \mathbb{R}^{N \times \hat{C}}$$ (6)

This paragraph is easy to follow: it explains in detail why the complexity of the non-local block is so high.

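We hold a key yet intuitive observation that by changing N to another number $S$ ($S \ll N$), the output size will remain the same, as

$$\mathbb{R}^{N \times \hat{C}} \times \mathbb{R}^{\hat{C} \times S} \rightarrow \mathbb{R}^{N \times S} \times \mathbb{R}^{S \times \hat{C}} \rightarrow \mathbb{R}^{N \times \hat{C}}$$ (7)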

Returning to the design of the non-local block, changing N to a small number S is equivalent to sampling several representative points from θ and γ instead of feeding all the spatial points, as illustrated in Fig. 1. Consequently, the computational complexity could be considerably decreased.

This paragraph is also easy to follow: N is reduced to S, with S far smaller than N. So how is this operation actually carried out?

- Solution

Based on the above observation, we propose to add sampling modules $\mathcal{P}_\theta$ and $\mathcal{P}_\gamma$ after θ and γ to sample several sparse anchor points denoted as $\theta_P \in \mathbb{R}^{\hat{C} \times S}$ and $\gamma_P \in \mathbb{R}^{\hat{C} \times S}$, where S is the number of sampled anchor points. Mathematically, this is computed by

$$\theta_P = \mathcal{P}_\theta(\theta), \quad \gamma_P = \mathcal{P}_\gamma(\gamma)$$ (8)

The similarity matrix $V_P$ between $\phi$ and the anchor points $\theta_P$ is thus calculated by

$$V_P = \phi^T \times \theta_P$$ (9)

Note that $V_P$ is an asymmetric matrix of size N × S. $V_P$ then goes through the same normalizing function as a standard non-local block, giving the unified similarity matrix $\vec{V}_P$. And the attention output is acquired by

$$O_P = \vec{V}_P \times \gamma_P^T$$ (10)

where the output is in the same size as that of Eq. (4). Following non-local blocks, the final output $Y_P$ is given as

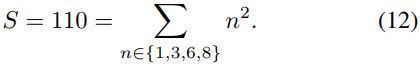

The time complexity of such an asymmetric matrix multiplication is only $O(\hat{C}NS)$, significantly lower than $O(\hat{C}N^2)$ in a standard non-local block. It is ideal that S should be much smaller than N. However, it is hard to ensure that when S is small, the performance would not drop too much in the meantime.
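The asymmetric multiplication can be sketched in a few lines of NumPy. This is only an illustration under assumptions: the names are made up for this note, and the sampler used here (averaging consecutive groups of positions) is a simple stand-in, not the pyramid pooling the paper actually uses.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def asymmetric_attention(phi, theta_p, gamma_p):
    """phi: (C_hat, N) queries; theta_p, gamma_p: (C_hat, S) sampled keys / values."""
    v_p = phi.T @ theta_p          # (N, S) asymmetric similarity matrix
    v_p = softmax(v_p, axis=1)     # normalise over the S anchor points
    return v_p @ gamma_p.T         # (N, C_hat), same size as in the full block


rng = np.random.default_rng(0)
C_hat, N, S = 4, 64, 8
phi = rng.standard_normal((C_hat, N))
theta = rng.standard_normal((C_hat, N))
gamma = rng.standard_normal((C_hat, N))

# Stand-in sampler (an assumption for this sketch): average every N // S
# consecutive positions into one anchor point.
theta_p = theta.reshape(C_hat, S, N // S).mean(axis=2)
gamma_p = gamma.reshape(C_hat, S, N // S).mean(axis=2)

o_p = asymmetric_attention(phi, theta_p, gamma_p)
print(o_p.shape)  # (64, 4)
```

The output still has one row per query position, while both matrix products now cost on the order of C_hat · N · S instead of C_hat · N².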

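This part explains in detail how pyramid pooling is used to reduce the complexity. In Non-local, the key and value are obtained by 1x1 convolutions and have dimension C×N. Here, after the 1x1 convolutions, the key and value are additionally sampled by pyramid pooling, giving dimension C×S. In other words, Non-local lets every query attend to every pixel, whereas APNB samples the feature map and lets every query attend to only a few sparse anchor points. The computational complexity thus drops from $O(\hat{C}N^2)$ to $O(\hat{C}NS)$.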

The authors also point out that S must not be too small.

The next part goes further into how the pyramid pooling is actually carried out.

- Spatial Pyramid Pooling

As discovered by previous works [16, 47], global and multi-scale representations are useful for categorizing scene semantics. Such representations can be comprehensively carved by Spatial Pyramid Pooling [16], which contains several pooling layers with different output sizes in parallel. In addition to this virtue, spatial pyramid pooling is also parameter-free and very efficient. Therefore, we embed pyramid pooling in the non-local block to enhance the global representations while reducing the computational overhead.

By doing so, we now arrive at the final formulation of Asymmetric Pyramid Non-local Block (APNB), as given in Fig. 3. As can be seen, our APNB derives from the design of a standard non-local block [33]. A vital change is to add a spatial pyramid pooling module after θ and γ respectively to sample representative anchors. This sampling process is clearly depicted in Fig. 4, where several pooling layers are applied after θ or γ and then the four pooling results are flattened and concatenated to serve as the input to the next layer. We denote the spatial pyramid pooling modules as $\mathcal{P}_\theta^n$ and $\mathcal{P}_\gamma^n$, where the superscript $n$ means the width (or height) of the output size of the pooling layer (empirically, the width is equal to the height). In our model, we set $n \subseteq \{1, 3, 6, 8\}$. Then the total number of the anchor points is

$$S = 110 = \sum_{n \in \{1, 3, 6, 8\}} n^2$$

Figure 3: Overview of the proposed Asymmetric Non-local Neural Network. In our implementation, the key branch and the value branch in APNB share the same 1×1 convolution and sampling module, which decreases the number of parameters and computation without sacrificing the performance.

Figure 4: Demonstration of the pyramid max or average sampling process.

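Details of the SPP operation. Taking H = 128 and W = 256 as an example, the proposed method reduces the complexity by a factor of (256 × 128)/110 ≈ 298 compared with the non-local block.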
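The sampling step and the anchor count can be checked with a small NumPy sketch. Assumptions: the four pooling output sizes are taken to be 1, 3, 6 and 8 (consistent with the "four pooling results" and the 298× figure), average pooling is used (Fig. 4 mentions max or average), the bin boundaries mimic the usual adaptive-pooling rule, and all function names are made up for this note.

```python
import numpy as np


def adaptive_avg_pool(x, n):
    """Average-pool a (C, H, W) map down to (C, n, n) with adaptive-pooling style bins."""
    c, h, w = x.shape
    out = np.empty((c, n, n))
    for i in range(n):
        r0, r1 = (i * h) // n, ((i + 1) * h + n - 1) // n   # floor start, ceil end
        for j in range(n):
            c0, c1 = (j * w) // n, ((j + 1) * w + n - 1) // n
            out[:, i, j] = x[:, r0:r1, c0:c1].mean(axis=(1, 2))
    return out


def pyramid_sample(x, sizes=(1, 3, 6, 8)):
    """Flatten each pooled map and concatenate them into (C, S) anchor points."""
    pooled = [adaptive_avg_pool(x, n).reshape(x.shape[0], -1) for n in sizes]
    return np.concatenate(pooled, axis=1)


x = np.random.default_rng(0).standard_normal((4, 128, 256))   # (C, H, W)
anchors = pyramid_sample(x)
s = anchors.shape[1]                 # 1 + 9 + 36 + 64 anchor points
n = x.shape[1] * x.shape[2]          # N = H * W
print(s, round(n / s))  # 110 298
```

The first anchor is simply the global average of the map, and the finer levels add progressively more local statistics.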

Note: in APNB, the authors share the 1x1 convolution and the pyramid pooling between the key and the value, i.e. the same set of matrices is used for both.

Asymmetric Fusion Non-local Block

Fusing features of different levels is helpful to semantic segmentation and object tracking, as hinted in [16, 18, 26, 41, 46, 51]. Common fusing operations, such as addition or concatenation, are conducted in a pixel-wise and local manner. We provide an alternative that leverages long range dependencies through a non-local block to fuse multi-level features, called Fusion Non-local Block (FNB). A standard non-local block only has one input source while FNB has two: a high-level feature map $X_h \in \mathbb{R}^{C_h \times N_h}$ and a low-level feature map $X_l \in \mathbb{R}^{C_l \times N_l}$. $N_h$ and $N_l$ are the numbers of spatial locations of $X_h$ and $X_l$, respectively. $C_h$ and $C_l$ are the channel numbers of $X_h$ and $X_l$, respectively. Likewise, 1 × 1 convolutions $W_\phi^h$, $W_\theta^l$ and $W_\gamma^l$ are used to transform $X_h$ and $X_l$ to embeddings $\phi_h \in \mathbb{R}^{\hat{C} \times N_h}$, $\theta_l \in \mathbb{R}^{\hat{C} \times N_l}$ and $\gamma_l \in \mathbb{R}^{\hat{C} \times N_l}$ as

$$\phi_h = W_\phi^h(X_h), \quad \theta_l = W_\theta^l(X_l), \quad \gamma_l = W_\gamma^l(X_l)$$

The similarity matrix $V_F \in \mathbb{R}^{N_h \times N_l}$ is then computed between $\phi_h$ and $\theta_l$.

The output $O_F$ reflects the bonus of $X_l$ to $X_h$, which are carefully selected from all locations in $X_l$.

These are the details of AFNB; Fig. 3 in fact already explains them clearly.
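The fusion idea (query from the high-level map, key and value from the low-level map) can be sketched as follows. This is a sketch under assumptions rather than the authors' implementation: the names are made up for this note, the 1×1 convolutions are plain matrix products, and the optional sampler is a simple stand-in for the pyramid pooling that makes the block asymmetric.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def fusion_attention(x_h, x_l, w_phi_h, w_theta_l, w_gamma_l, sample=None):
    """x_h: (C_h, N_h) high-level map; x_l: (C_l, N_l) low-level map."""
    phi_h = w_phi_h @ x_h          # query from the high level, (C_hat, N_h)
    theta_l = w_theta_l @ x_l      # key from the low level,    (C_hat, N_l)
    gamma_l = w_gamma_l @ x_l      # value from the low level,  (C_hat, N_l)
    if sample is not None:         # asymmetric variant: keep only S anchor points
        theta_l, gamma_l = sample(theta_l), sample(gamma_l)
    v_f = softmax(phi_h.T @ theta_l, axis=1)   # (N_h, N_l) or (N_h, S)
    return v_f @ gamma_l.T                     # (N_h, C_hat)


def mean_sampler(t, s=4):
    """Stand-in sampler (assumption): average groups of positions into s anchors."""
    return t.reshape(t.shape[0], s, -1).mean(axis=2)


rng = np.random.default_rng(0)
C_h, C_l, C_hat, N = 6, 3, 4, 16   # both maps share one spatial size in this toy setup
x_h = rng.standard_normal((C_h, N))
x_l = rng.standard_normal((C_l, N))
w_phi_h = rng.standard_normal((C_hat, C_h))
w_theta_l = rng.standard_normal((C_hat, C_l))
w_gamma_l = rng.standard_normal((C_hat, C_l))

o_full = fusion_attention(x_h, x_l, w_phi_h, w_theta_l, w_gamma_l)
o_asym = fusion_attention(x_h, x_l, w_phi_h, w_theta_l, w_gamma_l, sample=mean_sampler)
print(o_full.shape, o_asym.shape)  # (16, 4) (16, 4)
```

Either way the output has one row per high-level location, so it can be combined with the high-level features afterwards.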

Network Architecture

The overall architecture of our network is depicted in Fig. 3. We choose ResNet-101 [13] as our backbone network following the choice of most previous works [38, 47, 48]. We remove the last two down-sampling operations and use the dilation convolutions instead to hold the feature maps from the last two stages 1/8 of the input image. Concretely, all the feature maps in the last three stages have the same spatial size. According to our experimental trials, we fuse the features of Stage4 and Stage5 using AFNB. The fused features are thereupon concatenated with the feature maps after Stage5, avoiding situations that AFNB could not produce accurate strengthened features particularly when the training just begins and degrades the overall performance. Such features, full of rich long range cues from different feature levels, serve as the input to APNB, which then help to discover the correlations among pixels. As done for AFNB, the output of APNB is also concatenated with its input source. Note that in our implementation for APNB, $W_\theta$ and $W_\gamma$ share parameters in order to save parameters and computation, following the design of [42]. This design doesn’t decrease the performance of APNB. Finally, a classifier is followed up to produce channel-wise semantic maps that later receive their supervisions from the ground truth maps. Note we also add another supervision to Stage4 following the settings of [47], as it is beneficial to improve the performance.

Network details:

1. ResNet-101

2. The resolution of stages 4 and 5 is kept unchanged, which is exactly the dilated ResNet [see my blog post Dilated Residual Networks]

3. AFNB fuses stages 4 and 5

4. AFNB is followed by APNB, each with a skip connection (the block output is concatenated with its input)

5. $W_\theta$ and $W_\gamma$ share parameters [42: OCNet: Object context network for scene parsing]

6. add another supervision to Stage4 [47: Pyramid scene parsing network]

Experiments

Datasets and Evaluation Metrics

Datasets: Cityscapes [9], ADE20K [50] and PASCAL Context [21].

Evaluation Metric: Mean IoU (mean of class-wise intersection over union)

Implementation Details

Training Objectives

Following [47 Pyramid scene parsing network], our model has two supervisions: one after the final output of our model while another at the output layer of Stage4. For Lfinal, we perform online hard pixel mining, which excels at coping with difficult cases.

![]()
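As a rough illustration of the two supervisions, the sketch below combines a final loss computed with online hard pixel mining and a plain auxiliary loss. Everything specific here is an assumption for this note: the probability threshold, the minimum number of kept pixels, the auxiliary weight and all function names are hypothetical, and the mining rule shown is one common variant rather than necessarily the one used in the paper.

```python
import numpy as np


def true_class_probs(probs, labels):
    """probs: (P, K) per-pixel class probabilities; labels: (P,) class ids."""
    return probs[np.arange(labels.size), labels]


def ohem_loss(probs, labels, thresh=0.7, min_kept=2):
    """Mean cross-entropy over hard pixels only (true-class probability below thresh).

    If fewer than min_kept pixels are hard, the min_kept highest-loss pixels are used.
    thresh and min_kept are hypothetical values chosen for this sketch.
    """
    p = true_class_probs(probs, labels)
    losses = -np.log(p)
    hard = p < thresh
    if hard.sum() < min_kept:
        hard = np.zeros_like(hard)
        hard[np.argsort(losses)[-min_kept:]] = True
    return losses[hard].mean()


def total_loss(final_probs, aux_probs, labels, aux_weight=0.4):
    """Final loss with hard pixel mining plus a weighted auxiliary loss.

    aux_weight is an assumed value, not taken from the text above.
    """
    aux = -np.log(true_class_probs(aux_probs, labels)).mean()
    return ohem_loss(final_probs, labels) + aux_weight * aux


labels = np.array([0, 0, 1, 1])
final_probs = np.array([[0.9, 0.1], [0.5, 0.5], [0.2, 0.8], [0.4, 0.6]])
aux_probs = np.array([[0.6, 0.4], [0.6, 0.4], [0.4, 0.6], [0.4, 0.6]])
print(total_loss(final_probs, aux_probs, labels))
```

In this toy example only the two pixels whose true-class probability is below the threshold contribute to the final loss term.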

Comparisons with Other Methods

1. Efficiency Comparison with Non-local Block

2. Performance Comparisons

3. Ablation Study

Efficacy of the APNB and AFNB

Selection of Sampling Methods:

Influence of the Anchor Points Numbers

Conclusion

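Personal summary:

The paper has two highlights:

1. How it differs from Non-local:

Non-local: correlation between each point (query) and every other point; pixel-wise

APNB: relation between each point and local content; patch-wise

2. Fusing features of different levels: AFNB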

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 1](http://img.e-com-net.com/image/info8/a10398e8492846f39cc24da47b1b692e.jpg)

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 2](http://img.e-com-net.com/image/info8/2aea648c01f34234af59c2a971fb2c6f.jpg)

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 3](http://img.e-com-net.com/image/info8/6f92a4ec63b54df88498ec84be23419c.jpg)

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 4](http://img.e-com-net.com/image/info8/ab3f4e4d8b564be8a72de06fef253959.jpg)

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 5](http://img.e-com-net.com/image/info8/186f58969c5844618b87b536bfcc54fe.jpg)

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 6](http://img.e-com-net.com/image/info8/847bad348a664bb1b71096b696ebfff9.jpg)

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 7](http://img.e-com-net.com/image/info8/22dbde21603141809f575a336a5480c7.jpg)

![MyDLNote - Attention: [NLA Series] Asymmetric Non-local Neural Networks for Semantic Segmentation, image 8](http://img.e-com-net.com/image/info8/54493efeb18c4c5f97fea7fff4fa5a0c.jpg)