Handwritten digit recognition with YOLOv4

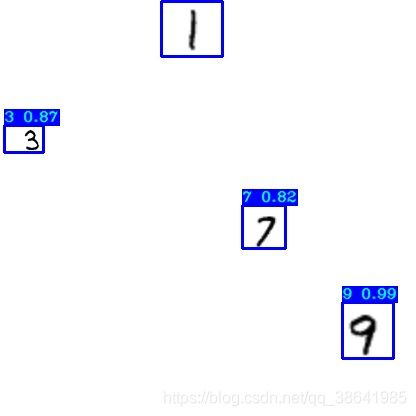

Recognition results

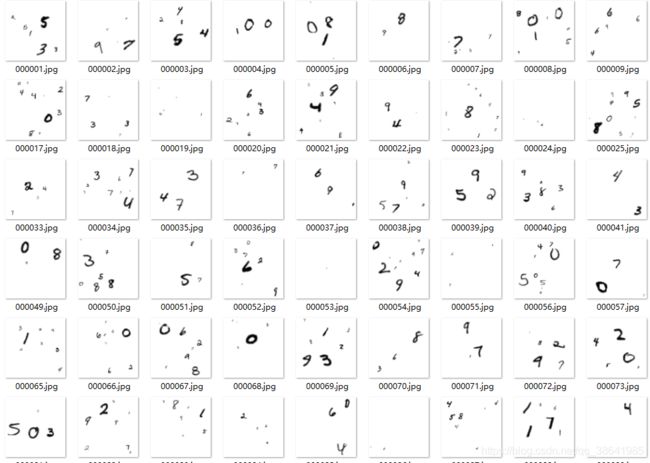

Dataset

Download: https://download.csdn.net/download/qq_38641985/18963935

Images

Annotations

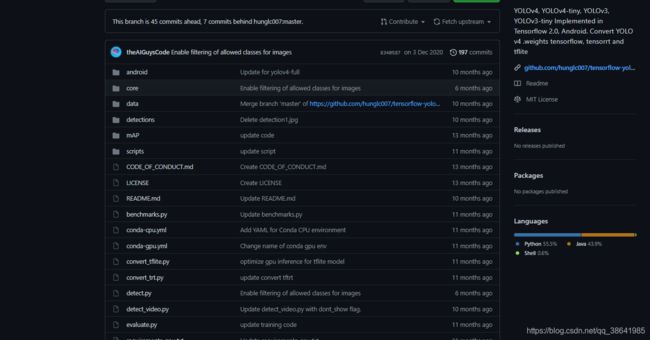
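The annotation files used later (`mnist_train.txt`, `mnist_test.txt`) are read line by line, with the image path as the first whitespace-separated token. A minimal sketch of parsing one such line follows. It assumes the remaining tokens are boxes written as `xmin,ymin,xmax,ymax,class_id`; that layout, the helper name `parse_annotation_line`, and the sample path are assumptions for illustration, not taken from the dataset.

```python
def parse_annotation_line(line):
    """Split one annotation line into (image_path, boxes).

    Assumed layout: "<image_path> x1,y1,x2,y2,cls x1,y1,x2,y2,cls ...".
    """
    tokens = line.split()
    image_path = tokens[0]
    # Each remaining token is one box: four corner coordinates plus a class id
    boxes = [tuple(int(v) for v in token.split(",")) for token in tokens[1:]]
    return image_path, boxes


# Hypothetical sample line, not taken from the real dataset
sample = "mnist/mnist_train/000001.jpg 10,20,38,48,7 100,60,128,88,3"
path, boxes = parse_annotation_line(sample)
print(path)
print(boxes)
```

If the real files use a different box layout, only the list comprehension needs to change.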

Reference

https://github.com/theAIGuysCode/tensorflow-yolov4-tflite

Modify the configuration

#! /usr/bin/env python

# coding=utf-8

from easydict import EasyDict as edict

__C = edict()

# Consumers can get config by: from config import cfg

cfg = __C

# YOLO options

__C.YOLO = edict()

__C.YOLO.CLASSES = "./mnist/mnist.names"

__C.YOLO.ANCHORS = [12,16, 19,36, 40,28, 36,75, 76,55, 72,146, 142,110, 192,243, 459,401]

__C.YOLO.ANCHORS_V3 = [10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326]

__C.YOLO.ANCHORS_TINY = [23,27, 37,58, 81,82, 81,82, 135,169, 344,319]

__C.YOLO.STRIDES = [8, 16, 32]

__C.YOLO.STRIDES_TINY = [16, 32]

__C.YOLO.XYSCALE = [1.2, 1.1, 1.05]

__C.YOLO.XYSCALE_TINY = [1.05, 1.05]

__C.YOLO.ANCHOR_PER_SCALE = 3

__C.YOLO.IOU_LOSS_THRESH = 0.5

# Train options

__C.TRAIN = edict()

__C.TRAIN.ANNOT_PATH = "./mnist/mnist_train.txt"

__C.TRAIN.BATCH_SIZE = 1

# __C.TRAIN.INPUT_SIZE = [320, 352, 384, 416, 448, 480, 512, 544, 576, 608]

__C.TRAIN.INPUT_SIZE = 224

__C.TRAIN.DATA_AUG = True

__C.TRAIN.LR_INIT = 1e-3

__C.TRAIN.LR_END = 1e-6

__C.TRAIN.WARMUP_EPOCHS = 2

__C.TRAIN.FISRT_STAGE_EPOCHS = 20

__C.TRAIN.SECOND_STAGE_EPOCHS = 30

# TEST options

__C.TEST = edict()

__C.TEST.ANNOT_PATH = "./mnist/mnist_test.txt"

__C.TEST.BATCH_SIZE = 1

__C.TEST.INPUT_SIZE = 224

__C.TEST.DATA_AUG = False

__C.TEST.DECTECTED_IMAGE_PATH = "./mnist/mnist_test/"

__C.TEST.SCORE_THRESHOLD = 0.25

__C.TEST.IOU_THRESHOLD = 0.5

YOLO_TYPE = "yolov4" # yolov4 or yolov3

YOLO_FRAMEWORK = "tf" # "tf" or "trt"

YOLO_V3_WEIGHTS = "model_data/yolov3.weights"

YOLO_V4_WEIGHTS = "model_data/yolov4.weights"

YOLO_V3_TINY_WEIGHTS = "model_data/yolov3-tiny.weights"

YOLO_V4_TINY_WEIGHTS = "model_data/yolov4-tiny.weights"

YOLO_TRT_QUANTIZE_MODE = "INT8" # INT8, FP16, FP32

YOLO_CUSTOM_WEIGHTS = False # "checkpoints/yolov3_custom" # used in evaluate_mAP.py and custom model detection, if not using leave False

# YOLO_CUSTOM_WEIGHTS also used with TensorRT and custom model detection

YOLO_COCO_CLASSES = "mnist/mnist.names"

YOLO_STRIDES = [8, 16, 32]

YOLO_IOU_LOSS_THRESH = 0.5

YOLO_ANCHOR_PER_SCALE = 3

YOLO_MAX_BBOX_PER_SCALE = 100

YOLO_INPUT_SIZE = 416

if YOLO_TYPE == "yolov4":
    YOLO_ANCHORS = [[[12, 16], [19, 36], [40, 28]],
                    [[36, 75], [76, 55], [72, 146]],
                    [[142, 110], [192, 243], [459, 401]]]
if YOLO_TYPE == "yolov3":
    YOLO_ANCHORS = [[[10, 13], [16, 30], [33, 23]],
                    [[30, 61], [62, 45], [59, 119]],
                    [[116, 90], [156, 198], [373, 326]]]

# Train options

TRAIN_YOLO_TINY = False

TRAIN_SAVE_BEST_ONLY = True # saves only best model according validation loss (True recommended)

TRAIN_SAVE_CHECKPOINT = True # saves all best validated checkpoints in training process (may require a lot disk space) (False recommended)

TRAIN_CLASSES = "mnist/mnist.names"

TRAIN_ANNOT_PATH = "mnist/mnist_train.txt"

TRAIN_LOGDIR = "log"

TRAIN_CHECKPOINTS_FOLDER = "checkpoints"

TRAIN_MODEL_NAME = f"{YOLO_TYPE}_custom"

TRAIN_LOAD_IMAGES_TO_RAM = True # With True faster training, but need more RAM

TRAIN_BATCH_SIZE = 1

TRAIN_INPUT_SIZE = 224

TRAIN_DATA_AUG = True

TRAIN_TRANSFER = True

TRAIN_FROM_CHECKPOINT = False # "checkpoints/yolov3_custom"

TRAIN_LR_INIT = 1e-4

TRAIN_LR_END = 1e-6

TRAIN_WARMUP_EPOCHS = 2

TRAIN_EPOCHS = 100

# TEST options

TEST_ANNOT_PATH = "mnist/mnist_test.txt"

TEST_BATCH_SIZE = 1

TEST_INPUT_SIZE = 224

TEST_DATA_AUG = False

TEST_DECTECTED_IMAGE_PATH = ""

TEST_SCORE_THRESHOLD = 0.3

TEST_IOU_THRESHOLD = 0.45
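As a quick sanity check on the settings above, the input size and the strides determine how many grid cells (and therefore how many candidate boxes) the network predicts. The sketch below is plain arithmetic; the helper name `grid_summary` is made up for illustration, and it assumes a square input and three anchors per scale as in the configuration.

```python
def grid_summary(input_size, strides=(8, 16, 32), anchors_per_scale=3):
    """Return the grid side length per scale and the total number of predicted boxes."""
    grids = [input_size // s for s in strides]
    total = sum(g * g * anchors_per_scale for g in grids)
    return grids, total


grids_224, total_224 = grid_summary(224)
grids_416, total_416 = grid_summary(416)
print(grids_224, total_224)  # [28, 14, 7] 3087
print(grids_416, total_416)  # [52, 26, 13] 10647
```

So with a 224 input the three output grids are 28, 14 and 7 cells wide, rather than the 52, 26 and 13 of the default 416 input.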

Training

python train.py --model yolov4 --weights ./model/yolov4.weights

Detection

import os

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

import cv2

import numpy as np

import random

import colorsys

import time

import tensorflow as tf

from tensorflow.keras.layers import Conv2D, Input

from core.config import *

from core.yolov4 import *

YOLO_STRIDES = [8, 16, 32]

STRIDES = np.array(YOLO_STRIDES)

ANCHORS = (np.array(YOLO_ANCHORS).T/STRIDES).T

def detect_image(Yolo, image_path, output_path, input_size=416, show=False, CLASSES=YOLO_COCO_CLASSES, score_threshold=0.3, iou_threshold=0.45, rectangle_colors=''):
    original_image = cv2.imread(image_path)
    # Note: applying BGR2RGB twice restores OpenCV's original BGR channel order
    original_image = cv2.cvtColor(original_image, cv2.COLOR_BGR2RGB)
    original_image = cv2.cvtColor(original_image, cv2.COLOR_BGR2RGB)
    image_data = image_preprocess(np.copy(original_image), [input_size, input_size])
    image_data = image_data[np.newaxis, ...].astype(np.float32)
    if YOLO_FRAMEWORK == "tf":
        pred_bbox = Yolo.predict(image_data)
    elif YOLO_FRAMEWORK == "trt":
        batched_input = tf.constant(image_data)
        result = Yolo(batched_input)
        pred_bbox = []
        for key, value in result.items():
            value = value.numpy()
            pred_bbox.append(value)
    # Flatten the per-scale predictions into one (N, 5 + num_classes) tensor
    pred_bbox = [tf.reshape(x, (-1, tf.shape(x)[-1])) for x in pred_bbox]
    pred_bbox = tf.concat(pred_bbox, axis=0)
    bboxes = postprocess_boxes(pred_bbox, original_image, input_size, score_threshold)
    bboxes = nms(bboxes, iou_threshold, method='nms')
    image = draw_bbox(original_image, bboxes, CLASSES=CLASSES, rectangle_colors=rectangle_colors)
    # CreateXMLfile("XML_Detections", str(int(time.time())), original_image, bboxes, read_class_names(CLASSES))
    if output_path != '': cv2.imwrite(output_path, image)
    if show:
        # Show the image
        cv2.imshow("predicted image", image)
        # Load and hold the image
        cv2.waitKey(0)
        # To close the window after the required kill value was provided
        cv2.destroyAllWindows()
    return image

def postprocess_boxes(pred_bbox, original_image, input_size, score_threshold):
    valid_scale = [0, np.inf]
    pred_bbox = np.array(pred_bbox)
    pred_xywh = pred_bbox[:, 0:4]
    pred_conf = pred_bbox[:, 4]
    pred_prob = pred_bbox[:, 5:]
    # 1. (x, y, w, h) --> (xmin, ymin, xmax, ymax)
    pred_coor = np.concatenate([pred_xywh[:, :2] - pred_xywh[:, 2:] * 0.5,
                                pred_xywh[:, :2] + pred_xywh[:, 2:] * 0.5], axis=-1)
    # 2. (xmin, ymin, xmax, ymax) -> (xmin_org, ymin_org, xmax_org, ymax_org)
    org_h, org_w = original_image.shape[:2]
    resize_ratio = min(input_size / org_w, input_size / org_h)
    dw = (input_size - resize_ratio * org_w) / 2
    dh = (input_size - resize_ratio * org_h) / 2
    pred_coor[:, 0::2] = 1.0 * (pred_coor[:, 0::2] - dw) / resize_ratio
    pred_coor[:, 1::2] = 1.0 * (pred_coor[:, 1::2] - dh) / resize_ratio
    # 3. clip boxes that fall outside the image
    pred_coor = np.concatenate([np.maximum(pred_coor[:, :2], [0, 0]),
                                np.minimum(pred_coor[:, 2:], [org_w - 1, org_h - 1])], axis=-1)
    invalid_mask = np.logical_or((pred_coor[:, 0] > pred_coor[:, 2]), (pred_coor[:, 1] > pred_coor[:, 3]))
    pred_coor[invalid_mask] = 0
    # 4. discard invalid boxes
    bboxes_scale = np.sqrt(np.multiply.reduce(pred_coor[:, 2:4] - pred_coor[:, 0:2], axis=-1))
    scale_mask = np.logical_and((valid_scale[0] < bboxes_scale), (bboxes_scale < valid_scale[1]))
    # 5. discard boxes with low scores
    classes = np.argmax(pred_prob, axis=-1)
    scores = pred_conf * pred_prob[np.arange(len(pred_coor)), classes]
    score_mask = scores > score_threshold
    mask = np.logical_and(scale_mask, score_mask)
    coors, scores, classes = pred_coor[mask], scores[mask], classes[mask]
    return np.concatenate([coors, scores[:, np.newaxis], classes[:, np.newaxis]], axis=-1)

def bboxes_iou(boxes1, boxes2):
    boxes1 = np.array(boxes1)
    boxes2 = np.array(boxes2)
    boxes1_area = (boxes1[..., 2] - boxes1[..., 0]) * (boxes1[..., 3] - boxes1[..., 1])
    boxes2_area = (boxes2[..., 2] - boxes2[..., 0]) * (boxes2[..., 3] - boxes2[..., 1])
    left_up = np.maximum(boxes1[..., :2], boxes2[..., :2])
    right_down = np.minimum(boxes1[..., 2:], boxes2[..., 2:])
    inter_section = np.maximum(right_down - left_up, 0.0)
    inter_area = inter_section[..., 0] * inter_section[..., 1]
    union_area = boxes1_area + boxes2_area - inter_area
    ious = np.maximum(1.0 * inter_area / union_area, np.finfo(np.float32).eps)
    return ious

def draw_bbox(image, bboxes, CLASSES=YOLO_COCO_CLASSES, show_label=True, show_confidence=True, Text_colors=(255,255,0), rectangle_colors='', tracking=False):
    NUM_CLASS = read_class_names(CLASSES)
    num_classes = len(NUM_CLASS)
    image_h, image_w, _ = image.shape
    hsv_tuples = [(1.0 * x / num_classes, 1., 1.) for x in range(num_classes)]
    #print("hsv_tuples", hsv_tuples)
    colors = list(map(lambda x: colorsys.hsv_to_rgb(*x), hsv_tuples))
    colors = list(map(lambda x: (int(x[0] * 255), int(x[1] * 255), int(x[2] * 255)), colors))
    random.seed(0)
    random.shuffle(colors)
    random.seed(None)
    for i, bbox in enumerate(bboxes):
        coor = np.array(bbox[:4], dtype=np.int32)
        score = bbox[4]
        class_ind = int(bbox[5])
        bbox_color = rectangle_colors if rectangle_colors != '' else colors[class_ind]
        bbox_thick = int(0.6 * (image_h + image_w) / 1000)
        if bbox_thick < 1: bbox_thick = 1
        fontScale = 0.75 * bbox_thick
        (x1, y1), (x2, y2) = (coor[0], coor[1]), (coor[2], coor[3])
        # put object rectangle
        cv2.rectangle(image, (x1, y1), (x2, y2), bbox_color, bbox_thick*2)
        if show_label:
            # get text label
            score_str = " {:.2f}".format(score) if show_confidence else ""
            if tracking: score_str = " "+str(score)
            try:
                label = "{}".format(NUM_CLASS[class_ind]) + score_str
            except KeyError:
                print("You received KeyError, this might be that you are trying to use yolo original weights")
                print("while using custom classes, if using custom model in configs.py set YOLO_CUSTOM_WEIGHTS = True")
            # get text size
            (text_width, text_height), baseline = cv2.getTextSize(label, cv2.FONT_HERSHEY_COMPLEX_SMALL,
                                                                  fontScale, thickness=bbox_thick)
            # put filled text rectangle
            cv2.rectangle(image, (x1, y1), (x1 + text_width, y1 - text_height - baseline), bbox_color, thickness=cv2.FILLED)
            # put text above rectangle
            cv2.putText(image, label, (x1, y1-4), cv2.FONT_HERSHEY_COMPLEX_SMALL,
                        fontScale, Text_colors, bbox_thick, lineType=cv2.LINE_AA)
    return image

def nms(bboxes, iou_threshold, sigma=0.3, method='nms'):
    """
    :param bboxes: (xmin, ymin, xmax, ymax, score, class)
    Note: soft-nms, https://arxiv.org/pdf/1704.04503.pdf
          https://github.com/bharatsingh430/soft-nms
    """
    classes_in_img = list(set(bboxes[:, 5]))
    best_bboxes = []
    for cls in classes_in_img:
        cls_mask = (bboxes[:, 5] == cls)
        cls_bboxes = bboxes[cls_mask]
        # Process 1: Continue while there are bounding boxes left for this class
        while len(cls_bboxes) > 0:
            # Process 2: Select the bounding box A with the highest score
            max_ind = np.argmax(cls_bboxes[:, 4])
            best_bbox = cls_bboxes[max_ind]
            best_bboxes.append(best_bbox)
            cls_bboxes = np.concatenate([cls_bboxes[: max_ind], cls_bboxes[max_ind + 1:]])
            # Process 3: Compute the IoU between A and the remaining boxes,
            # then remove the boxes whose IoU is higher than the threshold
            iou = bboxes_iou(best_bbox[np.newaxis, :4], cls_bboxes[:, :4])
            weight = np.ones((len(iou),), dtype=np.float32)
            assert method in ['nms', 'soft-nms']
            if method == 'nms':
                iou_mask = iou > iou_threshold
                weight[iou_mask] = 0.0
            if method == 'soft-nms':
                weight = np.exp(-(1.0 * iou ** 2 / sigma))
            cls_bboxes[:, 4] = cls_bboxes[:, 4] * weight
            score_mask = cls_bboxes[:, 4] > 0.
            cls_bboxes = cls_bboxes[score_mask]
    return best_bboxes

def image_preprocess(image, target_size, gt_boxes=None):
    ih, iw = target_size
    h, w, _ = image.shape
    scale = min(iw/w, ih/h)
    nw, nh = int(scale * w), int(scale * h)
    image_resized = cv2.resize(image, (nw, nh))
    image_paded = np.full(shape=[ih, iw, 3], fill_value=128.0)
    dw, dh = (iw - nw) // 2, (ih-nh) // 2
    image_paded[dh:nh+dh, dw:nw+dw, :] = image_resized
    image_paded = image_paded / 255.
    if gt_boxes is None:
        return image_paded
    else:
        gt_boxes[:, [0, 2]] = gt_boxes[:, [0, 2]] * scale + dw
        gt_boxes[:, [1, 3]] = gt_boxes[:, [1, 3]] * scale + dh
        return image_paded, gt_boxes

def read_class_names(class_file_name):
    # loads class name from a file
    names = {}
    with open(class_file_name, 'r') as data:
        for ID, name in enumerate(data):
            names[ID] = name.strip('\n')
    return names

def decode(conv_output, NUM_CLASS, i=0):
    # where i = 0, 1 or 2 to correspond to the three grid scales
    conv_shape = tf.shape(conv_output)
    batch_size = conv_shape[0]
    output_size = conv_shape[1]
    conv_output = tf.reshape(conv_output, (batch_size, output_size, output_size, 3, 5 + NUM_CLASS))
    #conv_raw_dxdy = conv_output[:, :, :, :, 0:2] # offset of center position
    #conv_raw_dwdh = conv_output[:, :, :, :, 2:4] # Prediction box length and width offset
    #conv_raw_conf = conv_output[:, :, :, :, 4:5] # confidence of the prediction box
    #conv_raw_prob = conv_output[:, :, :, :, 5: ] # category probability of the prediction box
    conv_raw_dxdy, conv_raw_dwdh, conv_raw_conf, conv_raw_prob = tf.split(conv_output, (2, 2, 1, NUM_CLASS), axis=-1)
    # Next, build the grid. output_size is input_size / stride, e.g. 52, 26 or 13 for a 416 input
    #y = tf.range(output_size, dtype=tf.int32)
    #y = tf.expand_dims(y, -1)
    #y = tf.tile(y, [1, output_size])
    #x = tf.range(output_size,dtype=tf.int32)
    #x = tf.expand_dims(x, 0)
    #x = tf.tile(x, [output_size, 1])
    xy_grid = tf.meshgrid(tf.range(output_size), tf.range(output_size))
    xy_grid = tf.expand_dims(tf.stack(xy_grid, axis=-1), axis=2) # [gx, gy, 1, 2]
    xy_grid = tf.tile(tf.expand_dims(xy_grid, axis=0), [batch_size, 1, 1, 3, 1])
    xy_grid = tf.cast(xy_grid, tf.float32)
    #xy_grid = tf.concat([x[:, :, tf.newaxis], y[:, :, tf.newaxis]], axis=-1)
    #xy_grid = tf.tile(xy_grid[tf.newaxis, :, :, tf.newaxis, :], [batch_size, 1, 1, 3, 1])
    #y_grid = tf.cast(xy_grid, tf.float32)
    # Calculate the center position of the prediction box:
    pred_xy = (tf.sigmoid(conv_raw_dxdy) + xy_grid) * STRIDES[i]
    # Calculate the width and height of the prediction box:
    pred_wh = (tf.exp(conv_raw_dwdh) * ANCHORS[i]) * STRIDES[i]
    pred_xywh = tf.concat([pred_xy, pred_wh], axis=-1)
    pred_conf = tf.sigmoid(conv_raw_conf) # objectness confidence of the predicted box
    pred_prob = tf.sigmoid(conv_raw_prob) # per-class probabilities of the predicted box
    return tf.concat([pred_xywh, pred_conf, pred_prob], axis=-1)

def Create_Yolo(input_size=416, channels=3, training=False, CLASSES=YOLO_COCO_CLASSES):
    NUM_CLASS = len(read_class_names(CLASSES))
    input_layer = Input([input_size, input_size, channels])
    if TRAIN_YOLO_TINY:
        if YOLO_TYPE == "yolov4":
            conv_tensors = YOLOv4_tiny(input_layer, NUM_CLASS)
        if YOLO_TYPE == "yolov3":
            conv_tensors = YOLOv3_tiny(input_layer, NUM_CLASS)
    else:
        if YOLO_TYPE == "yolov4":
            conv_tensors = YOLOv4(input_layer, NUM_CLASS)
        if YOLO_TYPE == "yolov3":
            conv_tensors = YOLOv3(input_layer, NUM_CLASS)
    output_tensors = []
    for i, conv_tensor in enumerate(conv_tensors):
        pred_tensor = decode(conv_tensor, NUM_CLASS, i)
        if training: output_tensors.append(conv_tensor)
        output_tensors.append(pred_tensor)
    Yolo = tf.keras.Model(input_layer, output_tensors)
    return Yolo

def main():
    # Pick a random test image from the annotation file
    ID = random.randint(0, 200)
    label_txt = "mnist/mnist_test.txt"
    with open(label_txt) as f:
        image_info = f.readlines()[ID].split()
    image_path = image_info[0]
    yolo = Create_Yolo(input_size=YOLO_INPUT_SIZE, CLASSES=TRAIN_CLASSES)
    yolo.load_weights("./checkpoints/yolov4_custom/yolov4") # use keras weights
    detect_image(yolo, image_path, "txt.jpg", input_size=YOLO_INPUT_SIZE, show=True, CLASSES=TRAIN_CLASSES, rectangle_colors=(255,0,0))

main()
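To see what the IoU and NMS steps in the script do, here is a small self-contained sketch on three toy boxes. It is a simplified, single-class version written only for illustration (the names `iou` and `greedy_nms` and the sample boxes are not part of the project code), using the same 0.45 IoU threshold as `detect_image`.

```python
import numpy as np


def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (xmin, ymin, xmax, ymax)."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    left_up = np.maximum(box[:2], boxes[:, :2])
    right_down = np.minimum(box[2:], boxes[:, 2:])
    # Negative extents mean no overlap, so clamp them to zero
    inter = np.prod(np.maximum(right_down - left_up, 0.0), axis=-1)
    return inter / (area + areas - inter)


def greedy_nms(boxes, scores, iou_threshold=0.45):
    """Return the indices of the kept boxes, highest score first."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        # Drop every remaining box that overlaps the kept one too much
        order = rest[overlaps <= iou_threshold]
    return keep


boxes = np.array([[10, 10, 50, 50],
                  [12, 12, 52, 52],
                  [100, 100, 140, 140]], dtype=np.float32)
scores = np.array([0.9, 0.8, 0.7])
kept = greedy_nms(boxes, scores)
print(kept)  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is suppressed, while the third box does not overlap and is kept.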

Converting the model to pb

import os

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

import numpy as np

import sys

import tensorflow as tf

from core.yolov4 import YOLOv4

from tensorflow.keras.layers import Input

TRAIN_CLASSES = "mnist/mnist.names"

TRAIN_YOLO_TINY = False

YOLO_TYPE = "yolov4"

STRIDES = [8, 16, 32]

ANCHORS = [[[12, 16], [19, 36], [40, 28]],

[[36, 75], [76, 55], [72, 146]],

[[142,110], [192, 243], [459, 401]]]

STRIDES = np.array(STRIDES)

ANCHORS = (np.array(ANCHORS).T/STRIDES).T

def decode_tf(conv_output, output_size, NUM_CLASS, STRIDES, ANCHORS, i=0, XYSCALE=[1, 1, 1]):
    batch_size = tf.shape(conv_output)[0]
    conv_output = tf.reshape(conv_output,
                             (batch_size, output_size, output_size, 3, 5 + NUM_CLASS))
    conv_raw_dxdy, conv_raw_dwdh, conv_raw_conf, conv_raw_prob = tf.split(conv_output, (2, 2, 1, NUM_CLASS),
                                                                          axis=-1)
    xy_grid = tf.meshgrid(tf.range(output_size), tf.range(output_size))
    xy_grid = tf.expand_dims(tf.stack(xy_grid, axis=-1), axis=2) # [gx, gy, 1, 2]
    xy_grid = tf.tile(tf.expand_dims(xy_grid, axis=0), [batch_size, 1, 1, 3, 1])
    xy_grid = tf.cast(xy_grid, tf.float32)
    pred_xy = ((tf.sigmoid(conv_raw_dxdy) * XYSCALE[i]) - 0.5 * (XYSCALE[i] - 1) + xy_grid) * \
              STRIDES[i]
    # ANCHORS was divided by the strides above, so multiply back to get pixels
    # (this matches decode() in the detection script)
    pred_wh = (tf.exp(conv_raw_dwdh) * ANCHORS[i]) * STRIDES[i]
    pred_xywh = tf.concat([pred_xy, pred_wh], axis=-1)
    pred_conf = tf.sigmoid(conv_raw_conf)
    pred_prob = tf.sigmoid(conv_raw_prob)
    pred_prob = pred_conf * pred_prob
    pred_prob = tf.reshape(pred_prob, (batch_size, -1, NUM_CLASS))
    pred_xywh = tf.reshape(pred_xywh, (batch_size, -1, 4))
    return pred_xywh, pred_prob

def decode(conv_output, output_size, NUM_CLASS, i, XYSCALE=[1,1,1], FRAMEWORK='tf'):
    if FRAMEWORK == 'trt':
        return decode_trt(conv_output, output_size, NUM_CLASS, STRIDES, ANCHORS, i=i, XYSCALE=XYSCALE)
    elif FRAMEWORK == 'tflite':
        return decode_tflite(conv_output, output_size, NUM_CLASS, STRIDES, ANCHORS, i=i, XYSCALE=XYSCALE)
    else:
        return decode_tf(conv_output, output_size, NUM_CLASS, STRIDES, ANCHORS, i=i, XYSCALE=XYSCALE)

def read_class_names(class_file_name):
    # loads class name from a file
    names = {}
    with open(class_file_name, 'r') as data:
        for ID, name in enumerate(data):
            names[ID] = name.strip('\n')
    return names

def Create_Yolo(input_size=416, channels=3, training=False, CLASSES=TRAIN_CLASSES):
    NUM_CLASS = len(read_class_names(CLASSES))
    input_layer = Input([input_size, input_size, channels])
    if TRAIN_YOLO_TINY:
        if YOLO_TYPE == "yolov4":
            conv_tensors = YOLOv4_tiny(input_layer, NUM_CLASS)
    else:
        if YOLO_TYPE == "yolov4":
            conv_tensors = YOLOv4(input_layer, NUM_CLASS)
    output_tensors = []
    for i, conv_tensor in enumerate(conv_tensors):
        # decode() expects the grid size of this scale (input_size / stride), not the input size
        pred_tensor = decode(conv_tensor, input_size // int(STRIDES[i]), NUM_CLASS, i)
        if training: output_tensors.append(conv_tensor)
        output_tensors.append(pred_tensor)
    Yolo = tf.keras.Model(input_layer, output_tensors)
    return Yolo

yolo = Create_Yolo(input_size=224, CLASSES=TRAIN_CLASSES)

Darknet_weights="checkpoints/yolo224/yolov4"

yolo.load_weights(Darknet_weights) # use custom weights

yolo.summary()

yolo.save('./checkpoints/my_model')

print("model saved to ./checkpoints/my_model")

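Note: the conversion itself (Keras model -> pb model) succeeds, but calling the converted model raises an error that has not been resolved yet.

For now, the Keras model is used.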
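For reference, the box decoding that both scripts rely on can be checked by hand with NumPy. The sketch below decodes a single raw prediction for one grid cell; the function name `decode_cell` and the sample values are made up for illustration, and it assumes the plain formula with `XYSCALE` equal to 1.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def decode_cell(raw_xy, raw_wh, cell_xy, anchor_wh, stride):
    """Decode one raw prediction into (cx, cy, w, h) in input-image pixels.

    anchor_wh is given in pixels. The detection script's decode() stores the
    anchors divided by the stride and multiplies by the stride again, which
    gives the same result.
    """
    # Center: sigmoid offset inside the cell plus the cell index, scaled by the stride
    xy = (sigmoid(np.asarray(raw_xy, dtype=float)) + np.asarray(cell_xy, dtype=float)) * stride
    # Size: exponential of the raw value times the anchor size
    wh = np.exp(np.asarray(raw_wh, dtype=float)) * np.asarray(anchor_wh, dtype=float)
    return np.concatenate([xy, wh])


box = decode_cell(raw_xy=[0.0, 0.0], raw_wh=[0.0, 0.0],
                  cell_xy=[3, 2], anchor_wh=[12, 16], stride=8)
print(box)  # [28. 20. 12. 16.]
```

With all raw outputs at zero, the box sits in the middle of cell (3, 2) on the stride-8 grid and has exactly the anchor's size.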

My project

https://download.csdn.net/download/qq_38641985/18963831