Advances in Graph Neural Networks笔记4:Heterogeneous Graph Neural Networks

诸神缄默不语-个人CSDN博文目录

本书网址:https://link.springer.com/book/10.1007/978-3-031-16174-2

本文是本书第四章的学习笔记。

感觉这一章写得不怎么样。以研究生组会讲异质图神经网络主题论文作为标准的话,倒是还行,介绍了HGNN的常见范式和三个经典模型。以教材或综述作为标准的话,建议别买。

文章目录

- 1. HGNN

- 2. heterogeneous graph propagation network (hpn)

-

- 2.1 semantic confusion

- 2.2 HPN模型架构

- 2.3 HPN损失函数

- 2. distance encoding-based heterogeneous graph neural network (DHN)

-

- 2.1 HDE概念定义

- 2.2 DHN+链路预测

- 3. self-supervised heterogeneous graph neural network with co-contrastive learning (HeCo)

- 4. Further Reading

1. HGNN

两步信息聚合:

对目标节点

- node-level:对每个metapath聚合所有邻居节点

- semantic level:聚合所有metapath表征

本文介绍的方法关注两个问题:deep degradation phenomenon and discriminative power

2. heterogeneous graph propagation network (hpn)

分析deep degradation phenomenon问题,减缓semantic confusion

2.1 semantic confusion

semantic confusion类似同质图GNN中的过拟合问题

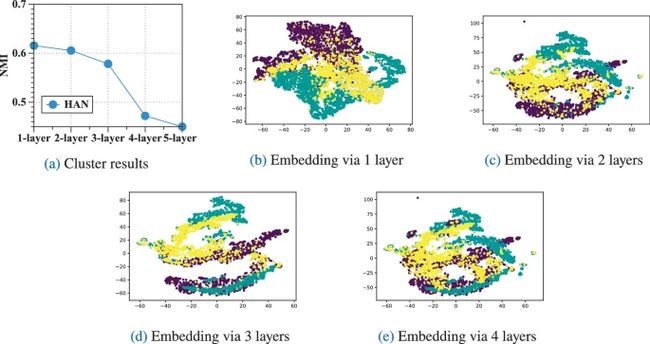

HAN模型在不同层上的聚类结果和论文节点表征的可视化图像,每种颜色代表一种标签(研究领域)。

(本文中对semantic confusion现象的解释很含混,略)

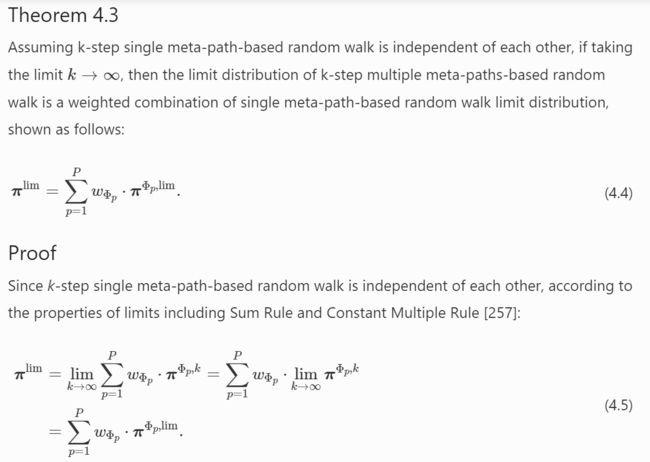

HPN一文证明了HGNN和mutiple meta-paths-based random walk是等价的:

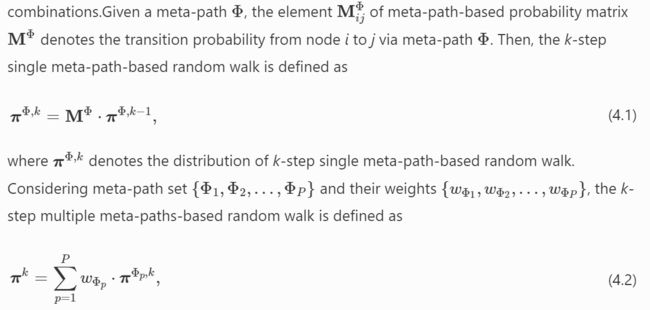

multiple meta-paths-based random walk(感觉跟同质图随机游走也差不太多嘛,就是多个用metapath处理如同质图的随机游走概率叠起来):

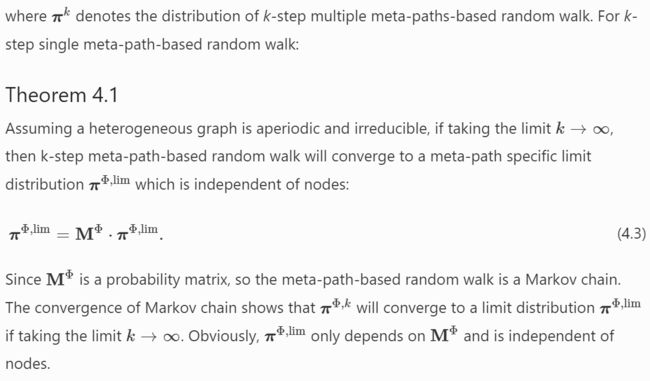

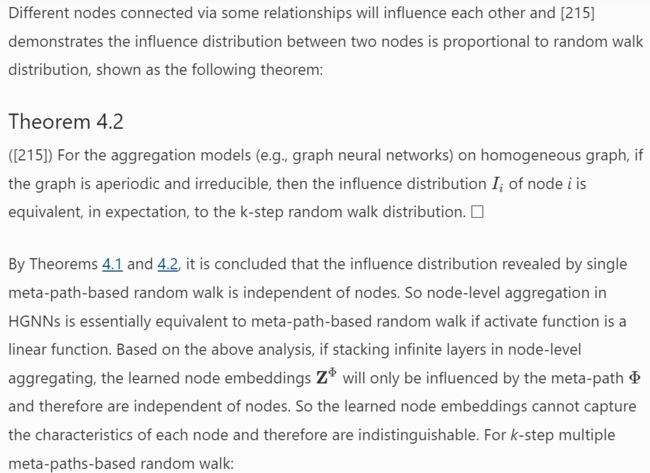

加上步数到极限以后,数学部分我就不太懂了。总之单看表示形式的话依然跟同质图随机游走相似,意思就是说最终分布只与metapaths有关,与初始状态无关:

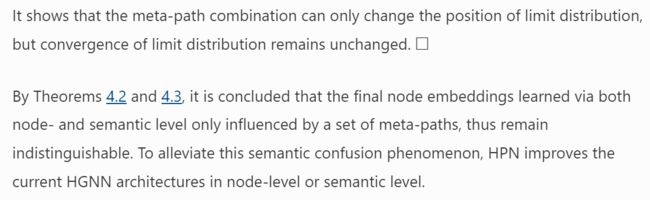

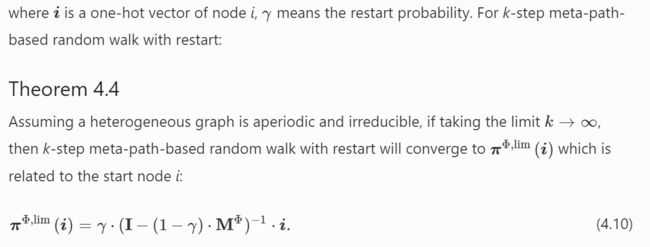

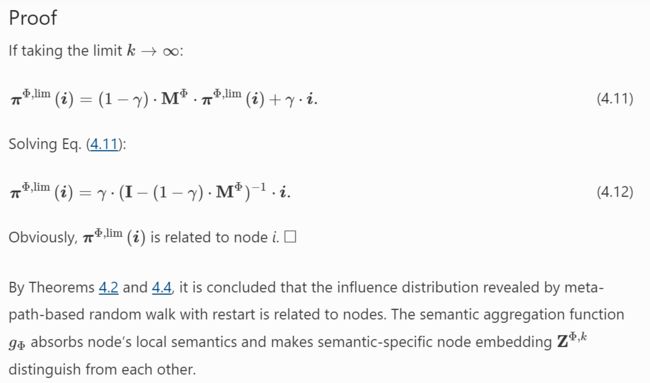

与之相对,meta-path-based random walk with restart的极限分布是与初始状态有关的:

meta-path-based random walk with restart: π Φ , k ( i ) = ( 1 − γ ) ⋅ M Φ ⋅ π Φ , k − 1 ( i ) + γ ⋅ i \boldsymbol{\pi }^{\Phi ,k}\left( \boldsymbol{i}\right) =(1-\gamma )\cdot {\textbf{M}^{\Phi }}\cdot \boldsymbol{\pi }^{\Phi ,k-1} \left( \boldsymbol{i}\right) +\gamma \cdot \boldsymbol{i} πΦ,k(i)=(1−γ)⋅MΦ⋅πΦ,k−1(i)+γ⋅i

2.2 HPN模型架构

HPN包含两部分:

- semantic propagation mechanism:除基于meta-path-based neighbors聚合信息外,还以一合适的权重吸收节点的局部语义信息,这样即使层数加深也能学习节点的局部信息(我说这逻辑听起来像APPNP啊)

- semantic fusion mechanism:学习metapaths的重要性

- Semantic Propagation Mechanism

参考meta-path-based random walk with restart:

对每个metapath邻居,先对节点做线性转换,然后聚合邻居信息(一式是底下两式的加总):

Z Φ = P Φ ( X ) = g Φ ( f Φ ( X ) ) H Φ = f Φ ( X ) = σ ( X ⋅ W Φ + b Φ ) Z Φ , k = g Φ ( Z Φ , k − 1 ) = ( 1 − γ ) ⋅ M Φ ⋅ Z Φ , k − 1 + γ ⋅ H Φ \begin{aligned} &\textbf{Z}^{\Phi } = \mathcal {P}_{\Phi }(\textbf{X}) =g_{\Phi }({f_{\Phi }(\textbf{X}))}\\ &\textbf{H}^{\Phi }= f_{\Phi }(\textbf{X})=\sigma (\textbf{X} \cdot \textbf{W}^{\Phi }+\textbf{b}^{\Phi })\\ &\textbf{Z}^{\Phi ,k} = g_{\Phi }(\textbf{Z}^{\Phi ,k-1}) =(1-\gamma )\cdot {\textbf{M}^{\Phi }}\cdot \textbf{Z}^{\Phi ,k-1} + \gamma \cdot \textbf{H}^{\Phi } \end{aligned} ZΦ=PΦ(X)=gΦ(fΦ(X))HΦ=fΦ(X)=σ(X⋅WΦ+bΦ)ZΦ,k=gΦ(ZΦ,k−1)=(1−γ)⋅MΦ⋅ZΦ,k−1+γ⋅HΦ - Semantic Fusion Mechanism:注意力机制

Z = F ( Z Φ 1 , Z Φ 2 , … , Z Φ P ) w Φ p = 1 ∣ V ∣ ∑ i ∈ V q T ⋅ tanh ( W ⋅ z i Φ p + b ) β Φ p = exp ( w Φ p ) ∑ p = 1 P exp ( w Φ p ) Z = ∑ p = 1 P β Φ p ⋅ Z Φ p \begin{aligned} &\textbf{Z}=\mathcal {F}(\textbf{Z}^{\Phi _1},\textbf{Z}^{\Phi _2},\ldots ,\textbf{Z}^{\Phi _{P}})\\ &w_{\Phi _p} =\frac{1}{|\mathcal {V}|}\sum _{i \in \mathcal {V}} \textbf{q}^\textrm{T} \cdot \tanh ( \textbf{W} \cdot \textbf{z}_{i}^{\Phi _p} +\textbf{b})\\ &\beta _{\Phi _p}=\frac{\exp (w_{\Phi _p})}{\sum _{p=1}^{P} \exp (w_{\Phi _p})}\\ &\textbf{Z}=\sum _{p=1}^{P} \beta _{\Phi _p}\cdot \textbf{Z}^{\Phi _p} \end{aligned} Z=F(ZΦ1,ZΦ2,…,ZΦP)wΦp=∣V∣1i∈V∑qT⋅tanh(W⋅ziΦp+b)βΦp=∑p=1Pexp(wΦp)exp(wΦp)Z=p=1∑PβΦp⋅ZΦp

2.3 HPN损失函数

半监督节点分类的损失函数:

L = − ∑ l ∈ Y L Y l ⋅ ln ( Z l ⋅ C ) \mathcal {L}=-\sum _{l \in \mathcal {Y}_{L}} \textbf{Y}_{l} \cdot \ln ( \textbf{Z}_{l} \cdot \textbf{C}) L=−l∈YL∑Yl⋅ln(Zl⋅C)

无监督节点推荐(?这什么任务)的损失函数,BPR loss with negative sampling:

L = − ∑ ( u , v ) ∈ Ω log σ ( z u ⊤ z v ) − ∑ ( u , v ′ ) ∈ Ω − log σ ( − z u ⊤ z v ′ ) , \begin{aligned} \mathcal {L}=-\sum _{(u, v) \in \Omega } \log \sigma \left( \textbf{z}_{u}^{\top } \textbf{z}_{v}\right) -\sum _{\left( u^{}, v^{\prime }\right) \in \Omega ^{-}} \log \sigma \left( -\textbf{z}_{u}^{\top } \textbf{z}_{v'}\right) , \end{aligned} L=−(u,v)∈Ω∑logσ(zu⊤zv)−(u,v′)∈Ω−∑logσ(−zu⊤zv′),

第一项是observed (positive) node pairs,第二项是negative node pairs sampled from all unobserved node pairs

(HPN实验部分略。简单地说,做了ablation study,做了无监督节点聚类任务,验证了模型对层级加深的鲁棒性(与HAN对比))

2. distance encoding-based heterogeneous graph neural network (DHN)

关注discriminative power:在聚合时加入heterogeneous distance encoding (HDE)(distance encoding (DE) 也是一个同质图GNN中用过的概念)

Heterogeneous Graph Neural Network with Distance Encoding

传统HGNN嵌入各个节点,本文关注节点之间的关联。

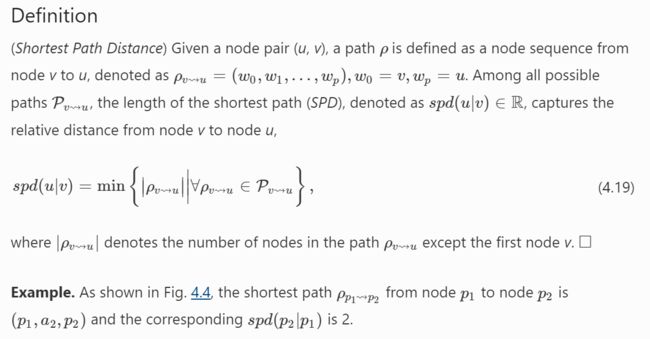

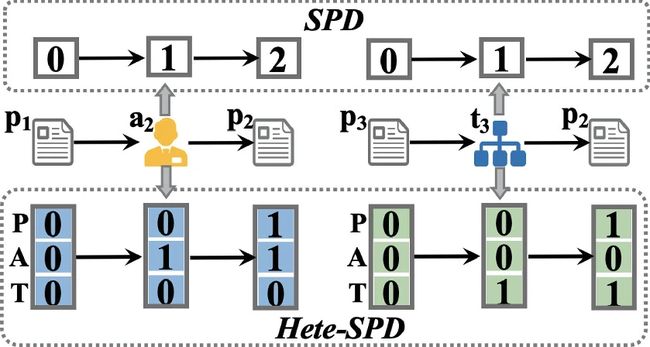

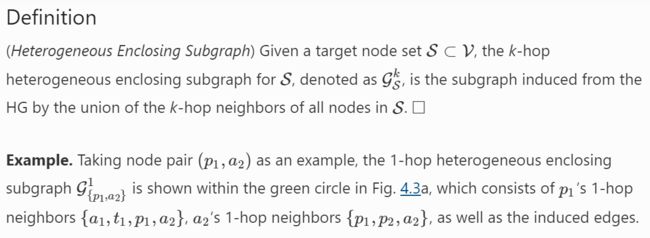

2.1 HDE概念定义

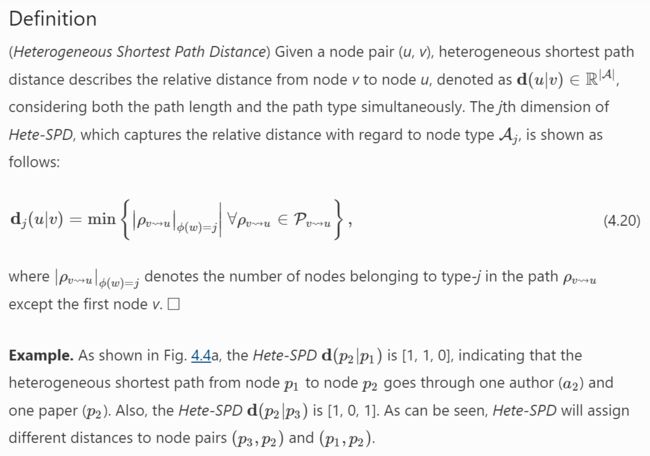

heterogeneous shortest path distance:节点数最少路径的节点数

HDE

异质图最短路径距离Hete-SPD:每一维度衡量一类节点在最短路径中出现的次数(不包括第一个节点)

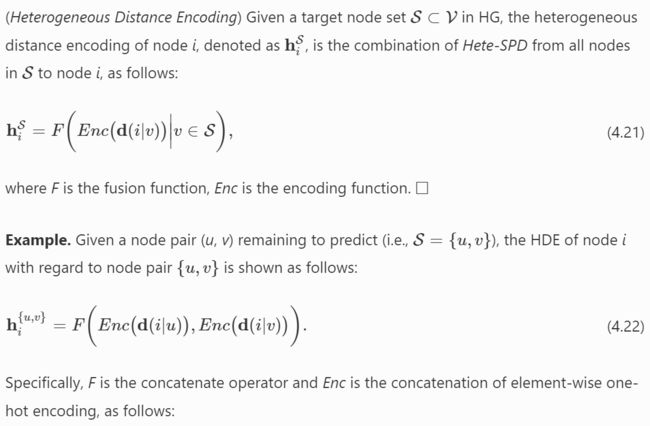

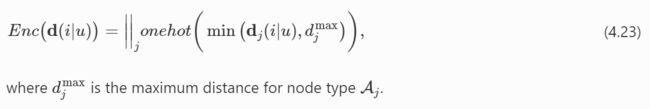

目标节点对锚节点(组)的HDE:目标节点对所有锚节点Hete-SPD先嵌入,后融合

2.2 DHN+链路预测

Heterogeneous Distance Encoding for Link Prediction

目标节点分别是节点对的两个节点,锚节点组也就是这两个节点。

- Node Embedding Initialization:HDE

- Heterogeneous distance encoding:仅计算k-hop heterogeneous enclosing subgraph的HDE

- Heterogeneous type encoding

c i = o n e h o t ( ϕ ( i ) ) e i { u , v } = σ ( W 0 ⋅ c i ∣ ∣ h i { u , v } + b 0 ) \begin{aligned} &\textbf{c}_{i} = onehot(\phi (i))\\ &\textbf{e}_i^{\{u, v\}} =\sigma (\textbf{W}_0 \cdot \textbf{c}_i||\textbf{h}_i^{\{u, v\}} + \textbf{b}_0) \end{aligned} ci=onehot(ϕ(i))ei{u,v}=σ(W0⋅ci∣∣hi{u,v}+b0) - Heterogeneous Graph Convolution:先抽样邻居节点

x u , l { u , v } = σ ( W l ⋅ ( x u , l − 1 { u , v } ∣ ∣ A v g { x i , l − 1 { u , v } } + b l ) , ∀ i ∈ N u { u , v } \begin{aligned} \textbf{x}_{u, l}^{\{u, v\}}=\sigma (\textbf{W}^{l} \cdot (\textbf{x}_{u,l-1}^{\{u, v\}}|| Avg \{\textbf{x}_{i,l-1}^{\{u, v\}}\} +\textbf{b}^{l}), \forall i \in \mathcal {N}^{\{u, v\}}_u \end{aligned} xu,l{u,v}=σ(Wl⋅(xu,l−1{u,v}∣∣Avg{xi,l−1{u,v}}+bl),∀i∈Nu{u,v} - Loss Function and Optimization

y ^ u , v = σ ( W 1 ⋅ ( z u { u , v } ∣ ∣ z v { u , v } ) + b 1 ) L = ∑ ( u , v ) ∈ E + ∪ E − ( y u , v log y ^ u , v + ( 1 − y u , v ) log ( 1 − y ^ u , v ) ) \begin{aligned} &\hat{y}_{u,v}=\sigma \left( \textbf{W}_1 \cdot (\textbf{z}_u^{\{u, v\}}||\textbf{z}_v^{\{u, v\}}) +b_1\right)\\ &\mathcal {L}=\sum _{(u, v) \in \mathcal {E}^+\cup \mathcal {E}^-}\left( y_{u, v} \log \hat{y}_{u, v}+\left( 1-y_{u, v}\right) \log \left( 1-\hat{y}_{u, v}\right) \right) \end{aligned} y^u,v=σ(W1⋅(zu{u,v}∣∣zv{u,v})+b1)L=(u,v)∈E+∪E−∑(yu,vlogy^u,v+(1−yu,v)log(1−y^u,v))

(实验部分略。同时做了transductive和inductive场合下的链路预测任务)

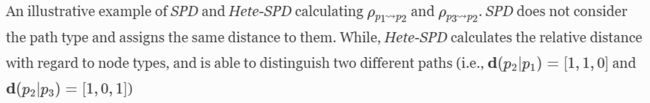

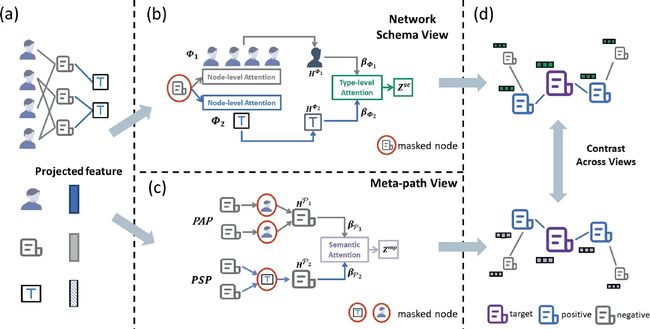

3. self-supervised heterogeneous graph neural network with co-contrastive learning (HeCo)

关注discriminative power:使用cross-view contrastive mechanism同时捕获局部和高阶结构

以前的工作:原网络VS破坏后的网络

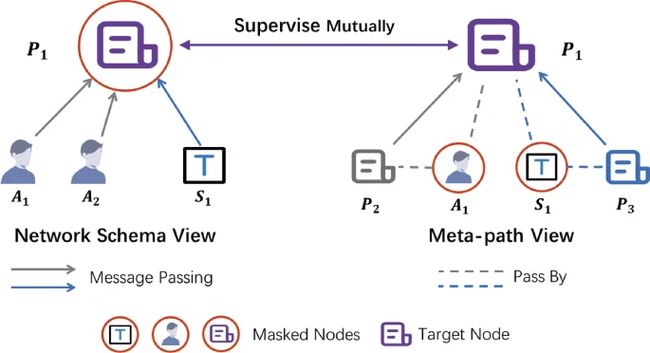

HeCo选择的view:network schema(局部结构) and meta-path structure(高阶结构)

从两个view编码节点(编码时应用view mask mechanism),在两个view-specific embeddings之间应用对比学习。

- Node Feature Transformation:每种节点都映射到固定维度

h i = σ ( W ϕ i ⋅ x i + b ϕ i ) \begin{aligned} h_i=\sigma \left( W_{\phi _i} \cdot x_i+b_{\phi _i}\right)\end{aligned} hi=σ(Wϕi⋅xi+bϕi) - Network Schema View Guided Encoder

attention:节点级别和类别级别

对某一metapath的邻居(在实践中是抽样一部分节点),应用节点级别attention:

h i Φ m = σ ( ∑ j ∈ N i Φ m α i , j Φ m ⋅ h j ) α i , j Φ m = exp ( L e a k y R e L U ( a Φ m ⊤ ⋅ [ h i ∣ ∣ h j ] ) ) ∑ l ∈ N i Φ m exp ( L e a k y R e L U ( a Φ m ⊤ ⋅ [ h i ∣ ∣ h l ] ) ) \begin{aligned} &h_i^{\Phi _m}=\sigma \left( \sum \limits _{j \in N_i^{\Phi _m}}\alpha _{i,j}^{\Phi _m} \cdot h_j\right)\\ &\alpha _{i,j}^{\Phi _m}=\frac{\exp \left( LeakyReLU\left( {\textbf {a}}_{\Phi _m}^\top \cdot [h_i||h_j]\right) \right) }{\sum \limits _{l\in N_i^{\Phi _m}} \exp \left( LeakyReLU\left( {\textbf {a}}_{\Phi _m}^\top \cdot [h_i||h_l]\right) \right) } \end{aligned} hiΦm=σ⎝⎛j∈NiΦm∑αi,jΦm⋅hj⎠⎞αi,jΦm=l∈NiΦm∑exp(LeakyReLU(aΦm⊤⋅[hi∣∣hl]))exp(LeakyReLU(aΦm⊤⋅[hi∣∣hj]))

对所有metapath的嵌入,应用类别级别attention:

w Φ m = 1 ∣ V ∣ ∑ i ∈ V a s c ⊤ ⋅ tanh ( W s c h i Φ m + b s c ) β Φ m = exp ( w Φ m ) ∑ i = 1 S exp ( w Φ i ) z i s c = ∑ m = 1 S β Φ m ⋅ h i Φ m \begin{aligned} w_{\Phi _m}&=\frac{1}{|V|}\sum \limits _{i\in V} {\textbf {a}}_{sc}^\top \cdot \tanh \left( {\textbf {W}}_{sc}h_i^{\Phi _m}+{\textbf {b}}_{sc}\right) \\ \beta _{\Phi _m}&=\frac{\exp \left( w_{\Phi _m}\right) }{\sum _{i=1}^S\exp \left( w_{\Phi _i}\right) }\\ z_i^{sc}&=\sum _{m=1}^S \beta _{\Phi _m}\cdot h_i^{\Phi _m} \end{aligned} wΦmβΦmzisc=∣V∣1i∈V∑asc⊤⋅tanh(WschiΦm+bsc)=∑i=1Sexp(wΦi)exp(wΦm)=m=1∑SβΦm⋅hiΦm

- Meta-Path View Guided Encoder

meta-path表示语义相似性,用meta-path specific GCN编码:

h i P n = 1 d i + 1 h i + ∑ j ∈ N i P n 1 ( d i + 1 ) ( d j + 1 ) h j \begin{aligned} h_i^{\mathcal {P}_n}=\frac{1}{d_i+1}h_i+\sum \limits _{j\in {N_i^{\mathcal {P}_n}}}\frac{1}{\sqrt{(d_i+1)(d_j+1)}}h_j \end{aligned} hiPn=di+11hi+j∈NiPn∑(di+1)(dj+1)1hj

在所有metapath的表征上加attention:

z i m p = ∑ n = 1 M β P n ⋅ h i P n w P n = 1 ∣ V ∣ ∑ i ∈ V a m p ⊤ ⋅ tanh ( W m p h i P n + b m p ) β P n = exp ( w P n ) ∑ i = 1 M exp ( w P i ) \begin{aligned} z_i^{mp}&=\sum _{n=1}^M \beta _{\mathcal {P}_n}\cdot h_i^{\mathcal {P}_n}\\ w_{\mathcal {P}_n}&=\frac{1}{|V|}\sum \limits _{i\in V} {\textbf {a}}_{mp}^\top \cdot \tanh \left( {\textbf {W}}_{mp}h_i^{\mathcal {P}_n}+{\textbf {b}}_{mp}\right) \\ \beta _{\mathcal {P}_n}&=\frac{\exp \left( w_{\mathcal {P}_n}\right) }{\sum _{i=1}^M\exp \left( w_{\mathcal {P}_i}\right) } \end{aligned} zimpwPnβPn=n=1∑MβPn⋅hiPn=∣V∣1i∈V∑amp⊤⋅tanh(WmphiPn+bmp)=∑i=1Mexp(wPi)exp(wPn) - 增加对比难度:view mask mechanism

network schema view:不使用目标节点本身上一级别的嵌入

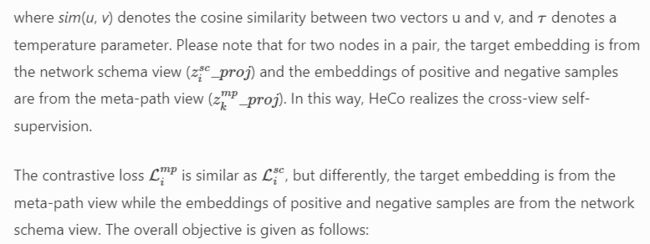

meta-path view:不使用与目标节点不同类(不是目标节点基于metapath的邻居的节点)的嵌入 - Collaboratively Contrastive Optimization

损失函数:传统对比学习+图数据

将两个view得到的嵌入映射到对比学习的隐空间:

z i s c _ p r o j = W ( 2 ) σ ( W ( 1 ) z i s c + b ( 1 ) ) + b ( 2 ) z i m p _ p r o j = W ( 2 ) σ ( W ( 1 ) z i m p + b ( 1 ) ) + b ( 2 ) \begin{aligned} \begin{aligned} z_i^{sc}\_proj&= W^{(2)}\sigma \left( W^{(1)}z_i^{sc}+b^{(1)}\right) +b^{(2)}\\ z_i^{mp}\_proj&= W^{(2)}\sigma \left( W^{(1)}z_i^{mp}+b^{(1)}\right) +b^{(2)} \end{aligned} \end{aligned} zisc_projzimp_proj=W(2)σ(W(1)zisc+b(1))+b(2)=W(2)σ(W(1)zimp+b(1))+b(2)

计算两个节点之间连的metapath数: C i ( j ) = ∑ n = 1 M 1 ( j ∈ N i P n ) \mathbb {C}_i(j) = \sum \limits _{n=1}^M \mathbb {1}\left( j\in N_i^{\mathcal {P}_n}\right) Ci(j)=n=1∑M1(j∈NiPn)( 1 ( ⋅ ) \mathbb {1}(\cdot ) 1(⋅)是indicator function)

将与节点i有metapath相连的节点组成集合 S i = { j ∣ j ∈ V a n d C i ( j ) ≠ 0 } S_i=\{j|j\in V\ and\ \mathbb {C}_i(j)\ne 0\} Si={j∣j∈V and Ci(j)=0},基于 C i ( ⋅ ) \mathbb {C}_i(\cdot ) Ci(⋅)数降序排列,如果节点集合元素数大于某一阈值,选出该阈值数个正样本 P i \mathbb {P}_i Pi,没选出来的作为负样本 N i \mathbb {N}_i Ni

计算network schema view的对比损失:

L i s c = − log ∑ j ∈ P i e x p ( s i m ( z i s c _ p r o j , z j m p _ p r o j ) / τ ) ∑ k ∈ { P i ⋃ N i } e x p ( s i m ( z i s c _ p r o j , z k m p _ p r o j ) / τ ) \begin{aligned} \mathcal {L}_i^{sc}=-\log \frac{\sum _{j\in \mathbb {P}_i}exp\left( sim\left( z_i^{sc}\_proj,z_j^{mp}\_proj\right) /\tau \right) }{\sum _{k\in \{\mathbb {P}_i\bigcup \mathbb {N}_i\}}exp\left( sim\left( z_i^{sc}\_proj,z_k^{mp}\_proj\right) /\tau \right) }\end{aligned} Lisc=−log∑k∈{Pi⋃Ni}exp(sim(zisc_proj,zkmp_proj)/τ)∑j∈Piexp(sim(zisc_proj,zjmp_proj)/τ)

总的学习目标:

J = 1 ∣ V ∣ ∑ i ∈ V [ λ ⋅ L i s c + ( 1 − λ ) ⋅ L i m p ] \begin{aligned} \mathcal {J} = \frac{1}{|V|}\sum _{i\in V}\left[ \lambda \cdot \mathcal {L}_i^{sc}+\left( 1-\lambda \right) \cdot \mathcal {L}_i^{mp}\right] \end{aligned} J=∣V∣1i∈V∑[λ⋅Lisc+(1−λ)⋅Limp]

(和前两篇一样,实验部分略。重要比较baseline有single-view的对比学习方法DMGI。做了节点分类任务,和Collaborative Trend Analysis(计算随训练epoch数增加,attention变化情况。可以发现两个view上attention是协同演变的))

4. Further Reading

惯例啦,因为参考文献序号匹配不上,所以不确定我找的论文 是否正确。

分层级聚合/intent recommendation/文本分类/GTN-软择边类型、自动生成metapaths/HGT-用heterogeneous mutual attention聚合meta-relation triplet/MAGNN-用relational rotation encoder聚合meta-path instances

- Neural graph collaborative filtering

- HGNN: Hyperedge-based graph neural network for MOOC Course Recommendation

- COSNET: Connecting Heterogeneous Social Networks with Local and Global Consistency

- Metapath-guided Heterogeneous Graph Neural Network for Intent Recommendation

- IntentGC: a Scalable Graph Convolution Framework Fusing Heterogeneous Information for Recommendation

- Heterogeneous Graph Attention Networks for Semi-supervised Short Text Classification

- Graph Transformer Networks

- Heterogeneous Graph Transformer

- MAGNN: Metapath Aggregated Graph Neural Network for Heterogeneous Graph Embedding:聚合每个metapath实例内所有节点的信息,然后聚合到每个metapath上,然后将所有metapath表征聚合到目标节点上