TurtleBot3 Line-Following Robot



- Preface

- I. Installing TurtleBot3

- II. Using the sensors

- III. Line-following design

- IV. Line-following scripts

- Summary

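Preface

The TurtleBot series has gone through several generations. The earlier TurtleBot versions target Ubuntu 16.04, while Ubuntu 18.04 is now the more widely used release, so I chose TurtleBot3 for this project.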

I. Installing TurtleBot3

1. Create the workspace and package

(1) Create the wanderbot_ws workspace

mkdir -p ~/wanderbot_ws/src

cd ~/wanderbot_ws/src

catkin_init_workspace

(2) Create the wanderbot package

cd ~/wanderbot_ws/src

catkin_create_pkg wanderbot rospy geometry_msgs sensor_msgs

2. Installation

(1) Install TurtleBot3 and the simulation environment

cd ~/wanderbot_ws/src

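git clone -b melodic-devel https://github.com/ROBOTIS-GIT/turtlebot3_msgs.git

git clone -b melodic-devel https://github.com/ROBOTIS-GIT/turtlebot3.git

git clone -b melodic-devel https://github.com/ROBOTIS-GIT/turtlebot3_simulations.git

(The melodic-devel branches match ROS Melodic on Ubuntu 18.04; the default branches may target newer ROS versions and fail to build here.)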

(2) Install the gmapping packages

cd ~/wanderbot_ws/src

git clone https://github.com/ros-perception/openslam_gmapping.git

git clone https://github.com/ros-perception/slam_gmapping.git

git clone https://github.com/ros-planning/navigation.git

git clone https://github.com/ros/geometry2.git

git clone https://github.com/ros-planning/navigation_msgs.git

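3. Build

cd ~/wanderbot_ws

catkin_make

source devel/setup.bash

Sourcing devel/setup.bash lets roslaunch and rosrun find the packages built in this workspace; repeat it in every new terminal.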

II. Using the sensors

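(1) Open the world map. The same launch file also starts the Gazebo simulation environment:

roslaunch turtlebot3_gazebo turtlebot3_world.launch

(2) If launching fails with this error:

RLException: Invalid <arg> tag: environment variable 'TURTLEBOT3_MODEL' is not set. Arg xml is <arg default="$(env TURTLEBOT3_MODEL)" doc="model type [burger, waffle, waffle_pi]" name="model"/>
The traceback for the exception was written to the log file

set the robot model in that terminal and launch again:

export TURTLEBOT3_MODEL=waffle

Any of burger, waffle or waffle_pi clears the error, but burger has no camera, so use waffle for the camera and depth steps in this post. The variable only lasts for the current terminal; add the export line to ~/.bashrc to make it permanent.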
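Because the later scripts read the camera, it can help to check the model before launching anything. Below is a minimal sketch of such a pre-flight check, assuming you call it from your own Python tooling; `check_turtlebot3_model` is a hypothetical helper, not part of the TurtleBot3 packages, and the set of camera-equipped models is an assumption based on burger being the lidar-only variant.

```python
import os

# Values accepted by the TurtleBot3 launch files (from the error message's doc string).
VALID_MODELS = {"burger", "waffle", "waffle_pi"}
# Assumption: only the waffle variants carry a camera in simulation.
CAMERA_MODELS = {"waffle", "waffle_pi"}


def check_turtlebot3_model(env=None, need_camera=True):
    """Return the TURTLEBOT3_MODEL value, or raise ValueError with a hint on how to fix it."""
    env = os.environ if env is None else env
    model = env.get("TURTLEBOT3_MODEL")
    if not model:
        raise ValueError("TURTLEBOT3_MODEL is not set; run: export TURTLEBOT3_MODEL=waffle")
    if model not in VALID_MODELS:
        raise ValueError("unknown model %r; expected one of %s" % (model, sorted(VALID_MODELS)))
    if need_camera and model not in CAMERA_MODELS:
        raise ValueError("model %r has no camera; use waffle or waffle_pi" % model)
    return model
```

Calling `check_turtlebot3_model()` with no arguments reads the real environment, so it raises the same kind of hint roslaunch would, but before Gazebo starts.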

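(3) The DepthCloud option

In RViz, add a DepthCloud display to show the depth camera data; an Image display can show either the depth image or the RGB image.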
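RViz renders the depth image for you, but if you want to inspect depth yourself (for example in an OpenCV window), the distances have to be mapped to 0-255 gray levels first. A minimal sketch with plain Python lists, assuming depth in meters with NaN or inf where there is no reading (check the encoding field of your depth topic); the `near`/`far` display range and the name `depth_to_gray` are hypothetical choices.

```python
import math


def depth_to_gray(depth_rows, near=0.1, far=3.5):
    """Map depth values in meters to 0-255 gray levels: near -> 255 (bright), far -> 0.

    Values outside [near, far] are clipped to that range; NaN/inf (no reading) become 0.
    near/far are illustrative defaults, not values from the TurtleBot3 camera configuration.
    """
    span = far - near
    out = []
    for row in depth_rows:
        gray_row = []
        for d in row:
            if d is None or math.isnan(d) or math.isinf(d):
                gray_row.append(0)              # no reading: draw it black
                continue
            d = min(max(d, near), far)          # clip into the display range
            gray_row.append(int(round(255 * (far - d) / span)))
        out.append(gray_row)
    return out
```

Mapping near objects to bright values is just a convention; swap the expression to `(d - near) / span` if you prefer far objects to be bright.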

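III. Line-following design

1. Use a custom image as the Gazebo floor

(1) In your home directory, press Ctrl+H in the file manager to show hidden files.

(2) Create the following folders under ~/.gazebo:

mkdir -p ~/.gazebo/models/my_ground_plane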

mkdir -p ~/.gazebo/models/my_ground_plane/materials/textures

mkdir -p ~/.gazebo/models/my_ground_plane/materials/scripts

(3) Create the material file

cd ~/.gazebo/models/my_ground_plane/materials/scripts

vi my_ground_plane.material

Contents of my_ground_plane.material:

material MyGroundPlane/Image
{
  receive_shadows on
  technique
  {
    pass
    {
      ambient 0.5 0.5 0.5 1.0
      texture_unit
      {
        texture MyImage.png
      }
    }
  }
}

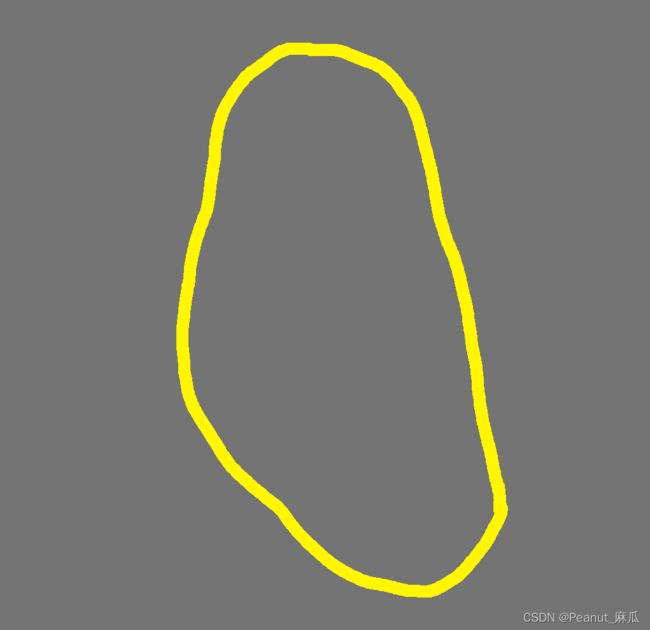

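(4) Save the floor image you want to use as MyImage.png in the textures folder

As shown in the figure, put it under ~/.gazebo/models/my_ground_plane/materials/textures. File names are case-sensitive on Linux, so the name must match the texture line in the material file exactly.

You can use this command:

cp /path/to/your/MyImage.png ~/.gazebo/models/my_ground_plane/materials/textures/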
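If you don't have a floor image yet, a simple generated texture works for testing: a white square with a yellow ring that forms a closed track. A minimal sketch that writes the PNG with only the Python standard library (zlib and struct, no OpenCV); the size, ring width, colors and the names `make_line_texture`/`write_line_texture` are hypothetical choices. Pure yellow (255, 255, 0) has an OpenCV hue of 30, which sits inside the 26-34 range used by the filter scripts below.

```python
import struct
import zlib


def _png_chunk(tag, data):
    """Pack one PNG chunk: 4-byte length, 4-byte type, data, CRC-32 of type + data."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def make_line_texture(size=512, width=40, line=(255, 255, 0), ground=(255, 255, 255)):
    """Return PNG bytes of a `ground`-colored square with a `line`-colored ring (a closed track)."""
    c = (size - 1) / 2.0                 # image center in pixel coordinates
    r_out = 0.4 * size                   # outer radius of the ring
    r_in = max(r_out - width, 0.0)       # inner radius of the ring
    raw = bytearray()
    for y in range(size):
        raw.append(0)                    # each scanline starts with filter type 0 (None)
        for x in range(size):
            d2 = (x - c) ** 2 + (y - c) ** 2
            raw.extend(line if r_in ** 2 <= d2 <= r_out ** 2 else ground)
    # IHDR: width, height, bit depth 8, color type 2 (RGB), compression, filter, interlace
    ihdr = struct.pack(">IIBBBBB", size, size, 8, 2, 0, 0, 0)
    return (b"\x89PNG\r\n\x1a\n"
            + _png_chunk(b"IHDR", ihdr)
            + _png_chunk(b"IDAT", zlib.compress(bytes(raw)))
            + _png_chunk(b"IEND", b""))


def write_line_texture(path, **kwargs):
    """Write the texture to `path`, e.g. 'MyImage.png'."""
    with open(path, "wb") as f:
        f.write(make_line_texture(**kwargs))
```

Running `write_line_texture("MyImage.png")` and then the cp command above gives the model a texture to display; a ring is used instead of a straight stripe so the robot has a loop to follow.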

(5) In the my_ground_plane folder, create model.sdf

cd ~/.gazebo/models/my_ground_plane

vi model.sdf

Contents of model.sdf:

<?xml version="1.0" encoding="UTF-8"?>
<sdf version="1.4">
  <model name="my_ground_plane">
    <static>true</static>
    <link name="link">
      <collision name="collision">
        <geometry>
          <plane>
            <normal>0 0 1</normal>
            <size>15 15</size>
          </plane>
        </geometry>
        <surface>
          <friction>
            <ode>
              <mu>100</mu>
              <mu2>50</mu2>
            </ode>
          </friction>
        </surface>
      </collision>
      <visual name="visual">
        <cast_shadows>false</cast_shadows>
        <geometry>
          <plane>
            <normal>0 0 1</normal>
            <size>15 15</size>
          </plane>
        </geometry>
        <material>
          <script>
            <uri>model://my_ground_plane/materials/scripts</uri>
            <uri>model://my_ground_plane/materials/textures/</uri>
            <name>MyGroundPlane/Image</name>
          </script>
        </material>
      </visual>
    </link>
  </model>
</sdf>

(6) In the my_ground_plane folder, create model.config with the following contents:

<?xml version="1.0" encoding="UTF-8"?>
<model>
  <name>My Ground Plane</name>
  <version>1.0</version>
  <sdf version="1.4">model.sdf</sdf>
  <description>My textured ground plane.</description>
</model>
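Small naming slips (model.config spelled differently, or a texture file whose capitalization differs from the material file) are easy to make in this folder layout. A minimal sketch of a checker, assuming the layout used above; `check_model_dir` is a hypothetical helper that parses model.config with `xml.etree.ElementTree`, checks that the SDF file it names exists, and checks that every `texture` line in the .material scripts names a file present in materials/textures.

```python
import os
import re
import xml.etree.ElementTree as ET


def check_model_dir(model_dir):
    """Return a list of problems in a Gazebo model folder; an empty list means it looks OK."""
    config = os.path.join(model_dir, "model.config")
    if not os.path.isfile(config):
        return ["missing model.config"]
    problems = []
    sdf_elems = ET.parse(config).getroot().findall("sdf")
    if not sdf_elems:
        problems.append("model.config has no <sdf> element")
    for sdf in sdf_elems:
        sdf_name = (sdf.text or "").strip()
        if not os.path.isfile(os.path.join(model_dir, sdf_name)):
            problems.append("model.config points to missing SDF file %r" % sdf_name)

    scripts = os.path.join(model_dir, "materials", "scripts")
    textures = os.path.join(model_dir, "materials", "textures")
    # listdir keeps the exact spelling, so the comparison is case-sensitive as on Linux
    texture_files = set(os.listdir(textures)) if os.path.isdir(textures) else set()
    script_files = sorted(os.listdir(scripts)) if os.path.isdir(scripts) else []
    for name in script_files:
        if not name.endswith(".material"):
            continue
        with open(os.path.join(scripts, name)) as f:
            # Matches lines such as "texture MyImage.png" but not "texture_unit"
            for tex in re.findall(r"^\s*texture\s+(\S+)", f.read(), re.MULTILINE):
                if tex not in texture_files:
                    problems.append("%s references missing texture %r" % (name, tex))
    return problems
```

For example, `check_model_dir(os.path.expanduser("~/.gazebo/models/my_ground_plane"))` returns an empty list once the files match, or messages naming what is missing.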

2. Import your floor model into Gazebo

(1) Start Gazebo:

roslaunch turtlebot3_gazebo turtlebot3_world.launch

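(2) In a second terminal, start RViz with the launch file from turtlebot3_gazebo (turtlebot_rviz_launchers is a TurtleBot2 package and is not installed by the steps above):

roslaunch turtlebot3_gazebo turtlebot3_gazebo_rviz.launch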

(3) Click Insert in the upper-left corner of Gazebo to insert the model you just created.

(4) Select My Ground Plane.


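(5) Left-click the model and drag it into the scene. Then right-click each model in Gazebo other than the robot and your floor, and delete it.

(6) Use Gazebo's translate and rotate tools to place the robot on the line.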

IV. Line-following scripts

1. Write scripts that make the robot follow the line

(1) Create a ROS workspace

mkdir -p ~/turtlebot_ws/src

cd ~/turtlebot_ws/src

catkin_init_workspace

cd ..

catkin_make

cd ~/turtlebot_ws/src

catkin_create_pkg turtlebot1 rospy geometry_msgs sensor_msgs

cd ..

source ./devel/setup.bash

(2) Write the scripts

2.1 Write the script that filters out the yellow line

cd src/turtlebot1/src

vi follower_color_filter.py

Contents of follower_color_filter.py:

#!/usr/bin/env python
# BEGIN ALL
import rospy, cv2, cv_bridge, numpy
from sensor_msgs.msg import Image


class Follower:
    def __init__(self):
        self.bridge = cv_bridge.CvBridge()
        # RGB camera topic of the simulated robot (requires a model with a camera)
        self.image_sub = rospy.Subscriber('camera/rgb/image_raw',
                                          Image, self.image_callback)

    def image_callback(self, msg):
        # BEGIN BRIDGE
        # Request BGR so the channel order matches what COLOR_BGR2HSV expects
        image = self.bridge.imgmsg_to_cv2(msg, desired_encoding='bgr8')
        cv2.imshow("original", image)
        # END BRIDGE
        # BEGIN HSV
        hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)
        cv2.imshow("hsv", hsv)
        # END HSV
        # BEGIN FILTER
        # Yellow on OpenCV's 8-bit HSV scale (hue runs 0-179)
        lower_yellow = numpy.array([26, 43, 46])
        upper_yellow = numpy.array([34, 255, 255])
        mask = cv2.inRange(hsv, lower_yellow, upper_yellow)
        # END FILTER
        # Keep only the yellow pixels of the original image
        masked = cv2.bitwise_and(image, image, mask=mask)
        cv2.imshow("mask", mask)
        cv2.imshow("masked", masked)
        cv2.waitKey(3)


rospy.init_node('follower')
follower = Follower()
rospy.spin()
# END ALL
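OpenCV stores 8-bit HSV with the hue halved (0-179) and saturation and value scaled to 0-255, so the bounds [26, 43, 46] to [34, 255, 255] keep hues of roughly 52-68 degrees, i.e. yellow. A minimal sketch using only the standard library's `colorsys` to check colors against those bounds offline; `to_opencv_hsv` and `in_yellow_range` are hypothetical helpers, and their rounding may differ from `cv2.cvtColor` by one unit.

```python
import colorsys

LOWER_YELLOW = (26, 43, 46)    # same bounds as follower_color_filter.py
UPPER_YELLOW = (34, 255, 255)


def to_opencv_hsv(r, g, b):
    """Convert 8-bit RGB to OpenCV's 8-bit HSV scale (H in 0-179, S and V in 0-255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return (int(round(h * 180)) % 180, int(round(s * 255)), int(round(v * 255)))


def in_yellow_range(r, g, b, lower=LOWER_YELLOW, upper=UPPER_YELLOW):
    """Mimic cv2.inRange for a single pixel: every channel must lie within [lower, upper]."""
    hsv = to_opencv_hsv(r, g, b)
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))
```

`in_yellow_range(255, 255, 0)` is True, while the white floor (255, 255, 255) fails on saturation. `in_yellow_range(0, 255, 255)` is False: that cyan is what yellow turns into if RGB data is read as BGR, which is why the script asks cv_bridge for `bgr8`.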

2.2 Make follower_color_filter.py executable and build the workspace

chmod +x follower_color_filter.py

cd ~/turtlebot_ws

catkin_make

2.3 Run the script (with the Gazebo scene from Part III, Section 2 still running)

rosrun turtlebot1 follower_color_filter.py

Next, create the line-following script in the same src directory:

vi follower_line.py

Contents of follower_line.py:

#!/usr/bin/env python
# BEGIN ALL
import rospy, cv2, cv_bridge, numpy
from sensor_msgs.msg import Image
from geometry_msgs.msg import Twist


class Follower:
    def __init__(self):
        self.bridge = cv_bridge.CvBridge()
        cv2.namedWindow("window", 1)
        self.image_sub = rospy.Subscriber('camera/rgb/image_raw',
                                          Image, self.image_callback)
        # TurtleBot3 in Gazebo listens on cmd_vel
        # (cmd_vel_mux/input/teleop is the TurtleBot2 topic)
        self.cmd_vel_pub = rospy.Publisher('cmd_vel', Twist, queue_size=1)
        self.twist = Twist()

    def image_callback(self, msg):
        image = self.bridge.imgmsg_to_cv2(msg, desired_encoding='bgr8')
        hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)
        lower_yellow = numpy.array([26, 43, 46])
        upper_yellow = numpy.array([34, 255, 255])
        mask = cv2.inRange(hsv, lower_yellow, upper_yellow)

        # Keep only a 20-pixel band starting 3/4 of the way down the image
        h, w, d = image.shape
        search_top = 3 * h // 4      # integer division so slicing also works on Python 3
        search_bot = search_top + 20
        mask[0:search_top, 0:w] = 0
        mask[search_bot:h, 0:w] = 0

        # Centroid of the remaining yellow pixels
        M = cv2.moments(mask)
        if M['m00'] > 0:
            cx = int(M['m10'] / M['m00'])
            cy = int(M['m01'] / M['m00'])
            cv2.circle(image, (cx, cy), 20, (0, 0, 255), -1)
            # BEGIN CONTROL
            # Proportional steering toward the line at a constant forward speed
            err = cx - w / 2
            self.twist.linear.x = 0.2
            self.twist.angular.z = -float(err) / 100
            self.cmd_vel_pub.publish(self.twist)
            # END CONTROL
        cv2.imshow("window", image)
        cv2.waitKey(3)


rospy.init_node('follower')
follower = Follower()
rospy.spin()
# END ALL
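The control part of follower_line.py comes down to two steps: take the x-centroid (m10/m00 from `cv2.moments`) of the mask pixels in a 20-row band starting at 3/4 of the image height, then steer in proportion to the offset from the image center with angular.z = -err/100 at a constant linear.x of 0.2. A minimal pure-Python sketch of the same arithmetic on a list-of-lists mask, useful for reasoning about the gain without running ROS; `band_centroid_x` and `steer` are hypothetical names.

```python
def band_centroid_x(mask, top, bottom):
    """x-centroid of nonzero pixels in rows [top, bottom), like m10 / m00 from cv2.moments.

    Returns None when the band contains no line pixels (m00 == 0).
    """
    m00 = 0
    m10 = 0
    for row in mask[top:bottom]:
        for x, value in enumerate(row):
            if value:
                m00 += value
                m10 += value * x
    return None if m00 == 0 else int(m10 / m00)


def steer(mask, linear=0.2, gain=1.0 / 100):
    """Return (linear.x, angular.z) for one frame, or None if the line is not visible."""
    h, w = len(mask), len(mask[0])
    top = 3 * h // 4
    cx = band_centroid_x(mask, top, top + 20)
    if cx is None:
        return None
    err = cx - w // 2              # pixels; positive means the line is right of center
    return linear, -err * gain     # negative angular.z turns clockwise, i.e. to the right
```

Unlike the original script, which simply publishes nothing when m00 is 0, this sketch returns None so the caller can decide explicitly what to do when the line is lost, for example stop or rotate in place.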

Make the script executable and build the workspace the same way as in 2.2.

Run the script:

rosrun turtlebot1 follower_line.py

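Summary

This post covers the TurtleBot line-following section of Programming Robots with ROS (《ROS机器人编程实践》).

I hope it helps. If you run into problems along the way, leave a comment or send me a private message. Thanks for your support!

This post is adapted from several other blog posts. If anything here infringes your rights, please let me know and I will remove it right away.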