MATLAB convolutional neural network: image denoising and adversarial example repair

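The overall workflow follows the image regression example in the MATLAB help documentation.

The network structure resembles U-Net and is built from convolution, activation, pooling, transposed convolution, and depth concatenation layers.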

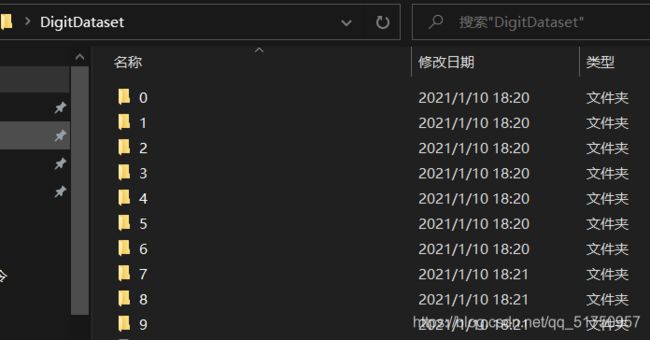

First prepare the dataset. I uploaded it as a resource, which can be downloaded from https://download.csdn.net/download/qq_51750957/14115738

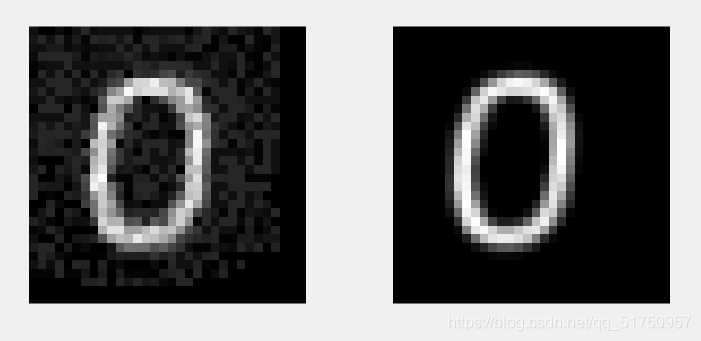

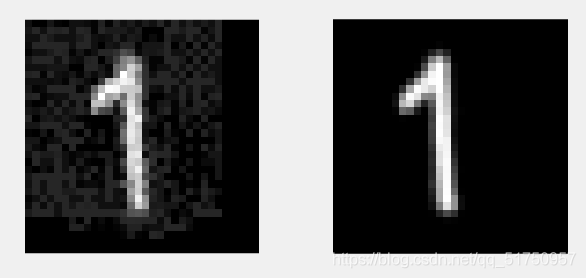

1. The handwritten digits 0-9 that ship with MATLAB: 10,000 images in total, each a [28,28] single-channel image.

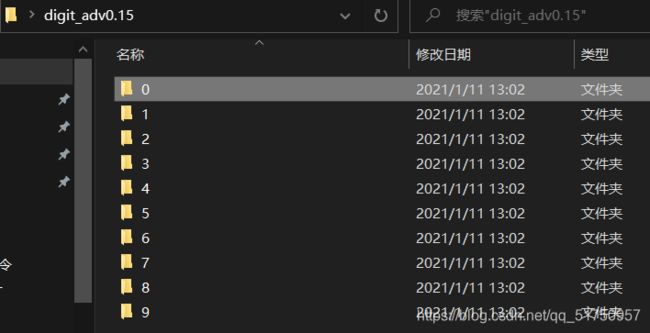

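2. The corresponding adversarial examples (images with added perturbations): 10,000 images. See my other post for how to generate adversarial examples.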

Import the data

imds = imageDatastore('DigitDataset', 'IncludeSubfolders', true, 'LabelSource', 'foldernames'); % read the original images

[imdsTrain,imdsValidation] = splitEachLabel(imds,0.9); % hold out 10% of each label as the validation set

advs = imageDatastore('digit_adv0.15', 'IncludeSubfolders', true, 'LabelSource', 'foldernames'); % read the adversarial examples

[advsTrain,advsValidation] = splitEachLabel(advs,0.9); % same non-randomized split, so the pairs stay aligned

Check that the first ten images in the two datastores correspond to each other

for i=1:10

figure(i);

subplot(1,2,1);imshow(imread(imds.Files{i}));

subplot(1,2,2);imshow(imread(advs.Files{i}));

list1{i}=imds.Files{i};

list2{i}=advs.Files{i};

end
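The visual check above covers only ten pairs. A minimal sketch of a programmatic check over a whole file list follows. It assumes that a clean image and its adversarial counterpart sit in label folders with the same name; the two short file lists are hypothetical stand-ins for `imds.Files` and `advs.Files`, and the file names in them are made up for illustration.

```matlab
% Hypothetical stand-ins for imds.Files and advs.Files (assumed paths).
cleanFiles = {'DigitDataset/0/a.png'; 'DigitDataset/1/b.png'};
advFiles   = {'digit_adv0.15/0/a.png'; 'digit_adv0.15/1/b.png'};

% Compare the label folder (the parent directory) of each pair.
isPaired = false(numel(cleanFiles),1);
for k = 1:numel(cleanFiles)
    cleanDir = fileparts(cleanFiles{k});      % e.g. 'DigitDataset/0'
    advDir   = fileparts(advFiles{k});        % e.g. 'digit_adv0.15/0'
    [~, cleanLabel] = fileparts(cleanDir);    % e.g. '0'
    [~, advLabel]   = fileparts(advDir);
    isPaired(k) = strcmp(cleanLabel, advLabel);
end

fprintf('%d of %d pairs share a label folder\n', nnz(isPaired), numel(isPaired));
```

With the real datastores, replacing the two lists by `imds.Files` and `advs.Files` runs the same check on all images. A matching label does not prove that the two files show the same digit instance, so the visual check is still worth doing.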

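Combine the data into input/output pairs

dsTrain = combine(advsTrain,imdsTrain); % two columns: column 1 is the adversarial example (input), column 2 is the original image (response)

dsVal = combine(advsValidation,imdsValidation); % same as above

dsTrain = transform(dsTrain,@commonPreprocessing); % normalize to [0,1], convert to grayscale, and resize to [32,32]; commonPreprocessing is defined at the end

dsVal = transform(dsVal,@commonPreprocessing); % same as above

dsTrain=shuffle(dsTrain); % shuffle the training pairs

dataOut = readall(dsTrain); % read all pairs; the first 8 are displayed below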

inputs = dataOut(:,1);

responses = dataOut(:,2);

minibatch = cat(2,inputs,responses);

montage(minibatch','Size',[8 2])

title('Inputs (Left) and Responses (Right)')

Set the training options

options = trainingOptions('adam', ...

'MaxEpochs',30, ...

'MiniBatchSize',32, ...

'ValidationData',dsVal, ...

'ValidationFrequency',300,...

'ValidationPatience',100,...

'Shuffle','never', ...

'Plots','training-progress', ...

'LearnRateSchedule','piecewise',...

'LearnRateDropPeriod',5,...

'LearnRateDropFactor',0.5,...

'Verbose',false);
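To make the piecewise schedule above concrete, the following sketch computes the learning rate per epoch and the number of iterations per epoch. The drop period, drop factor, epoch count, and mini-batch size come from the options above. The initial learning rate is an assumption: the post does not set `InitialLearnRate`, so the documented default for the `adam` solver (1e-3) is used. The training set size of 9000 follows from keeping 90% of 10,000 images.

```matlab
% Values taken from the trainingOptions call.
maxEpochs     = 30;
dropPeriod    = 5;
dropFactor    = 0.5;
miniBatchSize = 32;

% Assumption: default InitialLearnRate for 'adam', since none is specified.
initialLearnRate = 1e-3;

% The rate is multiplied by dropFactor each time dropPeriod epochs have passed.
epochs    = 1:maxEpochs;
learnRate = initialLearnRate * dropFactor .^ floor((epochs - 1) / dropPeriod);

% 90% of 10,000 images; an incomplete final mini-batch is dropped each epoch.
numTrain           = 9000;
iterationsPerEpoch = floor(numTrain / miniBatchSize);

fprintf('Learning rate: epoch 1 = %g, epoch %d = %g\n', ...
    learnRate(1), maxEpochs, learnRate(end));
fprintf('Iterations per epoch: %d\n', iterationsPerEpoch);
```

Under these assumptions, a `ValidationFrequency` of 300 iterations means validation runs slightly less often than once per epoch.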

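Open the Deep Network Designer app and start building the network. The input size is [32,32,1] and the output size is also [32,32,1]; every input and output pixel lies in the range [0,1]. The network includes transposed convolution layers and depth concatenation layers, so it is fairly complex.

Once the network is built, it can be exported as lgraph.

The network is long; feel free to modify it.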

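Create the deep learning network architecture

This script creates a deep learning network with the following properties:

Number of layers: 42

Number of connections: 44

Run the script to create the layers in the workspace variable lgraph.

For details, see Generate MATLAB Code from Deep Network Designer.

Auto-generated by MATLAB on 2021-01-11 20:52:38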

Create the layer graph

Create the layer graph variable that will contain the network layers.

lgraph = layerGraph();

Add the layer branches

Add the branches of the network to the layer graph. Each branch is a linear array of layers.

tempLayers = [

imageInputLayer([32 32 1],"Name","imageinput","Normalization","rescale-zero-one")

convolution2dLayer([3 3],32,"Name","conv_1_1","Padding","same")

batchNormalizationLayer("Name","batchnorm_1")

reluLayer("Name","relu_1_1")

convolution2dLayer([3 3],32,"Name","conv_1_2","Padding","same")

batchNormalizationLayer("Name","batchnorm_2")

reluLayer("Name","relu_1_2")

convolution2dLayer([3 3],32,"Name","conv_1_3","Padding","same")

batchNormalizationLayer("Name","batchnorm_3")

reluLayer("Name","relu_1_3")];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

maxPooling2dLayer([2 2],"Name","maxpool_1","Padding","same","Stride",[2 2])

convolution2dLayer([3 3],64,"Name","conv_2","Padding","same")

reluLayer("Name","relu_2")];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

dropoutLayer(0.15,"Name","dropout")

maxPooling2dLayer([2 2],"Name","maxpool_2","Padding","same","Stride",[2 2])

convolution2dLayer([3 3],128,"Name","conv_3","Padding","same")

reluLayer("Name","relu_3")];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

maxPooling2dLayer([2 2],"Name","maxpool_3","Padding","same","Stride",[2 2])

convolution2dLayer([3 3],256,"Name","conv_5","Padding","same")

batchNormalizationLayer("Name","batchnorm_6")

reluLayer("Name","relu_7")

transposedConv2dLayer([4 4],128,"Name","transposed-conv_1","Cropping",[1 1 1 1],"Stride",[2 2])

reluLayer("Name","relu_4")];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

depthConcatenationLayer(2,"Name","depthcat_3")

convolution2dLayer([3 3],128,"Name","conv_1_4","Padding","same")

batchNormalizationLayer("Name","batchnorm_4")

reluLayer("Name","relu_1_4")

transposedConv2dLayer([4 4],64,"Name","transposed-conv_2","Cropping",[1 1 1 1],"Stride",[2 2])

reluLayer("Name","relu_5")];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

depthConcatenationLayer(2,"Name","depthcat_1")

convolution2dLayer([3 3],64,"Name","conv_1_5","Padding","same")

batchNormalizationLayer("Name","batchnorm_5")

reluLayer("Name","relu_1_5")

transposedConv2dLayer([4 4],32,"Name","transposed-conv_3","Cropping",[1 1 1 1],"Stride",[2 2])

reluLayer("Name","relu_6")];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

depthConcatenationLayer(2,"Name","depthcat_2")

convolution2dLayer([3 3],32,"Name","conv_6","Padding","same")

batchNormalizationLayer("Name","batchnorm_7")

reluLayer("Name","relu_8")

convolution2dLayer([1 1],1,"Name","conv_4","Padding","same")

clippedReluLayer(1,"Name","clippedrelu")

regressionLayer("Name","regressionoutput")];

lgraph = addLayers(lgraph,tempLayers);

% Clean up the helper variable

clear tempLayers;

Connect the layer branches

Connect all the branches of the network to create the network graph.

lgraph = connectLayers(lgraph,"relu_1_3","maxpool_1");

lgraph = connectLayers(lgraph,"relu_1_3","depthcat_2/in2");

lgraph = connectLayers(lgraph,"relu_2","dropout");

lgraph = connectLayers(lgraph,"relu_2","depthcat_1/in1");

lgraph = connectLayers(lgraph,"relu_3","maxpool_3");

lgraph = connectLayers(lgraph,"relu_3","depthcat_3/in1");

lgraph = connectLayers(lgraph,"relu_4","depthcat_3/in2");

lgraph = connectLayers(lgraph,"relu_5","depthcat_1/in2");

lgraph = connectLayers(lgraph,"relu_6","depthcat_2/in1");

Plot the layers

plot(lgraph);
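The skip connections only work if the two inputs of each depth concatenation layer have the same spatial size. The sketch below traces the spatial size through the encoder and decoder using the layer parameters listed above. The two formulas are the standard output-size rules for 'same'-padded pooling and for transposed convolution; the helper names `poolOut` and `tconvOut` are introduced here for illustration only.

```matlab
inputSize = 32;

% maxPooling2dLayer([2 2],"Padding","same","Stride",[2 2]): ceil(in / stride)
poolOut = @(in) ceil(in / 2);

% transposedConv2dLayer([4 4],...,"Cropping",[1 1 1 1],"Stride",[2 2]):
% out = (in - 1) * stride + filterSize - (crop on both sides)
tconvOut = @(in) (in - 1) * 2 + 4 - 2;

s1 = poolOut(inputSize);  % after maxpool_1
s2 = poolOut(s1);         % after maxpool_2
s3 = poolOut(s2);         % after maxpool_3 (bottleneck)
u1 = tconvOut(s3);        % after transposed-conv_1, joins relu_3 in depthcat_3
u2 = tconvOut(u1);        % after transposed-conv_2, joins relu_2 in depthcat_1
u3 = tconvOut(u2);        % after transposed-conv_3, joins relu_1_3 in depthcat_2

% Channel counts after each concatenation (skip branch + decoder branch).
catChannels = [128 + 128, 64 + 64, 32 + 32];

fprintf('Encoder sizes: %d %d %d, decoder sizes: %d %d %d\n', s1, s2, s3, u1, u2, u3);
fprintf('Channels after depthcat_3, depthcat_1, depthcat_2: %d %d %d\n', catChannels);
```

Each decoder size matches the encoder feature map it is concatenated with, and the final size equals the input size, which is what a pixel-wise regression output needs.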

Start training. Training takes a long time; adjust MaxEpochs as needed.

denoise_net = trainNetwork(dsTrain,lgraph,options);

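Once training is complete, try predicting on a few images, one for each of the digits 0 through 5. The input is on the left and the output is on the right.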

for ii=0:5

figure(ii+1)

I=imread(['digit_adv0.15/',num2str(ii),'/0000.png']); % read the image

I=imresize(I,[32,32]);

%I=rgb2gray(I); % convert to grayscale if the image is in color

I=single(I);

I=rescale(I);

subplot(1,2,1);imshow(I);

ypred = predict(denoise_net,I); % predict

subplot(1,2,2);imshow(rescale(ypred));

end
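Besides looking at the images, the result can be scored numerically. The following is a minimal sketch, not part of the original workflow, that computes the mean squared error and PSNR between a clean image and a denoised image, both assumed to be single-precision arrays in [0,1]. The two arrays here are synthetic stand-ins so that the snippet runs on its own; in practice they would be the preprocessed clean image and `ypred` for the same digit.

```matlab
% Synthetic stand-ins (assumption): a random "clean" image and a slightly
% perturbed copy clipped to [0,1] in place of the real network output.
rng(0);
clean    = rand(32, 32, 'single');
denoised = min(max(clean + 0.05 * randn(32, 32, 'single'), 0), 1);

% Mean squared error over all pixels.
mseValue = mean((denoised(:) - clean(:)).^2);

% PSNR in dB; the peak value is 1 because the images lie in [0,1].
psnrValue = 10 * log10(1 / mseValue);

fprintf('MSE = %.4g, PSNR = %.2f dB\n', mseValue, psnrValue);
```

Comparing the PSNR of the adversarial input against the clean image with the PSNR of the network output against the clean image gives a rough measure of how much the network repairs.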

Helper function

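function dataOut = commonPreprocessing(data)

% Resize each image to [32,32], convert color images to grayscale,

% and rescale to [0,1] so that inputs and responses match the prediction code.

dataOut = cell(size(data));

for col = 1:size(data,2)

for idx = 1:size(data,1)

temp = data{idx,col};

temp = imresize(temp,[32,32]);

if size(temp,3)==3 % color image with three channels

temp = rgb2gray(temp);

end

temp = rescale(single(temp)); % normalize to [0,1]

dataOut{idx,col} = temp;

end

end

end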