95% Accuracy on the Cats vs. Dogs Dataset: A TensorFlow Implementation

Dataset download: DATA_CAT | Kaggle

The dataset contains 25,000 images.

Import the required libraries

```python
import os
import warnings

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# Silence Python warnings to keep the notebook output readable.
warnings.filterwarnings("ignore")
```

Load the data
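`tf.keras.utils.image_dataset_from_directory`, used in this step, infers labels from the subfolder names of `train_dir` and `validation_dir`: the default `label_mode` is `'int'`, and classes are numbered in alphanumeric order of the folder names. The stdlib sketch below mimics only that mapping step, so you can check which class the sigmoid output refers to. The folder names `cat` and `dog` are an assumption; the post does not list the actual subfolders of the Kaggle dataset. In the real pipeline, `train_ds.class_names` returns the ordered list.

```python
import os
import tempfile


def infer_class_names(directory):
    """Mimic the label mapping of image_dataset_from_directory:
    one class per subdirectory, indexed in sorted (alphanumeric) order."""
    names = sorted(
        entry for entry in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, entry))
    )
    return {name: index for index, name in enumerate(names)}


# Hypothetical layout: train/dog and train/cat (folder names are assumed).
with tempfile.TemporaryDirectory() as root:
    for name in ("dog", "cat"):
        os.makedirs(os.path.join(root, "train", name))
    mapping = infer_class_names(os.path.join(root, "train"))

print(mapping)  # {'cat': 0, 'dog': 1}
```

Under this mapping, a sigmoid output close to 1 means the model predicts the class labeled 1 (`dog` under the assumed folder names).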

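```python
# Kaggle input path of the DATA_CAT dataset; it has train/ and val/ subfolders,
# each containing one subfolder per class.
PATH = '/kaggle/input/data-cat'
train_dir = os.path.join(PATH, 'train')
validation_dir = os.path.join(PATH, 'val')

BATCH_SIZE = 32
IMG_SIZE = (160, 160)  # every image is resized to 160x160 on load

# Labels are inferred from the class subfolder names.
train_ds = tf.keras.utils.image_dataset_from_directory(train_dir,
                                                       shuffle=True,
                                                       batch_size=BATCH_SIZE,
                                                       image_size=IMG_SIZE)
val_ds = tf.keras.utils.image_dataset_from_directory(validation_dir,
                                                     shuffle=True,
                                                     batch_size=BATCH_SIZE,
                                                     image_size=IMG_SIZE)
```

Data augmentation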
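The two augmentation layers in this step are random transforms. `RandomFlip("horizontal")` mirrors an image left to right, which amounts to reversing the width axis, and `RandomRotation(0.1)` interprets its factor as a fraction of a full turn (2π), so each image is rotated by a random angle in [−36°, +36°]. Keras applies these layers only during training; at inference they pass inputs through unchanged. The plain-Python sketch below illustrates both operations on a toy 2×3 single-channel image; it is an illustration of the math, not how Keras implements them internally.

```python
def flip_horizontal(image):
    """Mirror an image stored as rows of pixels (H x W) by reversing each row,
    i.e. the width axis, which is what a horizontal flip does."""
    return [list(reversed(row)) for row in image]


def rotation_range_degrees(factor):
    """RandomRotation(factor) treats factor as a fraction of a full turn
    (2*pi rad = 360 degrees); return the +/- bound of the angle in degrees."""
    return factor * 360.0


toy_image = [[1, 2, 3],
             [4, 5, 6]]

print(flip_horizontal(toy_image))   # [[3, 2, 1], [6, 5, 4]]
print(rotation_range_degrees(0.1))  # 36.0
```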

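```python
# Random horizontal flips and small rotations, active only during training.
data_augmentation = keras.Sequential(
    [
        layers.RandomFlip("horizontal"),
        layers.RandomRotation(0.1),
    ]
)
```

Build the model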

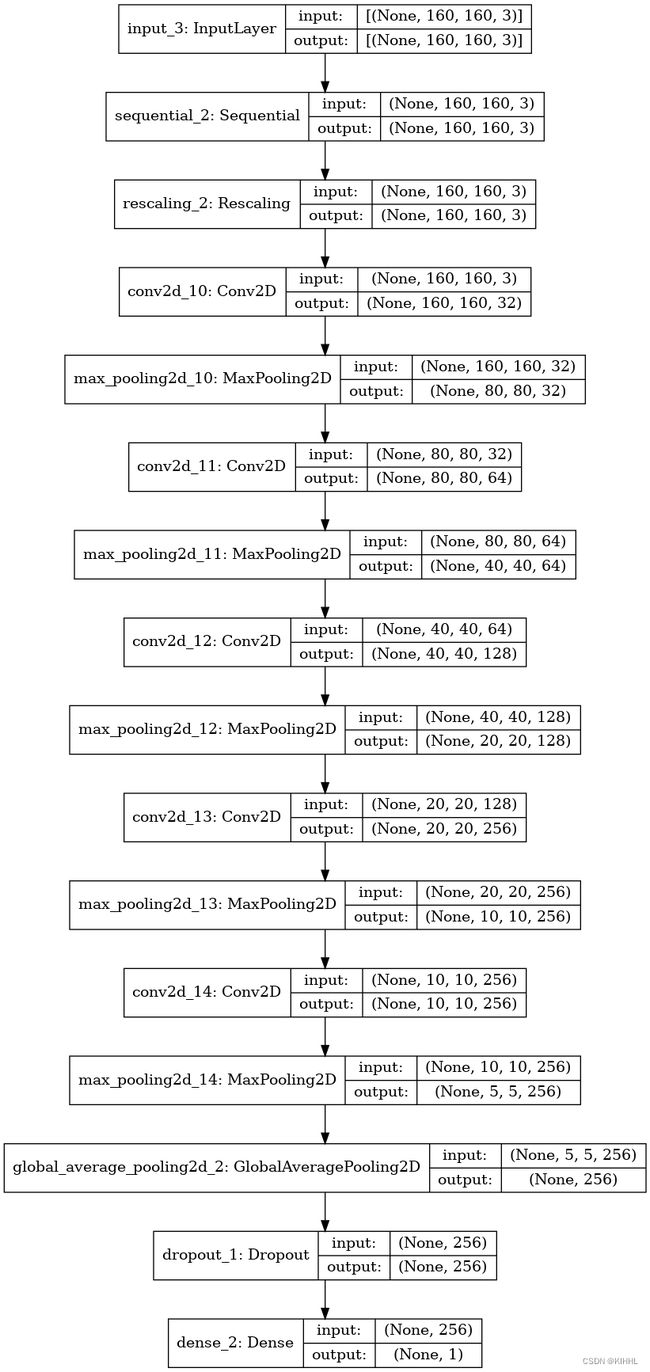
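As a cross-check on the architecture in this section: each `MaxPooling2D(pool_size=2)` halves the spatial size (the `padding='same'` convolutions keep it unchanged), and a 3×3 `Conv2D` layer has 3·3·C_in·C_out weights plus C_out biases. The stdlib sketch below walks the five conv/pool stages for a 160×160×3 input; the augmentation, `Rescaling`, pooling and `Dropout` layers have no trainable parameters. The totals are hand-derived from the layer configuration, so treat them as a value to compare against `model.summary()` rather than output taken from the post.

```python
def conv_stack_summary(size=160, in_channels=3, filters=(32, 64, 128, 256, 256),
                       kernel=3):
    """Trace spatial size and parameter count through Conv2D(padding='same')
    + MaxPooling2D(2) stages, then GlobalAveragePooling2D and Dense(1)."""
    total_params = 0
    channels = in_channels
    for out_channels in filters:
        # Conv2D weights (kernel x kernel x C_in x C_out) plus one bias per filter.
        total_params += kernel * kernel * channels * out_channels + out_channels
        channels = out_channels
        # 'same' padding keeps the size; 2x2 max pooling (stride 2) halves it.
        size //= 2
    # GlobalAveragePooling2D leaves one value per channel; Dense(1) adds weights + bias.
    total_params += channels * 1 + 1
    return size, channels, total_params


final_size, final_channels, params = conv_stack_summary()
print(final_size, final_channels, params)  # 5 256 978753
```

The 5×5×256 feature map is what `GlobalAveragePooling2D` averages down to a 256-dimensional vector before the dropout and sigmoid layers.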

```python
inputs = keras.Input(shape=(160, 160, 3))
x = data_augmentation(inputs)
x = layers.Rescaling(1./255)(x)  # scale pixel values from [0, 255] to [0, 1]

# Five Conv2D + MaxPooling2D stages; each pooling halves the spatial size.
x = layers.Conv2D(filters=32, kernel_size=3, padding='same', activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=64, kernel_size=3, padding='same', activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=128, kernel_size=3, padding='same', activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=256, kernel_size=3, padding='same', activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=256, kernel_size=3, padding='same', activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)

x = layers.GlobalAveragePooling2D()(x)
x = layers.Dropout(0.5)(x)
# One sigmoid unit: the predicted probability of the class labeled 1.
outputs = layers.Dense(1, activation="sigmoid")(x)

model = keras.Model(inputs=inputs, outputs=outputs)

# Draw the architecture to model.png (requires pydot and Graphviz).
keras.utils.plot_model(model, show_shapes=True, to_file='model.png')
```

Train the model
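The model has a single sigmoid output, so it is compiled with `binary_crossentropy`, and for this combination Keras resolves the `"accuracy"` metric to binary accuracy with a 0.5 threshold. The stdlib sketch below shows what the two quantities compute for a small batch; the labels and probabilities are made-up values, and the clipping constant `eps=1e-7` mirrors Keras's default fuzz factor used to avoid `log(0)`.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over the batch, with p clipped to [eps, 1-eps]."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the 0/1 label."""
    hits = sum(int(p > threshold) == y for y, p in zip(y_true, y_pred))
    return hits / len(y_true)


labels = [1, 0, 1, 0]          # hypothetical 0/1 labels
probs = [0.9, 0.2, 0.4, 0.1]   # hypothetical sigmoid outputs

print(binary_crossentropy(labels, probs))  # about 0.3375
print(binary_accuracy(labels, probs))      # 0.75
```

The third sample (label 1, probability 0.4) is the only misclassification, and it also contributes most of the loss, which is why loss keeps falling in the log even when accuracy changes slowly.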

```python
initial_epochs = 50

# Save the full model after every epoch as save_at_1.h5, save_at_2.h5, ...
callbacks = [
    keras.callbacks.ModelCheckpoint("save_at_{epoch}.h5"),
]

model.compile(
    optimizer=keras.optimizers.Adam(1e-3),
    loss="binary_crossentropy",
    metrics=["accuracy"],
)

history = model.fit(
    train_ds, epochs=initial_epochs, callbacks=callbacks, validation_data=val_ds,
)
```

```text
Epoch 1/50
2022-09-24 05:12:23.783195: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)
2022-09-24 05:12:25.882535: I tensorflow/stream_executor/cuda/cuda_dnn.cc:369] Loaded cuDNN version 8005
625/625 [==============================] - 93s 135ms/step - loss: 0.6731 - accuracy: 0.5711 - val_loss: 0.6144 - val_accuracy: 0.6706
Epoch 2/50
625/625 [==============================] - 33s 53ms/step - loss: 0.6203 - accuracy: 0.6612 - val_loss: 0.6539 - val_accuracy: 0.6168
Epoch 3/50
625/625 [==============================] - 34s 53ms/step - loss: 0.5829 - accuracy: 0.6982 - val_loss: 0.5381 - val_accuracy: 0.7266
Epoch 4/50
625/625 [==============================] - 34s 53ms/step - loss: 0.5478 - accuracy: 0.7246 - val_loss: 0.4862 - val_accuracy: 0.7676
Epoch 5/50
625/625 [==============================] - 34s 53ms/step - loss: 0.5065 - accuracy: 0.7563 - val_loss: 0.4445 - val_accuracy: 0.7980
Epoch 6/50
625/625 [==============================] - 33s 53ms/step - loss: 0.4601 - accuracy: 0.7865 - val_loss: 0.4467 - val_accuracy: 0.7922
Epoch 7/50
625/625 [==============================] - 33s 53ms/step - loss: 0.4237 - accuracy: 0.8073 - val_loss: 0.3804 - val_accuracy: 0.8352
···
Epoch 45/50
625/625 [==============================] - 33s 53ms/step - loss: 0.1145 - accuracy: 0.9541 - val_loss: 0.1895 - val_accuracy: 0.9440
Epoch 46/50
625/625 [==============================] - 33s 52ms/step - loss: 0.1170 - accuracy: 0.9546 - val_loss: 0.1676 - val_accuracy: 0.9402
Epoch 47/50
625/625 [==============================] - 33s 52ms/step - loss: 0.1092 - accuracy: 0.9567 - val_loss: 0.1700 - val_accuracy: 0.9414
Epoch 48/50
625/625 [==============================] - 33s 52ms/step - loss: 0.1123 - accuracy: 0.9555 - val_loss: 0.1478 - val_accuracy: 0.9502
Epoch 49/50
625/625 [==============================] - 33s 53ms/step - loss: 0.1080 - accuracy: 0.9584 - val_loss: 0.1675 - val_accuracy: 0.9472
Epoch 50/50
625/625 [==============================] - 33s 52ms/step - loss: 0.1045 - accuracy: 0.9575 - val_loss: 0.1585 - val_accuracy: 0.9500
```
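The log reports 625 steps per epoch at `BATCH_SIZE = 32`. Because `image_dataset_from_directory` keeps the last partial batch, the number of steps is ⌈N / 32⌉, which pins the training set to between 19,969 and 20,000 images. That is consistent with an 80/20 train/val split of the 25,000 images, but the post does not state the split, so this is an inference from the log rather than a documented fact.

```python
import math


def steps_per_epoch(num_images, batch_size=32):
    """Batches per epoch when the final, partial batch is kept."""
    return math.ceil(num_images / batch_size)


def image_count_range(steps, batch_size=32):
    """Smallest and largest dataset size that yields exactly `steps` batches."""
    return (steps - 1) * batch_size + 1, steps * batch_size


print(steps_per_epoch(20_000))  # 625
print(image_count_range(625))   # (19969, 20000)
```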

```python
# Per-epoch metrics recorded by model.fit
acc = history.history['accuracy']
val_acc = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.figure(figsize=(8, 8))

# Top panel: accuracy (dots = training, line = validation)
plt.subplot(2, 1, 1)
plt.plot(acc, "bo", label='Training Accuracy')
plt.plot(val_acc, "b", label='Validation Accuracy')
plt.legend(loc='lower right')
plt.ylabel('Accuracy')
plt.ylim([min(plt.ylim()), 1])
plt.title('Training and Validation Accuracy')

# Bottom panel: binary cross-entropy loss; the x-axis is the 0-based epoch index
plt.subplot(2, 1, 2)
plt.plot(loss, "bo", label='Training Loss')
plt.plot(val_loss, "b", label='Validation Loss')
plt.legend(loc='upper right')
plt.ylabel('Cross Entropy')
plt.ylim([0, 1.0])
plt.title('Training and Validation Loss')
plt.xlabel('epoch')

plt.savefig("A.png", dpi=900)
plt.show()
```
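Since `ModelCheckpoint("save_at_{epoch}.h5")` writes a file after every epoch (with a 1-based epoch number in the name), you can pick the checkpoint with the highest validation accuracy instead of keeping only the last model. The sketch below applies that to the validation accuracies of epochs 45 to 50 from the training log; with the full run you would pass `history.history['val_accuracy']` and `first_epoch=1`. The helper name `best_checkpoint` is hypothetical, and because epochs 8 to 44 are elided in the log, the best epoch over the whole run may differ.

```python
def best_checkpoint(val_accuracy, first_epoch=1, pattern="save_at_{epoch}.h5"):
    """Return (epoch, accuracy, filename) for the epoch with the highest
    validation accuracy; epochs are numbered from `first_epoch` (1-based)."""
    best_index = max(range(len(val_accuracy)), key=lambda i: val_accuracy[i])
    epoch = first_epoch + best_index
    return epoch, val_accuracy[best_index], pattern.format(epoch=epoch)


# val_accuracy for epochs 45-50, copied from the training log.
logged = [0.9440, 0.9402, 0.9414, 0.9502, 0.9472, 0.9500]
print(best_checkpoint(logged, first_epoch=45))  # (48, 0.9502, 'save_at_48.h5')
```

The selected file can then be restored with `keras.models.load_model("save_at_48.h5")`. Within this excerpt the best (0.9502) and final (0.9500) validation accuracies are almost identical, so the choice matters little for this run.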