Implementing Neural Networks with TensorFlow, and a Multi-Layer Neural Network for Fashion Classification


1. Introduction to the tf.keras API for building, training, evaluating, and testing models

import tensorflow as tf

from tensorflow import keras

1.1 Building a model

- 1. Model type in Keras: the Sequential model

- In Keras, you build a model by composing layers. A model is (usually) a graph of layers, and the most common type is a plain stack of layers. keras.layers provides many layer types, listed below; they can all be found in the source file.

- tf.keras.Sequential model (available layers below)

from tensorflow.python.keras.layers import Dense

from tensorflow.python.keras.layers import DepthwiseConv2D

from tensorflow.python.keras.layers import Dot

from tensorflow.python.keras.layers import Dropout

from tensorflow.python.keras.layers import ELU

from tensorflow.python.keras.layers import Embedding

from tensorflow.python.keras.layers import Flatten

from tensorflow.python.keras.layers import GRU

from tensorflow.python.keras.layers import GRUCell

from tensorflow.python.keras.layers import LSTMCell

...

...

...

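[layers builds each layer of the network]

- Flatten: reshapes the input by flattening it [it gives the data a fixed shape; put simply, it changes the shape, e.g. a (28, 28) image becomes a vector of 784 values]

- Dense: adds a fully connected layer of neurons

- Dense(units, activation=None, **kwargs)

- units: number of neurons

- activation: activation function, e.g. tf.nn.relu, tf.nn.softmax, tf.nn.sigmoid, tf.nn.tanh

- **kwargs: additional arguments, such as the shape of the input coming from the previous layer, input_shape=()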
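To make the roles of Flatten and Dense concrete, here is a minimal pure-Python sketch of what the two layers compute. It is not how Keras implements them; the helper names (`flatten`, `dense`, `relu`, `softmax`) and the toy weights are assumptions chosen for illustration.

```python
import math


def flatten(image):
    """Flatten a 2-D list of pixels into a 1-D list, row by row."""
    return [pixel for row in image for pixel in row]


def relu(values):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases, activation):
    """Fully connected layer: weights[j] holds the incoming weights of neuron j."""
    pre_activation = [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]
    return activation(pre_activation)


# Toy 2x2 "image" instead of 28x28 (hypothetical values)
image = [[0.0, 1.0],
         [0.5, 0.25]]

x = flatten(image)  # 4 values
hidden = dense(x, [[1.0, -1.0, 0.0, 0.0], [0.0, 0.0, 2.0, -4.0]], [0.0, 0.5], relu)
probs = dense(hidden, [[1.0, 1.0], [0.0, 0.0]], [0.0, 0.0], softmax)

print(x)
print(hidden)
print(probs)
```

The `units` argument corresponds to the number of rows in `weights`, and `activation` is applied to the weighted sums of the whole layer.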

tf.keras.Sequential builds a pipeline-like model:

model = keras.Sequential([
    keras.layers.Flatten(input_shape=(28, 28)),
    keras.layers.Dense(128, activation=tf.nn.relu),
    keras.layers.Dense(10, activation=tf.nn.softmax)
])

Example code:

import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten


def main():
    # Flatten turns each (28, 28) image into a vector of shape (784,)
    model = Sequential([
        Flatten(input_shape=(28, 28)),
        Dense(128, activation=tf.nn.relu),
        Dense(10, activation=tf.nn.softmax)
    ])
    print(model)


if __name__ == '__main__':
    main()

- 2. The Model type:

- from keras.models import Model

- Model(inputs=a, outputs=b)

- inputs: the model's input, of type Input

- outputs: the model's output

- def call(self, inputs): receives the input from the previous layer

from keras.models import Model
from keras.layers import Input, Dense

data = Input(shape=(784,))
out = Dense(32)(data)
# The keyword arguments are `inputs` and `outputs` (plural)
model = Model(inputs=data, outputs=out)

Example code:

import tensorflow as tf
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import Dense, Flatten, Input


def main():
    # Flatten turns each (28, 28) image into a vector of shape (784,)
    model_1 = Sequential([
        Flatten(input_shape=(28, 28)),
        Dense(128, activation=tf.nn.relu),
        Dense(10, activation=tf.nn.softmax)
    ])
    print(model_1)

    # Build a model with Model
    data = Input(shape=(784, ))
    print(data)
    out = Dense(64)(data)
    print(out)
    model_2 = Model(inputs=data, outputs=out)
    print(model_2)


if __name__ == '__main__':
    main()

Output:

Tensor("input_1:0", shape=(None, 784), dtype=float32)

Tensor("dense_2/BiasAdd:0", shape=(None, 64), dtype=float32)

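1.2 Model attributes

model.layers: the list of layers in the model

print(model.layers)

[, , , ]

model.inputs: the list of the model's input tensors

print(model.inputs)

[]

model.outputs: the list of the model's output tensors

print(model.outputs)

[]

model.summary(): prints a summary of the model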

Layer (type) Output Shape Param #

=================================================================

flatten (Flatten) (None, 784) 0

_________________________________________________________________

dense (Dense) (None, 64) 50240

_________________________________________________________________

dense_1 (Dense) (None, 128) 8320

_________________________________________________________________

dense_2 (Dense) (None, 10) 1290

=================================================================

Total params: 59,850

Trainable params: 59,850

Non-trainable params: 0
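The Param # column can be checked by hand: a Dense layer has one weight per (input, unit) pair plus one bias per unit. The sketch below reproduces the numbers in the summary above; `dense_params` and `count_params` are hypothetical helper names, not part of Keras.

```python
def dense_params(n_inputs, units):
    """Each unit has one weight per input plus one bias."""
    return n_inputs * units + units


def count_params(input_size, layer_units):
    """Parameter count of each Dense layer in a stack, given the flattened input size."""
    counts = []
    n_in = input_size
    for units in layer_units:
        counts.append(dense_params(n_in, units))
        n_in = units  # the output of this layer feeds the next one
    return counts


# Flatten(28, 28) -> Dense(64) -> Dense(128) -> Dense(10), as in the summary above
counts = count_params(28 * 28, [64, 128, 10])
print(counts, sum(counts))

# Flatten(28, 28) -> Dense(128) -> Dense(10), the model used in the example code
small = count_params(28 * 28, [128, 10])
print(small, sum(small))
```

Flatten itself contributes 0 parameters because it only reshapes its input.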

Example code:

import tensorflow as tf
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import Dense, Flatten, Input


def main():
    # Flatten turns each (28, 28) image into a vector of shape (784,)
    model_1 = Sequential([
        Flatten(input_shape=(28, 28)),
        Dense(128, activation=tf.nn.relu),
        Dense(10, activation=tf.nn.softmax)
    ])
    print(model_1)

    # Build a model with Model
    data = Input(shape=(784, ))
    print(data)
    out = Dense(64)(data)
    print(out)
    model_2 = Model(inputs=data, outputs=out)
    print(model_2)

    print('#' * 100)

    # List of layers in the model
    print(model_1.layers)

    # List of the model's input tensors
    print(model_1.inputs)

    # List of the model's output tensors
    print(model_1.outputs)

    # Print a summary of the model (summary() itself returns None, which is printed last)
    print(model_1.summary())


if __name__ == '__main__':
    main()

Output:

Tensor("input_1:0", shape=(None, 784), dtype=float32)

Tensor("dense_2/BiasAdd:0", shape=(None, 64), dtype=float32)

####################################################################################################

[, , ]

[]

[]

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

flatten (Flatten) (None, 784) 0

_________________________________________________________________

dense (Dense) (None, 128) 100480

_________________________________________________________________

dense_1 (Dense) (None, 10) 1290

=================================================================

Total params: 101,770

Trainable params: 101,770

Non-trainable params: 0

_________________________________________________________________

None

1.3 Model methods

- Call the model's compile method to configure the training parameters and the evaluation metrics.

- model.compile(optimizer, loss=None, metrics=None): configures the training-related parameters

- optimizer: the gradient-descent optimizer (in keras.optimizers)

from tensorflow.python.keras.optimizers import Adadelta
from tensorflow.python.keras.optimizers import Adagrad
from tensorflow.python.keras.optimizers import Adam
from tensorflow.python.keras.optimizers import Adamax
from tensorflow.python.keras.optimizers import Nadam
from tensorflow.python.keras.optimizers import Optimizer
from tensorflow.python.keras.optimizers import RMSprop
from tensorflow.python.keras.optimizers import SGD
from tensorflow.python.keras.optimizers import deserialize
from tensorflow.python.keras.optimizers import get
from tensorflow.python.keras.optimizers import serialize
# TF 1.x also provided tf.train.AdamOptimizer()

- loss=None: the loss; it can be given as a string or as the function itself. Available losses:

from tensorflow.python.keras.losses import KLD
from tensorflow.python.keras.losses import KLD as kld
from tensorflow.python.keras.losses import KLD as kullback_leibler_divergence
from tensorflow.python.keras.losses import MAE
from tensorflow.python.keras.losses import MAE as mae
from tensorflow.python.keras.losses import MAE as mean_absolute_error
from tensorflow.python.keras.losses import MAPE
from tensorflow.python.keras.losses import MAPE as mape
from tensorflow.python.keras.losses import MAPE as mean_absolute_percentage_error
from tensorflow.python.keras.losses import MSE
from tensorflow.python.keras.losses import MSE as mean_squared_error
from tensorflow.python.keras.losses import MSE as mse
from tensorflow.python.keras.losses import MSLE
from tensorflow.python.keras.losses import MSLE as mean_squared_logarithmic_error
from tensorflow.python.keras.losses import MSLE as msle
from tensorflow.python.keras.losses import binary_crossentropy
from tensorflow.python.keras.losses import categorical_crossentropy
from tensorflow.python.keras.losses import categorical_hinge
from tensorflow.python.keras.losses import cosine
from tensorflow.python.keras.losses import cosine as cosine_proximity
from tensorflow.python.keras.losses import deserialize
from tensorflow.python.keras.losses import get
from tensorflow.python.keras.losses import hinge
from tensorflow.python.keras.losses import logcosh
from tensorflow.python.keras.losses import poisson
from tensorflow.python.keras.losses import serialize
from tensorflow.python.keras.losses import sparse_categorical_crossentropy
from tensorflow.python.keras.losses import squared_hinge

- metrics=None: the list of metrics used to measure performance, e.g. ['accuracy']

model.compile(optimizer=tf.keras.optimizers.Adam(),
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

sparse_categorical_crossentropy: cross-entropy loss for integer targets [i.e. labels that have not been one-hot encoded, so they are not 0/1 vectors]

categorical_crossentropy: cross-entropy loss between an output tensor and a target tensor [i.e. labels that are already one-hot encoded]
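The two losses compute the same quantity and differ only in how the target is encoded. A minimal pure-Python sketch for a single sample follows; the function names mirror the Keras ones, but these are simplified stand-ins written for illustration, and the probabilities are made up.

```python
import math


def categorical_crossentropy(one_hot_target, probs):
    """Cross-entropy for a one-hot encoded target."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_target, probs))


def sparse_categorical_crossentropy(label, probs):
    """Cross-entropy for an integer class label."""
    return -math.log(probs[label])


probs = [0.1, 0.7, 0.2]  # hypothetical softmax output for 3 classes
label = 1                # integer target
one_hot = [1.0 if i == label else 0.0 for i in range(len(probs))]

loss_sparse = sparse_categorical_crossentropy(label, probs)
loss_dense = categorical_crossentropy(one_hot, probs)
print(loss_sparse, loss_dense)
```

Since the labels returned by keras.datasets.fashion_mnist.load_data() are integers, the sparse variant can be used on them directly, without one-hot encoding first.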

- model.fit(): runs training

- fit(x=None, y=None, batch_size=None, epochs=1, callbacks=None)

- x: the features, one of:

1. A NumPy array (or array-like), or a list of arrays
2. A TensorFlow tensor, or a list of tensors
3. A `tf.data` dataset or a dataset iterator. Should return a tuple of either `(inputs, targets)` or `(inputs, targets, sample_weights)`.
4. A generator or `keras.utils.Sequence` returning `(inputs, targets)` or `(inputs, targets, sample_weights)`.

- y: the targets

- batch_size=None: batch size (defaults to 32 when not specified)

- epochs=1: number of training epochs

- callbacks=None: list of callbacks (used, for example, for TensorBoard)

model.fit(train_images, train_labels, epochs=5, batch_size=32)
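The relationship between batch_size and the step counter in the training log can be sketched as follows. `steps_per_epoch` is a hypothetical helper, and the sample counts assume the Fashion-MNIST split used later in this document (60,000 training and 10,000 test images).

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of batches needed to see every sample once; the last batch may be smaller."""
    return math.ceil(num_samples / batch_size)


train_steps_32 = steps_per_epoch(60000, 32)
train_steps_128 = steps_per_epoch(60000, 128)
test_steps_32 = steps_per_epoch(10000, 32)
print(train_steps_32, train_steps_128, test_steps_32)
```

This is why a training log with batch_size=32 counts up to 1875 per epoch rather than to 60000: the progress bar counts batches, not samples.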

- model.evaluate(test_images, test_labels): evaluates the model on the test data and returns the loss followed by the metric values

model.evaluate(test, test_label)
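With metrics=['accuracy'], the second value returned by evaluate is the fraction of samples classified correctly. A minimal sketch of that metric, assuming the predictions have already been reduced to class ids (the data below is invented for illustration):

```python
def accuracy(predicted_labels, true_labels):
    """Fraction of positions where the predicted class id equals the true class id."""
    if len(predicted_labels) != len(true_labels):
        raise ValueError("both sequences must have the same length")
    correct = sum(1 for p, t in zip(predicted_labels, true_labels) if p == t)
    return correct / len(true_labels)


predicted = [9, 2, 1, 1]  # hypothetical predicted class ids
actual = [9, 2, 1, 6]     # hypothetical true class ids
acc = accuracy(predicted, actual)
print(acc)
```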

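- model.predict(test): makes predictions

- Other methods:

model.save_weights(filepath): saves the model's weights to an HDF5 file or a ckpt file

model.load_weights(filepath, by_name=False): loads the model's weights from an HDF5 file (created by save_weights). By default, the architecture is expected to be unchanged. To load weights into a different architecture (with some layers in common), use by_name=True to load only the layers that have the same name.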

2. 案例:实现多层神经网络进行时装分类

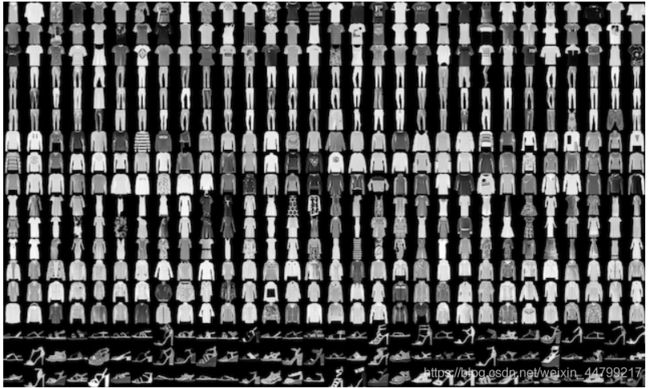

70000 张灰度图像,涵盖 10 个类别。以下图像显示了单件服饰在较低分辨率(28x28 像素)下的效果:

2.1 Requirements:

| Label | Class |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |
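Because the model works with integer labels, a small lookup is handy for turning predictions back into readable names. This is a sketch: `CLASS_NAMES` and `label_to_name` are hypothetical names, and the entry for label 9 (Ankle boot) is taken from the published Fashion-MNIST label set.

```python
# Index in the list == integer label in the dataset
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_to_name(label):
    """Map an integer label (0-9) to its class name."""
    return CLASS_NAMES[label]


names = [label_to_name(label) for label in [9, 2, 1]]
print(names)
```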

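2.2 Step-by-step analysis and implementation:

- Load the dataset:

- Get the dataset from datasets; it comes already split into a training set and a test set

class SingleNN(object):

    def __init__(self):
        (self.train, self.train_label), (self.test, self.test_label) = keras.datasets.fashion_mnist.load_data()

- Write the model

- Two layers: a hidden layer of 128 neurons, and a fully connected output layer with 10 classes

- Dense(128, activation=tf.nn.relu):

- Defines the network structure and initializes the weight and bias parameters

class SingleNN(object):

    model = keras.Sequential([
        keras.layers.Flatten(input_shape=(28, 28)),
        keras.layers.Dense(128, activation=tf.nn.relu),
        keras.layers.Dense(10, activation=tf.nn.softmax)
    ])

Here the model is simply placed in the class as a fixed class attribute. For now the activation function is tf.nn.relu.

- Compile, defining the optimization process:

Here we choose the SGD optimizer

- keras.optimizers.SGD()

keras.optimizers.SGD(learning_rate=0.01)

loss: tf.keras.losses.sparse_categorical_crossentropy

metrics: accuracy

    def compile(self):
        # `learning_rate` replaces the deprecated `lr` argument
        SingleNN.model.compile(optimizer=keras.optimizers.SGD(learning_rate=0.01),
                               loss=tf.keras.losses.sparse_categorical_crossentropy,
                               metrics=['accuracy'])
        return None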
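What the SGD optimizer does with its learning rate can be sketched in a few lines of plain Python. This is the basic update rule only (no momentum), applied to a toy one-parameter loss that is an assumption made for illustration, not the network's actual loss.

```python
def sgd_step(params, grads, learning_rate=0.01):
    """One plain SGD update: move each parameter against its gradient."""
    return [p - learning_rate * g for p, g in zip(params, grads)]


# Toy problem: minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w = [0.0]
for _ in range(1000):
    grad = [2.0 * (w[0] - 3.0)]
    w = sgd_step(w, grad, learning_rate=0.01)

print(w[0])  # approaches the minimum at w = 3
```

A smaller learning rate takes smaller steps and needs more updates; one that is too large can overshoot the minimum.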

- Train:

- About the number of epochs and the number of samples per step

- Try batch_size=32 or 128 and compare the results (how to choose the value is covered in the optimization section)

    def fit(self):
        SingleNN.model.fit(self.train, self.train_label, epochs=5, batch_size=32)
        return None

- Evaluate:

    def evaluate(self):
        test_loss, test_acc = SingleNN.model.evaluate(self.test, self.test_label)
        print(test_loss, test_acc)
        return None

Example code:

import tensorflow as tf
from keras import datasets
from keras.models import Sequential
from keras.layers import Flatten, Dense
from keras.optimizers import SGD
from keras.losses import sparse_categorical_crossentropy


class SingleNN(object):
    """
    Build a two-layer neural network to train and predict on fashion images
    """

    # Build the neural network model
    model = Sequential([
        Flatten(input_shape=(28, 28)),  # reshape the input into the shape the network expects
        Dense(128, activation=tf.nn.relu),  # hidden layer with 128 neurons
        Dense(10, activation=tf.nn.softmax)  # 10-class problem: the number of output neurons must equal the number of classes
    ])

    def __init__(self):
        # Load the dataset; returns two tuples
        # x_train: (60000, 28, 28), y_train: (60000,)
        (self.x_train, self.y_train), (self.x_test, self.y_test) = datasets.fashion_mnist.load_data()

    def compile(self):
        """
        Compile the model with its optimizer, loss, and accuracy metric
        :return:
        """
        SingleNN.model.compile(optimizer=SGD(learning_rate=0.01), loss=sparse_categorical_crossentropy, metrics=['accuracy'])
        return None

    def fit(self):
        """
        Train the model with fit
        :return:
        """
        # Features and targets of the training samples
        SingleNN.model.fit(self.x_train, self.y_train, epochs=5)
        return None

    def evaluate(self):
        """
        Evaluate the model on the test set
        :return:
        """
        test_loss, test_acc = SingleNN.model.evaluate(self.x_test, self.y_test)
        print(test_loss, test_acc)
        return None


if __name__ == '__main__':
    snn = SingleNN()
    snn.compile()
    snn.fit()
    snn.evaluate()

Output:

Epoch 1/5

1875/1875 [==============================] - 1s 579us/step - loss: 171.3367 - accuracy: 0.1105

Epoch 2/5

1875/1875 [==============================] - 1s 578us/step - loss: 2.2984 - accuracy: 0.1015

Epoch 3/5

1875/1875 [==============================] - 1s 597us/step - loss: 2.3026 - accuracy: 0.0987

Epoch 4/5

1875/1875 [==============================] - 1s 639us/step - loss: 2.3025 - accuracy: 0.0962

Epoch 5/5

1875/1875 [==============================] - 1s 635us/step - loss: 2.3025 - accuracy: 0.0983

313/313 [==============================] - 0s 551us/step - loss: 2.3027 - accuracy: 0.1000

2.3027026653289795 0.10000000149011612

- The results are poor; the features need to be normalized (explained in the optimization section)

# Normalize the feature values
# All pixel values lie in the range 0~255
self.train = self.train / 255.0
self.test = self.test / 255.0
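Two quick checks help interpret the unnormalized run. A loss stuck at about 2.3026 is what a 10-class classifier gets when it assigns every class the same probability (the loss equals ln 10), which matches the accuracy of about 0.10. Dividing by 255 simply rescales each pixel into [0, 1]. A pure-Python sketch with made-up pixel values:

```python
import math

# 1) Loss of a model that predicts 1/10 for every class, whatever the input
num_classes = 10
uniform_loss = -math.log(1.0 / num_classes)
print(uniform_loss)  # about 2.3026

# 2) Rescaling raw pixel values from 0~255 to 0~1
raw_pixels = [0, 51, 128, 255]  # hypothetical pixel values
scaled = [p / 255.0 for p in raw_pixels]
print(scaled)
```

Inputs in the hundreds can produce very large activations and gradients in the first updates, which is consistent with the first-epoch loss of 171.3367 in the log above; after rescaling, the same network trains normally.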

Example code:

import tensorflow as tf
from keras import datasets
from keras.models import Sequential
from keras.layers import Flatten, Dense
from keras.optimizers import SGD
from keras.losses import sparse_categorical_crossentropy


class SingleNN(object):
    """
    Build a two-layer neural network to train and predict on fashion images
    """

    # Build the neural network model
    model = Sequential([
        Flatten(input_shape=(28, 28)),  # reshape the input into the shape the network expects
        Dense(128, activation=tf.nn.relu),  # hidden layer with 128 neurons
        Dense(10, activation=tf.nn.softmax)  # 10-class problem: the number of output neurons must equal the number of classes
    ])

    def __init__(self):
        # Load the dataset; returns two tuples
        # x_train: (60000, 28, 28), y_train: (60000,)
        (self.x_train, self.y_train), (self.x_test, self.y_test) = datasets.fashion_mnist.load_data()

        # Normalize the features; note that the targets must not be normalized
        self.x_train = self.x_train / 255.0
        self.x_test = self.x_test / 255.0

    def compile(self):
        """
        Compile the model with its optimizer, loss, and accuracy metric
        :return:
        """
        SingleNN.model.compile(optimizer=SGD(learning_rate=0.01), loss=sparse_categorical_crossentropy, metrics=['accuracy'])
        return None

    def fit(self):
        """
        Train the model with fit
        :return:
        """
        # Features and targets of the training samples
        SingleNN.model.fit(self.x_train, self.y_train, epochs=5)
        return None

    def evaluate(self):
        """
        Evaluate the model on the test set
        :return:
        """
        test_loss, test_acc = SingleNN.model.evaluate(self.x_test, self.y_test)
        print(test_loss, test_acc)
        return None


if __name__ == '__main__':
    snn = SingleNN()
    snn.compile()
    snn.fit()
    snn.evaluate()

Output:

Epoch 1/5

1875/1875 [==============================] - 1s 590us/step - loss: 0.7474 - accuracy: 0.7579

Epoch 2/5

1875/1875 [==============================] - 1s 580us/step - loss: 0.5147 - accuracy: 0.8258

Epoch 3/5

1875/1875 [==============================] - 1s 575us/step - loss: 0.4706 - accuracy: 0.8378

Epoch 4/5

1875/1875 [==============================] - 1s 573us/step - loss: 0.4477 - accuracy: 0.8450

Epoch 5/5

1875/1875 [==============================] - 1s 567us/step - loss: 0.4304 - accuracy: 0.8515

313/313 [==============================] - 0s 502us/step - loss: 0.4560 - accuracy: 0.8411

0.45601874589920044 0.8410999774932861

The results are now clearly much better!

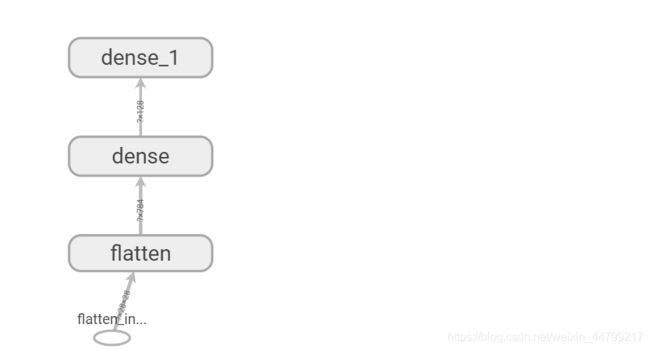

2.3 Printing the model

- model.summary(): shows the model structure

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

flatten (Flatten) (None, 784) 0

_________________________________________________________________

dense (Dense) (None, 128) 100480

_________________________________________________________________

dense_1 (Dense) (None, 10) 1290

=================================================================

Total params: 101,770

Trainable params: 101,770

Non-trainable params: 0

2.4 Manually saving and restoring the model

- Saving in ckpt format

- model.save_weights('./weights/my_model')

- model.load_weights('./weights/my_model')

SingleNN.model.save_weights("./ckpt/SingleNN")

    def predict(self):
        # Reuse the previously trained weights for testing
        if os.path.exists("./ckpt/checkpoint"):
            SingleNN.model.load_weights("./ckpt/SingleNN")

        predictions = SingleNN.model.predict(self.test)

        # Post-process the predictions: pick the most probable class for each sample
        print(np.argmax(predictions, 1))
        return
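The post-processing step turns each row of 10 probabilities into a single class id. A pure-Python sketch of what np.argmax(predictions, 1) computes per row follows; `argmax_rows` is a hypothetical helper and the probability rows are invented, shortened to 3 classes.

```python
def argmax_rows(rows):
    """Index of the largest value in each row (first index wins on ties, as in np.argmax)."""
    return [max(range(len(row)), key=row.__getitem__) for row in rows]


predictions = [
    [0.05, 0.15, 0.80],  # most probable class: 2
    [0.90, 0.06, 0.04],  # most probable class: 0
    [0.40, 0.40, 0.20],  # tie between 0 and 1 -> 0
]
labels = argmax_rows(predictions)
print(labels)
```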

Example code:

import os
import numpy as np
import tensorflow as tf
from keras import datasets
from keras.models import Sequential
from keras.layers import Flatten, Dense
from keras.optimizers import SGD
from keras.losses import sparse_categorical_crossentropy


class SingleNN(object):
    """
    Build a two-layer neural network to train and predict on fashion images
    """

    # Build the neural network model
    model = Sequential([
        Flatten(input_shape=(28, 28)),  # reshape the input into the shape the network expects
        Dense(128, activation=tf.nn.relu),  # hidden layer with 128 neurons
        Dense(10, activation=tf.nn.softmax)  # 10-class problem: the number of output neurons must equal the number of classes
    ])

    # print(model.summary())

    def __init__(self):
        # Load the dataset; returns two tuples
        # x_train: (60000, 28, 28), y_train: (60000,)
        (self.x_train, self.y_train), (self.x_test, self.y_test) = datasets.fashion_mnist.load_data()

        # Normalize the features; note that the targets must not be normalized
        self.x_train = self.x_train / 255.0
        self.x_test = self.x_test / 255.0

    def compile(self):
        """
        Compile the model with its optimizer, loss, and accuracy metric
        :return:
        """
        SingleNN.model.compile(optimizer=SGD(learning_rate=0.01), loss=sparse_categorical_crossentropy, metrics=['accuracy'])
        return None

    def fit(self):
        """
        Train the model with fit
        :return:
        """
        # Features and targets of the training samples
        SingleNN.model.fit(self.x_train, self.y_train, epochs=5)
        return None

    def evaluate(self):
        """
        Evaluate the model on the test set
        :return:
        """
        test_loss, test_acc = SingleNN.model.evaluate(self.x_test, self.y_test)
        print(test_loss, test_acc)
        return None

    def predict(self):
        """
        Predict on the test set
        :return:
        """
        # Load the saved weights first, if they exist
        if os.path.exists("./tmp/ckpt/checkpoint"):
            SingleNN.model.load_weights("./tmp/ckpt/SingleNN")

        predictions = SingleNN.model.predict(self.x_test)
        return predictions


if __name__ == '__main__':
    snn = SingleNN()
    # snn.compile()
    # snn.fit()
    # snn.evaluate()
    # # Save the model weights
    # SingleNN.model.save_weights("./tmp/ckpt/SingleNN")

    # Run prediction
    predictions = snn.predict()
    print(predictions)
    print(np.argmax(predictions, axis=1))

Output:

[[9.6156173e-06 6.1552450e-06 2.5047178e-05 ... 2.4859291e-01

5.6075659e-03 6.3287145e-01]

[2.0993820e-04 5.5472728e-06 9.6353877e-01 ... 8.4127844e-11

1.1876404e-04 6.0094135e-10]

[5.0108505e-05 9.9980801e-01 1.5537924e-05 ... 2.3488226e-07

5.4975771e-07 5.3934581e-08]

...

[2.8350599e-02 7.1193126e-06 2.2114406e-03 ... 3.6337989e-04

8.3771253e-01 1.2190870e-05]

[5.7001798e-05 9.9706584e-01 6.9393049e-05 ... 1.9720854e-05

4.7423578e-06 2.9315692e-05]

[3.8735327e-04 3.1307348e-04 8.3889149e-04 ... 9.6547395e-02

2.1333354e-02 8.7000076e-03]]

[9 2 1 ... 8 1 5]

- Saving as an h5 file [faster to read, and the more commonly used option going forward] [just append .h5 to the ckpt path used above]

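SingleNN.model.save_weights("./ckpt/SingleNN.h5")

    def predict(self):
        # Reuse the previously trained weights for testing
        # An .h5 save writes a single file and no "checkpoint" index file, so test for the .h5 file itself
        if os.path.exists("./ckpt/SingleNN.h5"):
            SingleNN.model.load_weights("./ckpt/SingleNN.h5")

        predictions = SingleNN.model.predict(self.test)
        print(np.argmax(predictions, 1))
        return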

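3. The callbacks argument of fit in detail

A callback is a set of functions applied at given stages of the training procedure. You can use callbacks to get a view of the model's internal state and statistics during training. You can pass a list of callbacks (as the keyword argument callbacks) to the fit() method of the Sequential or Model class. The relevant methods of the callbacks are then called at each stage of training.

- Customized model saving

- Saving events files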

3.1 ModelCheckpoint

from tensorflow.keras.callbacks import ModelCheckpoint

- keras.callbacks.ModelCheckpoint(filepath, monitor='val_loss', save_best_only=False, save_weights_only=False, mode='auto', period=1)

- Saves the model after every epoch, or every period epochs

- filepath: string, the path to save the model to

- With the format weights.{epoch:02d}-{val_loss:.2f}.hdf5, the epoch number and the validation loss are written into the file name

- With weights.{epoch:02d}-{val_acc:.2f}.hdf5, the validation accuracy is written into the file name instead

- monitor: the quantity to monitor, set to 'val_acc' or 'val_loss'

- save_best_only: if True, a checkpoint is saved only when the monitored quantity improves on the best value so far

- save_weights_only: if True, only the weights are saved (model.save_weights(filepath)); otherwise the full model is saved (model.save(filepath))

- mode: one of {auto, min, max}. With save_best_only=True, use max for val_acc and min for val_loss

- period: the interval (in epochs) between checkpoints
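How the filepath template, save_best_only, and mode interact can be sketched without Keras. The loop below only imitates the decision the callback makes after each epoch; `should_save` is a hypothetical helper, the validation losses are invented, and 1-based epoch numbers in the file name are an assumption of this sketch.

```python
def should_save(current, best, mode):
    """True if `current` improves on `best`: lower is better for 'min', higher for 'max'."""
    if best is None:
        return True
    return current < best if mode == "min" else current > best


template = "weights.{epoch:02d}-{val_loss:.2f}.hdf5"
val_losses = [0.75, 0.51, 0.53, 0.47]  # hypothetical validation loss per epoch

best = None
saved = []
for epoch, val_loss in enumerate(val_losses, start=1):
    if should_save(val_loss, best, mode="min"):
        best = val_loss
        # The placeholders are filled in with str.format-style formatting
        saved.append(template.format(epoch=epoch, val_loss=val_loss))

print(saved)
```

Epoch 3 is skipped because its validation loss (0.53) is worse than the best seen so far (0.51). With a template that omits the placeholders, every save would write to the same file name.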

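# The monitored name must match a logged metric: with metrics=['accuracy'] TF 2.x logs
# 'val_accuracy' (older versions logged 'val_acc'). Newer versions also replace `period` with `save_freq`.
check = ModelCheckpoint('./ckpt/singlenn_{epoch:02d}-{val_accuracy:.2f}.h5',
                        monitor='val_accuracy',
                        save_best_only=True,
                        save_weights_only=True,
                        mode='auto',
                        period=1)

# val_* metrics only exist when validation data is supplied to fit
SingleNN.model.fit(self.train, self.train_label, epochs=5, callbacks=[check],
                   validation_data=(self.test, self.test_label))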

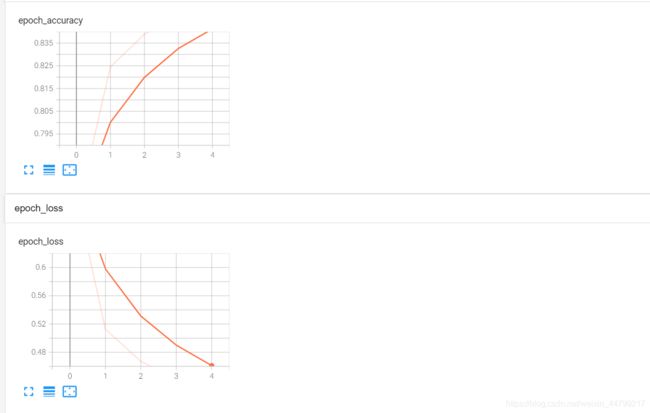

3.2 TensorBoard

- Add TensorBoard to monitor the loss and other metrics

- keras.callbacks.TensorBoard(log_dir='./logs', histogram_freq=0, batch_size=32, write_graph=True, write_grads=False, write_images=False, embeddings_freq=0, embeddings_layer_names=None, embeddings_metadata=None, embeddings_data=None, update_freq='epoch')

- log_dir: the directory where the event files are saved

- write_graph=True: whether to display the graph structure

- write_images=False: whether to display images

- write_grads=True: whether to display gradients; histogram_freq must be greater than 0

# Add TensorBoard monitoring
tensorboard = keras.callbacks.TensorBoard(log_dir='./graph', histogram_freq=1,
                                          write_graph=True, write_images=True)

SingleNN.model.fit(self.train, self.train_label, epochs=5, callbacks=[tensorboard])

Example code:

import os
import numpy as np
import tensorflow as tf
from keras import datasets
from keras.models import Sequential
from keras.layers import Flatten, Dense
from keras.optimizers import SGD
from keras.callbacks import ModelCheckpoint, TensorBoard
from keras.losses import sparse_categorical_crossentropy


class SingleNN(object):
    """
    Build a two-layer neural network to train and predict on fashion images
    """

    # Build the neural network model
    model = Sequential([
        Flatten(input_shape=(28, 28)),  # reshape the input into the shape the network expects
        Dense(128, activation=tf.nn.relu),  # hidden layer with 128 neurons
        Dense(10, activation=tf.nn.softmax)  # 10-class problem: the number of output neurons must equal the number of classes
    ])

    # print(model.summary())

    def __init__(self):
        # Load the dataset; returns two tuples
        # x_train: (60000, 28, 28), y_train: (60000,)
        (self.x_train, self.y_train), (self.x_test, self.y_test) = datasets.fashion_mnist.load_data()

        # Normalize the features; note that the targets must not be normalized
        self.x_train = self.x_train / 255.0
        self.x_test = self.x_test / 255.0

    def compile(self):
        """
        Compile the model with its optimizer, loss, and accuracy metric
        :return:
        """
        SingleNN.model.compile(optimizer=SGD(learning_rate=0.01), loss=sparse_categorical_crossentropy, metrics=['accuracy'])
        return None

    def fit(self):
        """
        Train the model with fit
        :return:
        """
        # Add callbacks to fit to record the training process
        # modelcheck = ModelCheckpoint(
        #     filepath="./tmp/ckpt/singleNN_{epoch:02d}-{val_loss:.2f}.h5",
        #     monitor='val_loss',  # monitor the loss or the accuracy
        #     save_best_only=True,
        #     save_weights_only=True,
        #     mode='auto',
        #     period=1
        # )

        # Create the TensorBoard callback
        board = TensorBoard(log_dir="./tmp/graph/", histogram_freq=1, write_graph=True, write_images=True)

        # Features and targets of the training samples
        SingleNN.model.fit(self.x_train, self.y_train, epochs=5, callbacks=[board])
        return None

    def evaluate(self):
        """
        Evaluate the model on the test set
        :return:
        """
        test_loss, test_acc = SingleNN.model.evaluate(self.x_test, self.y_test)
        print(test_loss, test_acc)
        return None

    def predict(self):
        """
        Predict on the test set
        :return:
        """
        # Load the saved weights first
        # if os.path.exists("./tmp/ckpt/checkpoint"):
        SingleNN.model.load_weights("./tmp/ckpt/SingleNN.h5")

        predictions = SingleNN.model.predict(self.x_test)
        return predictions


if __name__ == '__main__':
    snn = SingleNN()
    snn.compile()
    snn.fit()
    snn.evaluate()

    # Save the model weights
    # SingleNN.model.save_weights("./tmp/ckpt/SingleNN.h5")

    # Run prediction
    # predictions = snn.predict()
    # print(predictions)
    # print(np.argmax(predictions, axis=1))