PyTorch - Deep Learning - Predicting Stock Trends with an LSTM

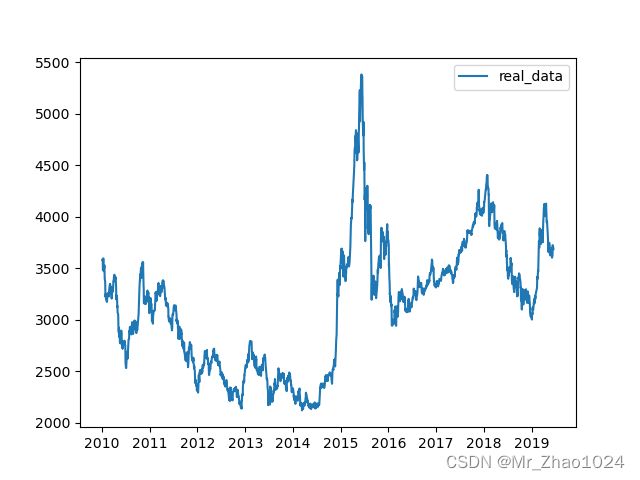

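This example uses CSI 300 index data and takes the daily high price. An LSTM model is trained to capture the temporal structure of the high price, so that it learns to predict a given day's value from the previous n days.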

The dataset can be downloaded with tushare:

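```python
import tushare as ts

cons = ts.get_apis()  # open a connection

# Fetch CSI 300 index (000300) data: trade date (datetime), open (open), close (close),
# high (high), low (low), volume (vol), turnover (amount), and percent change (p_change)
data = ts.bar('000300', conn=cons, asset='INDEX', start_date='2010-01-01', end_date='')

# drop rows that contain null values
data = data.dropna()

# save; read_data() below loads the file from ./data/sh300.csv
data.to_csv('sh300.csv')
```

The dataset can also be downloaded from my resources. The link is:

My training dataset, the CSI 300 dataset sh300.csv (CSDN download)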

Define constants

```python
"""
function: predict stock movements with an LSTM network
date: 2022/01/15
author: zyd
"""
import pandas as pd
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import datetime
import numpy as np
from torch.utils.data import Dataset, DataLoader
from pandas.plotting import register_matplotlib_converters

N = 30            # use the previous N days as input
LR = 0.001        # learning rate
EPOCH = 200       # number of training epochs
batch_size = 20
train_end = -600  # negative offset: the last 600 rows are held out as the test set

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
```
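Because `train_end` is negative, the split in `read_data` is plain slicing from the end of the series. The small sketch below uses a hypothetical 10-element Python list in place of the pandas Series. It sets `n=3` and `train_end=-4` in place of `N=30` and `-600`, and shows which rows land where. The test slice starts `n` rows early so that its first day still has a full window of history.

```python
# toy stand-in for the price column (hypothetical values 0..9)
series = list(range(10))
n = 3           # days of history per sample (N = 30 in the article)
train_end = -4  # hold out the last 4 rows (-600 in the article)

train_part = series[:train_end]     # rows 0..5, used to build training windows
test_part = series[train_end - n:]  # rows 3..9: n days of history, then the 4 test days

print(train_part)  # [0, 1, 2, 3, 4, 5]
print(test_part)   # [3, 4, 5, 6, 7, 8, 9]
```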

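Read the data

```python
# CSI 300 index (000300) data: trade date (datetime), open (open), close (close),
# high (high), low (low), volume (vol), turnover (amount), percent change (p_change).
# Total number of rows: 2295


def generate_data_by_n_days(series, n, index=False):
    """
    Turn a series into a (len(series) - n) x (n + 1) table for time-series training.
    Each row holds n consecutive days as features (c0 ... c{n-1}) and the day that
    follows them as the label (last column, y).

    :param series: pandas Series of daily values
    :param n: number of previous days used as input
    :param index: if True, index each row by the date of its label day
    :return: pandas DataFrame with columns c0 ... c{n-1}, y
    """
    if len(series) <= n:
        raise ValueError("Series length is %d, which must be greater than n=%d." % (len(series), n))
    values = series.tolist()
    data = pd.DataFrame()
    for i in range(n):
        data['c%d' % i] = values[i:-(n - i)]
    data['y'] = values[n:]
    if index:
        data.index = series.index[n:]
    return data
```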
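To make the table layout concrete, the snippet below copies the same windowing logic so that it is self-contained. It runs the logic on a hypothetical six-day series with `n=3`, and each resulting row pairs three consecutive days with the day that follows them.

```python
import pandas as pd


def generate_data_by_n_days(series, n, index=False):
    # same windowing logic as above: n lagged columns plus the label column y
    if len(series) <= n:
        raise ValueError("Series length is %d, which must be greater than n=%d." % (len(series), n))
    values = series.tolist()
    data = pd.DataFrame()
    for i in range(n):
        data['c%d' % i] = values[i:-(n - i)]
    data['y'] = values[n:]
    if index:
        data.index = series.index[n:]
    return data


# hypothetical daily highs for six business days
highs = pd.Series([10.0, 11.0, 12.0, 13.0, 14.0, 15.0],
                  index=pd.date_range('2022-01-03', periods=6, freq='B'))
table = generate_data_by_n_days(highs, n=3, index=True)
# 3 rows: (10, 11, 12) -> 13, (11, 12, 13) -> 14, (12, 13, 14) -> 15,
# each indexed by the date of its label day
print(table)
```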

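```python
def read_data(column='high', n=30, all_too=True, index=False, train_end=-500):
    """
    Read the CSV and build the training samples.

    :param column: which price column to use (e.g. 'high')
    :param n: number of previous days used as input
    :param all_too: if True, also return the full column and the list of dates
    :param index: passed through to generate_data_by_n_days
    :param train_end: negative offset; the last -train_end rows form the test set
    :return: training samples, plus (if all_too) the full column and the date list
    """
    data = pd.read_csv('./data/sh300.csv', sep=',', header=0, index_col=0)
    # use the dates as the index; pd.to_datetime accepts both 2010/01/04 and 2010-01-04
    data.index = pd.to_datetime(data.index)
    # the chosen price column (the daily high by default)
    data_column = data[column].copy()
    # split into training and test portions; the test slice starts n days early so that
    # its first day still has n days of history (it is not used further in this script)
    data_column_train, data_column_test = data_column.iloc[:train_end], data_column.iloc[train_end - n:]
    # build the training samples
    data_generate_train = generate_data_by_n_days(data_column_train, n, index=index)
    if all_too:
        return data_generate_train, data_column, data.index.tolist()
    return data_generate_train
```

Define the model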

```python
# Define the model
class RNN(nn.Module):
    def __init__(self, input_size):
        super(RNN, self).__init__()
        self.rnn = nn.LSTM(
            input_size=input_size,
            hidden_size=64,
            num_layers=1,
            batch_first=True,  # inputs are [batch, seq_len, input_size]
        )
        self.out = nn.Sequential(
            nn.Linear(64, 1)
        )

    def forward(self, x):
        r_out, (h_n, h_c) = self.rnn(x, None)  # None: hidden and cell states start at zero
        out = self.out(r_out)  # one prediction per time step: [batch, seq_len, 1]
        return out
```
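The training loop in this article unsqueezes each 30-day window to `[batch, 1, 30]`. That is a sequence of length 1 whose single step carries all 30 prices as features. The sketch below checks the shapes with `batch_first=True` on random inputs, assuming a batch size of 20. For comparison, it also shows the alternative layout `[batch, 30, 1]`, where the LSTM steps through the 30 days one at a time. The alternative is only an illustration, not what this article trains.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch, n_days, hidden = 20, 30, 64
window = torch.randn(batch, n_days)  # hypothetical batch of standardized 30-day windows

# layout used in this article: seq_len=1, input_size=30
lstm_a = nn.LSTM(input_size=n_days, hidden_size=hidden, num_layers=1, batch_first=True)
out_a, (h_a, c_a) = lstm_a(window.unsqueeze(1))   # input [20, 1, 30]
print(out_a.shape)   # torch.Size([20, 1, 64])

# alternative layout: seq_len=30, input_size=1 (the LSTM recurs over the 30 days)
lstm_b = nn.LSTM(input_size=1, hidden_size=hidden, num_layers=1, batch_first=True)
out_b, (h_b, c_b) = lstm_b(window.unsqueeze(-1))  # input [20, 30, 1]
print(out_b.shape)   # torch.Size([20, 30, 64])

# with the alternative layout, a Linear(64, 1) head on the last time step
# gives one prediction per sample
head = nn.Linear(hidden, 1)
pred_b = head(out_b[:, -1, :])
print(pred_b.shape)  # torch.Size([20, 1])
```

With `seq_len=1` and a zero initial state, the recurrent weights only ever multiply zeros. As a result, the article's model acts much like a gated feed-forward layer over the 30 inputs. Whether the `[batch, 30, 1]` layout predicts better on this data would need to be tested.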

```python
class mytrainset(Dataset):
    def __init__(self, data):
        # all columns except the last are features; the last column is the label
        self.data, self.label = data[:, :-1].float(), data[:, -1].float()

    def __getitem__(self, index):
        return self.data[index], self.label[index]

    def __len__(self):
        return len(self.data)
```

Train the model

```python
# Train the model
def train():
    register_matplotlib_converters()
    # training samples, the full price column, and the list of dates
    data, data_all, data_index = read_data('high', n=N, train_end=train_end)

    # plot the raw daily highs (adjust the output paths to your own machine)
    data_all = np.asarray(data_all.tolist())
    plt.plot(data_index, data_all, label='real_data')
    plt.legend(loc='upper right')
    plt.savefig(r'D:\ClassLearnParper\pytorch_gpu\pytorch_deeplearning\chapter7_自然语言处理基础\data\原高价数据')
    plt.show()

    # standardize with one mean/std computed over the whole training matrix
    data_numpy = np.asarray(data)
    data_mean = np.mean(data_numpy)
    data_std = np.std(data_numpy)
    data_numpy = (data_numpy - data_mean) / data_std
    data_tensor = torch.Tensor(data_numpy)
    trainset = mytrainset(data_tensor)
    trainloader = DataLoader(trainset, batch_size=batch_size, shuffle=False)

    # log the loss with tensorboardX so it can be viewed in the browser
    from tensorboardX import SummaryWriter
    # the log directory is created automatically if it does not exist
    writer = SummaryWriter(logdir=r'D:\ClassLearnParper\pytorch_gpu\tensorboardX_logs')

    rnn = RNN(N).to(device)
    optimizer = torch.optim.Adam(rnn.parameters(), lr=LR)
    loss_func = nn.MSELoss()

    for step in range(EPOCH):
        for tx, ty in trainloader:
            tx = tx.to(device)
            ty = ty.to(device)
            # insert an axis at dim 1: [batch, N] -> [batch, seq_len=1, input_size=N]
            output = rnn(torch.unsqueeze(tx, dim=1))
            # flatten [batch, 1, 1] -> [batch] to match the labels (also safe for a batch of 1)
            loss = loss_func(output.view(-1), ty)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # record the loss of the epoch's last batch
        writer.add_scalar('sh300_loss', loss.item(), step)
    writer.close()

    # test the model: one-step-ahead predictions over the whole series
    generate_data_train = []
    generate_data_test = []
    test_index = len(data_all) + train_end
    # scale the full series with the training statistics
    data_all_nomal = (data_all - data_mean) / data_std
    data_all_nomal_tensor = torch.Tensor(data_all_nomal)
    rnn.eval()
    with torch.no_grad():
        for i in range(N, len(data_all)):
            x = data_all_nomal_tensor[i - N:i].to(device)
            # the LSTM expects a 3-D input, so add two axes: [N] -> [1, 1, N]
            x = torch.unsqueeze(torch.unsqueeze(x, dim=0), dim=0)
            # undo the standardization to get back to index points
            pred = rnn(x).item() * data_std + data_mean
            if i < test_index:
                generate_data_train.append(pred)
            else:
                generate_data_test.append(pred)

    plt.plot(data_index[N:train_end], generate_data_train, label='generate_train')
    plt.plot(data_index[train_end:], generate_data_test, label='generate_test')
    plt.plot(data_index[train_end:], data_all[train_end:], label='real-data')
    plt.legend()
    plt.savefig(r'D:\ClassLearnParper\pytorch_gpu\pytorch_deeplearning\chapter7_自然语言处理基础\data\结果图')
    plt.show()
    plt.clf()

    # zoom in on the first 100 test days
    zoom = 100
    plt.plot(data_index[train_end:][:zoom], data_all[train_end:][:zoom], label='real-data')
    plt.plot(data_index[train_end:][:zoom], generate_data_test[:zoom], label='generate_test')
    plt.legend()
    plt.savefig(r'D:\ClassLearnParper\pytorch_gpu\pytorch_deeplearning\chapter7_自然语言处理基础\data\真实值与预测值')
    plt.show()
    return
```
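`train()` standardizes with a single mean and standard deviation computed over the whole training matrix. It then maps predictions back with `pred * data_std + data_mean`. The minimal numpy sketch below shows that round trip on made-up prices. It also shows the detail that matters for evaluation: unseen test prices are scaled with the training statistics, not their own.

```python
import numpy as np

# hypothetical training matrix: 3 samples x (2 input days + 1 label)
train_matrix = np.array([[3000.0, 3010.0, 3020.0],
                         [3010.0, 3020.0, 3030.0],
                         [3020.0, 3030.0, 3040.0]])

# one scalar mean/std for the whole matrix, as in train()
data_mean = np.mean(train_matrix)
data_std = np.std(train_matrix)
scaled = (train_matrix - data_mean) / data_std  # mean ~0, std ~1

# hypothetical unseen test prices reuse the training statistics
test_prices = np.array([3050.0, 3060.0])
test_scaled = (test_prices - data_mean) / data_std

# a model output in standardized units is mapped back to index points
restored = scaled * data_std + data_mean
print(np.allclose(restored, train_matrix))  # True
```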

```python
if __name__ == '__main__':
    train()
```

Training results:

The real data:

Loss values during training:

After training: training set, test set, and predicted values:

Real vs. predicted values:
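The plots compare the curves by eye. One way to put a number on the test-set fit (not part of the original script) is the root-mean-square error. It can be reported alongside a naive "persistence" baseline that predicts each day's high as the previous day's high. In `train()` that baseline would be `data_all[train_end - 1:-1]`, aligned with `data_all[train_end:]`. The sketch below uses hypothetical arrays standing in for the real ones.

```python
import numpy as np


def rmse(pred, actual):
    """Root-mean-square error between two equally long 1-D arrays."""
    pred = np.asarray(pred, dtype=float)
    actual = np.asarray(actual, dtype=float)
    return float(np.sqrt(np.mean((pred - actual) ** 2)))


# hypothetical stand-ins: the day before the test period, then five test days
previous_day = 4000.0
actual = np.array([4010.0, 4025.0, 4005.0, 4030.0, 4040.0])      # like data_all[train_end:]
model_pred = np.array([4003.0, 4015.0, 4020.0, 4012.0, 4028.0])  # like generate_data_test

# persistence baseline: yesterday's actual high as today's prediction
persistence = np.concatenate(([previous_day], actual[:-1]))

print('model RMSE:', rmse(model_pred, actual))
print('persistence RMSE:', rmse(persistence, actual))
```

If the model's RMSE is not clearly below the persistence RMSE, the predicted curve is mostly tracking the latest known price rather than anticipating moves.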