tf.segment_sum(), tf.while_loop(), tf.clip_by_value(), tf.tile(), tf.unique_with_counts()

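Over the past few days I was reading the loss-function code of the large LaneNet project. I ran into many TensorFlow functions I had never seen before, so after looking them up online I wrote these study notes.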

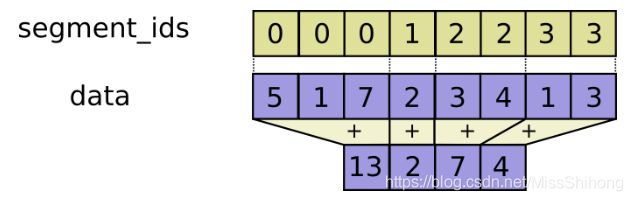

Segmented sum: tf.segment_sum()

Since 1.14 it has lived in the math module:

```python
tf.math.segment_sum(
    data,
    segment_ids,
    name=None
)
```

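- $output_i = \sum_j data_j$, where the sum runs over all j such that segment_ids[j] == i;
- the length of segment_ids must equal the size of the first dimension of data;
- segment_ids must be sorted in increasing (non-decreasing) order;
- TF also provides segmented mean, max, min and similar operations (tf.math.segment_mean, tf.math.segment_max, tf.math.segment_min).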
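The following small sketch makes these rules concrete. It assumes TensorFlow 2.x with eager execution, and the data values are made up for illustration. It sums, averages and takes the maximum of the rows that share a segment id:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

data = tf.constant([[1, 2, 3, 4],
                    [4, 3, 2, 1],
                    [5, 6, 7, 8]])
# segment_ids is sorted: rows 0 and 1 belong to segment 0, row 2 to segment 1
segment_ids = tf.constant([0, 0, 1])

seg_sum = tf.math.segment_sum(data, segment_ids)
# [[5 5 5 5]
#  [5 6 7 8]]

# the mean/max variants take the same arguments (cast to float for the mean)
seg_mean = tf.math.segment_mean(tf.cast(data, tf.float32), segment_ids)
# [[2.5 2.5 2.5 2.5]
#  [5.  6.  7.  8. ]]
seg_max = tf.math.segment_max(data, segment_ids)
# [[4 3 3 4]
#  [5 6 7 8]]
```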

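tf.unsorted_segment_sum() is an improved version. Its segment_ids do not need to be sorted, and they do not have to cover every value from 0 to num_segments - 1. Any segment that receives no elements is filled with 0.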

```python
tf.math.unsorted_segment_sum(
    data,
    segment_ids,
    num_segments,  # the largest value in segment_ids must be < num_segments
    name=None
)
```

For worked examples of these two functions, see https://www.cnblogs.com/gaofighting/p/9706081.html
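Here is a minimal sketch of the unsorted version. It again assumes TensorFlow 2.x eager mode and uses illustrative data. The ids are out of order, and segment 2 receives no elements, so it comes out as 0:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

data = tf.constant([1, 2, 3, 4, 5])
# ids are unsorted, and id 2 never appears
segment_ids = tf.constant([0, 3, 0, 1, 3])

result = tf.math.unsorted_segment_sum(data, segment_ids, num_segments=4)
# segment 0: 1 + 3 = 4, segment 1: 4, segment 2: empty -> 0, segment 3: 2 + 5 = 7
# [4 4 0 7]
```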

Gathering by index: tf.gather()

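Given a 1-D array of indices, it extracts the slices of a tensor at those indices. By default these are the rows along the first axis.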

```python
import tensorflow as tf  # TF 1.x API (tf.Session is not available in TF 2.x)

a = tf.Variable([[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]])
index_a = tf.Variable([0, 2])
b = tf.Variable([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
index_b = tf.Variable([2, 4, 6, 8])

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    print(sess.run(tf.gather(a, index_a)))
    # [[ 1  2  3  4  5]
    #  [11 12 13 14 15]]
    print(sess.run(tf.gather(b, index_b)))
    # [3 5 7 9]
```
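tf.gather() is not limited to the first axis or to distinct indices. The sketch below assumes TensorFlow 2.x eager mode, so no Session is needed. It repeats and reorders rows, then uses the axis parameter to pick columns instead:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

a = tf.constant([[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]])

# indices may repeat and appear in any order
rows = tf.gather(a, [2, 0, 2])
# [[11 12 13 14 15]
#  [ 1  2  3  4  5]
#  [11 12 13 14 15]]

# axis=1 gathers columns instead of rows
cols = tf.gather(a, [0, 2], axis=1)
# [[ 1  3]
#  [ 6  8]
#  [11 13]]
```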

A wrapped while loop: tf.while_loop()

This function really impressed me when I first saw it. The way it is used is very cool.

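```python
loop = [...]            # initial values of the loop variables
while cond(*loop):      # cond returns a boolean
    loop = body(*loop)  # body returns the updated loop variables
```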

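Here loop is the list of elements that must be updated in every iteration (the loop variables). While the condition checked by cond holds, the loop keeps running, and body updates the elements of loop. Once the condition is no longer satisfied, the loop terminates.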

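When you define them, cond and body must take the same arguments, one per loop variable. cond returns a boolean scalar, and body must return all of its arguments (updated) in the same structure.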
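The following is a minimal sketch of these rules, assuming TensorFlow 2.x eager mode. The loop variables are a counter i and a running total. cond and body take the same two arguments, and body returns both of them updated. The loop computes 0 + 1 + ... + 9:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

def cond(i, total):
    # keep looping while i < 10
    return tf.less(i, 10)

def body(i, total):
    # same arguments as cond; returns all loop variables, updated
    return i + 1, total + i

i_final, total_final = tf.while_loop(cond, body, [tf.constant(0), tf.constant(0)])
# i_final = 10, total_final = 0 + 1 + ... + 9 = 45
```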

Here is the while_loop() that LaneNet uses when computing its loss:

```python
# prediction, correct_label, feature_dim, image_shape, delta_v, delta_d,
# param_var, param_dist, param_reg and discriminative_loss_single are
# defined in the enclosing LaneNet code.
def cond(label, batch, out_loss, out_var, out_dist, out_reg, i):
    # keep looping while i < batch size
    return tf.less(i, tf.shape(batch)[0])

def body(label, batch, out_loss, out_var, out_dist, out_reg, i):
    # loss terms for the i-th sample of the batch
    disc_loss, l_var, l_dist, l_reg = discriminative_loss_single(
        prediction[i], correct_label[i], feature_dim, image_shape,
        delta_v, delta_d, param_var, param_dist, param_reg)

    # TensorArray.write returns a new TensorArray, so it must be reassigned
    out_loss = out_loss.write(i, disc_loss)
    out_var = out_var.write(i, l_var)
    out_dist = out_dist.write(i, l_dist)
    out_reg = out_reg.write(i, l_reg)

    # return every loop variable, with i advanced by one
    return label, batch, out_loss, out_var, out_dist, out_reg, i + 1

# TensorArray is a data structure that supports dynamic writing
output_ta_loss = tf.TensorArray(dtype=tf.float32, size=0, dynamic_size=True)
output_ta_var = tf.TensorArray(dtype=tf.float32, size=0, dynamic_size=True)
output_ta_dist = tf.TensorArray(dtype=tf.float32, size=0, dynamic_size=True)
output_ta_reg = tf.TensorArray(dtype=tf.float32, size=0, dynamic_size=True)

_, _, out_loss_op, out_var_op, out_dist_op, out_reg_op, _ = tf.while_loop(
    cond, body,
    [correct_label, prediction, output_ta_loss, output_ta_var,
     output_ta_dist, output_ta_reg, 0])
```
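You can try the same TensorArray + while_loop pattern in isolation. This is a simplified sketch, not LaneNet's code. It assumes TensorFlow 2.x eager mode, and its per-sample "loss" (a sum of squares) is a hypothetical stand-in for discriminative_loss_single. After the loop, stack() turns the TensorArray into an ordinary tensor that can be averaged over the batch:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

# hypothetical batch of 3 samples with 2 features each
batch = tf.constant([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])

def cond(batch, out_ta, i):
    return tf.less(i, tf.shape(batch)[0])

def body(batch, out_ta, i):
    # hypothetical per-sample "loss": sum of squares of the sample's features
    sample_loss = tf.reduce_sum(tf.square(batch[i]))
    out_ta = out_ta.write(i, sample_loss)  # write returns an updated TensorArray
    return batch, out_ta, i + 1

out_ta = tf.TensorArray(dtype=tf.float32, size=0, dynamic_size=True)
_, out_ta, _ = tf.while_loop(cond, body, [batch, out_ta, tf.constant(0)])

per_sample = out_ta.stack()              # [5., 25., 61.]
batch_loss = tf.reduce_mean(per_sample)  # (5 + 25 + 61) / 3
```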

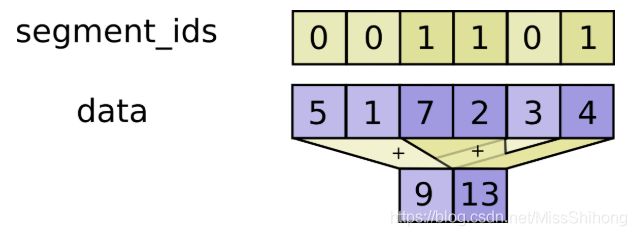

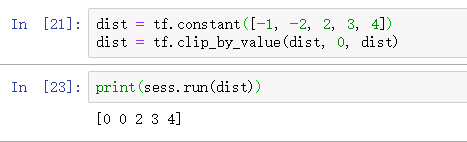

Clipping values: tf.clip_by_value()

```python
tf.clip_by_value(
    t,
    clip_value_min,
    clip_value_max,
    name=None
)
```

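- clip_value_min and clip_value_max can be sequences (a Tensor or a list) as well as scalars. A sequence bound must have a shape broadcastable to that of t, such as the same shape, which lets each element get its own bound.
- This makes it possible to express $[x]_+ = \max(0, x)$: use 0 as the lower bound and the tensor x itself as the upper bound, as in tf.clip_by_value(x, 0., x). The trick works because clip_by_value applies the upper bound before the lower one. The TF docs ask for clip_value_min <= clip_value_max, though, so tf.nn.relu(x) or tf.maximum(x, 0.) expresses the same thing more safely.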
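The following short sketch assumes TensorFlow 2.x eager mode. It first clips with scalar bounds. It then applies the hinge trick with x itself as the upper bound and checks the result against tf.nn.relu:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

x = tf.constant([-2.0, -0.5, 0.0, 1.5, 3.0])

# scalar bounds
clipped = tf.clip_by_value(x, -1.0, 1.0)
# [-1.  -0.5  0.   1.   1. ]

# lower bound 0, upper bound x itself (same shape as x): max(0, x)
hinge = tf.clip_by_value(x, 0.0, x)
# [0.  0.  0.  1.5 3. ]

relu = tf.nn.relu(x)  # the explicit way to write max(0, x)
```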

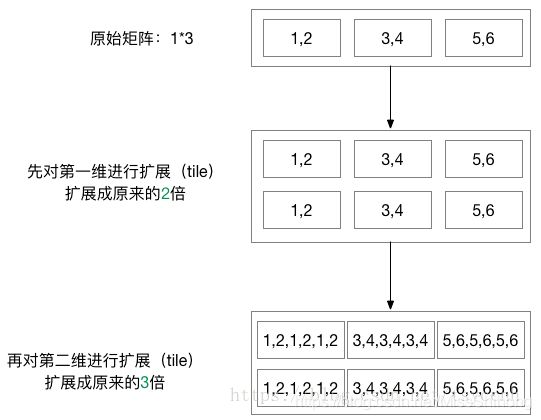

Tensor tiling: tf.tile()

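input is the tensor to be tiled, and multiples says how to tile it.

If input is a 3-D tensor, multiples must be a 1-D tensor of length 3. Its three values give how many times input is replicated along its 1st, 2nd and 3rd dimensions, respectively.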

```python
tf.tile(
    input,
    multiples,
    name=None
)
```

For example:

```python
import tensorflow as tf

a = tf.constant([[1, 2], [3, 4], [5, 6]], dtype=tf.float32)
a1 = tf.tile(a, [2, 3])  # shape [6, 6]: 2 copies along rows, 3 along columns
```
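Loss functions often use tiling to compare every pair of vectors. The sketch below illustrates this under stated assumptions (TensorFlow 2.x eager mode, made-up cluster centers mu); it is not code from LaneNet. Tiling mu in two different ways lines up every pair (mu_i, mu_j), so all pairwise distances come out of one vectorized step:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

# hypothetical cluster centers: C = 3 vectors of dimension D = 2
mu = tf.constant([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])
C = tf.shape(mu)[0]
D = tf.shape(mu)[1]

# rows: mu0, mu1, mu2, mu0, mu1, mu2, mu0, mu1, mu2
rep_a = tf.tile(mu, [C, 1])
# rows: mu0, mu0, mu0, mu1, mu1, mu1, mu2, mu2, mu2
rep_b = tf.reshape(tf.tile(mu, [1, C]), [C * C, D])

# row i*C + j holds mu_i - mu_j
diff = rep_b - rep_a
dist_matrix = tf.reshape(tf.norm(diff, axis=1), [C, C])
# [[ 0.  5. 10.]
#  [ 5.  0.  5.]
#  [10.  5.  0.]]
```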

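Unique elements with counts: tf.unique_with_counts()

For a 1-D tensor x it returns three tensors. y holds the unique elements of x in the order they first appear. idx gives the position in y of each element of x. count gives how many times each element of y occurs in x.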

```python
import tensorflow as tf

x = tf.constant([1, 1, 2, 4, 4, 4, 7, 8, 8])
y, idx, count = tf.unique_with_counts(x)
# y     ==> [1, 2, 4, 7, 8]
# idx   ==> [0, 0, 1, 2, 2, 2, 3, 4, 4]
# count ==> [2, 1, 3, 1, 2]
```
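unique_with_counts() pairs naturally with unsorted_segment_sum() and gather(). You can pass idx directly as segment_ids to average the embeddings of each instance, and gather() then copies each mean back to every pixel. The sketch below assumes TensorFlow 2.x eager mode and uses hypothetical labels and embeddings. Note that y keeps the order in which values first appear, so it is not necessarily sorted:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

# hypothetical data: 6 pixels with 2-D embeddings and unsorted instance labels
labels = tf.constant([7, 3, 7, 3, 3, 9])
emb = tf.constant([[1.0, 1.0], [0.0, 2.0], [3.0, 3.0],
                   [0.0, 4.0], [0.0, 6.0], [5.0, 5.0]])

unique_labels, idx, counts = tf.unique_with_counts(labels)
# unique_labels: [7 3 9]  (first-occurrence order, not sorted)
# idx:           [0 1 0 1 1 2]
# counts:        [2 3 1]

num_instances = tf.size(unique_labels)
sums = tf.math.unsorted_segment_sum(emb, idx, num_instances)
# divide each summed row by its instance's pixel count
means = sums / tf.reshape(tf.cast(counts, tf.float32), [-1, 1])
# [[2. 2.]
#  [0. 4.]
#  [5. 5.]]

# broadcast each instance mean back to its pixels
per_pixel_mean = tf.gather(means, idx)
```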

Selecting elements with a boolean mask: tf.boolean_mask()

- NumPy equivalent: tensor[mask]

```python
tf.boolean_mask(
    tensor,
    mask,
    axis=None,
    name='boolean_mask'
)
```

```python
import numpy as np
import tensorflow as tf

# 2-D example
tensor = [[1, 2], [3, 4], [5, 6]]
mask = np.array([True, False, True])
tf.boolean_mask(tensor, mask)  # [[1, 2], [5, 6]]
```
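The sketch below shows two more cases, assuming TensorFlow 2.x eager mode. In the first, the mask is computed from a condition on the tensor itself, and a 2-D mask gives a flat 1-D result. In the second, a 1-D mask is applied along axis=1:

```python
import tensorflow as tf  # assumes TensorFlow 2.x with eager execution

tensor = tf.constant([[1, 2], [3, 4], [5, 6]])

# 2-D mask with the same shape as tensor: selected elements come back flattened
big = tf.boolean_mask(tensor, tensor > 2)
# [3 4 5 6]

# 1-D mask applied to the second dimension (columns)
second_col = tf.boolean_mask(tensor, [False, True], axis=1)
# [[2]
#  [4]
#  [6]]
```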