pytorch实现基本AutoEncoder与案例

文章目录

- AutoEncoder简介

- 思路与代码细节

-

- 1. 构造数据源

- 2. 构造AutoEncoder网络结构

- 3. 训练代码

- 全部代码

- 参考文章

AutoEncoder简介

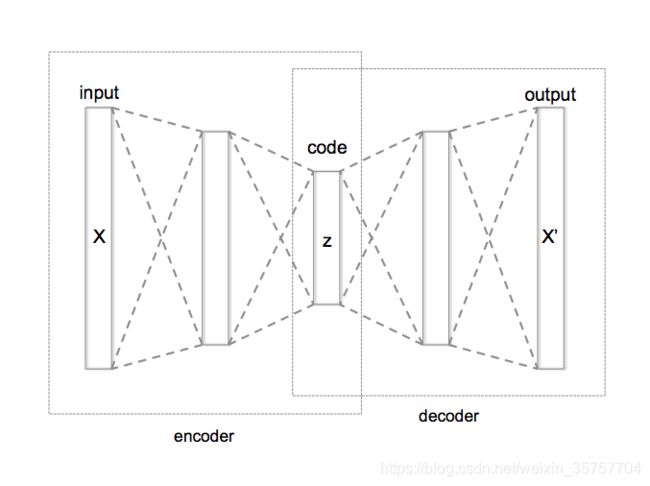

AutoEncoder的思路是使用对称的网络结构,重现原有的特征,常用来降噪与应对对抗样本

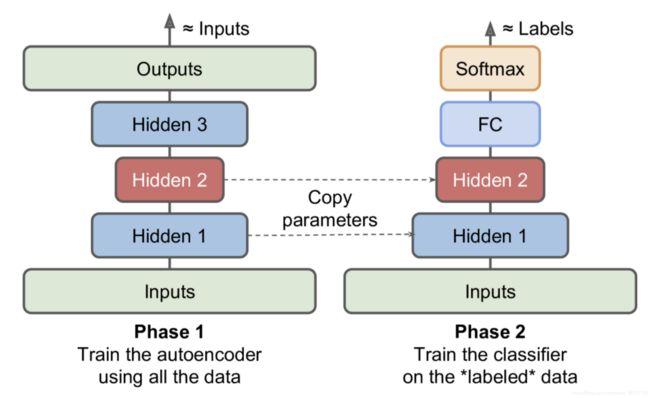

预训练AutoEncoder后运用的示意图:

思路与代码细节

1. 构造数据源

def get_train_data():

"""得到训练数据,这里使用随机数生成训练数据,由此导致最终结果并不好"""

def get_tensor_from_pd(dataframe_series) -> torch.Tensor:

return torch.tensor(data=dataframe_series.values)

import numpy as np

import pandas as pd

from sklearn import preprocessing

# 生成训练数据x并做归一化后,构造成dataframe格式,再转换为tensor格式

df = pd.DataFrame(data=preprocessing.MinMaxScaler().fit_transform(np.random.randint(0, 10, size=(2000, 300))))

y = pd.Series(np.random.randint(0, 2, 2000))

return get_tensor_from_pd(df).float(), get_tensor_from_pd(y).float()

2. 构造AutoEncoder网络结构

class AutoEncoder(nn.Module):

def __init__(self, input_size=300, hidden_layer_size=100):

super().__init__()

self.hidden_layer_size = hidden_layer_size

# 输入与输出的维度相同

self.input_size = input_size

self.output_size = input_size

self.encode_linear = nn.Linear(self.input_size, hidden_layer_size)

self.decode_linear = nn.Linear(hidden_layer_size, self.output_size)

self.relu = nn.ReLU()

self.sigmoid = nn.Sigmoid()

def forward(self, input_x):

# encode

encode_linear = self.encode_linear(input_x)

encode_out = self.relu(encode_linear)

# decode

decode_linear = self.decode_linear(encode_out) # =self.linear(lstm_out[:, -1, :])

predictions = self.sigmoid(decode_linear)

return predictions

3. 训练代码

这一部分比较套路:

# 建模三件套:loss,优化,epochs

model = AutoEncoder() # 模型

loss_function = nn.MSELoss() # loss

optimizer = torch.optim.Adam(model.parameters(), lr=0.001) # 优化器

epochs = 150

# 开始训练

model.train()

for i in range(epochs):

for seq, labels in train_loader:

optimizer.zero_grad()

y_pred = model(seq).squeeze() # 压缩维度:得到输出,并将维度为1的去除

single_loss = loss_function(y_pred, seq)

single_loss.backward()

optimizer.step()

print("Train Step:", i, " loss: ", single_loss)

全部代码

import torch

import torch.nn as nn

import torch.utils.data as Data

def get_train_data():

"""得到训练数据,这里使用随机数生成训练数据,由此导致最终结果并不好"""

def get_tensor_from_pd(dataframe_series) -> torch.Tensor:

return torch.tensor(data=dataframe_series.values)

import numpy as np

import pandas as pd

from sklearn import preprocessing

# 生成训练数据x并做归一化后,构造成dataframe格式,再转换为tensor格式

df = pd.DataFrame(data=preprocessing.MinMaxScaler().fit_transform(np.random.randint(0, 10, size=(2000, 300))))

y = pd.Series(np.random.randint(0, 2, 2000))

return get_tensor_from_pd(df).float(), get_tensor_from_pd(y).float()

class AutoEncoder(nn.Module):

def __init__(self, input_size=300, hidden_layer_size=100):

super().__init__()

self.hidden_layer_size = hidden_layer_size

# 输入与输出的维度相同

self.input_size = input_size

self.output_size = input_size

self.encode_linear = nn.Linear(self.input_size, hidden_layer_size)

self.decode_linear = nn.Linear(hidden_layer_size, self.output_size)

self.relu = nn.ReLU()

self.sigmoid = nn.Sigmoid()

def forward(self, input_x):

# encode

encode_linear = self.encode_linear(input_x)

encode_out = self.relu(encode_linear)

# decode

decode_linear = self.decode_linear(encode_out) # =self.linear(lstm_out[:, -1, :])

predictions = self.sigmoid(decode_linear)

return predictions

if __name__ == '__main__':

# 得到数据

x, y = get_train_data()

train_loader = Data.DataLoader(

dataset=Data.TensorDataset(x, y), # 封装进Data.TensorDataset()类的数据,可以为任意维度

batch_size=20, # 每块的大小

shuffle=True, # 要不要打乱数据 (打乱比较好)

num_workers=2, # 多进程(multiprocess)来读数据

)

# 建模三件套:loss,优化,epochs

model = AutoEncoder() # 模型

loss_function = nn.MSELoss() # loss

optimizer = torch.optim.Adam(model.parameters(), lr=0.001) # 优化器

epochs = 150

# 开始训练

model.train()

for i in range(epochs):

for seq, labels in train_loader:

optimizer.zero_grad()

y_pred = model(seq).squeeze() # 压缩维度:得到输出,并将维度为1的去除

single_loss = loss_function(y_pred, seq)

single_loss.backward()

optimizer.step()

print("Train Step:", i, " loss: ", single_loss)

# 每20次,输出一次前20个的结果,对比一下效果

if i % 20 == 0:

test_data = x[:20]

y_pred = model(test_data).squeeze()

print("TEST: ", test_data)

print("PRED: ", y_pred)

print("LOSS: ", loss_function(y_pred, test_data))

参考文章

- 维基百科AutoEncoder:https://en.wikipedia.org/wiki/Autoencoder

- AutoEncoder: 自动编码器AutoEncode:https://zhuanlan.zhihu.com/p/83019501