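[Deep Learning] Andrew Ng's Deep Learning, Course 2: Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization, Week 2 Optimization Algorithms Exercises

Video link: [Chinese and English subtitles] Andrew Ng's Deep Learning, Course 2: Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization

References:

- [Chinese/English] [Andrew Ng quiz] Course 2 - Improving Deep Neural Networks - Week 2 Quiz

- Andrew Ng deeplearning.ai - Improving Deep Neural Networks - Week 2 Quiz

- [Andrew Ng Deep Learning Quiz] Course 2 - Improving Deep Neural Networks - Week 2 Quiz

Contents

- Questions (translated from Chinese)

- Questions (original English)

- Answers

Questions (translated from Chinese)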

1. Which notation would you use to denote the 3rd layer's activations when the input is the 7th example from the 8th mini-batch?

A. $a^{[8]\{7\}(3)}$

B. $a^{[3]\{7\}(8)}$

C. $a^{[3]\{8\}(7)}$

D. $a^{[8]\{3\}(7)}$

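2. Which of these statements about mini-batch gradient descent is correct?

A. You can implement mini-batch gradient descent without an explicit loop over the different mini-batches, so that the algorithm processes all the data at the same time (vectorization).

B. Training one epoch (one pass through the training set) with mini-batch gradient descent is faster than training one epoch with batch gradient descent.

C. One iteration of mini-batch gradient descent (computing on a single mini-batch) is faster than one iteration of batch gradient descent.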

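3. Why is the best mini-batch size usually neither 1 nor m, but something in between?

A. If the mini-batch size is 1, you lose the benefits of vectorization across the examples in the mini-batch.

B. If the mini-batch size is m, you get batch gradient descent, which has to process the whole training set before making progress.

C. If the mini-batch size is 1, it is equivalent to stochastic gradient descent, which trains on only one example at a time, so you lose the benefits of vectorization across the examples in the mini-batch.

D. If the mini-batch size is m, it is equivalent to batch gradient descent, where each iteration takes a long time and has to process the whole training set.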

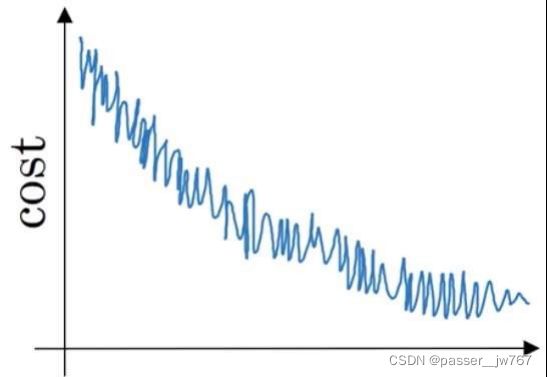

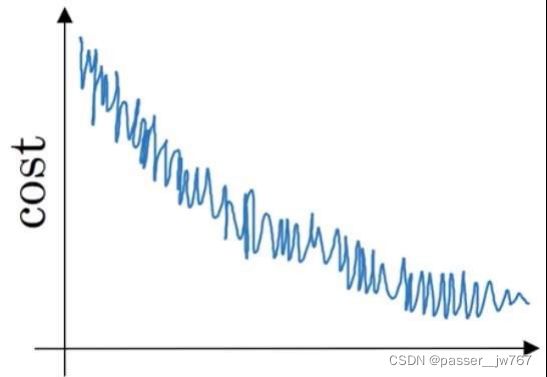

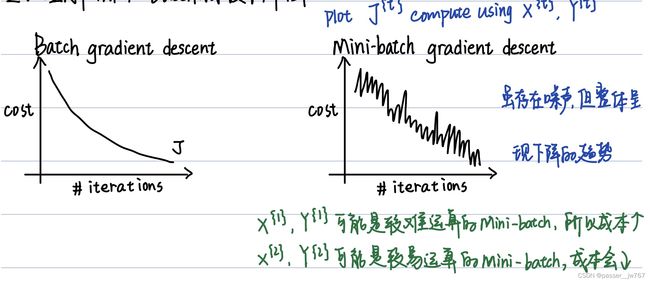

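4. Suppose your learning algorithm's cost J, plotted as a function of the number of iterations, looks as shown in the figure. Which of the following statements do you agree with?

A. If you are using mini-batch gradient descent, this looks acceptable. But if you are using batch gradient descent, something is wrong.

B. If you are using mini-batch gradient descent, something is wrong. But if you are using batch gradient descent, this looks acceptable.

C. Whether you are using batch gradient descent or mini-batch gradient descent, this looks acceptable.

D. Whether you are using batch gradient descent or mini-batch gradient descent, something is wrong.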

5. Suppose the temperature in Casablanca is the same on the first two days of January:

Jan 1st: $\theta_1 = 10^\circ\mathrm{C}$; Jan 2nd: $\theta_2 = 10^\circ\mathrm{C}$

(The lecture used Fahrenheit; Celsius is used here because the metric system is more widely used around the world.)

Say you use an exponentially weighted average with $\beta = 0.5$ to track the temperature: $v_0 = 0$, $v_t = \beta v_{t-1} + (1 - \beta)\theta_t$. If $v_2$ is the value for day 2 without bias correction and $v^{corrected}_2$ is the value with bias correction, what are these values? (You might be able to work this out without a calculator, and in fact you do not really need one. Remember what bias correction does.)

A. $v_2 = 7.5,\ v^{corrected}_2 = 10$

B. $v_2 = 7.5,\ v^{corrected}_2 = 7.5$

C. $v_2 = 10,\ v^{corrected}_2 = 7.5$

D. $v_2 = 10,\ v^{corrected}_2 = 10$

6. Which of these is NOT a good learning rate decay scheme? Here, t is the epoch number.

A. $\frac{1}{1+2*t}\alpha_0$

B. $\frac{1}{\sqrt{t}}\alpha_0$

C. $e^t\alpha_0$

D. $0.95^t\alpha_0$

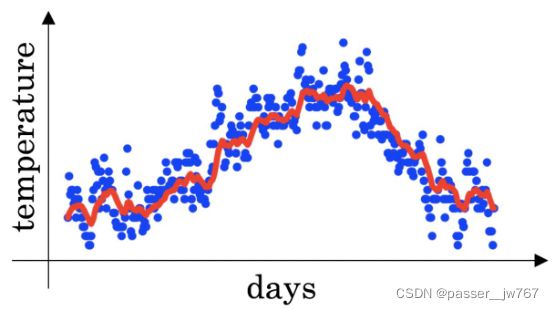

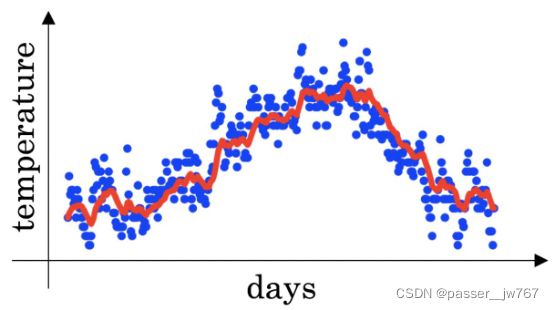

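7. You use an exponentially weighted average on the London temperature dataset, tracking the temperature with $v_t = \beta v_{t-1} + (1 - \beta)\theta_t$. The red line in the figure was computed using $\beta = 0.9$. What happens to the red curve as you vary $\beta$? (Check the two that apply.)

A. Decreasing $\beta$ shifts the red line slightly to the right.

B. Increasing $\beta$ shifts the red line slightly to the right.

C. Decreasing $\beta$ creates more oscillation within the red line.

D. Increasing $\beta$ creates more oscillation within the red line.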

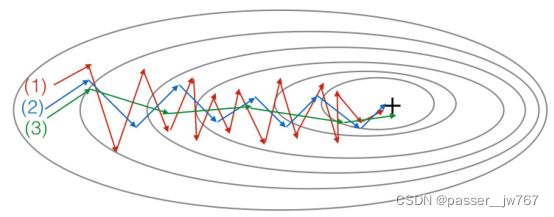

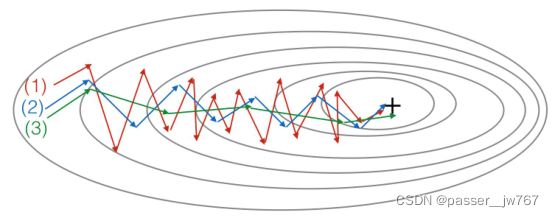

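8. Consider this figure:

These plots were generated with gradient descent and with gradient descent with momentum, using $\beta = 0.5$ and $\beta = 0.9$. Which curve corresponds to which algorithm?

A. (1) is gradient descent with momentum (small $\beta$). (2) is gradient descent. (3) is gradient descent with momentum (large $\beta$).

B. (1) is gradient descent. (2) is gradient descent with momentum (small $\beta$). (3) is gradient descent with momentum (large $\beta$).

C. (1) is gradient descent with momentum (small $\beta$). (2) is gradient descent with momentum (small $\beta$). (3) is gradient descent.

D. (1) is gradient descent. (2) is gradient descent with momentum (large $\beta$). (3) is gradient descent with momentum (small $\beta$).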

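9. Suppose batch gradient descent in a deep network is taking excessively long to find parameter values that achieve a small value of the cost function $J(W^{[1]}, b^{[1]}, \dots, W^{[L]}, b^{[L]})$. Which of the following techniques could help find parameter values that attain a small value of $J$? (Check all that apply.)

A. Try using Adam

B. Try better random initialization for the weights

C. Try mini-batch gradient descent

D. Try initializing all the weights to zero

E. Try tuning the learning rate $\alpha$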

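10. Which of the following statements about Adam is false?

A. The learning rate hyperparameter $\alpha$ in Adam usually needs to be tuned.

B. Adam combines the advantages of RMSProp and momentum.

C. Adam should be used with batch gradient computations, not with mini-batches.

D. In Adam, we usually use the "default" values for the hyperparameters $\beta_1$, $\beta_2$ and $\varepsilon$ ($\beta_1 = 0.9$, $\beta_2 = 0.999$, $\varepsilon = 10^{-8}$).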

Questions (original English)

1. Which notation would you use to denote the 3rd layer's activations when the input is the 7th example from the 8th mini-batch?

A. $a^{[8]\{7\}(3)}$

B. $a^{[3]\{7\}(8)}$

C. $a^{[3]\{8\}(7)}$

D. $a^{[8]\{3\}(7)}$

2. Which of these statements about mini-batch gradient descent do you agree with?

A. You should implement mini-batch gradient descent without an explicit for-loop over different mini-batches, so that the algorithm processes all mini-batches at the same time (vectorization).

B. Training one epoch (one pass through the training set) using mini-batch gradient descent is faster than training one epoch using batch gradient descent.

C. One iteration of mini-batch gradient descent (computing on a single mini-batch) is faster than one iteration of batch gradient descent.

3. Why is the best mini-batch size usually not 1 and not m, but instead something in between? (Multiple choice) (The options below were machine-translated, so the wording may differ from the original quiz.)

A. If the mini-batch size is 1, you lose the benefits of vectorization across the examples in the mini-batch.

B. If the mini-batch size is m, you get batch gradient descent, which has to process the whole training set before making progress.

C. If the mini-batch size is 1, it is equivalent to stochastic gradient descent, which trains on only one example at a time, so you lose the benefits of vectorization across the examples in the mini-batch.

D. If the mini-batch size is m, it is equivalent to batch gradient descent, where each iteration takes a long time and has to process the whole training set.
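The two extremes in question 3 can be made concrete with a small sketch. It only counts how many mini-batches (and therefore parameter updates) one epoch contains for different batch sizes. The helper name `make_mini_batches`, the toy size `m = 10`, and the integer stand-ins for training examples are all assumptions for illustration, not code from the course.

```python
import random

def make_mini_batches(examples, batch_size, seed=0):
    """Shuffle the examples and split them into consecutive mini-batches."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i:i + batch_size] for i in range(0, len(shuffled), batch_size)]

m = 10
examples = list(range(m))  # hypothetical stand-ins for (x, y) training pairs

sgd_batches = make_mini_batches(examples, batch_size=1)   # stochastic gradient descent
mini_batches = make_mini_batches(examples, batch_size=4)  # something in between
full_batch = make_mini_batches(examples, batch_size=m)    # batch gradient descent

# One parameter update per mini-batch, so updates per epoch = number of batches.
# The loop over mini-batches stays explicit; only the work inside a batch is vectorized.
print(len(sgd_batches), len(mini_batches), len(full_batch))  # 10 3 1
```

With a batch size of 1 every update sees a single example, so there is nothing to vectorize over; with a batch size of m there is only one update per pass over the data.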

4. Suppose your learning algorithm's cost J, plotted as a function of the number of iterations, looks as shown in the figure. Which statement is true?

A. If you're using mini-batch gradient descent, this looks acceptable. But if you're using batch gradient descent, something is wrong.

B. If you're using mini-batch gradient descent, something is wrong. But if you're using batch gradient descent, this looks acceptable.

C. Whether you're using batch gradient descent or mini-batch gradient descent, this looks acceptable.

D. Whether you're using batch gradient descent or mini-batch gradient descent, something is wrong.

5. Suppose the temperature in Casablanca is the same on the first two days of January:

Jan 1st: $\theta_1 = 10^\circ\mathrm{C}$

Jan 2nd: $\theta_2 = 10^\circ\mathrm{C}$

Say you use an exponentially weighted average with $\beta = 0.5$ to track the temperature: $v_0 = 0$, $v_t = \beta v_{t-1} + (1 - \beta)\theta_t$. If $v_2$ is the value computed after day 2 without bias correction, and $v^{corrected}_2$ is the value you compute with bias correction, what are these values?

A. $v_2 = 7.5,\ v^{corrected}_2 = 10$

B. $v_2 = 7.5,\ v^{corrected}_2 = 7.5$

C. $v_2 = 10,\ v^{corrected}_2 = 7.5$

D. $v_2 = 10,\ v^{corrected}_2 = 10$

6. Which of these is NOT a good learning rate decay scheme? Here, t is the epoch number.

A. $\frac{1}{1+2*t}\alpha_0$

B. $\frac{1}{\sqrt{t}}\alpha_0$

C. $e^t\alpha_0$

D. $0.95^t\alpha_0$
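One way to reason about question 6 is to evaluate each candidate over a few epochs and check whether the learning rate actually shrinks. The sketch below does that for the four expressions in the options; the initial rate `alpha0 = 0.1`, the epoch range 1 to 10, and the helper `is_decaying` are assumptions chosen only for illustration.

```python
import math

alpha0 = 0.1  # hypothetical initial learning rate

# The four candidate schedules from the options, as functions of the epoch number t.
schedules = {
    "1/(1+2t)": lambda t: alpha0 / (1 + 2 * t),
    "1/sqrt(t)": lambda t: alpha0 / math.sqrt(t),
    "e^t": lambda t: alpha0 * math.exp(t),
    "0.95^t": lambda t: alpha0 * 0.95 ** t,
}

def is_decaying(schedule, epochs=range(1, 11)):
    """Return True if the learning rate strictly decreases from epoch to epoch."""
    values = [schedule(t) for t in epochs]
    return all(later < earlier for earlier, later in zip(values, values[1:]))

for name, schedule in schedules.items():
    print(name, is_decaying(schedule))
```

A usable decay scheme should report a decreasing sequence; a schedule that grows with t makes the steps larger as training proceeds.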

7. You use an exponentially weighted average on the London temperature dataset. You use the following to track the temperature: $v_t = \beta v_{t-1} + (1 - \beta)\theta_t$. The red line below was computed using $\beta = 0.9$. What would happen to your red curve as you vary $\beta$? (Check the two that apply)

A. Decreasing $\beta$ will shift the red line slightly to the right.

B. Increasing $\beta$ will shift the red line slightly to the right.

C. Decreasing $\beta$ will create more oscillation within the red line.

D. Increasing $\beta$ will create more oscillation within the red line.
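The effect of $\beta$ in question 7 can be explored numerically. The sketch below applies the same update $v_t = \beta v_{t-1} + (1 - \beta)\theta_t$ to a made-up temperature series and measures how jagged the smoothed curve is. The synthetic data (a seasonal sine wave plus Gaussian noise), the `roughness` measure, and the chosen $\beta$ values are assumptions; the London dataset itself is not reproduced here.

```python
import math
import random

def exp_weighted_average(thetas, beta):
    """Exponentially weighted average with v_0 = 0 and no bias correction."""
    v, out = 0.0, []
    for theta in thetas:
        v = beta * v + (1 - beta) * theta
        out.append(v)
    return out

def roughness(series):
    """Mean absolute day-to-day change: larger means a more oscillating curve."""
    return sum(abs(b - a) for a, b in zip(series, series[1:])) / (len(series) - 1)

rng = random.Random(0)
# Synthetic "temperatures": a slow seasonal wave plus day-to-day noise (made-up data).
temps = [10 + 8 * math.sin(2 * math.pi * day / 365) + rng.gauss(0, 3)
         for day in range(365)]

for beta in (0.5, 0.9, 0.98):
    smoothed = exp_weighted_average(temps, beta)
    print(beta, round(roughness(smoothed), 3))
```

Since $v_t - v_{t-1} = (1 - \beta)(\theta_t - v_{t-1})$, a larger $\beta$ lets each new reading move the curve less, which is also why the curve reacts later to real changes in the data.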

8.Consider this figure:

These plots were generated with gradient descent; with gradient descent with momentum (β = 0.5) and gradient descent with momentum (β = 0.9). Which curve corresponds to which algorithm?

A. (1) is gradient descent with momentum (small $\beta$). (2) is gradient descent. (3) is gradient descent with momentum (large $\beta$).

B. (1) is gradient descent. (2) is gradient descent with momentum (small $\beta$). (3) is gradient descent with momentum (large $\beta$).

C. (1) is gradient descent with momentum (small $\beta$). (2) is gradient descent with momentum (small $\beta$). (3) is gradient descent.

D. (1) is gradient descent. (2) is gradient descent with momentum (large $\beta$). (3) is gradient descent with momentum (small $\beta$).

9. Suppose batch gradient descent in a deep network is taking excessively long to find a value of the parameters that achieves a small value for the cost function $J(W^{[1]}, b^{[1]}, \dots, W^{[L]}, b^{[L]})$. Which of the following techniques could help find parameter values that attain a small value for $J$? (Check all that apply)

A. Try using Adam

B. Try better random initialization for the weights

C. Try mini-batch gradient descent

D. Try initializing all the weights to zero

E. Try tuning the learning rate $\alpha$

10. Which of the following statements about Adam is false?

A. The learning rate hyperparameter $\alpha$ in Adam usually needs to be tuned.

B. Adam combines the advantages of RMSProp and momentum.

C. Adam should be used with batch gradient computations, not with mini-batches.

D. In Adam, we usually use the "default" values for the hyperparameters $\beta_1$, $\beta_2$ and $\varepsilon$ ($\beta_1 = 0.9$, $\beta_2 = 0.999$, $\varepsilon = 10^{-8}$).
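For reference alongside question 10, here is a minimal sketch of the Adam update for a single scalar parameter, using the default values quoted in option D and leaving only $\alpha$ to be chosen. The function name `adam_minimize`, the toy objective $(w - 3)^2$, the value `alpha=0.1`, and the step count are assumptions for illustration. The update itself only needs a gradient, and nothing in it depends on whether that gradient came from the full training set or from one mini-batch.

```python
import math

def adam_minimize(grad, w, alpha=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Run Adam on one scalar parameter; grad(w) returns the gradient at w."""
    v = 0.0  # momentum-style average of gradients
    s = 0.0  # RMSProp-style average of squared gradients
    for t in range(1, steps + 1):
        g = grad(w)  # in practice this could be a mini-batch gradient
        v = beta1 * v + (1 - beta1) * g
        s = beta2 * s + (1 - beta2) * g * g
        v_hat = v / (1 - beta1 ** t)  # bias correction for both averages
        s_hat = s / (1 - beta2 ** t)
        w -= alpha * v_hat / (math.sqrt(s_hat) + eps)
    return w

# Toy objective J(w) = (w - 3)^2 with gradient 2 (w - 3); the minimum is at w = 3.
w_final = adam_minimize(lambda w: 2 * (w - 3), w=0.0)
print(round(w_final, 3))
```

The momentum term and the RMSProp term appear as the two running averages `v` and `s`, which is the sense in which Adam combines the two methods.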

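Answers

- C. The superscript $[i]\{j\}(k)$ denotes the $i$-th layer, the $j$-th mini-batch, and the $k$-th example, and the indices presumably have to be written in that order.

- C. Option A is wrong because vectorization does not process all the mini-batches at once; a loop over the mini-batches is still needed. For option B, one epoch passes over the same m examples in either case, so mini-batch gradient descent does not finish an epoch faster (with mini-batch size = m, the two take exactly the same time). The explanation for option C is quoted from reference 2:

  "With ordinary gradient descent, one epoch allows only a single gradient descent step, whereas with mini-batch gradient descent one epoch allows as many steps as there are mini-batches. Because each step works on a smaller set of examples, one iteration of mini-batch gradient descent is also faster than one iteration of batch gradient descent."

- A, B, C. The references I compared give different answers to this question, so this is my own choice. As for option D, I think "each iteration takes a long time" is wrong: when the mini-batch size is m, the method becomes batch gradient descent, so "a long iteration time" stops being meaningful because there is only one batch and nothing to iterate over. That said, this argument feels a bit forced to me, and I would welcome a better one.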

- A. With batch gradient descent the cost curve is fairly smooth, whereas with mini-batch gradient descent it is noisy. For example, $X^{\{1\}}, Y^{\{1\}}$ may be a harder mini-batch, so the cost goes up, while $X^{\{2\}}, Y^{\{2\}}$ may be an easier one, so the cost goes down. See the figure.

- A. Without bias correction, $v_1 = 0.5 \times 0 + (1 - 0.5) \times 10 = 5$ and $v_2 = 0.5 \times 5 + (1 - 0.5) \times 10 = 7.5$. With bias correction we use $\frac{v_t}{1 - \beta^t}$, so we only need to divide the value already computed by $(1 - \beta^2)$, that is, by $(1 - 0.25) = 0.75$, which gives $7.5 \div 0.75 = 10$. Hence $v_2 = 7.5,\ v^{corrected}_2 = 10$.

- C. This was covered in the ninth video of this week. Option C, $e^t\alpha_0$, grows with $t$ instead of decaying. The learning rate decay formulas from the lecture include:

  - $\frac{1}{1 + \text{decay\_rate} * \text{epoch\_num}} * \alpha_0$

  - $0.95^{\text{epoch\_num}} * \alpha_0$

  - $\frac{k}{\sqrt{\text{epoch\_num}}} * \alpha_0$

  - $\frac{k}{\sqrt{t}} * \alpha_0$

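- B, C. This is from the third lecture of this week; go back to it if you do not remember. A larger $\beta$ averages over more days, so the curve is smoother but lags behind the data (shifts right); a smaller $\beta$ follows the data more closely and oscillates more.

- B. The rather erratic red line (1) is plain gradient descent. For (2) and (3), the size of $\beta$ can be judged from the step size: the larger $\beta$ is, the larger the steps.

- A, B, C, E. Initializing all the weights to 0 was already shown in last week's lectures to be the least reliable approach.

- C. Adam can be used with batch gradient descent as well as with mini-batch gradient descent.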
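The arithmetic in the answer to question 5 can be checked with a few lines of code. This is a minimal sketch of the exponentially weighted average with and without bias correction, using the values from the question ($\beta = 0.5$, two days at 10 degrees); the function name `ewa` is an assumption for illustration.

```python
def ewa(thetas, beta):
    """Return (uncorrected, bias-corrected) exponentially weighted averages."""
    v = 0.0  # v_0 = 0
    raw, corrected = [], []
    for t, theta in enumerate(thetas, start=1):
        v = beta * v + (1 - beta) * theta
        raw.append(v)
        corrected.append(v / (1 - beta ** t))  # divide by (1 - beta^t)
    return raw, corrected

raw, corrected = ewa([10.0, 10.0], beta=0.5)
print(raw[-1], corrected[-1])  # 7.5 10.0
```

Because both readings equal 10, the corrected estimate recovers exactly 10, while the uncorrected one is still pulled toward the initial value $v_0 = 0$.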