main.py

# #################################################################

# Deep Reinforcement Learning for Online Offloading in Wireless Powered Mobile-Edge Computing Networks

#

# This file contains the main code of DROO. It loads the training samples saved in ./data/data_#.mat, splits the samples into two parts (training and testing data constitutes 80% and 20%), trains the DNN with training and validation samples, and finally tests the DNN with test data.

#

# Input: ./data/data_#.mat

# Data samples are generated according to the CD method presented in [2]. There are 30,000 samples saved in each ./data/data_#.mat, where # is the user number. Each data sample includes

# -----------------------------------------------------------------

# | wireless channel gain | input_h |

# -----------------------------------------------------------------

# | computing mode selection | output_mode |

# -----------------------------------------------------------------

# | energy broadcasting parameter | output_a |

# -----------------------------------------------------------------

# | transmit time of wireless device | output_tau |

# -----------------------------------------------------------------

# | weighted sum computation rate | output_obj |

# -----------------------------------------------------------------

#

#

# References:

# [1] 1. Liang Huang, Suzhi Bi, and Ying-Jun Angela Zhang, "Deep Reinforcement Learning for Online Offloading in Wireless Powered Mobile-Edge Computing Networks," in IEEE Transactions on Mobile Computing, early access, 2019, DOI:10.1109/TMC.2019.2928811.

# [2] S. Bi and Y. J. Zhang, “Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading,” IEEE Trans. Wireless Commun., vol. 17, no. 6, pp. 4177-4190, Jun. 2018.

#

# version 1.0 -- July 2018. Written by Liang Huang (lianghuang AT zjut.edu.cn)

# ################################################################

import scipy.io as sio # import scipy.io for .mat file I/

import numpy as np # import numpy

# for tensorflow2

from memoryTF2 import MemoryDNN

from optimization import bisection

import time

def plot_rate( rate_his, rolling_intv = 50):

import matplotlib.pyplot as plt

import pandas as pd

import matplotlib as mpl

rate_array = np.asarray(rate_his)

df = pd.DataFrame(rate_his)

mpl.style.use('seaborn')

fig, ax = plt.subplots(figsize=(15,8))

# rolling_intv = 20

plt.plot(np.arange(len(rate_array))+1, df.rolling(rolling_intv, min_periods=1).mean(), 'b')

plt.fill_between(np.arange(len(rate_array))+1, df.rolling(rolling_intv, min_periods=1).min()[0], df.rolling(rolling_intv, min_periods=1).max()[0], color = 'b', alpha = 0.2)

plt.ylabel('Normalized Computation Rate')

plt.xlabel('Time Frames')

plt.show()

def save_to_txt(rate_his, file_path):

with open(file_path, 'w') as f:

for rate in rate_his:

f.write("%s \n" % rate)

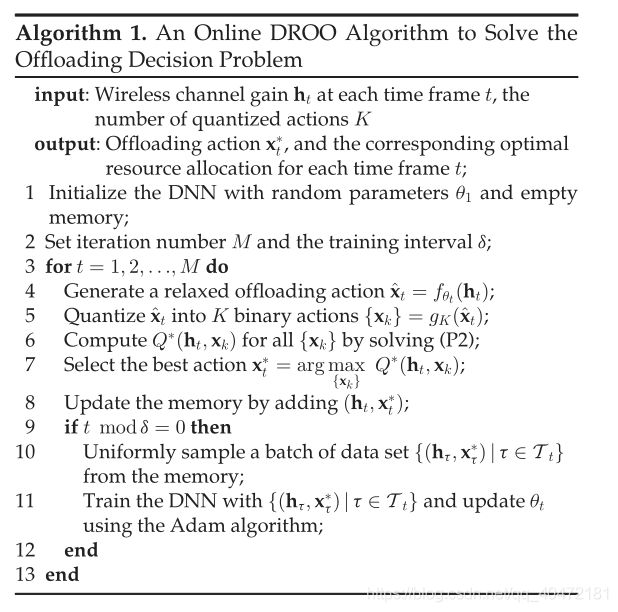

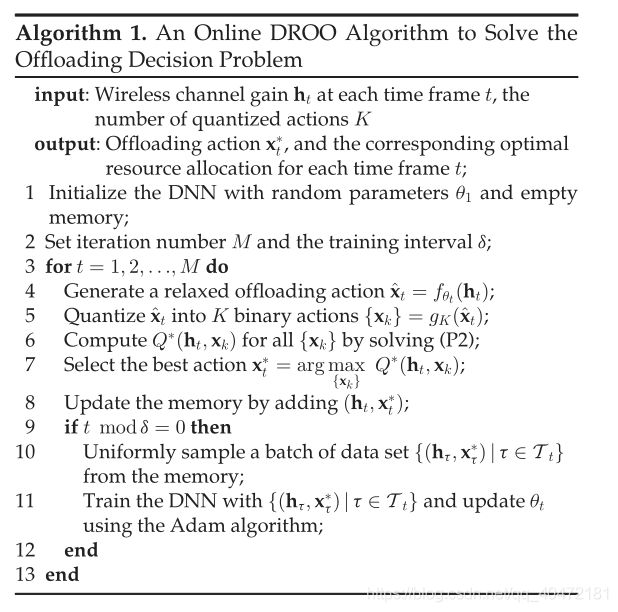

if __name__ == "__main__":

'''

This algorithm generates K modes from DNN, and chooses with largest

reward. The mode with largest reward is stored in the memory, which is

further used to train the DNN.

Adaptive K is implemented. K = max(K, K_his[-memory_size])

'''

N = 10 # number of users

n = 30000 # number of time frames

K = N # initialize K = N

decoder_mode = 'OP' # the quantization mode could be 'OP' (Order-preserving) or 'KNN'

Memory = 1024 # capacity of memory structure

Delta = 32 # Update interval for adaptive K

print('#user = %d, #channel=%d, K=%d, decoder = %s, Memory = %d, Delta = %d'%(N,n,K,decoder_mode, Memory, Delta))

# Load data

channel = sio.loadmat('./data/data_%d' %N)['input_h']

rate = sio.loadmat('./data/data_%d' %N)['output_obj'] # this rate is only used to plot figures; never used to train DROO.

# increase h to close to 1 for better training; it is a trick widely adopted in deep learning

channel = channel * 1000000

# generate the train and test data sample index

# data are splitted as 80:20

# training data are randomly sampled with duplication if n > total data size

split_idx = int(.8* len(channel))

num_test = min(len(channel) - split_idx, n - int(.8* n)) # training data size

mem = MemoryDNN(net = [N, 120, 80, N],

learning_rate = 0.01,

training_interval=10,

batch_size=128,

memory_size=Memory

)

start_time=time.time()

rate_his = []

rate_his_ratio = []

mode_his = []

k_idx_his = []

K_his = []

for i in range(n):

if i % (n//10) == 0:

print("%0.1f"%(i/n))

if i> 0 and i % Delta == 0:

# index counts from 0

if Delta > 1:

max_k = max(k_idx_his[-Delta:-1]) +1;

else:

max_k = k_idx_his[-1] +1;

K = min(max_k +1, N)

if i < n - num_test:

# training

i_idx = i % split_idx

else:

# test

i_idx = i - n + num_test + split_idx

h = channel[i_idx,:]

# the action selection must be either 'OP' or 'KNN'

m_list = mem.decode(h, K, decoder_mode)

r_list = []

for m in m_list:

r_list.append(bisection(h/1000000, m)[0])

# encode the mode with largest reward

mem.encode(h, m_list[np.argmax(r_list)])

# the main code for DROO training ends here

# the following codes store some interested metrics for illustrations

# memorize the largest reward

rate_his.append(np.max(r_list))

rate_his_ratio.append(rate_his[-1] / rate[i_idx][0])

# record the index of largest reward

k_idx_his.append(np.argmax(r_list))

# record K in case of adaptive K

K_his.append(K)

mode_his.append(m_list[np.argmax(r_list)])

total_time=time.time()-start_time

mem.plot_cost()

plot_rate(rate_his_ratio)

print("Averaged normalized computation rate:", sum(rate_his_ratio[-num_test: -1])/num_test)

print('Total time consumed:%s'%total_time)

print('Average time per channel:%s'%(total_time/n))

# save data into txt

save_to_txt(k_idx_his, "k_idx_his.txt")

save_to_txt(K_his, "K_his.txt")

save_to_txt(mem.cost_his, "cost_his.txt")

save_to_txt(rate_his_ratio, "rate_his_ratio.txt")

save_to_txt(mode_his, "mode_his.txt")

memory.py

# #################################################################

# This file contains the main DROO operations, including building DNN,

# Storing data sample, Training DNN, and generating quantized binary offloading decisions.

# version 1.0 -- January 2020. Written based on Tensorflow 2 by Weijian Pan and

# Liang Huang (lianghuang AT zjut.edu.cn)

# #################################################################

from __future__ import print_function

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

import numpy as np

print(tf.__version__)

print(tf.keras.__version__)

# DNN network for memory

class MemoryDNN:

def __init__(

self,

net,

learning_rate = 0.01,

training_interval=10,

batch_size=100,

memory_size=1000,

output_graph=False

):

self.net = net # the size of the DNN

self.training_interval = training_interval # learn every #training_interval

self.lr = learning_rate

self.batch_size = batch_size

self.memory_size = memory_size

# store all binary actions

self.enumerate_actions = []

# stored # memory entry

self.memory_counter = 1

# store training cost

self.cost_his = []

# initialize zero memory [h, m]

self.memory = np.zeros((self.memory_size, self.net[0] + self.net[-1]))

# construct memory network

self._build_net()

def _build_net(self):

self.model = keras.Sequential([

layers.Dense(self.net[1], activation='relu'), # the first hidden layer

layers.Dense(self.net[2], activation='relu'), # the second hidden layer

layers.Dense(self.net[-1], activation='sigmoid') # the output layer

])

self.model.compile(optimizer=keras.optimizers.Adam(lr=self.lr), loss=tf.losses.binary_crossentropy, metrics=['accuracy'])

def remember(self, h, m):

# replace the old memory with new memory

idx = self.memory_counter % self.memory_size

self.memory[idx, :] = np.hstack((h, m))

self.memory_counter += 1

def encode(self, h, m):

# encoding the entry

self.remember(h, m)

# train the DNN every 10 step

# if self.memory_counter> self.memory_size / 2 and self.memory_counter % self.training_interval == 0:

if self.memory_counter % self.training_interval == 0:

self.learn()

def learn(self):

# sample batch memory from all memory

if self.memory_counter > self.memory_size:

sample_index = np.random.choice(self.memory_size, size=self.batch_size)

else:

sample_index = np.random.choice(self.memory_counter, size=self.batch_size)

batch_memory = self.memory[sample_index, :]

h_train = batch_memory[:, 0: self.net[0]]

m_train = batch_memory[:, self.net[0]:]

# print(h_train) # (128, 10)

# print(m_train) # (128, 10)

# train the DNN

hist = self.model.fit(h_train, m_train, verbose=0)

self.cost = hist.history['loss'][0]

assert(self.cost > 0)

self.cost_his.append(self.cost)

def decode(self, h, k = 1, mode = 'OP'):

# to have batch dimension when feed into tf placeholder

h = h[np.newaxis, :]

m_pred = self.model.predict(h)

if mode == 'OP':

return self.knm(m_pred[0], k)

elif mode == 'KNN':

return self.knn(m_pred[0], k)

else:

print("The action selection must be 'OP' or 'KNN'")

def knm(self, m, k = 1):

# return k order-preserving binary actions

m_list = []

# generate the first binary offloading decision with respect to equation (8)

m_list.append(1*(m>0.5))

if k > 1:

# generate the remaining K-1 binary offloading decisions with respect to equation (9)

m_abs = abs(m-0.5)

idx_list = np.argsort(m_abs)[:k-1]

for i in range(k-1):

if m[idx_list[i]] >0.5:

# set the \hat{x}_{t,(k-1)} to 0

m_list.append(1*(m - m[idx_list[i]] > 0))

else:

# set the \hat{x}_{t,(k-1)} to 1

m_list.append(1*(m - m[idx_list[i]] >= 0))

return m_list

def knn(self, m, k = 1):

# list all 2^N binary offloading actions

if len(self.enumerate_actions) == 0:

import itertools

self.enumerate_actions = np.array(list(map(list, itertools.product([0, 1], repeat=self.net[0]))))

# the 2-norm

sqd = ((self.enumerate_actions - m)**2).sum(1)

idx = np.argsort(sqd)

return self.enumerate_actions[idx[:k]]

def plot_cost(self):

import matplotlib.pyplot as plt

plt.plot(np.arange(len(self.cost_his))*self.training_interval, self.cost_his)

plt.ylabel('Training Loss')

plt.xlabel('Time Frames')

plt.show()

optimization.py

# -*- coding: utf-8 -*-

"""

Created on Tue Jan 9 10:45:26 2018

@author: Administrator

"""

import numpy as np

from scipy import optimize

from scipy.special import lambertw

import scipy.io as sio # import scipy.io for .mat file I/

import time

def plot_gain( gain_his):

import matplotlib.pyplot as plt

import pandas as pd

import matplotlib as mpl

gain_array = np.asarray(gain_his)

df = pd.DataFrame(gain_his)

mpl.style.use('seaborn')

fig, ax = plt.subplots(figsize=(15,8))

rolling_intv = 20

plt.plot(np.arange(len(gain_array))+1, df.rolling(rolling_intv, min_periods=1).mean(), 'b')

plt.fill_between(np.arange(len(gain_array))+1, df.rolling(rolling_intv, min_periods=1).min()[0], df.rolling(rolling_intv, min_periods=1).max()[0], color = 'b', alpha = 0.2)

plt.ylabel('Gain ratio')

plt.xlabel('learning steps')

plt.show()

def bisection(h, M, weights=[]):

# the bisection algorithm proposed by Suzhi BI

# average time to find the optimal: 0.012535839796066284 s

# parameters and equations

o=100

p=3

u=0.7

eta1=((u*p)**(1.0/3))/o

ki=10**-26

eta2=u*p/10**-10

B=2*10**6

Vu=1.1

epsilon=B/(Vu*np.log(2))

x = [] # a =x[0], and tau_j = a[1:]

M0=np.where(M==0)[0]

M1=np.where(M==1)[0]

hi=np.array([h[i] for i in M0])

hj=np.array([h[i] for i in M1])

if len(weights) == 0:

# default weights [1, 1.5, 1, 1.5, 1, 1.5, ...]

weights = [1.5 if i%2==1 else 1 for i in range(len(M))]

wi=np.array([weights[M0[i]] for i in range(len(M0))])

wj=np.array([weights[M1[i]] for i in range(len(M1))])

def sum_rate(x):

sum1=sum(wi*eta1*(hi/ki)**(1.0/3)*x[0]**(1.0/3))

sum2=0

for i in range(len(M1)):

sum2+=wj[i]*epsilon*x[i+1]*np.log(1+eta2*hj[i]**2*x[0]/x[i+1])

return sum1+sum2

def phi(v, j):

return 1/(-1-1/(lambertw(-1/(np.exp( 1 + v/wj[j]/epsilon))).real))

def p1(v):

p1 = 0

for j in range(len(M1)):

p1 += hj[j]**2 * phi(v, j)

return 1/(1 + p1 * eta2)

def Q(v):

sum1 = sum(wi*eta1*(hi/ki)**(1.0/3))*p1(v)**(-2/3)/3

sum2 = 0

for j in range(len(M1)):

sum2 += wj[j]*hj[j]**2/(1 + 1/phi(v,j))

return sum1 + sum2*epsilon*eta2 - v

def tau(v, j):

return eta2*hj[j]**2*p1(v)*phi(v,j)

# bisection starts here

delta = 0.005

UB = 999999999

LB = 0

while UB - LB > delta:

v = (float(UB) + LB)/2

if Q(v) > 0:

LB = v

else:

UB = v

x.append(p1(v))

for j in range(len(M1)):

x.append(tau(v, j))

return sum_rate(x), x[0], x[1:]

def cd_method(h):

N = len(h)

M0 = np.random.randint(2,size = N)

gain0,a,Tj= bisection(h,M0)

g_list = []

M_list = []

while True:

for j in range(0,N):

M = np.copy(M0)

M[j] = (M[j]+1)%2

gain,a,Tj= bisection(h,M)

g_list.append(gain)

M_list.append(M)

g_max = max(g_list)

if g_max > gain0:

gain0 = g_max

M0 = M_list[g_list.index(g_max)]

else:

break

return gain0, M0

if __name__ == "__main__":

h=np.array([6.06020304235508*10**-6,1.10331933767028*10**-5,1.00213540309998*10**-7,1.21610610942759*10**-6,1.96138838395145*10**-6,1.71456339592966*10**-6,5.24563569673585*10**-6,5.89530717142197*10**-7,4.07769429231962*10**-6,2.88333185798682*10**-6])

M=np.array([1,0,0,0,1,0,0,0,0,0])

# h=np.array([1.00213540309998*10**-7,1.10331933767028*10**-5,6.06020304235508*10**-6,1.21610610942759*10**-6,1.96138838395145*10**-6,1.71456339592966*10**-6,5.24563569673585*10**-6,5.89530717142197*10**-7,4.07769429231962*10**-6,2.88333185798682*10**-6])

# M=np.array([0,0,1,0,1,0,0,0,0,0])

# h = np.array([4.6368924987170947*10**-7, 1.3479411763648968*10**-7, 7.174945246007612*10**-6, 2.5590719803595445*10**-7, 3.3189928740379023*10**-6, 1.2109071327755575*10**-5, 2.394278475886022*10**-6, 2.179121774067472*10**-6, 5.5213902658478367*10**-8, 2.168778154948169*10**-7, 2.053227965874453*10**-6, 7.002952297466865*10**-8, 7.594077851181444*10**-8, 7.904048961975136*10**-7, 8.867218892023474*10**-7, 5.886007653360979*10**-6, 2.3470565740563855*10**-6, 1.387049627074303*10**-7, 3.359475870531776*10**-7, 2.633733784949562*10**-7, 2.189895264149453*10**-6, 1.129177795302099*10**-5, 1.1760290137191366*10**-6, 1.6588656719735275*10**-7, 1.383637788476638*10**-6, 1.4485928387351664*10**-6, 1.4262265958416598*10**-6, 1.1779725004265418*10**-6, 7.738218993031842*10**-7, 4.763534225174186*10**-6])

# M =np.array( [0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1,])

# time the average speed of bisection algorithm

# repeat = 1

# M =np.random.randint(2, size=(repeat,len(h)))

# start_time=time.time()

# for i in range(repeat):

# gain,a,Tj= bisection(h,M[i,:])

# total_time=time.time()-start_time

# print('time_cost:%s'%(total_time/repeat))

gain,a,Tj= bisection(h,M)

print('y:%s'%gain)

print('a:%s'%a)

print('Tj:%s'%Tj)

# test CD method. Given h, generate the max mode

gain0, M0 = cd_method(h)

print('max y:%s'%gain0)

print(M0)

# test all data

K = [10, 20, 30] # number of users

N = 1000 # number of channel

for k in K:

# Load data

channel = sio.loadmat('./data/data_%d' %int(k))['input_h']

gain = sio.loadmat('./data/data_%d' %int(k))['output_obj']

start_time=time.time()

gain_his = []

gain_his_ratio = []

mode_his = []

for i in range(N):

if i % (N//10) == 0:

print("%0.1f"%(i/N))

i_idx = i

h = channel[i_idx,:]

# the CD method

gain0, M0 = cd_method(h)

# memorize the largest reward

gain_his.append(gain0)

gain_his_ratio.append(gain_his[-1] / gain[i_idx][0])

mode_his.append(M0)

total_time=time.time()-start_time

print('time_cost:%s'%total_time)

print('average time per channel:%s'%(total_time/N))

plot_gain(gain_his_ratio)

print("gain/max ratio: ", sum(gain_his_ratio)/N)

demo_alternate_weights.py

# #################################################################

# Deep Reinforcement Learning for Online Offloading in Wireless Powered Mobile-Edge Computing Networks

#

# This file contains a demo evaluating the performance of DROO with laternating-weight WDs. It loads the training samples with default WDs' weights from ./data/data_10.mat and with alternated weights from ./data/data_10_WeightsAlternated.mat. The channel gains in both files are the same. However, the optimal offloading mode, resource allocation, and the maximum computation rate in 'data_10_WeightsAlternated.mat' are recalculated since WDs' weights are alternated.

#

# References:

# [1] 1. Liang Huang, Suzhi Bi, and Ying-jun Angela Zhang, “Deep Reinforcement Learning for Online Offloading in Wireless Powered Mobile-Edge Computing Networks”, on arxiv:1808.01977

#

# version 1.0 -- April 2019. Written by Liang Huang (lianghuang AT zjut.edu.cn)

# #################################################################

import scipy.io as sio # import scipy.io for .mat file I/

import numpy as np # import numpy

from memory import MemoryDNN

from optimization import bisection

from main import plot_rate, save_to_txt

import time

def alternate_weights(case_id=0):

'''

Alternate the weights of all WDs. Note that, the maximum computation rate need be recomputed by solving (P2) once any WD's weight is changed.

Input: case_id = 0 for default weights; case_id = 1 for alternated weights.

Output: The alternated weights and the corresponding rate.

'''

# set alternated weights

weights=[[1,1.5,1,1.5,1,1.5,1,1.5,1,1.5],[1.5,1,1.5,1,1.5,1,1.5,1,1.5,1]]

# load the corresponding maximum computation rate

if case_id == 0:

# by defaulst, case_id = 0

rate = sio.loadmat('./data/data_10')['output_obj']

else:

# alternate weights for all WDs, case_id = 1

rate = sio.loadmat('./data/data_10_WeightsAlternated')['output_obj']

return weights[case_id], rate

if __name__ == "__main__":

'''

This demo evaluate DROO with laternating-weight WDs. We evaluate an extreme case by alternating the weights of all WDs between 1 and 1.5 at the same time, specifically, at time frame 6,000 and 8,000.

'''

N = 10 # number of users

n = 10000 # number of time frames, <= 10,000

K = N # initialize K = N

decoder_mode = 'OP' # the quantization mode could be 'OP' (Order-preserving) or 'KNN'

Memory = 1024 # capacity of memory structure

Delta = 32 # Update interval for adaptive K

print('#user = %d, #channel=%d, K=%d, decoder = %s, Memory = %d, Delta = %d'%(N,n,K,decoder_mode, Memory, Delta))

# Load data

channel = sio.loadmat('./data/data_%d' %N)['input_h']

rate = sio.loadmat('./data/data_%d' %N)['output_obj']

# increase h to close to 1 for better training; it is a trick widely adopted in deep learning

channel = channel * 1000000

# generate the train and test data sample index

# data are splitted as 80:20

# training data are randomly sampled with duplication if n > total data size

split_idx = int(.8* len(channel))

num_test = min(len(channel) - split_idx, n - int(.8* n)) # training data size

mem = MemoryDNN(net = [N, 120, 80, N],

learning_rate = 0.01,

training_interval=10,

batch_size=128,

memory_size=Memory

)

start_time=time.time()

rate_his = []

rate_his_ratio = []

mode_his = []

k_idx_his = []

K_his = []

h = channel[0,:]

# initilize the weights by setting case_id = 0.

weight, rate = alternate_weights(0)

print("WD weights at time frame %d:"%(0), weight)

for i in range(n):

# for dynamic number of WDs

if i ==0.6*n:

weight, rate = alternate_weights(1)

print("WD weights at time frame %d:"%(i), weight)

if i ==0.8*n:

weight, rate = alternate_weights(0)

print("WD weights at time frame %d:"%(i), weight)

if i % (n//10) == 0:

print("%0.1f"%(i/n))

if i> 0 and i % Delta == 0:

# index counts from 0

if Delta > 1:

max_k = max(k_idx_his[-Delta:-1]) +1;

else:

max_k = k_idx_his[-1] +1;

K = min(max_k +1, N)

i_idx = i

h = channel[i_idx,:]

# the action selection must be either 'OP' or 'KNN'

m_list = mem.decode(h, K, decoder_mode)

r_list = []

for m in m_list:

# only acitve users are used to compute the rate

r_list.append(bisection(h/1000000, m, weight)[0])

# memorize the largest reward

rate_his.append(np.max(r_list))

rate_his_ratio.append(rate_his[-1] / rate[i_idx][0])

# record the index of largest reward

k_idx_his.append(np.argmax(r_list))

# record K in case of adaptive K

K_his.append(K)

# save the mode with largest reward

mode_his.append(m_list[np.argmax(r_list)])

# if i <0.6*n:

# encode the mode with largest reward

mem.encode(h, m_list[np.argmax(r_list)])

total_time=time.time()-start_time

mem.plot_cost()

plot_rate(rate_his_ratio)

print("Averaged normalized computation rate:", sum(rate_his_ratio[-num_test: -1])/num_test)

print('Total time consumed:%s'%total_time)

print('Average time per channel:%s'%(total_time/n))

# save data into txt

save_to_txt(k_idx_his, "k_idx_his.txt")

save_to_txt(K_his, "K_his.txt")

save_to_txt(mem.cost_his, "cost_his.txt")

save_to_txt(rate_his_ratio, "rate_his_ratio.txt")

save_to_txt(mode_his, "mode_his.txt")

demo_on_off.py

# #################################################################

# Deep Reinforcement Learning for Online Offloading in Wireless Powered Mobile-Edge Computing Networks

#

# This file contains a demo evaluating the performance of DROO by randomly turning on/off some WDs. It loads the training samples from ./data/data_#.mat, where # denotes the number of active WDs in the MEC network. Note that, the maximum computation rate need be recomputed by solving (P2) once a WD is turned off/on.

#

# References:

# [1] 1. Liang Huang, Suzhi Bi, and Ying-jun Angela Zhang, “Deep Reinforcement Learning for Online Offloading in Wireless Powered Mobile-Edge Computing Networks”, submitted to IEEE Journal on Selected Areas in Communications.

#

# version 1.0 -- April 2019. Written by Liang Huang (lianghuang AT zjut.edu.cn)

# #################################################################

import scipy.io as sio # import scipy.io for .mat file I/

import numpy as np # import numpy

from memory import MemoryDNN

from optimization import bisection

from main import plot_rate, save_to_txt

import time

def WD_off(channel, N_active, N):

# turn off one WD

if N_active > 5: # current we support half of WDs are off

N_active = N_active - 1

# set the (N-active-1)th channel to close to 0

# since all channels in each time frame are randomly generated, we turn of the WD with greatest index

channel[:,N_active] = channel[:, N_active] / 1000000 # a programming trick,such that we can recover its channel gain once the WD is turned on again.

print(" The %dth WD is turned on."%(N_active +1))

# update the expected maximum computation rate

rate = sio.loadmat('./data/data_%d' %N_active)['output_obj']

return channel, rate, N_active

def WD_on(channel, N_active, N):

# turn on one WD

if N_active < N:

N_active = N_active + 1

# recover (N_active-1)th channel

channel[:,N_active-1] = channel[:, N_active-1] * 1000000

print(" The %dth WD is turned on."%(N_active))

# update the expected maximum computation rate

rate = sio.loadmat('./data/data_%d' %N_active)['output_obj']

return channel, rate, N_active

if __name__ == "__main__":

'''

This demo evaluate DROO for MEC networks where WDs can be occasionally turned off/on. After DROO converges, we randomly turn off on one WD at each time frame 6,000, 6,500, 7,000, and 7,500, and then turn them on at time frames 8,000, 8,500, and 9,000. At time frame 9,500 , we randomly turn off two WDs, resulting an MEC network with 8 acitve WDs.

'''

N = 10 # number of users

N_active = N # number of effective users

N_off = 0 # number of off-users

n = 10000 # number of time frames, <= 10,000

K = N # initialize K = N

decoder_mode = 'OP' # the quantization mode could be 'OP' (Order-preserving) or 'KNN'

Memory = 1024 # capacity of memory structure

Delta = 32 # Update interval for adaptive K

print('#user = %d, #channel=%d, K=%d, decoder = %s, Memory = %d, Delta = %d'%(N,n,K,decoder_mode, Memory, Delta))

# Load data

channel = sio.loadmat('./data/data_%d' %N)['input_h']

rate = sio.loadmat('./data/data_%d' %N)['output_obj']

# increase h to close to 1 for better training; it is a trick widely adopted in deep learning

channel = channel * 1000000

channel_bak = channel.copy()

# generate the train and test data sample index

# data are splitted as 80:20

# training data are randomly sampled with duplication if n > total data size

split_idx = int(.8* len(channel))

num_test = min(len(channel) - split_idx, n - int(.8* n)) # training data size

mem = MemoryDNN(net = [N, 120, 80, N],

learning_rate = 0.01,

training_interval=10,

batch_size=128,

memory_size=Memory

)

start_time=time.time()

rate_his = []

rate_his_ratio = []

mode_his = []

k_idx_his = []

K_his = []

h = channel[0,:]

for i in range(n):

# for dynamic number of WDs

if i ==0.6*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_off(channel, N_active, N)

if i ==0.65*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_off(channel, N_active, N)

if i ==0.7*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_off(channel, N_active, N)

if i ==0.75*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_off(channel, N_active, N)

if i ==0.8*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_on(channel, N_active, N)

if i ==0.85*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_on(channel, N_active, N)

if i ==0.9*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_on(channel, N_active, N)

channel, rate, N_active = WD_on(channel, N_active, N)

if i == 0.95*n:

print("At time frame %d:"%(i))

channel, rate, N_active = WD_off(channel, N_active, N)

channel, rate, N_active = WD_off(channel, N_active, N)

if i % (n//10) == 0:

print("%0.1f"%(i/n))

if i> 0 and i % Delta == 0:

# index counts from 0

if Delta > 1:

max_k = max(k_idx_his[-Delta:-1]) +1;

else:

max_k = k_idx_his[-1] +1;

K = min(max_k +1, N)

i_idx = i

h = channel[i_idx,:]

# the action selection must be either 'OP' or 'KNN'

m_list = mem.decode(h, K, decoder_mode)

r_list = []

for m in m_list:

# only acitve users are used to compute the rate

r_list.append(bisection(h[0:N_active]/1000000, m[0:N_active])[0])

# memorize the largest reward

rate_his.append(np.max(r_list))

rate_his_ratio.append(rate_his[-1] / rate[i_idx][0])

# record the index of largest reward

k_idx_his.append(np.argmax(r_list))

# record K in case of adaptive K

K_his.append(K)

# save the mode with largest reward

mode_his.append(m_list[np.argmax(r_list)])

# if i <0.6*n:

# encode the mode with largest reward

mem.encode(h, m_list[np.argmax(r_list)])

total_time=time.time()-start_time

mem.plot_cost()

plot_rate(rate_his_ratio)

print("Averaged normalized computation rate:", sum(rate_his_ratio[-num_test: -1])/num_test)

print('Total time consumed:%s'%total_time)

print('Average time per channel:%s'%(total_time/n))

# save data into txt

save_to_txt(k_idx_his, "k_idx_his.txt")

save_to_txt(K_his, "K_his.txt")

save_to_txt(mem.cost_his, "cost_his.txt")

save_to_txt(rate_his_ratio, "rate_his_ratio.txt")

save_to_txt(mode_his, "mode_his.txt")