Point Cloud Registration (PCL + ICP)


1 Point Cloud Overview

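Definition: a point cloud is a set of points expressed in a given coordinate system. Each point can carry rich information, including its 3D coordinates X, Y, Z, color, classification value, intensity, time, and so on.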
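As a rough illustration of the definition above, a point record carrying the listed attributes might look like the following C++ struct. The names Point and PointCloud and the chosen field types are assumptions made for this sketch; PCL itself ships ready-made point types such as pcl::PointXYZ, pcl::PointXYZRGB, and pcl::PointXYZI that cover common subsets of these fields.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical point record mirroring the attributes listed above.
// Real formats (PCD, PLY, LAS) each define their own set of fields.
struct Point {
  float x, y, z;                // 3D coordinates in the cloud's coordinate system
  std::uint8_t r, g, b;         // color
  std::uint8_t classification;  // class label, e.g. ground or vegetation
  float intensity;              // return strength (laser scanners)
  double timestamp;             // acquisition time
};

// A point cloud: a set of points sharing one coordinate system.
using PointCloud = std::vector<Point>;
```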

Sources:

- Acquisition with 3D laser scanners or RGB-D cameras

- 3D reconstruction from 2D images

- Generation from 3D models

Uses: 3D reconstruction, …

2 Point Cloud Registration

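Point cloud registration aims to bring multiple point clouds into a single coordinate system so that together they form a more complete point cloud. Registration has to cope with point clouds that are unstructured, unevenly sampled, and noisy, and it should reach high accuracy at low time cost. Speed and accuracy usually pull against each other, but both can be improved to some extent. Point cloud registration is widely used in 3D reconstruction, parameter estimation, localization, and pose estimation, and it also plays a part in emerging applications such as autonomous driving, robotics, and augmented reality. Existing methods can be grouped into non-learning methods and learning-based methods. Non-learning methods are further divided into classical methods and feature-based methods; learning-based methods are divided into partially learned methods, which combine learning with non-learning components, and end-to-end learned methods [1].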

Registration is usually performed in two stages, coarse first and then fine:

- Coarse (global) registration: based on local geometric features

- Fine (local) registration: requires an initial pose (initial alignment)
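Because the fine stage starts from the pose produced by the coarse stage, the two results chain together. A minimal sketch, assuming both stages report 4x4 homogeneous matrices (PCL's getFinalTransformation, for instance, returns an Eigen::Matrix4f): the overall source-to-target transform is T = T_fine · T_coarse, so the coarse transform is applied first. The helper names below are made up for the example. In PCL the coarse result can also be handed to the fine stage as the initial guess of Registration::align(output, guess).

```cpp
#include <array>

using Mat4 = std::array<std::array<double, 4>, 4>;
using Vec3 = std::array<double, 3>;

// Product of two 4x4 homogeneous transforms: (A * B) applies B first, then A.
Mat4 multiply(const Mat4& A, const Mat4& B) {
  Mat4 C{};
  for (int i = 0; i < 4; ++i)
    for (int j = 0; j < 4; ++j)
      for (int k = 0; k < 4; ++k) C[i][j] += A[i][k] * B[k][j];
  return C;
}

// Apply a homogeneous transform to a 3D point (implicit w = 1).
Vec3 transformPoint(const Mat4& T, const Vec3& p) {
  Vec3 out{};
  for (int r = 0; r < 3; ++r)
    out[r] = T[r][0] * p[0] + T[r][1] * p[1] + T[r][2] * p[2] + T[r][3];
  return out;
}

// Coarse-to-fine: the fine stage refines a cloud already moved by the coarse
// estimate, so the overall transform is T_fine * T_coarse.
Mat4 composeCoarseFine(const Mat4& coarse, const Mat4& fine) { return multiply(fine, coarse); }
```

Matrix products do not commute, so the order matters: with a 90 degree rotation about z as the coarse step and a translation by (1, 0, 0) as the fine step, the point (1, 0, 0) ends up at (1, 1, 0), whereas the reversed product would send it to (0, 2, 0).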

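Related algorithms:

- ICP (Iterative Closest Point) is the most classic point cloud registration algorithm (Besl and McKay, 1992). It estimates the transformation matrix by alternating between searching for corresponding points and minimizing the overall distance between the point pairs. Because the objective is non-convex, ICP easily gets stuck in local minima, and when aligning the clouds requires a large rotation or translation, it often fails to find the correct result.

- NDT (Normal Distributions Transform) is another classic fine registration method. It voxelizes the target point cloud, computes a normal distribution for the points in each voxel, and registers by maximizing the score of the source points under these probability densities.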
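To make the NDT scoring idea concrete, here is a deliberately simplified sketch that only evaluates the score of a given, already transformed source cloud; it does not optimize the transform. Assumptions: the voxel size is chosen by the caller, each voxel keeps only a per-axis (diagonal) variance instead of the full 3x3 covariance used by real NDT, and each point contributes the unnormalized Gaussian exp(-0.5 · squared Mahalanobis distance). All names are hypothetical.

```cpp
#include <array>
#include <cmath>
#include <map>
#include <tuple>
#include <vector>

using Vec3 = std::array<double, 3>;
using VoxelKey = std::tuple<long, long, long>;

// Per-voxel Gaussian of the target cloud. Simplification: diagonal covariance only
// (per-axis variance); real NDT uses the full 3x3 covariance matrix.
struct VoxelGaussian {
  Vec3 mean{0.0, 0.0, 0.0};
  Vec3 var{0.0, 0.0, 0.0};
};

VoxelKey voxelOf(const Vec3& p, double size) {
  return std::make_tuple(static_cast<long>(std::floor(p[0] / size)),
                         static_cast<long>(std::floor(p[1] / size)),
                         static_cast<long>(std::floor(p[2] / size)));
}

// Voxelize the target cloud and fit one Gaussian per occupied voxel.
std::map<VoxelKey, VoxelGaussian> buildNdtGrid(const std::vector<Vec3>& target, double size,
                                               double minVar = 1e-4) {
  std::map<VoxelKey, std::vector<Vec3>> buckets;
  for (const Vec3& p : target) buckets[voxelOf(p, size)].push_back(p);
  std::map<VoxelKey, VoxelGaussian> grid;
  for (const auto& [key, pts] : buckets) {
    const double m = static_cast<double>(pts.size());
    VoxelGaussian g;
    for (const Vec3& p : pts)
      for (int d = 0; d < 3; ++d) g.mean[d] += p[d] / m;
    for (const Vec3& p : pts)
      for (int d = 0; d < 3; ++d) g.var[d] += (p[d] - g.mean[d]) * (p[d] - g.mean[d]) / m;
    for (int d = 0; d < 3; ++d) g.var[d] += minVar;  // keeps flat or single-point voxels invertible
    grid[key] = g;
  }
  return grid;
}

// NDT-style score of an (already transformed) source cloud: each point adds the
// unnormalized Gaussian density of the voxel it falls into. NDT maximizes this over R, t.
double ndtScore(const std::vector<Vec3>& source, const std::map<VoxelKey, VoxelGaussian>& grid,
                double size) {
  double score = 0.0;
  for (const Vec3& p : source) {
    auto it = grid.find(voxelOf(p, size));
    if (it == grid.end()) continue;  // empty voxel: no contribution
    double mahalanobis2 = 0.0;
    for (int d = 0; d < 3; ++d) {
      const double diff = p[d] - it->second.mean[d];
      mahalanobis2 += diff * diff / it->second.var[d];
    }
    score += std::exp(-0.5 * mahalanobis2);
  }
  return score;
}
```

A full NDT registration would wrap ndtScore in an optimizer that searches for the R and t maximizing it; PCL's pcl::NormalDistributionsTransform, for instance, uses a Newton-type optimizer for this.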

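ICP and NDT are both non-learning registration methods. With advances in deep learning and computing power, learning-based registration methods have gradually emerged; they have an advantage in speed, especially for coarse registration.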

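[1] Li Jianwei, Zhan Jiawang. 三维点云配准方法研究进展 (Research progress on 3D point cloud registration methods)[J]. 中国图象图形学报 (Journal of Image and Graphics), 2022, 27(02): 349-367.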

ICP (Iterative Closest Point)

point-to-point ICP

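Algorithm steps:

- Find the correspondences between the source and target point clouds;

- Build an objective function with least squares and minimize the overall distance between the point pairs. The two steps are repeated until convergence, which yields the final transformation matrix.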
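The first step, finding correspondences, can be sketched as a brute-force nearest-neighbor search. This is an O(N·M) illustration with made-up names; practical implementations, PCL's included, query a k-d tree instead.

```cpp
#include <array>
#include <cstddef>
#include <limits>
#include <vector>

using Vec3 = std::array<double, 3>;

double squaredDistance(const Vec3& a, const Vec3& b) {
  double s = 0.0;
  for (int d = 0; d < 3; ++d) s += (a[d] - b[d]) * (a[d] - b[d]);
  return s;
}

// Step 1 of point-to-point ICP: for every source point p_i, return the index
// of its nearest target point q_i (brute force over the whole target cloud).
std::vector<std::size_t> findCorrespondences(const std::vector<Vec3>& source,
                                             const std::vector<Vec3>& target) {
  std::vector<std::size_t> match(source.size(), 0);
  for (std::size_t i = 0; i < source.size(); ++i) {
    double best = std::numeric_limits<double>::max();
    for (std::size_t j = 0; j < target.size(); ++j) {
      const double d = squaredDistance(source[i], target[j]);
      if (d < best) {
        best = d;
        match[i] = j;
      }
    }
  }
  return match;
}
```

Each returned index j pairs source point i with target point j; the second step then solves for R and t from these pairs, and both steps repeat on the updated source cloud.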

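Pros and cons:

- High accuracy; no feature points need to be extracted;

- A coarse registration must be done first, otherwise ICP easily falls into a local optimum;

- Only applicable to rigid registration;

  Rigid registration deals with motion of the data as a whole (translation, rotation, etc.); non-rigid registration deals with flexible deformations, where the relative positions of any two points (pixels, in the image case) may change during the transformation.

- Not suitable for registering point clouds that only partially overlap.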
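Since ICP only handles rigid motion, every estimate it produces has the form x' = R x + t, with R a proper rotation. The sketch below applies such a transform; the helper names are hypothetical and the rotation about z is chosen only for illustration. Distances between points are unchanged by it, which is exactly what separates rigid from non-rigid registration.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Rigid transform x' = R x + t. R is orthonormal with det = +1, so the shape of
// the cloud is preserved and only its pose changes.
Vec3 applyRigid(const Mat3& R, const Vec3& t, const Vec3& x) {
  Vec3 y{};
  for (int r = 0; r < 3; ++r) {
    y[r] = t[r];
    for (int c = 0; c < 3; ++c) y[r] += R[r][c] * x[c];
  }
  return y;
}

// Rotation by angle theta (radians) about the z axis, for illustration.
Mat3 rotationZ(double theta) {
  const double c = std::cos(theta), s = std::sin(theta);
  return {{{c, -s, 0.0}, {s, c, 0.0}, {0.0, 0.0, 1.0}}};
}
```

For example, rotating (1, 0, 0) by 90 degrees about z and then translating by (1, 2, 3) gives (1, 3, 3) up to rounding.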

Algorithm principle

Let $\{Q\}$ be the target point cloud (reference cloud) and $\{P\}$ the source point cloud (the cloud to be registered). Let $p_i\ (i = 1, 2, \dots, n)$ be a point in $\{P\}$ and $q_i$ the point in $\{Q\}$ closest to $p_i$; together they form the point pair $(q_i, p_i)$.

We need the rigid transform from $\{P\}$ to $\{Q\}$, i.e. the rotation matrix $R$ and the translation vector $t$. If the transform parameters are exact, every point $p_i$ in $\{P\}$ coincides exactly with $q_i$ in $\{Q\}$ after transformation. The error function is therefore defined as:

$$E(R, t) = \frac{1}{n}\sum_{i=1}^{n}\left\| q_i - (R\,p_i + t) \right\|_2^2$$

$$\|\mathbf{x}\|_2 = \sqrt{\sum_{j} x_j^2}$$

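Here $\|\cdot\|_2$ is the Euclidean norm (commonly used to compute the length of a vector): the square root of the sum of the squares of the vector's elements.

In the error function, $n$ is the number of point pairs between the two clouds, $p_i$ is a point in the source cloud, $q_i$ is the nearest neighbor of $p_i$ in the target cloud, and $R$ and $t$ are the rotation matrix and translation vector.

The ICP problem can therefore be stated as: find the $R$ and $t$ that minimize $E(R, t)$.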
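Written out in code, the objective is an average of squared residuals. This sketch assumes the pairs are already matched index by index (p[i] with q[i]) and uses the mean form E(R, t) = (1/n) Σ ||q_i - (R p_i + t)||²; the function name is hypothetical.

```cpp
#include <array>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// E(R, t) = (1/n) * sum_i || q_i - (R p_i + t) ||^2 over n matched pairs (p[i], q[i]).
double icpError(const std::vector<Vec3>& p, const std::vector<Vec3>& q,
                const Mat3& R, const Vec3& t) {
  double sum = 0.0;
  for (std::size_t i = 0; i < p.size(); ++i) {
    for (int r = 0; r < 3; ++r) {
      double transformed = t[r];
      for (int c = 0; c < 3; ++c) transformed += R[r][c] * p[i][c];
      const double diff = q[i][r] - transformed;
      sum += diff * diff;  // accumulates the squared Euclidean norm component by component
    }
  }
  return p.empty() ? 0.0 : sum / static_cast<double>(p.size());
}
```

Evaluating icpError before and after each iteration is a simple way to watch ICP converge: it should not increase from one iteration to the next.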

ICP can be solved in two ways: with linear algebra (SVD), or with nonlinear optimization.

SVD solution steps

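- Compute the centroids of the two matched point sets:

  $$\overline{\mathbf{p}} = \frac{1}{n}\sum_{i=1}^{n}\mathbf{p}_i, \qquad \overline{\mathbf{q}} = \frac{1}{n}\sum_{i=1}^{n}\mathbf{q}_i$$

- Subtract the centroids to obtain the de-centered point sets:

  $$\mathbf{x}_i := \mathbf{p}_i - \overline{\mathbf{p}}, \qquad \mathbf{y}_i := \mathbf{q}_i - \overline{\mathbf{q}}, \qquad i = 1, 2, \dots, n$$

- Compute the 3x3 matrix $H$:

  $$H = XY^T = \sum_{i=1}^{n}\mathbf{x}_i\mathbf{y}_i^T$$

  where $X$ and $Y$ are the 3xn matrices whose columns are the de-centered source points $\mathbf{x}_i$ and target points $\mathbf{y}_i$, respectively.

- Compute the SVD $H = U\Sigma V^T$; the optimal rotation matrix is

  $$R^* = VU^T$$

  If $\det(VU^T) = -1$, this matrix is a reflection rather than a rotation (possible with noisy or nearly planar data); the usual fix is $R^* = V\,\mathrm{diag}(1, 1, \det(VU^T))\,U^T$. For details on SVD, see: Singular Value Decomposition (SVD) - Zhihu (zhihu.com).

- Compute the optimal translation vector:

  $$\mathbf{t} = \overline{\mathbf{q}} - R^*\overline{\mathbf{p}}$$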
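The closed-form step can be sketched in plain C++. One substitution, stated plainly: the standard library has no SVD routine, so instead of decomposing H this sketch uses Horn's quaternion method (1987), which builds a symmetric 4x4 matrix from the same H and takes the eigenvector of its largest eigenvalue as the optimal rotation, expressed as a unit quaternion. It reaches the same least-squares optimum as the SVD route (including the usual guard against a reflection, det = -1) and always returns a proper rotation. The small Jacobi eigen-solver and all names here are assumptions for this example; with Eigen available, Eigen::JacobiSVD applied to H, or Eigen::umeyama, would be the usual shortcut.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;
using Mat4 = std::array<std::array<double, 4>, 4>;

struct Rigid {
  Mat3 R;
  Vec3 t;
};

// Cyclic Jacobi eigen-solver for a symmetric 4x4 matrix A. Afterwards the
// diagonal of A holds the eigenvalues and the columns of V the eigenvectors.
void jacobiEigen(Mat4& A, Mat4& V) {
  for (int i = 0; i < 4; ++i)
    for (int j = 0; j < 4; ++j) V[i][j] = (i == j) ? 1.0 : 0.0;
  for (int sweep = 0; sweep < 50; ++sweep) {
    double off = 0.0;
    for (int p = 0; p < 4; ++p)
      for (int q = p + 1; q < 4; ++q) off += A[p][q] * A[p][q];
    if (off < 1e-24) return;
    for (int p = 0; p < 4; ++p)
      for (int q = p + 1; q < 4; ++q) {
        if (A[p][q] == 0.0) continue;
        // Rotation chosen so that entry (p, q) becomes zero
        const double theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q]);
        const double t = (theta >= 0.0 ? 1.0 : -1.0) / (std::abs(theta) + std::sqrt(theta * theta + 1.0));
        const double c = 1.0 / std::sqrt(t * t + 1.0), s = t * c;
        for (int k = 0; k < 4; ++k) {  // A <- A J
          const double akp = A[k][p], akq = A[k][q];
          A[k][p] = c * akp - s * akq;
          A[k][q] = s * akp + c * akq;
        }
        for (int k = 0; k < 4; ++k) {  // A <- J^T A
          const double apk = A[p][k], aqk = A[q][k];
          A[p][k] = c * apk - s * aqk;
          A[q][k] = s * apk + c * aqk;
        }
        for (int k = 0; k < 4; ++k) {  // V <- V J
          const double vkp = V[k][p], vkq = V[k][q];
          V[k][p] = c * vkp - s * vkq;
          V[k][q] = s * vkp + c * vkq;
        }
      }
  }
}

// Closed-form R, t minimizing sum_i ||q_i - (R p_i + t)||^2 for matched pairs (P[i], Q[i]).
Rigid alignMatchedPairs(const std::vector<Vec3>& P, const std::vector<Vec3>& Q) {
  const double n = static_cast<double>(P.size());
  Vec3 pc{0.0, 0.0, 0.0}, qc{0.0, 0.0, 0.0};  // centroids p-bar and q-bar
  for (std::size_t i = 0; i < P.size(); ++i)
    for (int d = 0; d < 3; ++d) {
      pc[d] += P[i][d] / n;
      qc[d] += Q[i][d] / n;
    }
  Mat3 H{};  // H = X Y^T: sum of outer products x_i y_i^T of de-centered points
  for (std::size_t i = 0; i < P.size(); ++i)
    for (int a = 0; a < 3; ++a)
      for (int b = 0; b < 3; ++b) H[a][b] += (P[i][a] - pc[a]) * (Q[i][b] - qc[b]);
  // Horn's symmetric 4x4 matrix, used here instead of the SVD of H
  const double Sxx = H[0][0], Sxy = H[0][1], Sxz = H[0][2];
  const double Syx = H[1][0], Syy = H[1][1], Syz = H[1][2];
  const double Szx = H[2][0], Szy = H[2][1], Szz = H[2][2];
  Mat4 N = {{{Sxx + Syy + Szz, Syz - Szy, Szx - Sxz, Sxy - Syx},
             {Syz - Szy, Sxx - Syy - Szz, Sxy + Syx, Szx + Sxz},
             {Szx - Sxz, Sxy + Syx, -Sxx + Syy - Szz, Syz + Szy},
             {Sxy - Syx, Szx + Sxz, Syz + Szy, -Sxx - Syy + Szz}}};
  Mat4 V{};
  jacobiEigen(N, V);
  int best = 0;  // eigenvector of the largest eigenvalue = optimal unit quaternion
  for (int k = 1; k < 4; ++k)
    if (N[k][k] > N[best][best]) best = k;
  const double w = V[0][best], x = V[1][best], y = V[2][best], z = V[3][best];
  Rigid T;
  T.R = {{{1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)},
          {2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)},
          {2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)}}};
  for (int r = 0; r < 3; ++r) {  // t = q-bar - R p-bar
    T.t[r] = qc[r];
    for (int c = 0; c < 3; ++c) T.t[r] -= T.R[r][c] * pc[c];
  }
  return T;
}
```

Given pairs generated as q_i = R p_i + t from at least three non-collinear points, alignMatchedPairs should recover R and t up to floating-point error; inside ICP it would be called once per iteration on the current correspondences.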

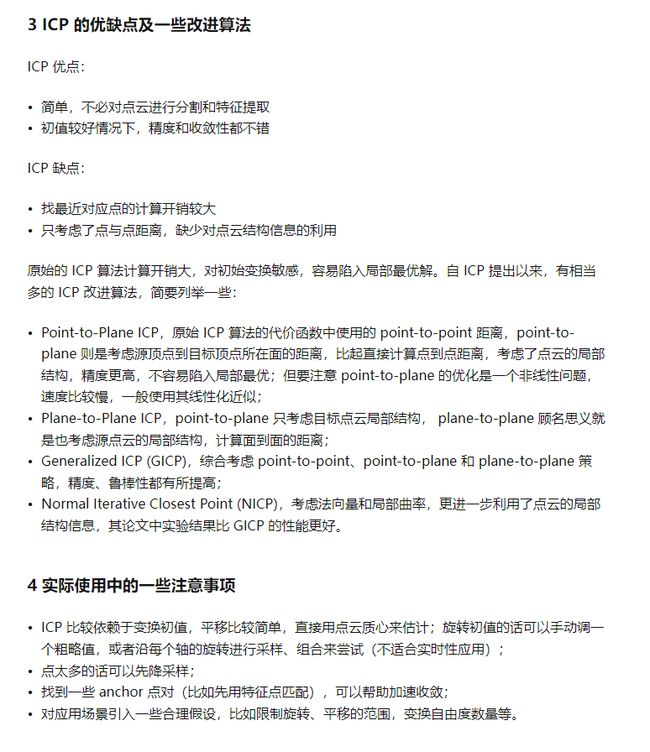

Pros, cons, and improvements of ICP

Source of the figure above: 3D Point Cloud Registration – ICP Algorithm Principle and Derivation - Zhihu (zhihu.com)

Using the ICP interface in PCL

Reference article: PCL|ICP|Interactive Iterative Closest Point|Code Practice - Zhihu (zhihu.com)

ICP interfaces and variants in PCL:

- Point-to-point: pcl::IterativeClosestPoint< PointSource, PointTarget, Scalar >

- Point-to-plane: pcl::IterativeClosestPointWithNormals< PointSource, PointTarget, Scalar >

- Plane-to-plane: pcl::GeneralizedIterativeClosestPoint< PointSource, PointTarget >
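The three variants differ mainly in which distance they minimize. As an illustration in textbook form (not PCL's internal code; the names are hypothetical), point-to-point penalizes the full offset between a transformed source point p' and its match q, while point-to-plane only penalizes the offset along the target's unit surface normal n at q, so points may slide along a flat surface. Plane-to-plane (GICP) additionally models local covariances on both clouds and is not shown.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;

double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

Vec3 subtract(const Vec3& a, const Vec3& b) { return {a[0] - b[0], a[1] - b[1], a[2] - b[2]}; }

// Point-to-point: squared distance between the transformed source point p' and its match q.
double pointToPointResidual(const Vec3& pTransformed, const Vec3& q) {
  const Vec3 d = subtract(pTransformed, q);
  return dot(d, d);
}

// Point-to-plane: squared distance from p' to the tangent plane through q with unit normal n.
// Motion within the plane is not penalized; only the offset along n counts.
double pointToPlaneResidual(const Vec3& pTransformed, const Vec3& q, const Vec3& n) {
  const double along = dot(subtract(pTransformed, q), n);
  return along * along;
}
```

For a target point at the origin on the plane z = 0 (normal (0, 0, 1)), the source point (3, 4, 0) has a point-to-point residual of 25 but a point-to-plane residual of 0, which is why point-to-plane ICP typically converges faster on smooth surfaces.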

The IterativeClosestPoint template class is a basic implementation of ICP. It solves each alignment step with Singular Value Decomposition (SVD), and iteration stops when any of the following termination criteria is met:

- Maximum number of iterations: the number of iterations has reached the maximum number set by the user (via setMaximumIterations)

- Difference between two consecutive transformations: the epsilon (difference) between the previous transformation and the current estimated transformation is smaller than a user-imposed value (via setTransformationEpsilon)

- Squared error: the sum of Euclidean squared errors is smaller than a user-defined threshold (via setEuclideanFitnessEpsilon)
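A hypothetical stopping rule combining the three criteria might look as follows. Measuring the change between two transforms as the largest element-wise difference of the 4x4 matrices is an assumption chosen for simplicity and is not necessarily how PCL measures it; criterion 3 is taken literally from the list above, i.e. a threshold on the sum of squared errors. All names are illustrative.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;

// One possible measure of the difference between two transforms:
// the largest element-wise change (illustrative choice only).
double transformationChange(const Mat4& previous, const Mat4& current) {
  double change = 0.0;
  for (int r = 0; r < 4; ++r)
    for (int c = 0; c < 4; ++c) change = std::max(change, std::abs(current[r][c] - previous[r][c]));
  return change;
}

// Hypothetical stopping rule: returns true as soon as any of the three criteria fires.
bool shouldStop(int iteration, int maxIterations,
                const Mat4& previous, const Mat4& current, double transformationEpsilon,
                double squaredErrorSum, double fitnessEpsilon) {
  if (iteration >= maxIterations) return true;                                        // criterion 1
  if (transformationChange(previous, current) < transformationEpsilon) return true;  // criterion 2
  if (squaredErrorSum < fitnessEpsilon) return true;                                   // criterion 3
  return false;
}
```

Because any single rule ends the loop, a loose threshold on one criterion can stop ICP early regardless of how the other two look.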

Basic usage:

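```cpp
#include <iostream>
#include <pcl/io/pcd_io.h>
#include <pcl/point_types.h>
#include <pcl/registration/icp.h>

int main ()
{
  using Cloud = pcl::PointCloud<pcl::PointXYZ>;
  Cloud::Ptr cloud_source (new Cloud);
  Cloud::Ptr cloud_target (new Cloud);
  // Placeholder file names: replace with your own data
  if (pcl::io::loadPCDFile<pcl::PointXYZ> ("source.pcd", *cloud_source) < 0 ||
      pcl::io::loadPCDFile<pcl::PointXYZ> ("target.pcd", *cloud_target) < 0)
    return -1;

  pcl::IterativeClosestPoint<pcl::PointXYZ, pcl::PointXYZ> icp;

  // Set the input source and target (setInputSource replaces the deprecated setInputCloud)
  icp.setInputSource (cloud_source);
  icp.setInputTarget (cloud_target);

  // Set the max correspondence distance to 5 cm (i.e., correspondences farther apart are ignored)
  icp.setMaxCorrespondenceDistance (0.05);

  // Set the maximum number of iterations (criterion 1)
  icp.setMaximumIterations (50);

  // Set the transformation epsilon (criterion 2)
  icp.setTransformationEpsilon (1e-8);

  // Set the euclidean distance difference epsilon (criterion 3)
  icp.setEuclideanFitnessEpsilon (1);

  // Perform the alignment; the transformed source cloud is written to cloud_source_registered
  Cloud cloud_source_registered;
  icp.align (cloud_source_registered);

  // Obtain the transformation that aligned cloud_source to cloud_source_registered
  Eigen::Matrix4f transformation = icp.getFinalTransformation ();
  std::cout << "converged: " << icp.hasConverged ()
            << ", fitness score: " << icp.getFitnessScore () << std::endl
            << transformation << std::endl;
  return 0;
}
```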

Official demo:

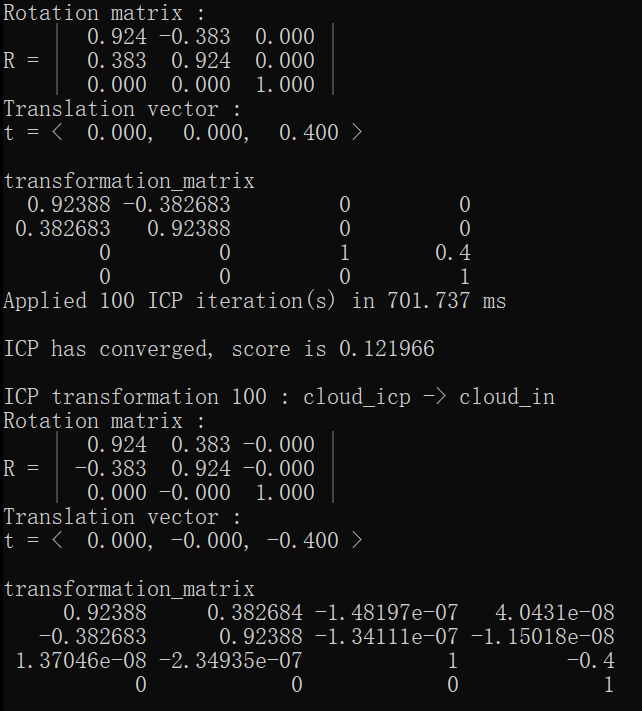

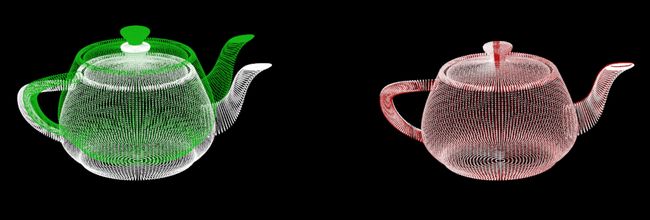

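Result of 100 iterations on teapot.ply (41472 points):

In the figures, the white point cloud is the target, green is the source before registration, and red is the source after registration.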

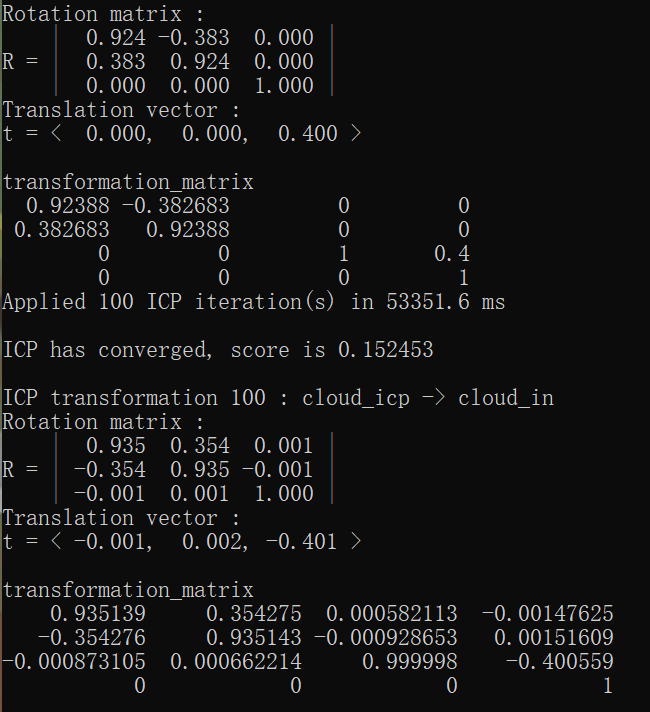

Result of 100 iterations on bunny.ply (1889 points):