RESA Lane Detection: Computing the Computation Cost (FLOPs) and Parameter Count

Understanding FLOPS: basic concepts

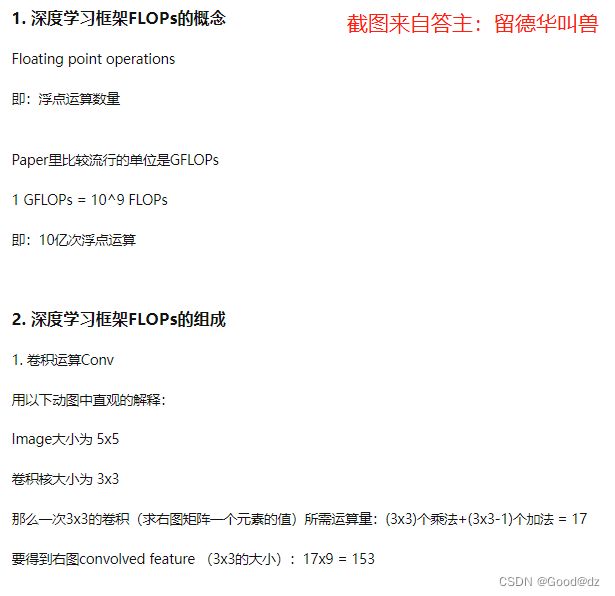

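FLOPS: all uppercase. It stands for "floating point operations per second", that is, the number of floating-point operations executed per second. Think of it as computation speed; it is a metric of hardware performance.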

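FLOPs: note the lowercase s. It stands for "floating point operations" (the s marks the plural), that is, the total number of floating-point operations. Think of it as the amount of computation; it can be used to measure the complexity of an algorithm or model.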

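In a deep learning model, FLOPs come from convolutions, transposed convolutions (deconvolutions), activation functions, linear layers, BatchNorm, Upsample, pooling layers, and so on. Convolutions usually account for the largest share. The FLOPs of a convolution depend on the size of the input image fed to the network after preprocessing, whereas its number of parameters (#Parameters) does not depend on the image size.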
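
To make the last point concrete, here is a minimal sketch that counts the parameters and multiply-accumulate operations (MACs) of a single standard convolution by hand. The helper names `conv2d_params` and `conv2d_macs` are hypothetical and written for this illustration only; the layer sizes are arbitrary, and one MAC is counted as roughly two FLOPs (one multiply plus one add).

```python
def conv2d_params(c_in, c_out, k_h, k_w, bias=True):
    """Learnable parameters of a standard (groups=1) 2D convolution."""
    return c_out * (c_in * k_h * k_w + (1 if bias else 0))


def conv2d_macs(c_in, c_out, k_h, k_w, out_h, out_w):
    """MACs for one forward pass with batch size 1 (bias additions ignored)."""
    return c_in * k_h * k_w * c_out * out_h * out_w


# Illustrative layer: 3x3 convolution, 64 -> 128 channels, stride 1, padding 1,
# so the output feature map has the same height and width as the input.
for h, w in [(224, 224), (448, 448)]:
    params = conv2d_params(64, 128, 3, 3, bias=False)
    macs = conv2d_macs(64, 128, 3, 3, h, w)
    print(f"{h}x{w}: params={params:,}  MACs={macs:,}  FLOPs~{2 * macs:,}")
```

Doubling both the height and the width multiplies the MACs by four, while the parameter count stays the same.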

Installing thop

pip install thop

A simple example

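    import torch
    from torchvision.models import resnet50
    from thop import profile, clever_format

    # Build the model and a dummy input with the shape the network expects.
    model = resnet50()
    dummy_input = torch.randn(1, 3, 224, 224)

    # profile() returns raw counts: multiply-accumulate operations (MACs) and parameters.
    macs, params = profile(model, inputs=(dummy_input, ))

    # clever_format() turns the raw counts into human-readable strings.
    macs, params = clever_format([macs, params], "%.3f")
    print('MACs: {}'.format(macs))
    print('Params: {}'.format(params))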
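
Note that thop reports MACs, not FLOPs. As a rough sketch of how the raw counts relate to the figures usually quoted, the snippet below converts MACs to an approximate FLOPs value (assuming the common convention of 2 FLOPs per MAC) and formats counts with K/M/G suffixes. Both helpers, `macs_to_flops` and `human_readable`, are hypothetical names written for this illustration; they are not part of thop and do not reproduce its `clever_format` implementation. The input numbers are made up.

```python
def macs_to_flops(macs):
    """Approximate FLOPs, assuming one MAC = one multiply + one add."""
    return 2 * macs


def human_readable(count, precision=3):
    """Format a raw count with T/G/M/K suffixes (powers of 1000)."""
    for threshold, suffix in ((10**12, "T"), (10**9, "G"), (10**6, "M"), (10**3, "K")):
        if count >= threshold:
            return f"{count / threshold:.{precision}f}{suffix}"
    return f"{count:.{precision}f}"


# Made-up raw counts, standing in for the values returned by profile().
raw_macs, raw_params = 4_100_000_000, 1_500_000
print("MACs:  ", human_readable(raw_macs))
print("FLOPs~:", human_readable(macs_to_flops(raw_macs)))
print("Params:", human_readable(raw_params))
```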

Get the source code running by following the RESA reproduction guide

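In the resa-main folder, add a new .py file and copy the following code into it to compute the network's computation cost and parameter count: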

    import argparse

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    from utils.config import Config
    from models.resnet import ResNetWrapper
    # The decoders are looked up by name through eval(cfg.decoder) in RESANet.
    from models.decoder import BUSD, PlainDecoder

    class RESA(nn.Module):
        def __init__(self, cfg):
            super(RESA, self).__init__()
            self.iter = cfg.resa.iter
            chan = cfg.resa.input_channel
            fea_stride = cfg.backbone.fea_stride
            # Size of the feature map produced by the backbone.
            self.height = cfg.img_height // fea_stride
            self.width = cfg.img_width // fea_stride
            self.alpha = cfg.resa.alpha
            conv_stride = cfg.resa.conv_stride

            for i in range(self.iter):
                # 1 x k convolutions for the down ('d') and up ('u') passes.
                conv_vert1 = nn.Conv2d(
                    chan, chan, (1, conv_stride),
                    padding=(0, conv_stride//2), groups=1, bias=False)
                conv_vert2 = nn.Conv2d(
                    chan, chan, (1, conv_stride),
                    padding=(0, conv_stride//2), groups=1, bias=False)

                setattr(self, 'conv_d'+str(i), conv_vert1)
                setattr(self, 'conv_u'+str(i), conv_vert2)

                # k x 1 convolutions for the right ('r') and left ('l') passes.
                conv_hori1 = nn.Conv2d(
                    chan, chan, (conv_stride, 1),
                    padding=(conv_stride//2, 0), groups=1, bias=False)
                conv_hori2 = nn.Conv2d(
                    chan, chan, (conv_stride, 1),
                    padding=(conv_stride//2, 0), groups=1, bias=False)

                setattr(self, 'conv_r'+str(i), conv_hori1)
                setattr(self, 'conv_l'+str(i), conv_hori2)

                # Cyclic shift indices along the height; the step grows with i.
                idx_d = (torch.arange(self.height) + self.height //
                         2**(self.iter - i)) % self.height
                setattr(self, 'idx_d'+str(i), idx_d)

                idx_u = (torch.arange(self.height) - self.height //
                         2**(self.iter - i)) % self.height
                setattr(self, 'idx_u'+str(i), idx_u)

                # Cyclic shift indices along the width.
                idx_r = (torch.arange(self.width) + self.width //
                         2**(self.iter - i)) % self.width
                setattr(self, 'idx_r'+str(i), idx_r)

                idx_l = (torch.arange(self.width) - self.width //
                         2**(self.iter - i)) % self.width
                setattr(self, 'idx_l'+str(i), idx_l)

        def forward(self, x):
            x = x.clone()

            # Vertical passes: gather shifted rows, convolve, and add in place.
            for direction in ['d', 'u']:
                for i in range(self.iter):
                    conv = getattr(self, 'conv_' + direction + str(i))
                    idx = getattr(self, 'idx_' + direction + str(i))
                    x.add_(self.alpha * F.relu(conv(x[..., idx, :])))

            # Horizontal passes: same scheme on shifted columns.
            for direction in ['r', 'l']:
                for i in range(self.iter):
                    conv = getattr(self, 'conv_' + direction + str(i))
                    idx = getattr(self, 'idx_' + direction + str(i))
                    x.add_(self.alpha * F.relu(conv(x[..., idx])))

            return x

    class ExistHead(nn.Module):
        def __init__(self, cfg=None):
            super(ExistHead, self).__init__()
            self.cfg = cfg

            self.dropout = nn.Dropout2d(0.1)
            self.conv8 = nn.Conv2d(128, cfg.num_classes, 1)

            # The feature map is downsampled once more by the 2x2 average pooling in forward().
            stride = cfg.backbone.fea_stride * 2
            self.fc9 = nn.Linear(
                int(cfg.num_classes * cfg.img_width / stride * cfg.img_height / stride), 128)
            self.fc10 = nn.Linear(128, cfg.num_classes-1)

        def forward(self, x):
            x = self.dropout(x)
            x = self.conv8(x)

            x = F.softmax(x, dim=1)
            x = F.avg_pool2d(x, 2, stride=2, padding=0)
            # Flatten everything except the batch dimension.
            x = x.view(-1, x.numel() // x.shape[0])
            x = self.fc9(x)
            x = F.relu(x)
            x = self.fc10(x)
            x = torch.sigmoid(x)

            return x

    class RESANet(nn.Module):
        def __init__(self, cfg):
            super(RESANet, self).__init__()
            self.cfg = cfg
            self.backbone = ResNetWrapper(cfg)
            self.resa = RESA(cfg)
            # cfg.decoder holds a class name such as BUSD or PlainDecoder.
            self.decoder = eval(cfg.decoder)(cfg)
            self.heads = ExistHead(cfg)

        def forward(self, batch):
            fea = self.backbone(batch)
            fea = self.resa(fea)
            seg = self.decoder(fea)
            exist = self.heads(fea)

            output = {'seg': seg, 'exist': exist}

            return output

    def parse_args():
        # Same arguments as the training script; only `config` is used for profiling.
        parser = argparse.ArgumentParser(description='Train a detector')
        parser.add_argument('config', help='train config file path')
        parser.add_argument(
            '--work_dirs', type=str, default='work_dirs',
            help='work dirs')
        parser.add_argument(
            '--load_from', default=None,
            help='the checkpoint file to resume from')
        parser.add_argument(
            '--finetune_from', default=None,
            help='whether to finetune from the checkpoint')
        parser.add_argument(
            '--validate',
            action='store_true',
            help='whether to evaluate the checkpoint during training')
        parser.add_argument(
            '--view',
            action='store_true',
            help='whether to show visualization result')
        parser.add_argument('--gpus', nargs='+', type=int, default='0')
        parser.add_argument('--seed', type=int,
                            default=None, help='random seed')
        args = parser.parse_args()

        return args

    if __name__ == '__main__':
        from thop import profile, clever_format

        args = parse_args()
        cfg = Config.fromfile(args.config)

        model = RESANet(cfg).cuda()
        # Dummy input of shape (batch, channels, img_height, img_width); 368 x 640 matches TuSimple.
        x = torch.zeros((1, 3, 368, 640)).cuda() + 1
        macs, params = profile(model, inputs=(x,))

        # macs, params = clever_format([macs, params], "%.3f")
        # print('MACs: {}'.format(macs))
        # print('Params: {}'.format(params))

        # Print the result as a small table. thop returns MACs, so the column is
        # labeled MACs; multiply by 2 for an approximate FLOPs figure.
        name = 'disA'
        print("%s | %s | %s" % ("Model", "Params(M)", "MACs(G)"))
        print("---|---|---")
        print("%s | %.2f | %.2f" % (name, params / (1000 ** 2), macs / (1000 ** 3)))
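
The index tensors built in the RESA class above (`idx_d`, `idx_u`, `idx_r`, `idx_l`) are cyclic shifts whose step size doubles with each iteration. The following plain-Python sketch reproduces the same arithmetic on a tiny example so the pattern is easy to see. The function name `shift_indices` and the sizes used (length 8, 2 iterations) are illustrative assumptions, not values taken from the RESA configs.

```python
def shift_indices(length, num_iters, i, sign=+1):
    """Cyclic shift indices for iteration i, mirroring
    (arange(length) +/- length // 2**(num_iters - i)) % length."""
    step = length // 2 ** (num_iters - i)
    return [(k + sign * step) % length for k in range(length)]


length, num_iters = 8, 2  # illustrative sizes only
for i in range(num_iters):
    print(f"iter {i}: down/right {shift_indices(length, num_iters, i, +1)}")
    print(f"iter {i}: up/left    {shift_indices(length, num_iters, i, -1)}")
```

Position k of the shifted feature map takes its values from position `idx[k]` of the original, so early iterations pass information between nearby rows or columns and later iterations reach across half of the feature map.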

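Run the script with the following command (replace main.py with the name of the file you created if it differs):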

python main.py configs/tusimple.py

Note: if you use configs/culane.py, change the dimensions of the input x to match CULane's img_height = 288 and img_width = 800.
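
The input shape has to agree with the config because `ExistHead.fc9` derives its input width from `cfg.img_height` and `cfg.img_width`; a mismatched dummy input would produce a flattened feature vector of the wrong length. The sketch below recomputes that width with the same formula as the script. The helper names are hypothetical, and `fea_stride = 8` together with `num_classes = 7` (TuSimple) and `num_classes = 5` (CULane) are assumptions about the config files that you should check against your own copies.

```python
def dummy_input_shape(img_height, img_width, batch=1, channels=3):
    """Shape of the dummy tensor passed to profile(): (N, C, H, W)."""
    return (batch, channels, img_height, img_width)


def exist_head_in_features(num_classes, img_height, img_width, fea_stride):
    """Input width of fc9, using the same formula as ExistHead.__init__."""
    stride = fea_stride * 2  # backbone stride times the 2x2 average pooling
    return int(num_classes * img_width / stride * img_height / stride)


# Assumed config values (verify against configs/tusimple.py and configs/culane.py).
settings = {
    "tusimple": dict(num_classes=7, img_height=368, img_width=640, fea_stride=8),
    "culane": dict(num_classes=5, img_height=288, img_width=800, fea_stride=8),
}
for name, s in settings.items():
    shape = dummy_input_shape(s["img_height"], s["img_width"])
    print(name, "input shape:", shape, "fc9 in_features:", exist_head_in_features(**s))
```

In the script itself, one way to avoid editing the shape by hand would be to build x from `cfg.img_height` and `cfg.img_width` instead of hard-coded numbers.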

References:

Computing the FLOPs of your own model in PyTorch | the thop.profile() method | parameter count and computation statistics for the yolov5s network model