RNN代码实现

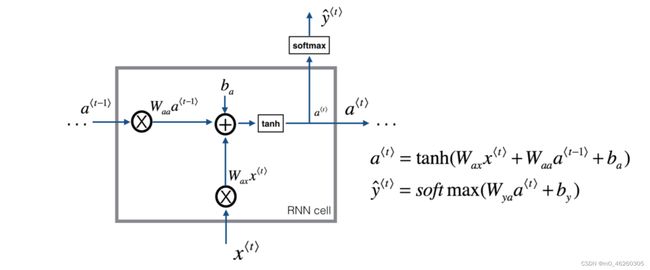

单层RNN结构

- a t − 1 a^{t-1} at−1为前一个时刻隐藏层的输出结果

- x t x^{t} xt当前的输入的向量

这里公式上需要tanh与softmax函数,首先写一下tanh与softmax函数

import numpy as np

def softmax(a):

'''使用a-np.max(a),方式数量太大溢出,使softmax更加稳定

a:shape(n,1)为一个个n维向量

'''

e_x = np.exp(a - np.max(a))

return e_x / e_x.sum(axis=0)

定义RNN元结构

def rnn_Cell(x_t,a_former,parameters):

'''

:param x_t: 当前输入向量

:param a_former: 前一个隐藏层输出向量

:param parameters: 计算的参数

:return:

'''

Wam = parameters['Wam']

Waa = parameters['Waa']

ba = parameters['ba']

Wya =parameters['Wya']

by = parameters['by']

a_now = np.tanh(np.dot(Wam,x_t)+np.dot(Waa,a_former)+ba)

y_now = softmax(np.dot(Wya,a_now)+by)

#将y_now,a_now传过去

cache=(a_now,a_former,x_t,parameters)

return a_now,y_now,cache

#随机化参数

np.random.seed(1)

xt = np.random.randn(4,10) #m batch

a_prev = np.random.randn(5,10)

Waa = np.random.randn(5,5)

Wam = np.random.randn(5,4)

Wya = np.random.randn(2,5)

ba = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Waa": Waa, "Wam": Wam, "Wya": Wya, "ba": ba, "by": by}

#输入模型

print(np.dot(Waa,a_prev))

data=rnn_Cell(xt,a_prev,parameters)

#输出的隐藏层

a_now=data[0]

y_now=data[1]

cache=data[2]

print(len(cache))

[[ 9.36942267e-01 -1.36240034e+00 -1.32303370e+00 1.69031962e+00

-2.78724117e-01 7.15313401e-01 -1.28888205e-01 1.75111213e+00

-7.18652398e-01 1.41955981e+00]

[ 5.89218991e-01 2.79727931e-01 3.88438645e-01 -1.89748622e-02

3.97820042e-02 1.08256579e+00 -5.02683643e-01 -3.41263120e-01

-5.12467123e-02 -1.53898805e-03]

[-4.64822462e-01 3.91445728e-01 -9.67257335e-01 -1.23369097e+00

-1.11163794e-01 -3.57988175e-01 1.95114084e+00 8.92359894e-01

1.18103453e+00 -5.39953404e-01]

[ 1.28646795e+00 -1.47832025e+00 -1.49835640e+00 9.23766551e-02

-6.89552490e-01 9.40833304e-01 -3.65939491e-01 -6.12278335e-01

-1.90982056e+00 1.92585589e+00]

[ 8.82974965e-01 2.53115310e+00 1.02772562e+00 -3.29561099e+00

7.59847444e-02 2.77543594e+00 9.87893662e-01 -1.88374589e+00

1.61929495e+00 -2.00921669e+00]]

4

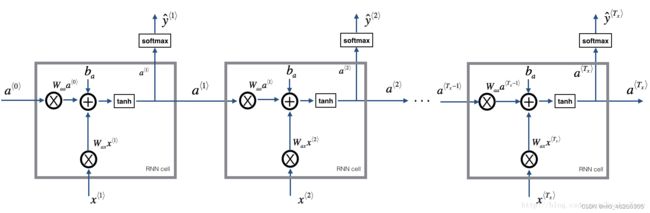

RNN前向传播

def RNN_forward(x,a0,parameters):

'''定义前向传播

x : n句号,t个词,m个词向量

a0:初始化的隐藏层,通常全0初始化

n_x表示样本个数,即多少句话

'''

cache=[] #存所有的参数

#初始化a0

m,n_x,t_x = x.shape

n_y,n_a = parameters['Wya'].shape

a = np.zeros((n_a,n_x,t_x))

y_now = np.zeros((n_y,n_x,t_x))

a_lit=a0

for i in range(0,t_x):

a_tmp,y_tmp,che= rnn_Cell(x[:,:,i],a_lit,parameters)

a[:,:,i]=a_tmp

a_lit = a_tmp

y_now[:,:,i]=y_tmp

cache.append(che)

caches= (cache,x) #将样本加上

return cache,a,y_now

测试一下

np.random.seed(1)

x = np.random.randn(4,10,3)

a0 = np.random.randn(5,10)

Waa = np.random.randn(5,5)

Wam = np.random.randn(5,4)

Wya = np.random.randn(2,5)

ba = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Waa": Waa, "Wam": Wam, "Wya": Wya, "ba": ba, "by": by}

caches,a, y_pred = RNN_forward(x, a0, parameters)

RNN反向单元

def rnn_cell_backward(d_next,cache):

'''

一层的反向传播算法

cache:a_now,a_former,x_t,parameters

:parameters:

Wam = parameters['Wam']

Waa = parameters['Waa']

ba = parameters['ba']

Wya =parameters['Wya']

by = parameters['by']

d_next:下一个隐藏层的梯度

'''

c=cache

print(len(c))

a_now,a_former,x_t,parameters=c

print(parameters['Wam'])

# a_now:5, 10 ,a_former:5 10 其中10为batch大小

Wam =parameters['Wam'] #5 4

Waa =parameters['Waa'] #5 5

ba =parameters['ba'] # 5 1

Wya=parameters['Wya'] # 2 5

by=parameters['by'] # 2 1

z=1-a_now*a_now

dWam =np.dot(z,x_t.T)

print("dWax shape ",dWam.shape)

dWaa =np.dot(z,a_former.T)

print("dWaa shape ",dWaa.shape)

dx_t =z

print("dX_t shape ",dx_t.shape)

dba = np.sum(z, axis = 1, keepdims = True)

print("dba shape ",dba.shape)

da_former = np.dot(Waa.T,z)

gradients={"da_former":da_former,"dwam":dWam,"dwaa":dWaa,"dba":dba}

return gradients

测试一下

np.random.seed(1)

xt = np.random.randn(3,10,4)

a_prev = np.random.randn(5,10)

Wam = np.random.randn(5,3)

Waa = np.random.randn(5,5)

Wya = np.random.randn(2,5)

b = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Wam": Wam, "Waa": Waa, "Wya": Wya, "ba": ba, "by": by}

ch,a_next, yt = RNN_forward(xt, a_prev, parameters)

print(len(ch))

da_next = np.random.randn(5,10)

gradients = rnn_cell_backward(da_next, ch[0])

4

4

[[-0.64691669 0.90148689 2.52832571]

[-0.24863478 0.04366899 -0.22631424]

[ 1.33145711 -0.28730786 0.68006984]

[-0.3198016 -1.27255876 0.31354772]

[ 0.50318481 1.29322588 -0.11044703]]

dWax shape (5, 3)

dWaa shape (5, 5)

dX_t shape (5, 10)

dba shape (5, 1)

RNN反向传播

#开始反向传播

def RNN_backward(da,cache):

'''

:param da: 输入的数据集

:param cache: T_x所有时刻的参数

:return:实现反向传播

'''

# dwam,dwaa,dba需要更新

a_now,a_former,x_t,parameters=cache[0]

n_a = a_now.shape[0]

n_x = x_t.shape[0]

dwam=np.zeros((n_a,n_x))

dwaa=np.zeros((n_a,n_a))

dba =np.zeros((n_a,1))

da_pre = np.zeros((n_a,1))

#计算梯度

for i in reversed(range(len(cache))):

'''对每一个时刻'''

gradients = rnn_cell_backward(da[:,:,i]+da_pre,cache[i])

da_pre, dWaxt, dWaat, dbat = gradients['da_former'], gradients['dwam'], gradients['dwaa'], gradients['dba']

dwam += dWaxt

dwaa += dWaat

dba += dbat

da0 = da_pre

gradients = { "da0": da0, "dWam": dwam, "dWaa": dwaa,"dba": dba}

return gradients

np.random.seed(1)

x = np.random.randn(3,10,4)

a0 = np.random.randn(5,10)

Wax = np.random.randn(5,3)

Waa = np.random.randn(5,5)

Wya = np.random.randn(2,5)

ba = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Wam": Wam, "Waa": Waa, "Wya": Wya, "ba": ba, "by": by}

cache,a, y= RNN_forward(x, a0, parameters)

da = np.random.randn(5, 10, 4)

gradients = RNN_backward(da, caches)

4

[[-1.62743834 0.60231928 0.4202822 0.81095167]

[ 1.04444209 -0.40087819 0.82400562 -0.56230543]

[ 1.95487808 -1.33195167 -1.76068856 -1.65072127]

[-0.89055558 -1.1191154 1.9560789 -0.3264995 ]

[-1.34267579 1.11438298 -0.58652394 -1.23685338]]

dWax shape (5, 4)

dWaa shape (5, 5)

dX_t shape (5, 10)

dba shape (5, 1)

4

[[-1.62743834 0.60231928 0.4202822 0.81095167]

[ 1.04444209 -0.40087819 0.82400562 -0.56230543]

[ 1.95487808 -1.33195167 -1.76068856 -1.65072127]

[-0.89055558 -1.1191154 1.9560789 -0.3264995 ]

[-1.34267579 1.11438298 -0.58652394 -1.23685338]]

dWax shape (5, 4)

dWaa shape (5, 5)

dX_t shape (5, 10)

dba shape (5, 1)

4

[[-1.62743834 0.60231928 0.4202822 0.81095167]

[ 1.04444209 -0.40087819 0.82400562 -0.56230543]

[ 1.95487808 -1.33195167 -1.76068856 -1.65072127]

[-0.89055558 -1.1191154 1.9560789 -0.3264995 ]

[-1.34267579 1.11438298 -0.58652394 -1.23685338]]

dWax shape (5, 4)

dWaa shape (5, 5)

dX_t shape (5, 10)

dba shape (5, 1)