卷积网络的特征图可视化(keras自定义网络+VGG16)

参考博客:

卷积神经网络特征图可视化(自定义网络和VGG网络)

https://blog.csdn.net/dcrmg/article/details/81255498

使用Keras来搭建VGG网络

https://blog.csdn.net/qq_34783311/article/details/84994351

深度神经网络可解释性:卷积核、权重和激活可视化(pytorch+tensorboard):

https://blog.csdn.net/Bit_Coders/article/details/117781090

代码:

# coding: utf-8

from keras.models import Model

import cv2

import matplotlib.pyplot as plt

from keras.models import Sequential

from keras.layers.convolutional import Convolution2D, MaxPooling2D

from keras.layers import Activation

from pylab import *

import keras

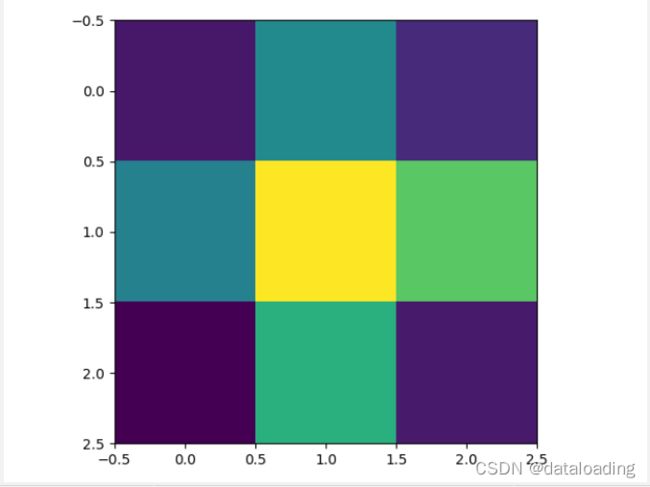

def get_row_col(num_pic):

squr = num_pic ** 0.5

row = round(squr)

col = row + 1 if squr - row > 0 else row

return row, col

def visualize_feature_map(img_batch):

feature_map = np.squeeze(img_batch, axis=0)

print(feature_map.shape)

feature_map_combination = []

plt.figure()

num_pic = feature_map.shape[2]

row, col = get_row_col(num_pic)

for i in range(0, num_pic):

feature_map_split = feature_map[:, :, i]

feature_map_combination.append(feature_map_split)

plt.subplot(row, col, i + 1)

plt.imshow(feature_map_split)

axis('off')

title('feature_map_{}'.format(i))

plt.savefig('feature_map.png')

plt.show()

# 各个特征图按1:1 叠加

feature_map_sum = sum(ele for ele in feature_map_combination)

plt.imshow(feature_map_sum)

plt.savefig("feature_map_sum.png")

plt.show()

def create_model():

model = Sequential()

# 第一层CNN

# 第一个参数是卷积核的数量,第二三个参数是卷积核的大小

model.add(Convolution2D(9, 5, 5, input_shape=img.shape))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(4, 4)))

# 第二层CNN

model.add(Convolution2D(9, 5, 5, input_shape=img.shape))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(3, 3)))

# 第三层CNN

model.add(Convolution2D(9, 5, 5, input_shape=img.shape))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

# 第四层CNN

model.add(Convolution2D(9, 3, 3, input_shape=img.shape))

model.add(Activation('relu'))

# model.add(MaxPooling2D(pool_size=(2, 2)))

return model

if __name__ == "__main__":

img = cv2.imread('001.jpg')

model = create_model()

model.summary()

img_batch = np.expand_dims(img, axis=0)

model = Model(inputs=model.input, outputs=model.get_layer('conv2d_1').output) # 获取某层的输出

conv_img = model.predict(img_batch) # conv_img 卷积结果

visualize_feature_map(conv_img)

这里定义了一个4层的卷积,每个卷积层分别包含9个卷积、Relu激活函数和尺度不等的池化操作,系数全部是随机初始化。

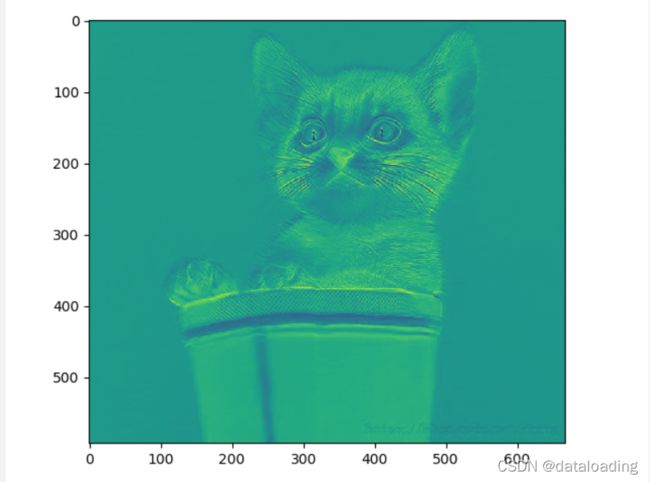

输入的原图如下:

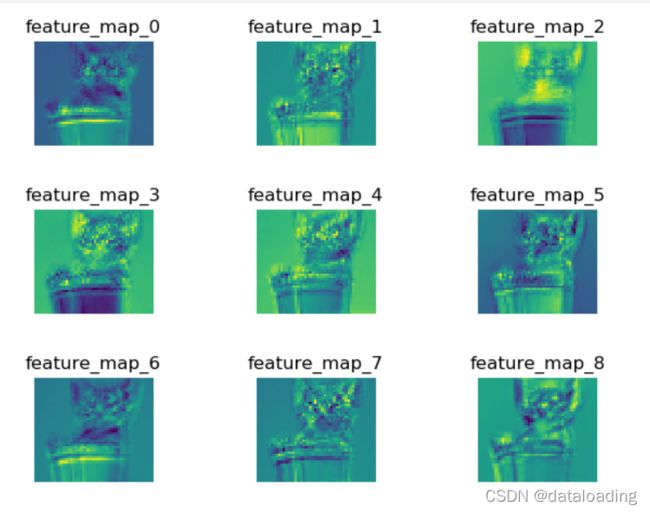

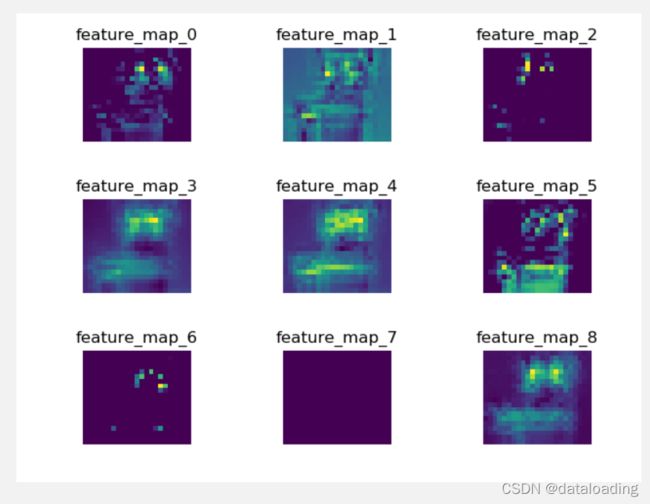

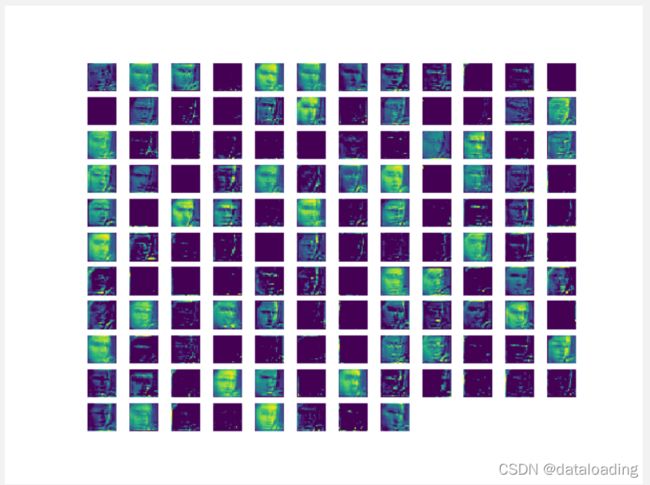

第一层卷积后可视化的特征图:

feature_map_shape: (593, 667, 9)

(593x667)x 9

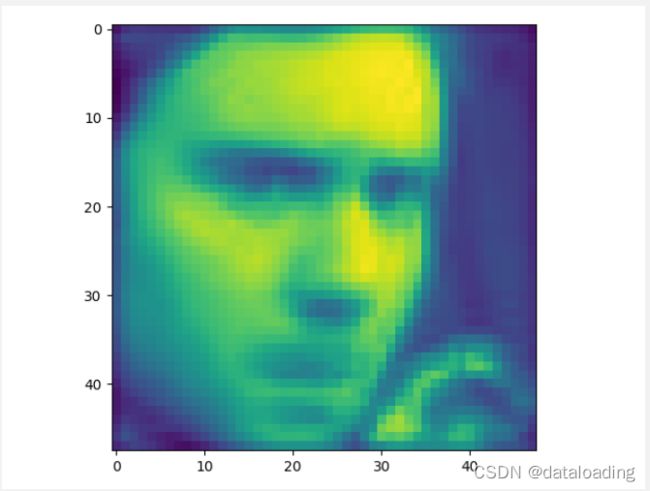

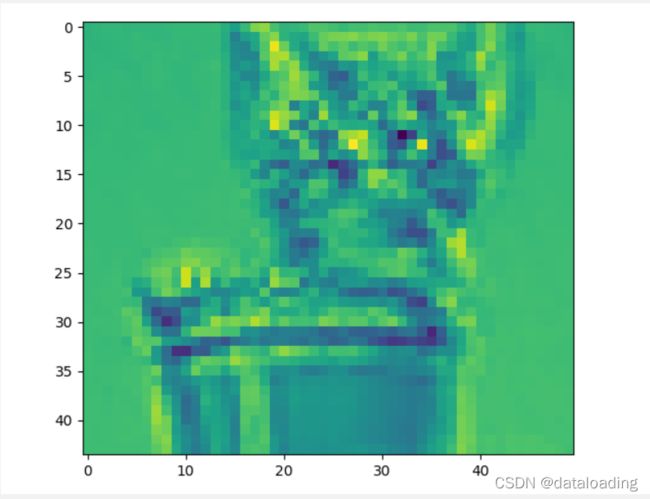

所有第一层特征图1:1融合后整体的特征图:

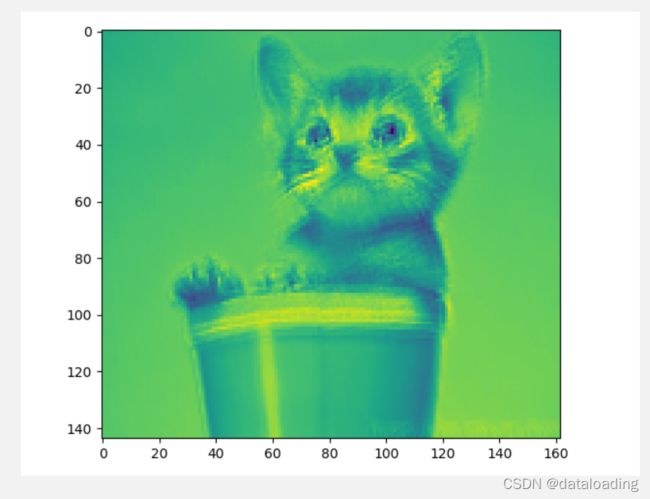

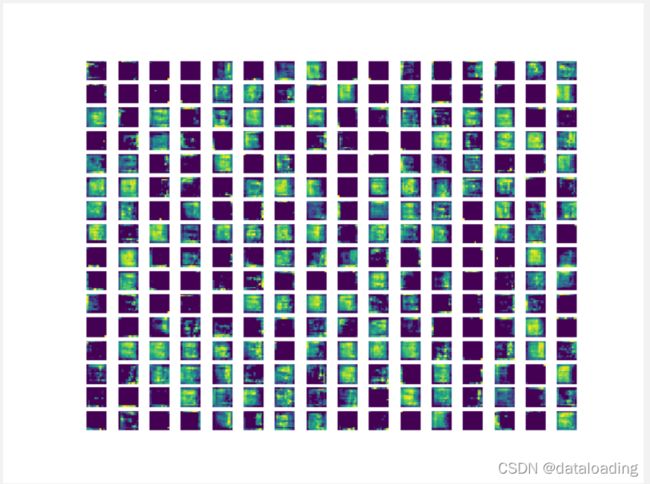

第二层卷积后可视化的特征图:

feature_map_shape: (144, 162, 9)

(144 x162) x 9

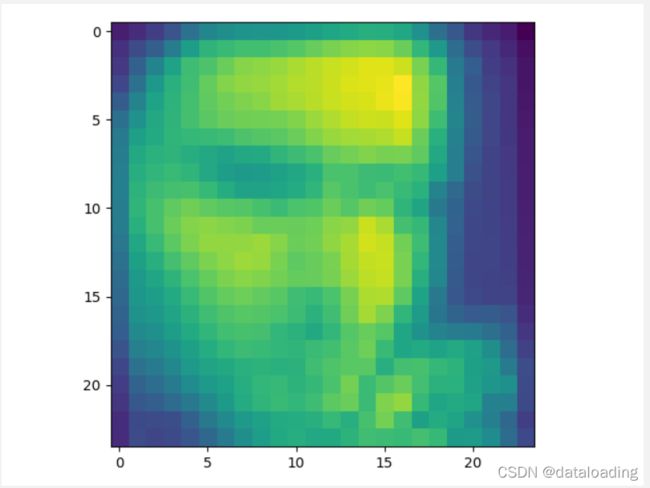

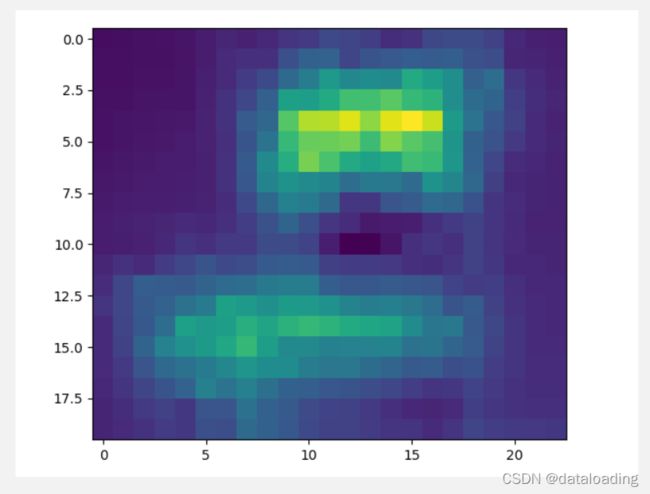

所有第二层特征图1:1融合后整体的特征图:

第三层卷积后可视化的特征图:

feature_map_shape: (44, 50, 9)

(44 x 50) x 9

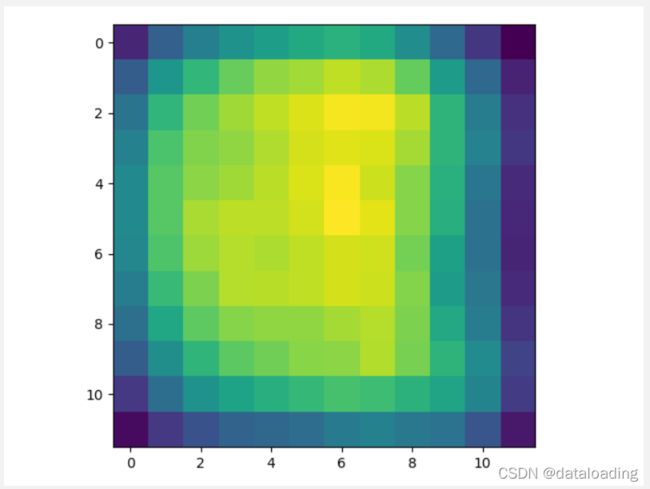

所有第三层特征图1:1融合后整体的特征图:

第四层卷积后可视化的特征图:

feature_map_shape: (20, 23, 9)

(20 x 23) x 9

所有第四层特征图1:1融合后整体的特征图:

从不同层可视化出来的特征图大概可以总结出一点规律:

- 浅层网络提取的是纹理、细节特征

- 深层网络提取的是轮廓、形状、最强特征(如猫的眼睛区域)

- 浅层网络包含更多的特征,也具备提取关键特征(如第一组特征图里的第4张特征图,提取出的是猫眼睛特征)的能力

- 相对而言,层数越深,提取的特征越具有代表性

- 图像的分辨率是越来越小的

下面是VGG16的结构:

def create_model():

model = Sequential()

# VGG16

# layer_1

model.add(keras.layers.Conv2D(filters=64, kernel_size=3, strides=1,

padding='same', activation='relu', kernel_initializer='uniform',

input_shape=(48, 48, 3)))

model.add(keras.layers.Conv2D(filters=64, kernel_size=3, strides=1,

padding='same', activation='relu', kernel_initializer='uniform'))

model.add(keras.layers.MaxPool2D(pool_size=2))

# layer_2

model.add(keras.layers.Conv2D(filters=128, kernel_size=3, strides=1,

padding='same', activation='relu', kernel_initializer='uniform'))

model.add(keras.layers.Conv2D(filters=128, kernel_size=3, strides=1,

padding='same', activation='relu', kernel_initializer='uniform'))

model.add(keras.layers.MaxPool2D(pool_size=2))

# layer_3

model.add(keras.layers.Conv2D(filters=256, kernel_size=3, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.Conv2D(filters=256, kernel_size=3, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.Conv2D(filters=256, kernel_size=1, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.MaxPool2D(pool_size=2))

# layer_4

model.add(keras.layers.Conv2D(filters=512, kernel_size=3, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.Conv2D(filters=512, kernel_size=3, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.Conv2D(filters=512, kernel_size=1, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.MaxPool2D(pool_size=2))

# layer_5

model.add(keras.layers.Conv2D(filters=512, kernel_size=3, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.Conv2D(filters=512, kernel_size=3, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.Conv2D(filters=512, kernel_size=1, strides=1,

padding='same', activation='relu'))

model.add(keras.layers.MaxPool2D(pool_size=2))

model.add(keras.layers.Flatten())

# model.add(keras.layers.AlphaDropout(0.5))

model.add(keras.layers.Dense(4096, activation='relu'))

model.add(keras.layers.Dense(4096, activation='relu'))

model.add(keras.layers.Dense(1000, activation='relu'))

model.add(keras.layers.Dense(8, activation='softmax'))

return model

原图:

48 x 48像素

![]()

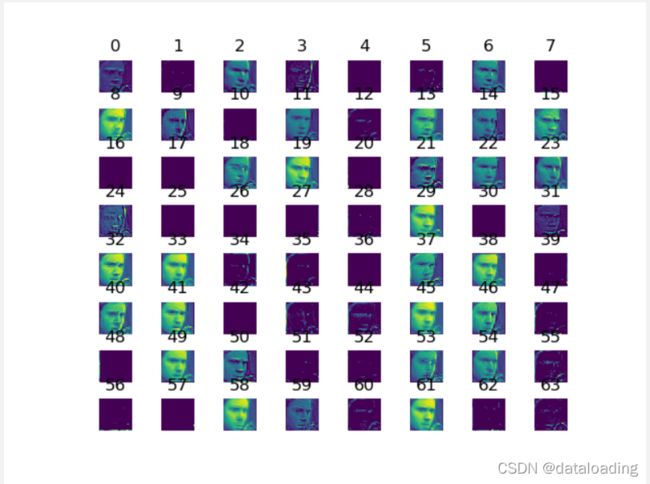

layer_1层的输出:

conv2d_2 (48, 48, 64)

layer_2层的输出:

conv2d_4 (24, 24, 128)

layer_3层的输出:

conv2d_7 (12, 12, 256)

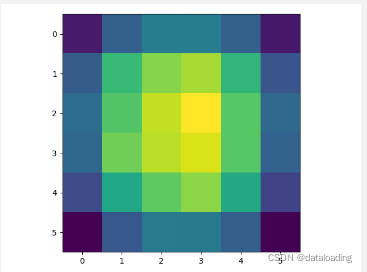

layer_4层的输出:

conv2d_10 (6, 6, 512)