GLCVActivity

import android.app.Activity;

import android.content.Intent;

import android.graphics.Bitmap;

import android.graphics.Matrix;

import android.opengl.GLSurfaceView;

import android.os.Build;

import android.os.Bundle;

import android.support.annotation.Nullable;

import android.view.View;

import android.widget.Button;

import android.widget.ImageView;

import org.opencv.android.Utils;

import org.opencv.core.CvType;

import org.opencv.core.Mat;

import org.opencv.imgproc.Imgproc;

import java.nio.ByteBuffer;

import butterknife.BindView;

import butterknife.ButterKnife;

import butterknife.OnClick;

import hankin.hjmedia.R;

import hankin.hjmedia.logger.LogUtils;

import hankin.hjmedia.opencv.OpenCVBaseActivity;

import hankin.hjmedia.opencv.utils.CV310Utils;

import hankin.hjmedia.utils.SDCardStoragePath;

import hankin.hjmedia.utils.WindowUtils;

public class GLCVActivity extends OpenCVBaseActivity {

public static void startGLCVActivity(Activity activity) {

activity.startActivity(new Intent(activity, GLCVActivity.class));

}

@BindView(R.id.glsv_glcv) GLSurfaceView mGLSurfaceView;

private GLCVRender mGLCVRender;

@BindView(R.id.iv_glcv_frame) ImageView mFrameIv;

private long mStartTime = System.currentTimeMillis();

private Mat mRGBAMat = new Mat(); // filled in by the native (C++) side

@Override

protected void onCreate(@Nullable Bundle savedInstanceState) {

setScreenOrientation(false);

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.KITKAT){

setTheme(R.style.style_videorecord);

}

super.onCreate(savedInstanceState);

WindowUtils.toggleStatusAndNavigationBar(this);

setContentView(R.layout.activity_glcv);

mUnbinder = ButterKnife.bind(this);

mGLCVRender = new GLCVRender(this, mGLSurfaceView, new GLCVRender.OnFrameOutListener() {

@Override

public void rgbaOut(final int width, final int height, ByteBuffer buffer) { // RGBA out: frame captured from GL

mStartTime = System.currentTimeMillis();

final Bitmap bitmap = Bitmap.createBitmap(width, height, Bitmap.Config.ARGB_8888);

bitmap.copyPixelsFromBuffer(buffer);

buffer.clear();

LogUtils.d("mydebug---", "rgbaOut time="+(System.currentTimeMillis()-mStartTime)); // rgbaOut time=25: fairly slow

runOnUiThread(new Runnable() {

@Override

public void run() {

mStartTime = System.currentTimeMillis();

mFrameIv.setImageBitmap(mirrorBitmap(bitmap));

mFrameIv.setVisibility(View.VISIBLE);

LogUtils.d("mydebug---", "postScale time="+(System.currentTimeMillis()-mStartTime)); // postScale time=41: fairly slow

}

});

}

@Override

public void yuvOut(final int width, final int height, final byte[] data) { // YUV out: frame captured from GL

runOnUiThread(new Runnable() { // display directly, without saving to local storage

@Override

public void run() {

mStartTime = System.currentTimeMillis();

Bitmap bitmap = rawByteArray2RGBABitmap2_nv21(data, width, height);

mFrameIv.setImageBitmap(bitmap);

mFrameIv.setVisibility(View.VISIBLE);

LogUtils.d("mydebug---", "yuv2rgba & mirror time="+(System.currentTimeMillis()-mStartTime)); // postScale time=41: fairly slow

}

});

}

});

// opencv/etc/lbpcascades/lbpcascade_frontalface_improved.xml detects a smaller (tighter) face box

// opencv/etc/haarcascades/haarcascade_frontalface_alt.xml is somewhat more accurate than the LBP cascade; both take about the same time

String cascadesPath = CV310Utils.asset2SDcard(this, "opencv/etc/lbpcascades/lbpcascade_frontalface_improved.xml",

SDCardStoragePath.DEFAULT_OPENCV_CASCADE_LIBPC, "lbpcascade_frontalface_improved.xml"); // pretrained face cascade data, copied from assets to the SD card

init(cascadesPath, mGLCVRender.mPreviewLongSide, mGLCVRender.mPreviewShortSide);

}

// Called from JNI: passes the C++ Mat to the Java Mat, then displays it

public void cmat2jmat() {

if (!mRGBAMat.empty()) {

Bitmap bmp = Bitmap.createBitmap(mRGBAMat.cols(), mRGBAMat.rows(), Bitmap.Config.ARGB_8888);

Utils.matToBitmap(mRGBAMat, bmp);

mFrameIv.setImageBitmap(bmp);

mFrameIv.setVisibility(View.VISIBLE);

// cframe time : 42, 1280, 720: fast, though a little slower than the Java path. With the face-detection code added: cframe time : 299, 720, 1280

LogUtils.d("mydebug---", "cframe time : " + (System.currentTimeMillis() - mStartTime) + ", " + mRGBAMat.cols() + ", " + mRGBAMat.rows());

} else {

LogUtils.d("mydebug---", "mRGBAMat empty");

}

}

// Mirror the bitmap vertically (top to bottom)

private Bitmap mirrorBitmap(Bitmap bitmap) {

int height = bitmap.getHeight();

int width = bitmap.getWidth();

Matrix matrix = new Matrix();

matrix.postScale(1, -1); // vertical flip

Bitmap bt = Bitmap.createBitmap(bitmap, 0, 0, width, height, matrix, false); // create a new Bitmap using the matrix

if (!bitmap.isRecycled()) {

bitmap.recycle();

}

return bt;

}

// Convert YCbCr_420_SP (NV21) to ARGB

private Bitmap rawByteArray2RGBABitmap2_nv21(byte[] data, int width, int height) {

int frameSize = width * height;

int[] rgba = new int[frameSize];

for (int i = 0; i < height; i++) {

for (int j = 0; j < width; j++) {

int y = (0xff & ((int) data[i * width + j]));

int u = (0xff & ((int) data[frameSize + (i >> 1) * width + (j & ~1) + 0]));

int v = (0xff & ((int) data[frameSize + (i >> 1) * width + (j & ~1) + 1]));

y = y < 16 ? 16 : y;

int r = Math.round(1.164f * (y - 16) + 1.596f * (v - 128));

int g = Math.round(1.164f * (y - 16) - 0.813f * (v - 128) - 0.391f * (u - 128));

int b = Math.round(1.164f * (y - 16) + 2.018f * (u - 128));

r = r < 0 ? 0 : (r > 255 ? 255 : r);

g = g < 0 ? 0 : (g > 255 ? 255 : g);

b = b < 0 ? 0 : (b > 255 ? 255 : b);

rgba[i * width + j] = 0xff000000 + (b << 16) + (g << 8) + r;

}

}

Bitmap bmp = Bitmap.createBitmap(width, height, Bitmap.Config.ARGB_8888);

bmp.setPixels(rgba, 0 , width, 0, 0, width, height);

return bmp;

}

@OnClick({R.id.btn_glcv_switch, R.id.btn_glcv_mirror, R.id.btn_glcv_gray, R.id.iv_glcv_frame, R.id.btn_glcv_javaframe,

R.id.btn_glcv_cframe, R.id.btn_glcv_face, R.id.btn_glcv_rgbaout, R.id.btn_glcv_yuvout})

void click(View view) {

int w = mGLCVRender.mPreviewLongSide, h = mGLCVRender.mPreviewShortSide;

int len = (int) (w * h * 1.5);

switch (view.getId()) {

case R.id.btn_glcv_switch: // switch between the front and back cameras

mGLCVRender.switchCamera();

break;

case R.id.btn_glcv_mirror: // horizontal mirror

mGLCVRender.isMirror = !mGLCVRender.isMirror;

((Button)findViewById(R.id.btn_glcv_mirror)).setText("mirror "+mGLCVRender.isMirror);

break;

case R.id.btn_glcv_gray: // toggle the grayscale filter

mGLCVRender.isGrayFilter = !mGLCVRender.isGrayFilter;

((Button)findViewById(R.id.btn_glcv_gray)).setText("gray "+mGLCVRender.isGrayFilter);

break;

case R.id.iv_glcv_frame:

mFrameIv.setVisibility(View.GONE);

break;

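case R.id.btn_glcv_javaframe: // use OpenCV's Mat in Java to grab the camera frame data and display it

mStartTime = System.currentTimeMillis();

byte[] j_frame = new byte[len];

System.arraycopy(mGLCVRender.mCameraFrameBuffer, 0, j_frame, 0, len); // copy len bytes from mGLCVRender.mCameraFrameBuffer into j_frame

// The camera delivers NV21 data by default, which must be converted to RGBA. w must be larger than h; if they are swapped, the image is wrong

Mat j_nv21 = new Mat(h + h/2, w, CvType.CV_8UC1); // in camera preview, width is greater than height whether the phone is held upright or sideways

j_nv21.put(0, 0, j_frame); // starting at (0, 0), copy j_frame into the Mat; the bytes in j_frame are still in NV21 layout at this point

Mat j_rgba = new Mat(); // no need to set the Mat's size or type here; the color conversion below takes care of it

Imgproc.cvtColor(j_nv21, j_rgba, Imgproc.COLOR_YUV2RGB_NV21, 4); // NV21 to RGBA; the last argument is the number of destination channels (4 for RGBA)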

Bitmap j_bmp = Bitmap.createBitmap(j_rgba.cols(), j_rgba.rows(), Bitmap.Config.ARGB_8888);

Utils.matToBitmap(j_rgba, j_bmp);

mFrameIv.setImageBitmap(j_bmp);

mFrameIv.setVisibility(View.VISIBLE);

// javaframe time : 20, 1280, 720: very fast

LogUtils.d("mydebug---", "javaframe time : "+(System.currentTimeMillis()-mStartTime)+", "+j_rgba.cols()+", "+j_rgba.rows());

j_nv21.release(); // release the Mat

j_rgba.release();

break;

case R.id.btn_glcv_cframe:

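// Converting between the Java Mat and the C++ Mat in OpenCV

/*

Passing a C++ Mat to a Java Mat:

1. On the Java side, create a Mat to hold the processed image, and pass its native address to the JNI function:

Mat res = new Mat(); // create an empty Mat in Java

jni_fun(res.getNativeObjAddr()); // JNI function; passes the Mat's address (the public final long nativeObj member) to the NDK side

2. On the C++ side, create a Mat pointer to the memory passed in from Java and copy the processed Mat data into it, so that the Mat created in Java becomes the result image:

void jni_fun(jlong matAddr) // JNI function

{

Mat* res = (Mat*)matAddr; // pointer to the Mat whose address was passed in from Java

Mat image = matCreatedInNdk; // Mat created on the C++ side

res->create(image.rows, image.cols, image.type()); // give the Java Mat the same size and type as the C++ Mat

memcpy(res->data, image.data, image.rows*image.step); // copy the C++ Mat's data into the Java Mat's memory; mat.step is the number of bytes per row (columns * channels for 8-bit data). This completes the C++ to Java conversion

}

Passing a Java Mat to a C++ Mat is almost the same, except that the C++ Mat's size and type must match the Java Mat's, and the memcpy goes from Java to C++

*/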

mStartTime = System.currentTimeMillis();

byte[] c_frame = new byte[len];

System.arraycopy(mGLCVRender.mCameraFrameBuffer, 0, c_frame, 0, len);

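// The camera delivers NV21 data by default, which must be converted to RGBA. w must be larger than h; if they are swapped, the image is wrong

Mat c_nv21 = new Mat(h + h/2, w, CvType.CV_8UC1); // in camera preview, width is greater than height whether the phone is held upright or sideways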

c_nv21.put(0, 0, c_frame);

LogUtils.d("mydebug---", "java c_nv21.step="+c_nv21.step1()); // java c_nv21.step=1280; step is columns * channels

cmat(c_nv21.getNativeObjAddr(), mRGBAMat.getNativeObjAddr());

c_nv21.release(); // release the Mat

break;

case R.id.btn_glcv_face: // toggle face detection

mGLCVRender.isFaceDetect = !mGLCVRender.isFaceDetect;

((Button)findViewById(R.id.btn_glcv_face)).setText("face "+mGLCVRender.isFaceDetect);

break;

case R.id.btn_glcv_rgbaout: // export an RGBA image from OpenGL

mGLCVRender.isRGBAOut = true;

break;

case R.id.btn_glcv_yuvout: // export a YUV image from OpenGL

mGLCVRender.isYUVOut = true;

break;

}

}

@Override

protected void onDestroy() {

super.onDestroy();

if (!mRGBAMat.empty()) mRGBAMat.release(); // release the Mat

if (mGLCVRender!=null) {

mGLCVRender.release();

mGLCVRender=null;

}

}

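private native void init(String cascadesPath, int previewLongSide, int previewShortSide); // initialization; creates the cascade classifier object

private native void cmat(long nv21Addr, long rgbaAddr); // passes the Java Mat to a C++ Mat; the result is passed back to Java at the end

public native float[] glcv(byte[] frame); // camera frame; returns the four corner coordinates of the detected face box (x, y; counterclockwise), with index 0 set to -1 when no face is detected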

}
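The NV21 handling in the activity above (the `w * h * 1.5` buffer length and the per-pixel YUV to RGB loop) can be illustrated in isolation. The following is a minimal sketch in plain Java, not the author's method: the class name `Nv21Demo` and its helpers are hypothetical, it assumes even frame dimensions, it reads the chroma bytes in NV21 order (V first, then U), and it packs pixels as ARGB ints, which is the layout `Bitmap.setPixels` expects. The conversion coefficients are the ones used in the listing.

```java
public final class Nv21Demo {
    private Nv21Demo() {}

    /** Bytes in one NV21 frame: a full-size Y plane plus a half-size interleaved VU plane. */
    static int nv21Size(int width, int height) {
        return width * height * 3 / 2;
    }

    private static int clamp(int c) {
        return c < 0 ? 0 : (c > 255 ? 255 : c);
    }

    /** Converts an NV21 frame to ARGB_8888 ints (hypothetical helper; even dimensions assumed). */
    static int[] nv21ToArgb(byte[] data, int width, int height) {
        if ((width & 1) != 0 || (height & 1) != 0) {
            throw new IllegalArgumentException("width and height must be even");
        }
        if (data.length < nv21Size(width, height)) {
            throw new IllegalArgumentException("buffer too small for an NV21 frame");
        }
        int frameSize = width * height;
        int[] argb = new int[frameSize];
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                int y = Math.max(16, data[row * width + col] & 0xff);
                // Each 2x2 block of pixels shares one V/U pair; NV21 stores V before U.
                int chroma = frameSize + (row >> 1) * width + (col & ~1);
                int v = data[chroma] & 0xff;
                int u = data[chroma + 1] & 0xff;
                int r = clamp(Math.round(1.164f * (y - 16) + 1.596f * (v - 128)));
                int g = clamp(Math.round(1.164f * (y - 16) - 0.813f * (v - 128) - 0.391f * (u - 128)));
                int b = clamp(Math.round(1.164f * (y - 16) + 2.018f * (u - 128)));
                argb[row * width + col] = 0xff000000 | (r << 16) | (g << 8) | b;
            }
        }
        return argb;
    }

    public static void main(String[] args) {
        // A 1280x720 preview frame occupies 1382400 bytes, matching the onPreviewFrame log.
        System.out.println(nv21Size(1280, 720));
        // A 2x2 frame with Y=235 and neutral chroma converts to opaque white.
        byte[] white = {(byte) 235, (byte) 235, (byte) 235, (byte) 235, (byte) 128, (byte) 128};
        System.out.println(Integer.toHexString(nv21ToArgb(white, 2, 2)[0]));
    }
}
```

On Android, the resulting array could be handed to `Bitmap.setPixels`. If the data comes from a custom shader rather than the camera, the chroma and channel order may differ and would need to be adjusted to match.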

GLCVRender (rendering)

import android.graphics.ImageFormat;

import android.graphics.SurfaceTexture;

import android.hardware.Camera;

import android.opengl.GLES11Ext;

import android.opengl.GLES20;

import android.opengl.GLSurfaceView;

import android.opengl.Matrix;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.nio.FloatBuffer;

import java.util.List;

import javax.microedition.khronos.egl.EGLConfig;

import javax.microedition.khronos.opengles.GL10;

import hankin.hjmedia.logger.LogUtils;

import hankin.hjmedia.opengles.utils.BufferUtils;

import hankin.hjmedia.opengles.utils.HjGLESUtils;

import hankin.hjmedia.opengles.utils.MyGLESUtils;

import hankin.hjmedia.utils.CamUtils;

public class GLCVRender implements GLSurfaceView.Renderer, Camera.PreviewCallback {

private GLCVActivity mActivity;

private GLSurfaceView mGLSurfaceView;

// camera

private Camera mCamera;

private int mCameraId = Camera.CameraInfo.CAMERA_FACING_FRONT;

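public byte[] mCameraFrameBuffer; // buffer used for the camera frame callback

private int mPreviewWidth = 720; // preview width; the camera itself always reports width > height, so the constructor sorts the two values into long and short sides

private int mPreviewHeight = 1280;

public int mPreviewShortSide, mPreviewLongSide; // short and long sides of the preview image

private int mActualWidth = 240; // actual exported width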

private int mActualHeight = 320;

private int mViewWidth;

private int mViewHeight;

public volatile boolean isMirror = true; // horizontal mirror

public volatile boolean isFaceDetect = true; // whether to run face detection

public volatile boolean isGrayFilter = true; // whether to apply the grayscale filter

// opengl

private int mTextureId[] = new int[1]; // texture object for the camera frame data

private SurfaceTexture mSurfaceTexture;

private float mVertCoords[] = { // vertex coordinates; z is omitted and defaults to 0

-1.0f, 1.0f, // top-left

-1.0f,-1.0f, // bottom-left

1.0f,-1.0f, // bottom-right

1.0f, 1.0f, // top-right

};

private byte mVertIndexs[] = { // vertex indices

0, 1, 2, 0, 2, 3,

};

private FloatBuffer mTexCoorFb; // texture coordinates

private FloatBuffer mTexCoorFb_mirror; // texture coordinates, mirrored

private ByteBuffer mVertCoorBb; // vertex coordinates

private ByteBuffer mVertIndexsBb; // vertex indices

// oes

private int mOESProgram;

private int mOESVertPosition; // vertex coordinates

private int mOESVertTexCoor; // texture coordinates

private int mOESVertTransMatrix; // transform matrix

private int mOESVertCoorMatrix; // texture-coordinate transform matrix

private int mOESFragTex; // texture unit

private float mTransformMatrix[] = new float[16];

private int mOESFrameBuffer[] = new int[1]; // framebuffer for the camera frames

private int mOESRenderBuffer[] = new int[1];

private int mOESTexId[] = new int[1]; // texture object for the frame data

// show

private FloatBuffer mShowTexCoorFb; // texture coordinates

private int mShowProgram;

private int mShowVertPosition;

private int mShowVertTexCoor;

private int mShowVertTransMatrix;

private int mShowFragTex;

// gray

private int mGrayGLIds[] = new int[3]; // texture id, framebuffer id, renderbuffer id (the last may be absent)

private FloatBuffer mGrayTexCoorFb; // texture coordinates

private int mGrayProgram;

private int mGrayVertPosition;

private int mGrayVertTexCoor;

private int mGrayVertTransMatrix;

private int mGrayFragTex;

// face detect

private int mFaceProgram;

private int mFaceVertPosition;

private int mFaceVertTransMatrix;

private int mFaceFragColor;

private float[] mFaceCoor = new float[8]; // four corner coordinates (x, y) of the detected face box; index 0 is -1 when no face is detected

private byte mFaceVertIndexs[] = { // indexed drawing: indices of the vertices to draw

0, 1, 1, 2, 2, 3, 3, 0

};

private FloatBuffer mFaceCoorFb;

private ByteBuffer mFaceVertIndexsBb;

// rgba out

public volatile boolean isRGBAOut = false;

private ByteBuffer mRGBAOutBb;

private OnFrameOutListener mOnFrameOutListener;

// yuv out

public volatile boolean isYUVOut = false;

//export

private int[] mExportFrameTemp = new int[4];

private ByteBuffer mExportTempBuffer;

private byte[] mExportData;

private FloatBuffer mExportVertexBuffer;

private FloatBuffer mExportTextureBuffer;

private int mExportGLProgram;

private int mExportGLVertexCo;

private int mExportGLTextureCo;

private int mExportGLVertexMatrix;

private int mExportGLTexture;

private int mExportGLWidth;

private int mExportGLHeight;

// lazy: uses the same program as show

private FloatBuffer mLazyTexCoorFb;

private int[] mLazyFrameTemp = new int[4];

public GLCVRender(GLCVActivity activity, GLSurfaceView glSurfaceView, OnFrameOutListener listener) {

this.mActivity = activity;

this.mGLSurfaceView = glSurfaceView;

this.mOnFrameOutListener = listener;

if (mPreviewWidth>mPreviewHeight) {

mPreviewLongSide = mPreviewWidth;

mPreviewShortSide = mPreviewHeight;

} else {

mPreviewLongSide = mPreviewHeight;

mPreviewShortSide = mPreviewWidth;

}

mGLSurfaceView.setEGLContextClientVersion(2); // required whenever the shaders and GL calls use GLES20

mGLSurfaceView.setRenderer(this);

mGLSurfaceView.setRenderMode(GLSurfaceView.RENDERMODE_WHEN_DIRTY);

mGLSurfaceView.setPreserveEGLContextOnPause(true); // controls whether the EGL context is preserved when the GLSurfaceView is paused and resumed

initGL();

mCameraFrameBuffer = new byte[(int) (mPreviewWidth * mPreviewHeight * 1.5)]; // * 1.5 because the camera preview data is in a YUV 4:2:0 format; the format is set with Camera.Parameters.setPreviewFormat

startPreview(); // decouple the camera preview from the GLSurfaceView lifecycle, so the camera can keep previewing when the app goes to the background

}

private void startPreview() {

if (mCamera==null) {

mCamera = Camera.open(mCameraId);

CamUtils.setCameraDisplayOrientation(mActivity, mCameraId, mCamera);

mCamera.addCallbackBuffer(mCameraFrameBuffer);

mCamera.setPreviewCallbackWithBuffer(this);

Camera.Parameters parameters = mCamera.getParameters();

int w = mPreviewLongSide, h = mPreviewShortSide;

parameters.setPreviewSize(w, h);

parameters.setPreviewFormat(ImageFormat.NV21); // NV21 is the default preview format of Android cameras

List<String> focusModes = parameters.getSupportedFocusModes();

// enable continuous autofocus

if (focusModes.contains(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE); // with continuous focus enabled, captured photos are nearly twice the size of those taken without it

}

if (focusModes.contains(Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO); // enable continuous focus

}

mCamera.setParameters(parameters);

if (mSurfaceTexture!=null){

try {

mCamera.setPreviewTexture(mSurfaceTexture); // set the texture that receives the preview image

} catch (IOException e) {

e.printStackTrace();

}

mCamera.startPreview();

}

}

}

private void stopPreview() {

if (mCamera!=null) {

mCamera.stopPreview();

mCamera.setPreviewCallbackWithBuffer(null);

mCamera.release();

mCamera = null;

}

}

public void switchCamera() { // switch cameras

if (mCameraId== Camera.CameraInfo.CAMERA_FACING_BACK) {

mCameraId = Camera.CameraInfo.CAMERA_FACING_FRONT;

} else {

mCameraId = Camera.CameraInfo.CAMERA_FACING_BACK;

}

stopPreview();

startPreview();

}

private void initGL() {

GLES20.glGenTextures(1, mTextureId, 0);

GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, mTextureId[0]);

GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GL10.GL_TEXTURE_MIN_FILTER, GL10.GL_LINEAR);

GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GL10.GL_TEXTURE_MAG_FILTER, GL10.GL_LINEAR);

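GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_WRAP_S, GLES20.GL_CLAMP_TO_EDGE); // the bound target is the external OES texture, which only supports CLAMP_TO_EDGE

GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_WRAP_T, GLES20.GL_CLAMP_TO_EDGE);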

mSurfaceTexture = new SurfaceTexture(mTextureId[0]);

mSurfaceTexture.setOnFrameAvailableListener(new SurfaceTexture.OnFrameAvailableListener() {

@Override

public void onFrameAvailable(SurfaceTexture surfaceTexture) { // called continuously, after the Renderer's onDrawFrame, on the main thread

LogUtils.v("mydebug---", "GLCVRender onFrameAvailable");

if (mGLSurfaceView != null) mGLSurfaceView.requestRender();

}

});

// oes

mVertCoorBb = BufferUtils.arr2ByteBuffer(mVertCoords);

mVertIndexsBb = BufferUtils.arr2ByteBuffer(mVertIndexs);

float oesTexCoor[] = {

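//--------------- For texture coordinates that do not use mSurfaceTexture.getTransformMatrix(matrix), see GLCameraActivity ---------------

//--------------- These use mSurfaceTexture.getTransformMatrix(matrix) to obtain the texture-coordinate transform matrix, which is applied in the GLSL shader ---------------

1f, 0f, // bottom-right // horizontally mirrored; left as is

0f, 0f, // bottom-left

0f, 1f, // top-left

1f, 1f, // top-right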

};

mTexCoorFb = BufferUtils.arr2FloatBuffer(oesTexCoor);

float oesTexCoor_mirror[] = {

0f, 0f, // bottom-left // normal, like looking in a mirror

1f, 0f, // bottom-right

1f, 1f, // top-right

0f, 1f, // top-left

};

mTexCoorFb_mirror = BufferUtils.arr2FloatBuffer(oesTexCoor_mirror);

// show

float showTexCoor[] = {

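//0f, 1f, // top-left

//0f, 0f, // bottom-left

//1f, 0f, // bottom-right

//1f, 1f, // top-right

1f, 1f, // top-right // normal mirror view; these texture coordinates differ from those in earlier code, for reasons that are unclear (different code?)

0f, 1f, // top-left

0f, 0f, // bottom-left

1f, 0f, // bottom-right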

};

mShowTexCoorFb = BufferUtils.arr2FloatBuffer(showTexCoor);

// gray

float grayTexCoor[] = {

0f, 1f, // top-left

0f, 0f, // bottom-left

1f, 0f, // bottom-right

1f, 1f, // top-right

};

mGrayTexCoorFb = BufferUtils.arr2FloatBuffer(grayTexCoor);

// face detect

mFaceCoorFb = BufferUtils.arr2FloatBuffer(mFaceCoor);

mFaceVertIndexsBb = BufferUtils.arr2ByteBuffer(mFaceVertIndexs);

// yuv out

float exportVertCoor[] = {

-1f,-1f, // bottom-left

-1f, 1f, // top-left

1f,-1f, // bottom-right

1f, 1f, // top-right

};

mExportVertexBuffer = BufferUtils.arr2FloatBuffer(exportVertCoor);

float exportTexCoor[] = {

0f,0f, // bottom-left

0f,1f, // top-left

1f,0f, // bottom-right

1f,1f, // top-right

};

mExportTextureBuffer = BufferUtils.arr2FloatBuffer(exportTexCoor);

float lazyTexCoor[] = {

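//0f, 0f, // bottom-left // with these, the YUV output is horizontally mirrored

//1f, 0f, // bottom-right

//1f, 1f, // top-right

//0f, 1f, // top-left

0f, 1f, // top-left // normal

1f, 1f, // top-right

1f, 0f, // bottom-right

0f, 0f, // bottom-left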

};

mLazyTexCoorFb = BufferUtils.arr2FloatBuffer(lazyTexCoor);

}

private void createProgram() {

// oes: glCreateProgram returns 0 if it is called before onSurfaceCreated

mOESProgram = MyGLESUtils.createGLProgram(mActivity, "shader/common/oes.vert", "shader/common/oes.frag");

mOESVertPosition = GLES20.glGetAttribLocation(mOESProgram, "vPosition");

mOESVertTexCoor = GLES20.glGetAttribLocation(mOESProgram, "vCoord");

mOESVertTransMatrix = GLES20.glGetUniformLocation(mOESProgram, "vMatrix");

mOESVertCoorMatrix = GLES20.glGetUniformLocation(mOESProgram, "vCoordMatrix");

mOESFragTex = GLES20.glGetUniformLocation(mOESProgram, "vTexture");

//show

mShowProgram = MyGLESUtils.createGLProgram(mActivity, "shader/common/show.vert", "shader/common/show.frag");

mShowVertPosition = GLES20.glGetAttribLocation(mShowProgram, "vPosition");

mShowVertTexCoor = GLES20.glGetAttribLocation(mShowProgram, "a_tex0Coor");

mShowFragTex = GLES20.glGetUniformLocation(mShowProgram, "fTex0");

mShowVertTransMatrix = GLES20.glGetUniformLocation(mShowProgram, "vMatrix");

// gray

mGrayProgram = MyGLESUtils.createGLProgram(mActivity, "shader/media/glcv/gray.vert", "shader/media/glcv/gray.frag");

mGrayVertPosition = GLES20.glGetAttribLocation(mGrayProgram, "vPosition"); // look the locations up in the gray program, not the show program

mGrayVertTexCoor = GLES20.glGetAttribLocation(mGrayProgram, "a_tex0Coor");

mGrayFragTex = GLES20.glGetUniformLocation(mGrayProgram, "fTex0");

mGrayVertTransMatrix = GLES20.glGetUniformLocation(mGrayProgram, "vMatrix");

// face detect

mFaceProgram = MyGLESUtils.createGLProgram(mActivity, "shader/common/first.vert", "shader/common/first.frag");

mFaceVertPosition = GLES20.glGetAttribLocation(mFaceProgram, "vPosition");

mFaceFragColor = GLES20.glGetUniformLocation(mFaceProgram, "vColor");

mFaceVertTransMatrix = GLES20.glGetUniformLocation(mFaceProgram, "vModelViewProjection");

LogUtils.d("mydebug---", "mOESProgram="+mOESProgram+", mShowProgram="+mShowProgram+", mFaceProgram="+mFaceProgram);

// yuv out

mExportGLProgram= MyGLESUtils.createGLProgram(mActivity, "shader/common/yuvout.vert", "shader/common/yuvout.frag");

mExportGLVertexCo= GLES20.glGetAttribLocation(mExportGLProgram, "aVertexCo");

mExportGLTextureCo= GLES20.glGetAttribLocation(mExportGLProgram, "aTextureCo");

mExportGLVertexMatrix= GLES20.glGetUniformLocation(mExportGLProgram, "uVertexMatrix");

mExportGLTexture= GLES20.glGetUniformLocation(mExportGLProgram, "uTexture");

mExportGLWidth= GLES20.glGetUniformLocation(mExportGLProgram, "uWidth");

mExportGLHeight= GLES20.glGetUniformLocation(mExportGLProgram, "uHeight");

}

/* Set up the framebuffers for the camera frames */

private void setFrameBuffers() {

int binds[] = {-3, -3, -3};

GLES20.glGetIntegerv(GLES20.GL_FRAMEBUFFER_BINDING, binds, 0); // get the id of the currently bound framebuffer

GLES20.glGetIntegerv(GLES20.GL_RENDERBUFFER_BINDING, binds, 1); // get the id of the currently bound renderbuffer

GLES20.glGetIntegerv(GLES20.GL_TEXTURE_BINDING_2D, binds, 2); // get the id of the currently bound texture object

LogUtils.d("mydebug---", "binding frame="+binds[0]+", render="+binds[1]+", texture="+binds[2]); // binding frame=0, render=0, texture=0: all three default to 0

int w = mViewWidth, h = mViewHeight; // regardless of the preview size, the texture and renderbuffer must use the view size, otherwise the displayed image is cropped

// oes

if (mOESFrameBuffer[0]!=0) GLES20.glDeleteFramebuffers(1, mOESFrameBuffer, 0);

if (mOESRenderBuffer[0]!=0) GLES20.glDeleteRenderbuffers(1, mOESRenderBuffer, 0);

if (mOESTexId[0]!=0) GLES20.glDeleteTextures(1, mOESTexId, 0);

GLES20.glGenFramebuffers(1, mOESFrameBuffer, 0);

GLES20.glGenRenderbuffers(1, mOESRenderBuffer, 0);

GLES20.glGenTextures(1, mOESTexId, 0);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, mOESTexId[0]);

GLES20.glTexImage2D(GLES20.GL_TEXTURE_2D, 0, GLES20.GL_RGBA, w, h, 0, GLES20.GL_RGBA, GLES20.GL_UNSIGNED_BYTE, null); // allocate the texture's width and height without supplying pixel data

GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_LINEAR);

GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_LINEAR);

GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_S, GLES20.GL_REPEAT);

GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_T, GLES20.GL_REPEAT);

GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mOESFrameBuffer[0]); // bind the custom framebuffer

GLES20.glFramebufferTexture2D(GLES20.GL_FRAMEBUFFER, GLES20.GL_COLOR_ATTACHMENT0, GLES20.GL_TEXTURE_2D, mOESTexId[0], 0); // attach the 2D texture to the framebuffer as its output

// The renderbuffer can be omitted; the result is the same with or without it

GLES20.glBindRenderbuffer(GLES20.GL_RENDERBUFFER, mOESRenderBuffer[0]);

GLES20.glRenderbufferStorage(GLES20.GL_RENDERBUFFER, GLES20.GL_DEPTH_COMPONENT16, w, h); // set the size and format of the image stored in the renderbuffer

GLES20.glFramebufferRenderbuffer(GLES20.GL_FRAMEBUFFER, GLES20.GL_DEPTH_ATTACHMENT, GLES20.GL_RENDERBUFFER, mOESRenderBuffer[0]); // attach the renderbuffer to the framebuffer

// gray

HjGLESUtils.genTexture(mGrayGLIds, 0, w, h);

HjGLESUtils.genFramebuffer(mGrayGLIds, 1, mGrayGLIds[0]);

HjGLESUtils.genRenderbuffer(mGrayGLIds, 2, w, h);

// yuv out: when only exporting data, is attaching a renderbuffer unnecessary?

HjGLESUtils.genTexture(mExportFrameTemp, 0, w, h);

HjGLESUtils.genFramebuffer(mExportFrameTemp, 1, mExportFrameTemp[0]);

// lazy

HjGLESUtils.genTexture(mLazyFrameTemp, 0, w, h);

HjGLESUtils.genFramebuffer(mLazyFrameTemp, 1, mLazyFrameTemp[0]);

int status = GLES20.glCheckFramebufferStatus(GLES20.GL_FRAMEBUFFER);

LogUtils.d("mydebug---", "framebuffer complete: "+(status==GLES20.GL_FRAMEBUFFER_COMPLETE)); // rendering fails if the framebuffer is incomplete

GLES20.glBindRenderbuffer(GLES20.GL_RENDERBUFFER, 0); // back to the default renderbuffer

GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, 0); // back to the default framebuffer

}

@Override

public void onSurfaceCreated(GL10 gl, EGLConfig config) { // all three Surface callbacks run on the GLThread

LogUtils.d("mydebug---", "GLCVRender onSurfaceCreated");

createProgram();

}

@Override

public void onSurfaceChanged(GL10 gl, int width, int height) { // all three Surface callbacks run on the GLThread

LogUtils.d("mydebug---", "GLCVRender onSurfaceChanged : width="+width+", height="+height);

this.mViewWidth = width;

this.mViewHeight = height;

GLES20.glViewport(0, 0, width, height);

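// model-view-projection matrix

float[] projection = new float[16];

float[] modelView = new float[16];

// The vertex coordinates to draw extend to 1, so the projection bounds must also be 1; the two must match, otherwise part of the texture is missing

Matrix.orthoM(projection, 0, -1, 1, -1.0f, 1.0f, 1, 3); // orthographic projection; with these arguments the clip plane's aspect ratio matches the viewport's, so the image is not distorted by the viewport transform

Matrix.setLookAtM(modelView, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0); // set the eye point; see gluLookAt for the parameters

Matrix.multiplyMM(mTransformMatrix, 0, projection, 0, modelView, 0); // multiply the two matrices to apply the projection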

// rgba out

mRGBAOutBb = ByteBuffer.allocateDirect(mViewWidth * mViewHeight * 4); // as with the framebuffer, GLES20.glReadPixels must use the view's width and height; with the preview size the image is cropped

// yuv out

mExportTempBuffer= ByteBuffer.allocate(mViewWidth*mViewHeight*3/2);

mExportData = new byte[mViewWidth*mViewHeight*3/2];

setFrameBuffers();

}

@Override

public void onDrawFrame(GL10 gl) { // all three Surface callbacks run on the GLThread

LogUtils.v("mydebug---", "GLCVRender onDrawFrame");

GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT | GLES20.GL_DEPTH_BUFFER_BIT);

int textureId = 0; // current texture

// custom framebuffer: camera frame data

GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mOESFrameBuffer[0]);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0); // default texture

mSurfaceTexture.updateTexImage();

float[] matrix = new float[16];

mSurfaceTexture.getTransformMatrix(matrix); // get the texture-coordinate transform matrix of the texture

GLES20.glUseProgram(mOESProgram);

GLES20.glUniformMatrix4fv(mOESVertTransMatrix, 1, false, mTransformMatrix, 0); // model-view-projection matrix

GLES20.glUniformMatrix4fv(mOESVertCoorMatrix, 1, false, matrix, 0);

GLES20.glUniform1i(mOESFragTex, 0); // texture unit

GLES20.glEnableVertexAttribArray(mOESVertTexCoor);

GLES20.glVertexAttribPointer(mOESVertTexCoor, 2, GLES20.GL_FLOAT, false, 0, isMirror ? mTexCoorFb_mirror : mTexCoorFb);

GLES20.glEnableVertexAttribArray(mOESVertPosition);

GLES20.glVertexAttribPointer(mOESVertPosition, 2, GLES20.GL_FLOAT, false, 0, mVertCoorBb);

GLES20.glDrawElements(GLES20.GL_TRIANGLES, mVertIndexs.length, GLES20.GL_UNSIGNED_BYTE, mVertIndexsBb);

GLES20.glDisableVertexAttribArray(mOESVertTexCoor);

GLES20.glDisableVertexAttribArray(mOESVertPosition);

textureId = mOESTexId[0];

// custom framebuffer: grayscale filter

if (isGrayFilter) {

GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mGrayGLIds[1]);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId); // texture of the custom framebuffer

GLES20.glUseProgram(mGrayProgram);

GLES20.glUniformMatrix4fv(mGrayVertTransMatrix, 1, false, mTransformMatrix, 0);

GLES20.glUniform1i(mGrayFragTex, 0); // texture unit

GLES20.glEnableVertexAttribArray(mGrayVertTexCoor);

GLES20.glVertexAttribPointer(mGrayVertTexCoor, 2, GLES20.GL_FLOAT, false, 0, mGrayTexCoorFb);

GLES20.glEnableVertexAttribArray(mGrayVertPosition);

GLES20.glVertexAttribPointer(mGrayVertPosition, 2, GLES20.GL_FLOAT, false, 0, mVertCoorBb);

GLES20.glDrawElements(GLES20.GL_TRIANGLES, mVertIndexs.length, GLES20.GL_UNSIGNED_BYTE, mVertIndexsBb);

GLES20.glDisableVertexAttribArray(mGrayVertTexCoor);

GLES20.glDisableVertexAttribArray(mGrayVertPosition);

textureId = mGrayGLIds[0];

}

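// face detect: reuses the previously bound framebuffer, since only the face box is drawn (no texture, no framebuffer of its own)

synchronized (this) { // lock

if (isFaceDetect && mFaceCoor!=null) {

GLES20.glUseProgram(mFaceProgram);

float faceMatrix[] = new float[16];

System.arraycopy(mTransformMatrix, 0, faceMatrix, 0, 16); // make a fresh copy of the transform matrix

// Arguments: 1 the matrix, 2 the offset into it, 3 the rotation angle, 4-6 the axis to rotate about

Matrix.rotateM(faceMatrix, 0, 90, 0, 0, 1); // Matrix.rotateM rotates the matrix, scaleM scales it, translateM translates it

if (!isMirror) MyGLESUtils.flip(faceMatrix, true, false, false); // flip along x, otherwise the face box is misplaced; the box coordinates are computed correctly, this is a GL issue

if (mCameraId==Camera.CameraInfo.CAMERA_FACING_BACK) MyGLESUtils.flip(faceMatrix, true, false, false); // the front/back camera also affects mirroring; the relationship is not yet understood

// Because the projection bounds are 1, the line width is stretched when projected to the viewport. Drawing the face box in the default framebuffer would avoid the stretching, but then the YUV output would have no face box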

GLES20.glUniformMatrix4fv(mFaceVertTransMatrix, 1, false, faceMatrix, 0);

GLES20.glLineWidth(3.f); // set the line width

GLES20.glEnableVertexAttribArray(mFaceVertPosition);

GLES20.glVertexAttribPointer(mFaceVertPosition, 2, GLES20.GL_FLOAT, false, 0, mFaceCoorFb);

float color4[] = {1, 0, 0, 1}; // red

GLES20.glUniform4fv(mFaceFragColor, 1, color4, 0);

GLES20.glDrawElements(GLES20.GL_LINES, mFaceVertIndexs.length, GLES20.GL_UNSIGNED_BYTE, mFaceVertIndexsBb); // use the GLES20 entry point rather than the GL10 interface in an ES 2.0 context

GLES20.glDisableVertexAttribArray(mFaceVertPosition);

}

}

// default framebuffer

GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, 0);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId); // texture of the custom framebuffer

GLES20.glUseProgram(mShowProgram);

GLES20.glUniformMatrix4fv(mShowVertTransMatrix, 1, false, mTransformMatrix, 0);

GLES20.glUniform1i(mShowFragTex, 0); // texture unit

GLES20.glEnableVertexAttribArray(mShowVertTexCoor);

GLES20.glVertexAttribPointer(mShowVertTexCoor, 2, GLES20.GL_FLOAT, false, 0, mShowTexCoorFb);

GLES20.glEnableVertexAttribArray(mShowVertPosition);

GLES20.glVertexAttribPointer(mShowVertPosition, 2, GLES20.GL_FLOAT, false, 0, mVertCoorBb);

GLES20.glDrawElements(GLES20.GL_TRIANGLES, mVertIndexs.length, GLES20.GL_UNSIGNED_BYTE, mVertIndexsBb);

GLES20.glDisableVertexAttribArray(mShowVertTexCoor);

GLES20.glDisableVertexAttribArray(mShowVertPosition);

// rgba out

if (isRGBAOut) {

isRGBAOut = false;

long start = System.currentTimeMillis();

// Read the current framebuffer. Compared with what GLES draws, the pixels read back are rotated 180° and mirrored horizontally; gl.glScalef(1, -1, 1) is needed to make them normal

GLES20.glReadPixels(0, 0, mViewWidth, mViewHeight, GLES20.GL_RGBA, GLES20.GL_UNSIGNED_BYTE, mRGBAOutBb); // regardless of the preview size, the view size must be used here, otherwise the image is cropped

mRGBAOutBb.position(0); // without this line, bitmap.copyPixelsFromBuffer throws "Buffer not large enough for pixels"

if (mOnFrameOutListener != null) mOnFrameOutListener.rgbaOut(mViewWidth, mViewHeight, mRGBAOutBb);

LogUtils.d("mydebug---", "rgba out time="+(System.currentTimeMillis()-start)); // rgba out time=993: very slow

}

// yuv out

if (isYUVOut) {

isYUVOut = false;

long start = System.currentTimeMillis();

// lazy

GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mLazyFrameTemp[1]);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId);

GLES20.glUseProgram(mShowProgram);

GLES20.glUniformMatrix4fv(mShowVertTransMatrix, 1, false, mTransformMatrix, 0);

GLES20.glUniform1i(mShowFragTex, 0);

GLES20.glEnableVertexAttribArray(mShowVertTexCoor);

GLES20.glVertexAttribPointer(mShowVertTexCoor, 2, GLES20.GL_FLOAT, false, 0, mLazyTexCoorFb);

GLES20.glEnableVertexAttribArray(mShowVertPosition);

GLES20.glVertexAttribPointer(mShowVertPosition, 2, GLES20.GL_FLOAT, false, 0, mVertCoorBb);

GLES20.glDrawElements(GLES20.GL_TRIANGLES, mVertIndexs.length, GLES20.GL_UNSIGNED_BYTE, mVertIndexsBb);

GLES20.glDisableVertexAttribArray(mShowVertTexCoor);

GLES20.glDisableVertexAttribArray(mShowVertPosition);//------draw

textureId = mLazyFrameTemp[0];

// export

GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mExportFrameTemp[1]);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId);

GLES20.glUseProgram(mExportGLProgram);

GLES20.glUniformMatrix4fv(mExportGLVertexMatrix, 1, false, mTransformMatrix, 0);

GLES20.glUniform1f(mExportGLWidth, mViewWidth);

GLES20.glUniform1f(mExportGLHeight, mViewHeight);

GLES20.glUniform1i(mExportGLTexture, 0);

GLES20.glEnableVertexAttribArray(mExportGLVertexCo);

GLES20.glVertexAttribPointer(mExportGLVertexCo,2, GLES20.GL_FLOAT, false, 0, mExportVertexBuffer);

GLES20.glEnableVertexAttribArray(mExportGLTextureCo);

GLES20.glVertexAttribPointer(mExportGLTextureCo, 2, GLES20.GL_FLOAT, false, 0, mExportTextureBuffer);

GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

GLES20.glDisableVertexAttribArray(mExportGLVertexCo);

GLES20.glDisableVertexAttribArray(mExportGLTextureCo);//------draw

// GLES20.glReadPixels reads the data of the texture attached to the current framebuffer

GLES20.glReadPixels(0, 0, mViewWidth, mViewHeight*3/8, GLES20.GL_RGBA, GLES20.GL_UNSIGNED_BYTE, mExportTempBuffer); // the exported data needs no further flipping or similar processing

mExportTempBuffer.get(mExportData, 0, mViewWidth*mViewHeight*3/2); // fetch the YUV data from the ByteBuffer

mExportTempBuffer.clear(); // has the same effect here as .position(0)

LogUtils.d("mydebug---", "yuv out time="+(System.currentTimeMillis()-start)); // yuv out time=74; the time drops as the app keeps running

if (mOnFrameOutListener!=null) mOnFrameOutListener.yuvOut(mViewWidth, mViewHeight, mExportData);

}

}

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

/** The first few log lines. Are these callbacks always invoked in this order?

GLCVRender onDrawFrame

GLCVRender onDrawFrame

GLCVRender onFrameAvailable

GLCVRender onDrawFrame

GLCVRender onPreviewFrame : 1382400

GLCVRender onFrameAvailable

GLCVRender onDrawFrame

GLCVRender onPreviewFrame : 1382400

GLCVRender onFrameAvailable

GLCVRender onDrawFrame

GLCVRender onPreviewFrame : 1382400

GLCVRender onFrameAvailable

GLCVRender onDrawFrame

GLCVRender onPreviewFrame : 1382400

*/

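// To get a preview callback for every frame, addCallbackBuffer must be called again after each one. The callback buffer (mCameraFrameBuffer) holds the frame's pixel data, the same as data; data is in YUV format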

if (mCamera!=null) mCamera.addCallbackBuffer(mCameraFrameBuffer);

LogUtils.v("mydebug---", "GLCVRender onPreviewFrame : "+data.length);

if (isFaceDetect) {

long start = System.currentTimeMillis();

float face[] = mActivity.glcv(data);

synchronized (this) { // lock

if (face != null) {

mFaceCoor = face;

mFaceCoorFb.put(mFaceCoor);

mFaceCoorFb.position(0);

StringBuilder sb = new StringBuilder();

for (int i = 0; i < mFaceCoor.length; i++) {

sb.append(mFaceCoor[i] + ", ");

}

LogUtils.v("mydebug---", "face=" + sb.toString() + ", time=" + (System.currentTimeMillis() - start));

} else {

mFaceCoor = null;

LogUtils.v("mydebug---", "face=null" + ", time=" + (System.currentTimeMillis() - start));

}

}

}

}

public void release() {

stopPreview();

if (mSurfaceTexture!=null) mSurfaceTexture.release();

if (mOESFrameBuffer[0]!=0) GLES20.glDeleteFramebuffers(1, mOESFrameBuffer, 0);

if (mOESRenderBuffer[0]!=0) GLES20.glDeleteRenderbuffers(1, mOESRenderBuffer, 0);

if (mTextureId[0]!=0) GLES20.glDeleteTextures(1, mTextureId, 0); // delete the texture object

if (mOESTexId[0]!=0) GLES20.glDeleteTextures(1, mOESTexId, 0); // delete the texture object

}

interface OnFrameOutListener {

void rgbaOut(int width, int height, ByteBuffer buffer);

void yuvOut(int width, int height, byte[] data);

}

}
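The buffer sizes in the listing above fit together. An NV21 frame is a full-resolution Y plane followed by an interleaved VU plane of half that size, so it takes width*height*3/2 bytes; for a 1280x720 preview that is the 1382400 logged by onPreviewFrame. The export pass reads back mViewWidth x mViewHeight*3/8 RGBA pixels, which at 4 bytes per pixel is the same width*height*3/2 bytes. A small standalone sketch of that arithmetic follows; the function names are illustrative and do not exist in the project.

```cpp
#include <cstddef>

// Bytes in one NV21 (YUV 4:2:0) frame: a width*height Y plane
// followed by a width*height/2 interleaved VU plane.
constexpr std::size_t nv21ByteCount(std::size_t width, std::size_t height) {
    return width * height * 3 / 2;
}

// Number of rows the export pass reads back (height*3/8 in the listing).
constexpr std::size_t exportRows(std::size_t height) {
    return height * 3 / 8;
}

// Bytes returned by a GL_RGBA / GL_UNSIGNED_BYTE readback of a
// width x rows region: 4 bytes per pixel.
constexpr std::size_t rgbaReadbackBytes(std::size_t width, std::size_t rows) {
    return width * rows * 4;
}
```

The two sizes match exactly only when the height is divisible by 8, because exportRows uses integer division.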

C++ code (OpenCV face detection)

#include "hjcommon.hpp"

#include "opencv2/opencv.hpp"

using namespace std;

using namespace cv;

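static CascadeClassifier mCascader; // cascade classifier; in my tests Haar and LBP detection took about the same time, but Haar was more accurate. LBP has a training file with a smaller face box

static int mShortSide; // short and long side lengths of the image, used to compute OpenGL vertex coordinates (which range over [-1, 1])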

static int mLongSide;

static const int mReference = 160;

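static int mResizeRate = 0; // image downscale factor, using 160 as the reference for now; face detection is too slow on large images. With reference 160 and Size(50, 50), total detection time at 1080P, 720P and 240P was 70, 40 and 30 respectively

static Mat mDetectFaceMat; // frame data: the Mat used for face detection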

static Mat mGrayMat;

JNIEXPORT void JNICALL Java_hankin_hjmedia_media_glcv_GLCVActivity_init(JNIEnv *env, jobject instance, jstring cascadesPath_, jint previewLongSide_, jint previewShortSide_) // initialization: sets up the cascade classifier

{

char cascadesPath[512];

hjcpyJstr2char(env, cascadesPath_, cascadesPath);

LOGD("cascadesPath=%s", cascadesPath); // cascadesPath=/storage/emulated/0/hjMedia/opencv/etc/lbpcascades/lbpcascade_frontalface_improved.xml

bool isLoad = mCascader.load(cascadesPath); // load the cascade classifier's face detection training file

LOGD("mCascader.load=%d", isLoad); // mCascader.load=1

mLongSide = previewLongSide_;

mShortSide = previewShortSide_;

mDetectFaceMat.create(mShortSide + mShortSide/2, mLongSide, CV_8UC1); // camera frame data is NV21

if (mShortSide > mReference) mResizeRate = mShortSide/mReference; // image downscale factor

if (mResizeRate<=1) mResizeRate = 0; // a factor of 1 means the image is not downscaled

}

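// Converting between the Java Mat and the C++ Mat in OpenCV

/*

Passing a C++ Mat to a Java Mat:

1. On the Java side, create a Mat to hold the processed image, then pass its native address to the JNI function:

Mat res = new Mat(); // create an empty Mat in Java

jni_fun(res.getNativeObjAddr()); // JNI function; passes the Mat's address (the public final long nativeObj field) to the NDK side

2. On the C++ side, point a Mat pointer at the memory passed in from Java and copy the processed image's data into it, so that the Mat created in Java becomes the result image:

void jni_fun(jlong MatAddr) // JNI function

{

Mat* res = (Mat*)MatAddr; // pointer to the native Mat that backs the Java Mat

Mat image = <Mat created in the NDK code>; // the Mat created in C++

res->create(image.rows, image.cols, image.type()); // give the Java Mat the same size and type as the C++ one

memcpy(res->data, image.data, image.rows*image.step); // copy the C++ Mat's data into the Java Mat's memory; step is the number of bytes per row (columns * channels for 8-bit data). This completes the C++ to Java conversion

}

Passing a Java Mat to a C++ Mat: almost the same, except that the C++ Mat takes its size and type from the Java one, and the memcpy goes from Java to C++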

*/

JNIEXPORT void JNICALL Java_hankin_hjmedia_media_glcv_GLCVActivity_cmat(JNIEnv *env, jobject instance, jlong nv21Addr_, jlong rgbaAddr_) // pass a Java Mat to a C++ Mat, then pass the result back to Java

{

// java mat 2 c mat --------------------------------------------------------------------------

Mat *j_nv21 = (Mat*) nv21Addr_;

// j_nv21->rows=1080 (720*1.5), cols=1280, depth=0, channels=1, step=1280; step is the number of bytes per row (columns * channels for 8-bit data)

LOGD("j_nv21->rows=%d, cols=%d, depth=%d, channels=%d, step=%d", j_nv21->rows, j_nv21->cols, j_nv21->depth(), j_nv21->channels(), *(j_nv21->step.p));

Mat c_nv21(j_nv21->rows, j_nv21->cols, j_nv21->type()); // the Mat's size and type must be set here, otherwise an error occurs

memcpy(c_nv21.data, j_nv21->data, j_nv21->rows*(*(j_nv21->step.p)));

Mat c_rgba;

cvtColor(c_nv21, c_rgba, COLOR_YUV2RGBA_NV21, 4); // NV21 to RGBA; the last argument is the number of output channels (4 for RGBA)

// c_rgba.rows=720, cols=1280, depth=0, channels=4, step=5120

LOGD("c_rgba.rows=%d, cols=%d, depth=%d, channels=%d, step=%d", c_rgba.rows, c_rgba.cols, c_rgba.depth(), c_rgba.channels(), *(c_rgba.step.p));

rotate(c_rgba, c_rgba, ROTATE_90_COUNTERCLOCKWISE); // rotate the sideways image 90° counterclockwise so it is upright; otherwise face detection finds nothing

// 1 c_rgba.rows=1280, cols=720, depth=0, channels=4, step=2880

LOGD("1 c_rgba.rows=%d, cols=%d, depth=%d, channels=%d, step=%d", c_rgba.rows, c_rgba.cols, c_rgba.depth(), c_rgba.channels(), *(c_rgba.step.p));

// detect faces --------------------------------------------------------------------------

LOGD("mCascader.empty()=%d", mCascader.empty()); // mCascader.empty()=0

if (!mCascader.empty())

{

Mat gray;

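cvtColor(c_rgba, gray, COLOR_RGBA2GRAY); // convert to grayscale; c_rgba is in RGBA order

equalizeHist(gray, gray); // histogram equalization: raises contrast and improves feature extraction accuracy

vector<Rect> faces;

// Haar uses floating-point arithmetic and LBP uses integers, so LBP detection is faster (up to about twice as fast); prefer LBP

mCascader.detectMultiScale(gray, faces, 1.1, 3, 0, Size(30, 30)); // face detection; nothing is detected if the image is not upright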

LOGD("detectMultiScale faces.size()=%d", faces.size()); // detectMultiScale faces.size()=1

for (size_t t = 0; t < faces.size(); t++) {

rectangle(c_rgba, faces[t], Scalar(255, 0, 0, 255), 2, 8, 0); // draw the face box on c_rgba; the Scalar order is RGBA, as in Java, and omitting alpha gives wrong colors

}

}

// c mat 2 java mat --------------------------------------------------------------------------

Mat *j_rgba = (Mat*) rgbaAddr_;

j_rgba->create(c_rgba.size(), c_rgba.type()); // the Mat's size and type must be set, otherwise an error occurs

memcpy(j_rgba->data, c_rgba.data, c_rgba.rows*(*(c_rgba.step.p))); // this fills mRGBAMat in GLCVActivity

// j_rgba->rows=720, cols=1280, depth=0, channels=4, step=5120

LOGD("j_rgba->rows=%d, cols=%d, depth=%d, channels=%d, step=%d", j_rgba->rows, j_rgba->cols, j_rgba->depth(), j_rgba->channels(), *(j_rgba->step.p));

// call java func --------------------------------------------------------------------------

jclass clz = env->GetObjectClass(instance);

jmethodID mid = env->GetMethodID(clz, "cmat2jmat", "()V");

env->CallVoidMethod(instance, mid);

}

static float calcOpenGLCoor(float len, float total) // maps a length len out of a total length total to the matching OpenGL coordinate (in [-1, 1], because that is how my OpenGL code is written)

{

return 2.f * (len/total - 0.5f);

}

JNIEXPORT jfloatArray JNICALL Java_hankin_hjmedia_media_glcv_GLCVActivity_glcv(JNIEnv *env, jobject instance, jbyteArray frame_) // camera frame; returns the four corners of the detected face box (x,y pairs, counterclockwise), or NULL when no face is detected

{

jbyte *nv21 = env->GetByteArrayElements(frame_, 0);

//double start = getTickCount(), end; // for timing the detection code

memcpy(mDetectFaceMat.data, nv21, mDetectFaceMat.rows*(*(mDetectFaceMat.step.p)));

//end = getTickCount();

//LOGD("memcpy time=%f", (end-start)/getTickFrequency()); // memcpy time=0.003396

//start = end;

cvtColor(mDetectFaceMat, mGrayMat, COLOR_YUV2GRAY_NV21); // convert NV21 to grayscale

//end = getTickCount();

//LOGD("cvtColor time=%f", (end-start)/getTickFrequency()); // cvtColor time=0.001496

//start = end;

rotate(mGrayMat, mGrayMat, ROTATE_90_COUNTERCLOCKWISE); // rotate the sideways image 90° counterclockwise so it is upright; otherwise face detection finds nothing

//end = getTickCount();

//LOGD("rotate time=%f", (end-start)/getTickFrequency()); // rotate time=0.006418

//start = end;

int detectWidth = mShortSide;

int detectHeight = mLongSide;

if (mResizeRate>0)

{

detectWidth = mGrayMat.cols/mResizeRate;

detectHeight = mGrayMat.rows/mResizeRate;

resize(mGrayMat, mGrayMat, Size(detectWidth, detectHeight)); // shrink the image to speed up face detection

//end = getTickCount();

//LOGD("resize time=%f", (end-start)/getTickFrequency()); // resize time=0.001251

//start = end;

}

equalizeHist(mGrayMat, mGrayMat); // histogram equalization: raises contrast and improves feature extraction accuracy

//end = getTickCount();

//LOGD("equalizeHist time=%f", (end-start)/getTickFrequency()); // equalizeHist time=0.020551

//start = end;

vector<Rect> faces;

// Haar uses floating-point arithmetic and LBP uses integers, so LBP detection is faster (up to about twice as fast); prefer LBP

mCascader.detectMultiScale(mGrayMat, faces, 1.1, 3, 0, Size(50, 50)); // face detection; nothing is detected if the image is not upright

//end = getTickCount();

//LOGD("detectMultiScale time=%f", (end-start)/getTickFrequency()); // detectMultiScale time=0.318728 for a 720*1280 image, the slowest step, in seconds; detectMultiScale time=0.033288 for 240*320

//start = end;

// LOGD("frame faces.size()=%d", faces.size()); // detectMultiScale faces.size()=1

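// return value

jfloatArray retArr = env->NewFloatArray(8); // elements default to 0, as in Java; holds the four corners of the detected face box (x,y pairs, counterclockwise). NULL is returned instead when no face is detected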

jfloat *ret = env->GetFloatArrayElements(retArr, 0);

if (!faces.empty())

{

Rect rect = faces[0];

float ltX = calcOpenGLCoor(rect.x, detectWidth); // 计算人脸框的四个坐标

float ltY = calcOpenGLCoor(rect.y, detectHeight);

float rbX = calcOpenGLCoor(rect.x + rect.width, detectWidth);

float rbY = calcOpenGLCoor(rect.y + rect.height, detectHeight);

// LOGD("detectWidth=%d, detectHeight=%d, %d, %d, %d, %d, %f, %f, %f, %f", detectWidth, detectHeight, rect.x, rect.y, rect.x + rect.width, rect.y + rect.height, ltX, ltY, rbX, rbY);

ret[0] = ltX;

ret[1] = ltY;

ret[2] = ltX;

ret[3] = rbY;

ret[4] = rbX;

ret[5] = rbY;

ret[6] = rbX;

ret[7] = ltY;

env->ReleaseFloatArrayElements(retArr, ret, 0);

}

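else

{

env->ReleaseFloatArrayElements(retArr, ret, JNI_ABORT); // release the elements obtained above; nothing was written, so skip the copy-back

retArr = NULL;

}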

//end = getTickCount();

//LOGD("ret time=%f", (end-start)/getTickFrequency()); // ret time=0.000113

env->ReleaseByteArrayElements(frame_, nv21, JNI_ABORT); // release; the frame was only read, so no copy-back is needed

return retArr;

}
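To make the coordinate handling in glcv easier to check, here is a standalone sketch of the same arithmetic without OpenCV or JNI. FaceRect, toGLCoord, resizeRate and faceCorners are illustrative names that stand in for cv::Rect, calcOpenGLCoor and the inline code above; they are not part of the project. With a 720-pixel short side and the reference of 160, the downscale factor is 4, so detection runs on a 180x320 image. Because the rectangle and the detect size are both measured in that downscaled image, the resulting [-1, 1] coordinates do not depend on the factor.

```cpp
#include <array>

// Minimal stand-in for cv::Rect so the sketch builds without OpenCV.
struct FaceRect { int x, y, width, height; };

// Same formula as calcOpenGLCoor: maps [0, total] linearly onto [-1, 1].
inline float toGLCoord(float len, float total) {
    return 2.f * (len / total - 0.5f);
}

// Integer downscale factor, mirroring init(): 0 means "do not resize".
inline int resizeRate(int shortSide, int reference) {
    int rate = shortSide > reference ? shortSide / reference : 0;
    return rate <= 1 ? 0 : rate;
}

// Corner order matches glcv(): left-top, left-bottom, right-bottom, right-top,
// each stored as an (x, y) pair.
inline std::array<float, 8> faceCorners(const FaceRect& r, int detectWidth, int detectHeight) {
    const float w = static_cast<float>(detectWidth);
    const float h = static_cast<float>(detectHeight);
    const float ltX = toGLCoord(static_cast<float>(r.x), w);
    const float ltY = toGLCoord(static_cast<float>(r.y), h);
    const float rbX = toGLCoord(static_cast<float>(r.x + r.width), w);
    const float rbY = toGLCoord(static_cast<float>(r.y + r.height), h);
    return {{ltX, ltY, ltX, rbY, rbX, rbY, rbX, ltY}};
}
```

Note that the mapping keeps the image's y direction (y grows downward in the detected rectangle); any flip needed for display is left to the OpenGL side, as in the original code.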

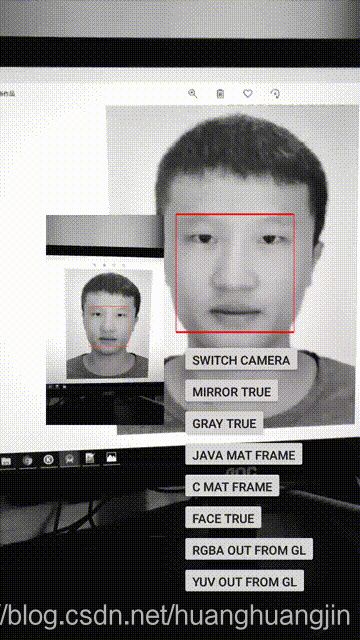

Result screenshot
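One caveat on the Mat copies in cmat: copying rows*step bytes with a single memcpy is valid only when both Mats are continuous, meaning there is no padding between rows. That holds for Mats allocated with create(), as in the listing. If a Mat were a view into a larger image (a hypothetical case that the code above never produces), its rows would be separated by extra bytes and the copy would have to go row by row. A minimal sketch of such a copy on raw buffers, with copyRows as an illustrative helper name:

```cpp
#include <cstddef>
#include <cstring>

// Copies `rows` rows of `rowBytes` payload bytes each from src to dst.
// srcStep and dstStep are the distances in bytes between the starts of
// consecutive rows (the role played by Mat::step).
inline void copyRows(unsigned char* dst, std::size_t dstStep,
                     const unsigned char* src, std::size_t srcStep,
                     std::size_t rows, std::size_t rowBytes) {
    if (srcStep == rowBytes && dstStep == rowBytes) {
        // Both buffers are continuous: one memcpy covers everything.
        std::memcpy(dst, src, rows * rowBytes);
        return;
    }
    // Otherwise copy each row separately and skip the padding.
    for (std::size_t r = 0; r < rows; ++r) {
        std::memcpy(dst + r * dstStep, src + r * srcStep, rowBytes);
    }
}
```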