fpn 论文笔记

摘要

Feature pyramids are a basic component in recognition systems for detecting objects at different scales. But recent deep learning object detectors have avoided pyramid representations, in part because they are compute and memory intensive. In this paper, we exploit the inherent multi-scale,

pyramidal hierarchy of deep convolutional networks to construct feature pyramids with marginal extra cost. A top-down architecture with lateral connections is developed for building high-level semantic feature maps at all scales. This architecture, called a Feature Pyramid Network (FPN),

shows significant improvement as a generic feature extractor in several applications. Using FPN in a basic Faster R-CNN system, our method achieves state-of-the-art single model results on the COCO detection benchmark without bells and whistles, surpassing all existing single-model entries including those from the COCO 2016 challenge winners. In addition, our method can run at 6 FPS on a GPU and thus is a practical and accurate solution to multi-scale object detection. Code will be made publicly available.

特征金字塔可以用于检测不同尺度的目标,是目标检测中非常重要的组件。但是深度学习的目标检测中没有使用,原因在于金字塔的表示太浪费计算资源。作者探索出了一个利用卷积神经网络中固有的多尺度金字塔结构来构造特征金字塔,而且开销很小。主要思路就是一个top-down结构加上一个横向连接(lateral connections),用来构建所有尺度特征图的高级别的语义信息。在最近的几个目标检测的网络上测试,不但速度快,而且效果好。

介绍

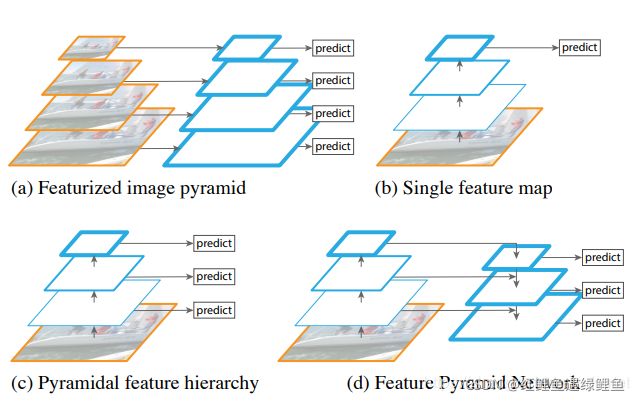

Feature pyramids built upon image pyramids (for short we call these featurized image pyramids) form the basis of a standard solution[1] (Fig. 1(a)).

最简单粗暴的思路,就是构造多尺度的图像金字塔来提取特征,从而得到的特征金字塔。

Featurized image pyramids were heavily used in the era of hand-engineered features [5, 25]. They were so critical that object detectors like DPM [7] required dense scale sampling to achieve good results (e.g., 10 scales per octave).

原始特征金字塔都是手工设计的,如果在DPM算法中,计算了很多不同尺度的特征才得到不错的结果。

The principle advantage of featurizing each level of an image pyramid is that it produces a multi-scale feature representation in which all levels are semantically strong, including the high-resolution levels.

对一个图像金字塔每一层进行特征化的主要优势是他能产生一个多尺度的特征表示,保证所有尺度的语义特征非常强,而且有很高的分辨率。

A deep ConvNet computes a feature hierarchy layer by layer, and with subsampling layers the feature hierarchy has an inherent multi-scale, pyramidal shape. This in-network feature hierarchy

produces feature maps of different spatial resolutions, but introduces large semantic gaps caused by different depths. The high-resolution maps have low-level features that harm their representational capacity for object recognition.

深度卷积网络计算特征的时候是逐层的,并且降采样层得到的特征图有其内部固有的多尺度金字塔的形态特征。这种网络内部的特征层级产生的特征图有不同的分辨率,但是由于不同的深度,所以会产生较大的语义跨度。高分辨率的特征图有低级别的特征,但是会对目标识别的表达能力有损害。

Ideally, the SSD-style pyramid would reuse the multi-scale feature maps from different layers computed in the forward pass and thus come free of cost. But to avoid using low-level features SSD foregoes reusing already computed layers and instead builds the pyramid starting from high up in the network (e.g., conv4 3 of VGG nets [36]) and then by adding several new layers. Thus it misses the opportunity to reuse the higher-resolution maps of the feature hierarchy. We show that these are important for detecting small objects.

SSD网络从前向传播计算得到的层中,重用了多尺度的特征,因此没有什么多余的计算损耗。

但是SSD网络没有把前面层计算的特征重新使用,而是直接从高层开始构建金字塔。因此错过了重新使用层次结构高分辨率特征图的机会,这对检测小目标很关键

介绍里面说的很啰嗦,揭示了一点网络深度与语义特征的关系,此外作者总结了一下SSD中对不同层次的特征度直接进行检测目标的缺点,这个很重要。

总结起来有如下几点,在网上找到这个图:

图片来源下面的连接,解释的也非常好,借鉴了

图片来源下面的连接,解释的也非常好,借鉴了

https://cloud.tencent.com/developer/article/1494983

总结一下,层数越深的特征图,分辨率越低,语义信息越丰富。层数越浅的特征图,分辨率越高,但是语义信息较差。上面文章还用了Unet(语义分割的模型)举例子。

- 对图像进行多尺度变换并提取特征会非常耗时(比如有一个卷积网络,对同一个图的四个不同尺度进行提取特征并训练,明显消耗四倍时间),传统图像算法中的高斯金字塔就是这个。对应a

- 图b就是faster rcnn用的,对小目标的检测效果比较差,原因上面说了,因为卷积核的降采样特性会降低分辨率,导致小目标不友好。对应b

- 这种特征金字塔有改善,SSD就是这么做的,但是SSD做的还不够绝=_= 比如提取的分辨率最高的特征是在conv4上。SSD作者使用conv4作为首个特征图,而不是把前面的conv层得到的特征度都使用了,原因很可能是上面总结中来看,应该就是浅层的特征图虽然分辨率高,但是语义信息少,不利于提取目标的信息,这也引出了fpn的思路。对应c

- 就是fpn的方法,把高层的特征图通过上采样与底层的特征度相融合,得到一个高分辨率且语义信息得到补充的特征图,更加方便检测不同尺度的目标。对应d

ps:猜测作者的思路应该是用SSD和语义分割的上采样得到的

There are recent methods exploiting lateral/skip connections that associate low-level feature maps across resolutions and semantic levels, including U-Net [31] and Sharp-Mask [28] for segmentation, Recombinator networks [17] for face detection, and Stacked Hourglass networks [26] for keypoint estimation.

作者研究了最近一些关于横向连接和跳跃连接(lateral / skip connections)的网络结构,得出其目的是将低层次的特征图与语义级别的特征关联起来,比如unet和hourglass这些耳熟能详的网络。也再次印证了作者思路的来源。

Feature Pyramid Networks

Our goal is to leverage a ConvNet’s pyramidal feature hierarchy, which has semantics from low to high levels, and build a feature pyramid with high-level semantics throughout.

我们的目标是平衡卷积网络金字塔特征的层次,使其具有从低级到高级的语义信息,并构建一个自始至终都有高级语义信息的特征金字塔。

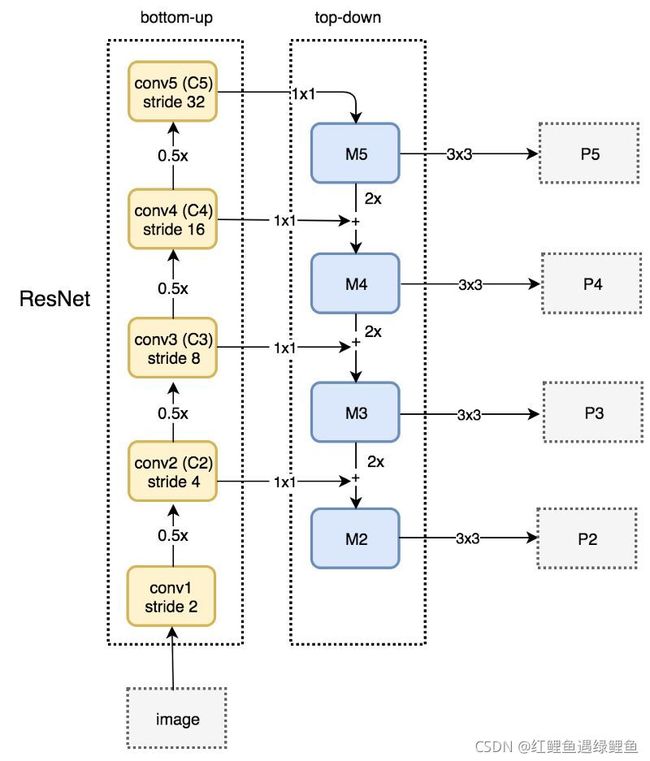

Our method takes a single-scale image of an arbitrary size as input, and outputs proportionally sized feature maps at multiple levels, in a fully convolutional fashion. This process is independent of the backbone convolutional architectures (e.g., [19, 36, 16]), and in this paper we present results using ResNets [16]. The construction of our pyramid involves a bottom-up pathway, a top-down pathway, and lateral connections, as introduced in the following.

作者的方法是使用一个单尺度的任意尺寸普通图像输入,然后使用全卷积输出多个层级的适当尺寸的特征图。该过程不影响backbone的卷积结构,此外本文使用ResNet来作为结果展示。作者的金字塔架构包含自底向上的通路、自顶向下的通路以及一个横向连接。

在网上经常能看到fpn和ResNet搅和在一起,不明所以。FPN+ResNet这种组合方式究竟是开发人员自己“组装”的还是FPN就是这个德行?从文中来看,FPN + ResNet这种组合方式是FPN文章作者自己拼的,属于“强强联合”吧。

bottom-up 通路

自底向上的通路就是卷积网络正向传播的过程,中间会产生一个包含多级特征图的特征层级。在ResNet上,作者使用每个stage的最后一个残差块(residual block)的激活输出,并把这些最后的残差快的输出定义为

{ C 2 , C 3 , C 4 , C 5 } \{C_2, C_3, C_4, C_5\} {C2,C3,C4,C5},分别是conv2, conv3, conv4 和conv5的输出,步长分别是{4, 8, 16, 32}。这里不用conv1,因为太占内存(large memory footprint)。

top-down 通路和横向连接

The top-down pathway hallucinates higher resolution features by upsampling spatially coarser, but semantically stronger, feature maps from higher pyramid levels. These features are then enhanced with features from the bottom-up pathway via lateral connections. Each lateral connection merges feature maps of the same spatial size from the bottom-up pathway and the top-down pathway. The bottom-up feature map is of lower-level semantics, but its activations are more accurately localized as it was subsampled fewer times.

top-down 的通路通过上采样可以产生更粗糙但是语义信息更强的高分辨率特征。将这些特征横向连接bottom-up的通路来进行增强。每个横向连接都合并来自bottom-up和top-down相同大小的特征图。bottom-up的特征图有较低语义信息,但是它的激活和定位信息更准确,因为它的降采样次数更少。

上面的图展示了如何将bottom-up与top-down进行横向连接。

上面的图展示了如何将bottom-up与top-down进行横向连接。

最顶层的特征图分辨率较低,使用上采样扩大两倍尺寸(使用最简单的最近邻上采样)。同时与对应的bottom-up的特征图进行element-wise相加(这里通过1×1卷积来降低channel)。

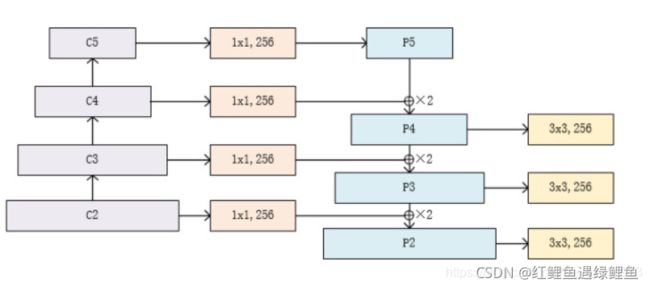

To start the iteration, we simply attach a 1×1 convolutional layer on C 5 to produce the coarsest resolution map. Finally, we append a 3×3 convolution on each merged map to generate the final feature map, which is to reduce the aliasing effect of upsampling. This final set of feature maps is called { P 2 , P 3 , P 4 , P 5 } \{P_2 , P_3 , P_4 , P_5 \} {P2,P3,P4,P5}, corresponding to { C 2 , C 3 , C 4 , C 5 } \{C_2 , C_3 , C_4 , C_5 \} {C2,C3,C4,C5}that are respectively of the same spatial sizes.

先对 C 5 C_5 C5使用1×1的卷积操作获取粗糙分辨率的特征图,然后将得到的结果与bottom-up的特征图合并,最后将合并的特征图使用3×3的卷积,消除上采样带来的混叠效应。最后得到的特征图记为 { P 2 , P 3 , P 4 , P 5 } \{P_2 , P_3 , P_4 , P_5 \} {P2,P3,P4,P5},与 { C 2 , C 3 , C 4 , C 5 } \{C_2 , C_3 , C_4 , C_5 \} {C2,C3,C4,C5}有相同的大小。

来个图,从网上看到别人总结的,感觉很不错。

来源

http://www.elecfans.com/d/724390.html

再次总结一下这个结构的原因

bottom-up结构可以逐级获取更高的更强的语义信息,越up的层级但是分辨率低,语义信息越强,对小目标忽略的也更多,越bottom的特征图分辨率越高,激活和定位信息更准确,因为降采样次数少;

top-down的操作是把bottom-up得到的特征图进行恢复为高分辨率,得到的粗糙的语义信息;

通过横向连接,将粗糙的语义信息与准确的定位信息进行融合,可以更好的获得小目标的定位。

这部分原理应该可以看看unet是怎么讲的

实验

如何把上面得到的特征图金字塔用到RPN上去获取多尺度的目标信息?

anchor 生成

原始的faster rcnn 是在最后一个特征图上,做多个尺度乘以3种不同长宽比的anchor生成。

在FPN种,在每一个尺度层定义了不同大小的anchor,作者在每一个金字塔层级应用了一种尺度乘以3种长宽比的anchor,对于P2,P3,P4,P5,P6这些层,定义anchor的大小为 { 3 2 2 , 6 4 2 , 12 8 2 , 25 6 2 , 51 2 2 } \{32^2, 64^2, 128^2, 256^2, 512^2 \} {322,642,1282,2562,5122},另外每个尺度的特征图都有3个长宽对比度:1:2,1:1,2:1。所以整个特征金字塔有15种anchor。

IOU计算

如果某个anchor和任意一个Ground truth的IOU都大于0.7,则是正样本。如果一个anchor和任意一个ground truth的IOU都小于0.3,则为负样本。

Note that scales of GT boxes are not explicitly used to assign them to the levels of the pyramid; instead, GT boxes are associated with anchors, which have been assigned to pyramid levels.

ground true框的尺度没有明确将他们赋予金字塔的层级;相反,ground true是和已经分配的给金字塔层级的anchor相关联的。

RPN设置

We note that the parameters of the heads are shared across all feature pyramid levels; we have also evaluated the alternative without sharing parameters and observed similar accuracy. The good performance of sharing parameters indicates that all levels of our pyramid share similar semantic levels. This advantage is analogous to that of using a featurized image pyramid, where a common head classifier can be applied to features computed at any image scale.

作者注意到头部的参数在所有金字塔层级中共享,作者评估了没有使用参数共享并观察准确率。参数共享的性能很好的原因在于所有金字塔层级都分享了相似的语义特征。

这个优点类似于特征金字塔,可以将常见的头部分类器应用于任何图像尺度下的计算特征。

上面提到了两个头部,第一个头部是指的bottom-up结构中的最后一层conv得到的特征图,第二个head是指后面的全连接。

代码

借用下面连接中的图和代码

https://blog.csdn.net/qq_41251963/article/details/109398699

import torch.nn as nn

import torch.nn.functional as F

import math

#ResNet的基本Bottleneck类

class Bottleneck(nn.Module):

expansion=4#通道倍增数

def __init__(self,in_planes,planes,stride=1,downsample=None):

super(Bottleneck,self).__init__()

self.bottleneck=nn.Sequential(

nn.Conv2d(in_planes,planes,1,bias=False),

nn.BatchNorm2d(planes),

nn.ReLU(inplace=True),

nn.Conv2d(planes,planes,3,stride,1,bias=False),

nn.BatchNorm2d(planes),

nn.ReLU(inplace=True),

nn.Conv2d(planes,self.expansion*planes,1,bias=False),

nn.BatchNorm2d(self.expansion*planes),

)

self.relu=nn.ReLU(inplace=True)

self.downsample=downsample

def forward(self,x):

identity=x

out=self.bottleneck(x)

if self.expansion is not None:

identity=self.downsample(x)

out+=identity

out=self.relu(out)

return out

#FNP的类,初始化需要一个list,代表RESNET的每一个阶段的Bottleneck的数量

class FPN(nn.Module):

def __init__(self,layers):

super(FPN,self).__init__()

self.inplanes=64

#处理输入的C1模块(C1代表了RestNet的前几个卷积与池化层)

self.conv1=nn.Conv2d(3,64,7,2,3,bias=False)

self.bn1=nn.BatchNorm2d(64)

self.relu=nn.ReLU(inplace=True)

self.maxpool=nn.MaxPool2d(3,2,1)

#搭建自下而上的C2,C3,C4,C5

self.layer1=self._make_layer(64,layers[0])

self.layer2=self._make_layer(128,layers[1],2)

self.layer3=self._make_layer(256,layers[2],2)

self.layer4=self._make_layer(512,layers[3],2)

#对C5减少通道数,得到P5

self.toplayer=nn.Conv2d(2048,256,1,1,0)

#3x3卷积融合特征

self.smooth1=nn.Conv2d(256,256,3,1,1)

self.smooth2=nn.Conv2d(256,256,3,1,1)

self.smooth3=nn.Conv2d(256,256,3,1,1)

#横向连接,保证通道数相同

self.latlayer1=nn.Conv2d(1024,256,1,1,0)

self.latlayer2=nn.Conv2d(512,256,1,1,0)

self.latlayer3=nn.Conv2d(256,256,1,1,0)

def _make_layer(self,planes,blocks,stride=1):

downsample=None

if stride!=1 or self.inplanes != Bottleneck.expansion*planes:

downsample=nn.Sequential(

nn.Conv2d(self.inplanes,Bottleneck.expansion*planes,1,stride,bias=False),

nn.BatchNorm2d(Bottleneck.expansion*planes)

)

layers=[]

layers.append(Bottleneck(self.inplanes,planes,stride,downsample))

self.inplanes=planes*Bottleneck.expansion

for i in range(1,blocks):

layers.append(Bottleneck(self.inplanes,planes))

return nn.Sequential(*layers)

#自上而下的采样模块

def _upsample_add(self,x,y):

_,_,H,W=y.shape

return F.upsample(x,size=(H,W),mode='bilinear')+y

def forward(self,x):

#自下而上 bottom-up

c1=self.maxpool(self.relu(self.bn1(self.conv1(x))))

c2=self.layer1(c1)

c3=self.layer2(c2)

c4=self.layer3(c3)

c5=self.layer4(c4)

#自上而下 top-down

p5=self.toplayer(c5)

p4=self._upsample_add(p5,self.latlayer1(c4))

p3=self._upsample_add(p4,self.latlayer2(c3))

p2=self._upsample_add(p3,self.latlayer3(c2))

#卷积的融合,平滑处理

p4=self.smooth1(p4)

p3=self.smooth2(p3)

p2=self.smooth3(p2)

return p2,p3,p4,p5